Artificial Intelligence Playbook for the UK Government (HTML)

Published 10 February 2025

Acknowledgements

The publication of this playbook has been made possible by the support from a large number of stakeholders.

Central government departments

Crown Commercial Service (CCS); Cabinet Office (CO), including the Number 10 Data Science (No.10 DS) and i.AI teams; Department for Business and Trade (DBT); Department for Education (DfE); Department for Environment, Food and Rural Affairs (DEFRA); Department for Energy Security and Net Zero (DESNZ); Department of Health and Social Care (DHSC); Department for Levelling Up, Housing and Communities (DLUHC); Department for Science, Innovation and Technology (DSIT), including the Government Office for Science (GO-Science) and the Responsible Technology Adoption Unit (RTA); Department for Transport (DfT); Department for Work and Pensions (DWP); Foreign, Commonwealth and Development Office (FCDO), including FCDO Services; Government Legal Department (GLD); HM Land Registry (HMLR); HM Revenue and Customs (HMRC); HM Treasury (HMT); Home Office (HO); Ministry of Defence (MoD); and Ministry of Justice (MoJ).

Arm’s length bodies, devolved administrations and public sector bodies

Driver and Vehicle Licensing Agency (DVLA); Government Communications Headquarters (GCHQ); Government Internal Audit Agency (GIAA); HM Courts and Tribunals Service (HMCTS); Information Commissioner’s Office (ICO); Met Office; National Health Service (NHS); Office for National Statistics (ONS); and the Scottish Government.

Industry

Amazon (AWS), Google, IBM and Microsoft.

Academic institutes

Alan Turing Institute; BCS, The Chartered Institute for IT; Oxford Internet Institute; Manchester Metropolitan University; and the University of Surrey.

User research

User research participants have come from a wide range of departments and have been very generous with their time.

Update log

We have made the following core updates since the previous edition:

- we have reviewed and updated the 10 principles and the guidance within the Generative AI Framework to cater for a wider range of AI technologies beyond generative AI

- we have added new sections on topics such as What is AI?, Fields of AI (including a new diagram), User research for AI, AI business cases, Societal wellbeing and public good, Security opportunities, Governance structures for teams, AI quality assurance and Managing risk. We have also included an Appendix with case studies on recent AI projects developed by public sector organisations

- we have reorganised and expanded the Working collaboratively, Using AI safely and responsibly and Ethics sections, to better articulate the importance of engaging with academia, industry and the broader civil society

- we have moved guidance and training on building AI solutions into separate AI insights articles and e-learning courses to allow for a more in-depth discussion

Foreword

I am pleased to introduce the AI Playbook for the UK Government, which updates and expands on the Generative AI Framework for HMG. This updated guidance will help government departments and public sector organisations harness the power of a wider range of AI technologies safely, effectively, and responsibly.

In January 2025 the government published the AI Opportunities Action Plan laying out a bold roadmap for maximising AI’s potential to drive growth and deliver real benefits to people across the UK.

The publication of the AI Playbook highlights the competence and extraordinary work already being done in the AI space across the public sector. Developed collaboratively, with input from many government departments, public sector institutions, academia, and industry, this guidance reflects our commitment to continuously engaging with and learning from wider civil society.

The AI Playbook will support the public sector in better understanding what AI can and cannot do, and how to mitigate the risks it brings. It will help ensure that AI technologies are deployed in responsible and beneficial ways, safeguarding the security, wellbeing, and trust of the public we serve.

The potential of AI to transform public services is enormous, giving us an unparalleled opportunity to do things differently and deliver more with less. AI is already helping civil servants spend less time on repetitive tasks, enabling teachers to personalise lessons, and can allow doctors to access life-saving insights faster, through AI-assisted diagnostics.

However, our journey with AI is just beginning. The AI Playbook is a launchpad that we will continuously revise and improve to help the UK public sector become a leading responsible user of AI technologies. As technology evolves, so too will our approach, ensuring we remain at the forefront of responsible innovation - always guided by the principle that technology must serve people.

I want to extend my sincere thanks to everyone who contributed their expertise to this AI Playbook, both within and beyond government. I look forward to ongoing collaboration as we continue to learn how to use AI safely, responsibly, and effectively to deliver solutions that are smarter, faster, and more responsive to the collective needs of our society.

Feryal Clark MP

Parliamentary Under-Secretary of State for AI and Digital Government

Department for Science, Innovation and Technology (DSIT)

Preface

When we published the Generative AI Framework for HMG in January 2024, we described it as ‘incomplete’ and ‘dynamic’. We didn’t pretend to have all of the answers in such a fast-moving field, but did aim to provide helpful, practical guidance to public servants on how to put generative AI to work confidently, responsibly and where it matters most.

The pace of change since we published that first version has not slowed, and interest in generative AI and other forms of AI has grown. The UK government continues to believe that AI has the power to drive productivity, innovation and economic growth. In 2021, the National AI Strategy set out a 10-year vision for AI, while the 2023 white paper A pro-innovation approach to AI regulation set out the government’s proposals for implementing a proportionate, future-proof and pro-innovation framework for regulating AI. In 2025, the AI Opportunities Action Plan highlighted how the government can leverage AI to boost productivity and improve services.

This updated version of the framework has been expanded to cover new developments, and we’ve retitled it to encompass all current forms of AI. As well as a general overview, it includes primers on various AI fields for the curious, and links to learning resources for those who want to dive deeper. The AI Playbook now covers the important and emerging discipline of conducting research with the users of AI systems. It addresses emerging cyber threats to those systems, and the ways that attackers are using AI to create new threats.

I would like to echo Minister Clark’s thanks to everyone who has contributed to this playbook. It has been a collective effort of experts from government departments, arm’s length bodies, other public sector organisations, academic institutions and industry partners. As we continue to advance together in the safe, responsible, and effective use of AI, I look forward to even broader collaboration and further contributions from an expanding community.

David Knott

Government Chief Technology Officer

Department for Science, Innovation and Technology (DSIT)

Principles

We have defined 10 common principles to guide the safe, responsible and effective use of artificial intelligence (AI) in government organisations. The white paper A pro-innovation approach to AI regulation sets out 5 principles for regulators to inform AI development in all sectors. This playbook builds on those principles and defines 10 core principles for AI use in government and public sector organisations.

- Principle 1: You know what AI is and what its limitations are

- Principle 2: You use AI lawfully, ethically and responsibly

- Principle 3: You know how to use AI securely

- Principle 4: You have meaningful human control at the right stage

- Principle 5: You understand how to manage the AI life cycle

- Principle 6: You use the right tool for the job

- Principle 7: You are open and collaborative

- Principle 8: You work with commercial colleagues from the start

- Principle 9: You have the skills and expertise needed to implement and use AI

- Principle 10: You use these principles alongside your organisation’s policies and have the right assurance in place

You can find posters on each of the 10 principles for you to display in your government organisation.

Principle 1: You know what AI is and what its limitations are

AI is a broad field subject to rapid research and innovation, and many claims have been made about both its promise and risks. You should learn about AI technology to understand what it can and cannot do, and the potential risks it poses.

AI systems currently lack reasoning and contextual awareness and their limitations vary depending on the tools you use and the context in which they operate. AI systems are also not guaranteed to be accurate. You should understand how to use AI tools safely and responsibly, employ techniques to increase the accuracy and correctness of their outputs, and have a process in place to test them.

You can find more about what AI is and its capabilities in the Understanding AI section.

Principle 2: You use AI lawfully, ethically and responsibly

AI solutions bring specific legal and ethical considerations. Your use of AI tools must be lawful and responsible. You should seek legal advice on the development and use of AI and engage with compliance, legal and data protection experts in your organisation early in your journey, including during product development. You may, for example, seek advice on equalities implications, fairness, intellectual property and other legal issues.

You should seek data protection advice on your use of AI. This may be from your lawyers or your data protection officer. AI systems can process personal data, so you need to consider how you protect this personal data, be compliant with data protection legislation, and minimise the risk of privacy intrusion from the outset.

You should establish and communicate how you will address ethical concerns throughout your project, from design to deployment, so that diverse and inclusive participation is built into the project life cycle. You should also consider the ethical principles presented in this playbook, and establish robust measures to suit your technological and deployment context.

AI models are trained on data which may include biased or harmful materials. As a result, AI systems may display biases and produce harmful outputs, such as unfair, prejudicial or derogatory representations of groups or individuals. You should consider all potential sources of bias throughout the development life cycle, including unrepresentative data sets and deployment scenarios that have unfair or undesirable impacts.

You must ensure that AI systems generate a positive impact on stakeholders and civil society at large while minimising potential harms as much as possible. When defining and deploying AI systems, you must understand people’s needs and priorities by conducting user research and engaging with the public as appropriate, including civil society groups, underrepresented individuals, those most likely to experience harm, NGOs, academia and industry.

You should understand and manage the environmental impact of the AI systems you are planning to use. You should also use AI technologies only when relevant, appropriate and proportionate. Choose the most suitable and sustainable option for your organisation’s needs.

You can find out more in the Using AI safely and responsibly section.

Principle 3: You know how to use AI securely

When building and deploying AI services, you must make sure that they are secure to use and resilient to cyber attacks, as laid out in the Government Cyber Security Strategy. Your service must comply with the Secure by Design principles, which were developed by the Central Digital and Data Office (CDDO), and the government’s Cyber Security Standard.

Different types of AI are susceptible to different security risks. Some threats – such as data poisoning, perturbation attacks, prompt injections and hallucinations – are specific to AI. However, AI systems can also amplify generic risks such as phishing and cyber attacks. You must understand the risks associated with your use of AI and of adversaries potentially using AI against you.

To minimise these risks you should build in safeguards and put technical controls in place. These include security testing and, in the case of generative AI, content filtering to detect malicious activity, as well as validation checks to ensure responses are accurate and do not leak data.

You can find out more in the Security and Data protection and privacy sections.

Principle 4: You have meaningful human control at the right stages

You need to monitor the AI’s behaviour and have plans in place to prevent any harmful effects on users. This includes ensuring that humans validate any high-risk decisions influenced by AI and that you have strategies for meaningful intervention.

For applications where instant responses are required and human review is not possible in real time, such as chatbots, it’s important that you ensure human control at other stages of the AI’s development and deployment.

You should fully test the product before deployment, and have robust assurance and regular checks of the live tool in place. Since AI models can sometimes produce unwanted or inaccurate results, incorporating feedback from users is crucial. You should have systems in place that allow users to report issues and prompt a human review.

You can find out more in the Human oversight section.

Principle 5: You understand how to manage the full AI life cycle

AI solutions, like other technology deployments, have a full product life cycle that you need to understand. You should know how to choose the right tool for the job, be able to set it up and have the right resource in place to support day-to-day maintenance of it. You should also know how to update the system and how to securely close it down at the end of its useful life.

You should understand how to monitor and mitigate for potential drift, bias, and, in the case of generative AI, hallucinations. You should also have a robust testing and monitoring process in place to catch these problems. You should use the Technology code of practice to build a clear understanding of technology deployment life cycles, and understand and use the NCSC cloud security principles.

You should understand the benefits, use cases and other applications that your solution could support across government and the wider public sector. The Rose Book provides guidance on government-wide knowledge assets and The Government Office for Technology Transfer can provide support and funding to help develop government-wide solutions. If you develop a service, you must use the government Service Standard.

You can find out more about development best practices for AI in the Building AI solutions section.

Principle 6: You use the right tool for the job

You should select the most appropriate technology to meet your needs. AI is good at many tasks, but there are a wide range of models and products. You should be open to solutions involving AI because they can allow organisations to develop new or faster approaches to the delivery of public services, can provide a springboard for more creative and innovative thinking about policy and public sector problems, and help your team with time-consuming tasks. However, you should also be open to the conclusion that, sometimes, AI is not the best solution for your problem: it may be more easily solved with more established technologies.

When implementing AI solutions, you should select the most appropriate deployment patterns and choose the most suitable model for your use case. You can learn about how to choose the right technologies for your task or project in the Identifying use cases for AI and Use cases to avoid sections.

Principle 7: You are open and collaborative

There are many teams across government and the wider public sector using or exploring AI tools in their work. You should make use of existing cross-government communities where there is a space to solve problems collaboratively, such as the AI community of practice. You should also engage with other government departments that are trying to address similar issues and reuse ideas, code and infrastructure.

Where possible, you should engage with the wider civil society including groups, communities, and non-governmental, academic and public representative organisations that have an interest in your project. Collaborating with people both inside and outside government will help you ensure we use AI to deliver tangible benefits to individuals and society as a whole. Make sure you have a clear plan for engaging and communicating with these stakeholders at the start of your work.

You should be open with the public about where and how algorithms and AI systems are being used in official duties. If you’re a central government department or an arm’s length body within scope, you’re required to use the Algorithmic Transparency Recording Standard (ATRS). This means you must document information about any algorithmic tools you use in decision-making processes and make it clearly accessible to the public. The ATRS is not a requirement for all arm’s length bodies and other public sector institutions yet, but we still encourage you to use it. You should also clearly identify any automated response to the public. For example, a response generated via a chatbot interface should include something like ‘this response has been written by an automated AI chatbot’.

You can find out more in the Ethics section.

Principle 8: You work with commercial colleagues from the start

AI is a rapidly developing market, and you should get specific advice from commercial colleagues on the implications for your project. Reach out to them early in your journey to understand how to use AI in line with commercial requirements.

You should also work with commercial colleagues to ensure that the expectations around the responsible and ethical use of AI are the same between AI systems developed in-house and those procured from a third party. For example, contracts between the public sector and third parties can be drafted to require transparency from the supplier on the different information categories, as set out in the ATRS. You can find out more in the Buying AI section.

Principle 9: You have the skills and expertise needed to implement and use AI solutions

You should understand the technical and ethical requirements for using AI tools and have them in place within your team.

You and your team should gain the skills needed to use, design, build and maintain AI solutions, keeping in mind that developing bespoke AI solutions and training your own models require different specialist skills to using pre-trained models accessible through application programming interfaces (APIs).

Decision makers, policy professionals and senior responsible owners (SROs) should gain the skills they need to understand the risks and opportunities of AI, including its potential impact on organisational culture, governance, ethics and strategy.

You should take the free AI courses on Civil Service Learning and proactively keep track of developments in the field. You can find out more in our Acquiring skills and talent section.

Principle 10: You use these principles alongside your organisation’s policies and have the right assurance in place

These principles and this playbook set out a consistent approach for the UK government to use AI tools. While you should use these principles when working with AI, many government organisations have their own governance structures and policies in place. You should follow any organisation-specific policies, especially ones about security and data handling.

You should understand, monitor and mitigate the risks that using AI tools can bring. Connect with the right assurance teams in your organisation early in the project life cycle for your AI solutions. You should have clearly documented review and escalation processes in place, and have an AI review board or programme-level board.

You can find out more in the Governance section.

Understanding AI

This section explains what AI is, what its main fields are, the applications of AI and generative AI in government and their limitations. It supports Principle 1: You know what AI is and what its limitations are.

What is AI?

AI is not new. The term ‘artificial intelligence’ was coined in 1956 during the Dartmouth workshop, a gathering of scientists intent on exploring the potential of computing to emulate human reasoning. Since then, there have been recurring waves of progress and excitement, followed by periods of waning interest and investment referred to as ‘AI winters’. We use the definition of AI adopted by OECD countries:

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

The UK government’s paper Establishing a pro-innovation approach to regulating AI suggests that these systems are ‘adaptable’ because they can find new ways to meet the objectives set by humans, and ‘autonomous’ because, once programmed, they can operate with varying levels of autonomy, including without human control.

Fields of AI

AI comprises a complex and evolving set of fields. These include a broad, interconnected range of algorithms, models and processes. Advances in one area typically propagate throughout these networks of technologies, conferring novel behaviours and increases in accuracy and capability to ancestor models.

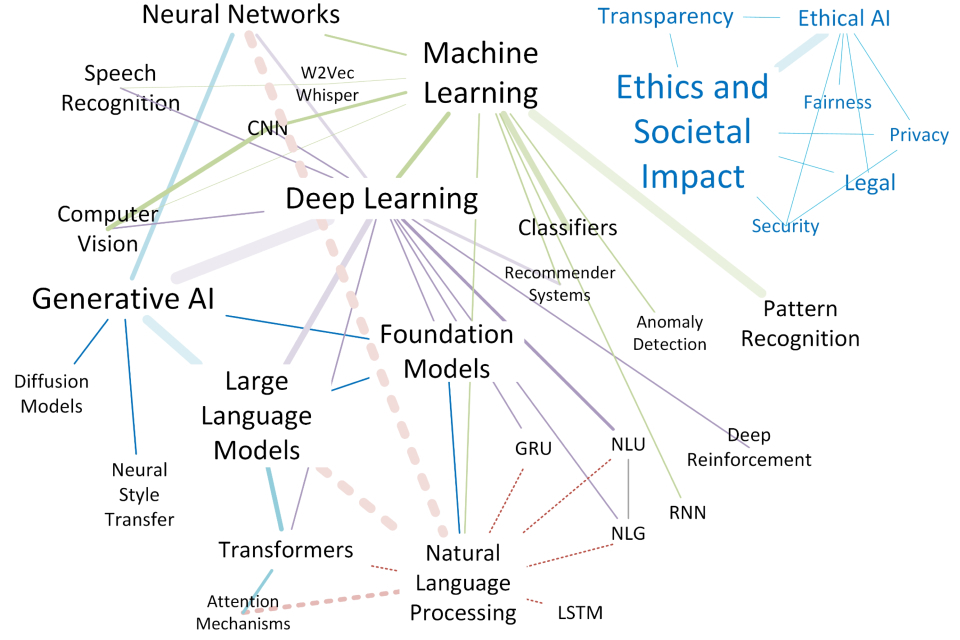

The following diagram illustrates the interdependencies between some existing and emerging fields of AI. As you can see, capturing the evolving complexity of AI is a challenging task.

The complexity of the AI space continues to increase: as AI technologies evolve they often branch out, advancing some research areas while leaving others unchanged. For example, in the field of computer vision (CV) some legacy systems still thrive today due to the simplicity of their behaviours, their low computational and memory requirements, and their stability and well-proven performance. These legacy CV systems fall within the domain of machine learning (ML). However, more recent and capable CV systems, such as those used in self-driving or medical imaging systems, are considerably more complex and belong to CV as part of deep learning (DL).

As software and algorithms are advancing, so too is hardware infrastructure. Traditional computer processing units (CPUs) can struggle with the large amounts of data and calculations AI requires. New types of hardware have been implemented to train and run AI models faster and more efficiently. For example, the largest modern AI systems (ranging in the hundreds of billions to trillions of parameters) are trained on networks of thousands of graphics processing units (GPUs), while Tensor Processing Units (TPUs) and Neural Processing Units (NPUs) are increasingly used to optimise ML training.

Below we provide an overview of the main fields of AI. For a deeper understanding of these fields you can:

- take the free e-learning courses available on Civil Service Learning

- use the other training opportunities mentioned in the Acquiring skills and talent section

- refer to the AI insights articles

Neural networks

Neural networks (NNs), or artificial neural networks (ANNs), are a computational model inspired by biological NNs in the human brain. They were initially created as an aspect of ML in the 1940s but they may now be considered as components of more elaborate DL systems.

How do NNs work?

An NN learns through exposure to data in training cycles. During these cycles, the model gradually adjusts the connections between different parts of the network, setting the weights between nodes of adjacent layers. When the model makes an error, the network calculates it as data and checks how far off it was. The error is then adjusted backwards through the network by updating the weights by a very small amount (the learning rate) in the direction that will serve to minimise that error. Repeated executions of this process during the training phase tune the network so that it can generalise faithfully on related data it has never seen before.

Applications of NNs

NNs have a wide range of applications due to their ability to recognise patterns, process new data, make predictions and improve over time. NNs are often deployed in image recognition applications, medical imaging, speech recognition models, autonomous systems, and in many other examples.

Machine learning

ML is the branch of AI that learns from data. It does this by extracting features from data and learning the relationships between those features. Anywhere there is data, there is an opportunity to learn from it. ML can provide the public sector with unique and quantifiable insights into data that were previously impossible, expensive, or of limited or short-term utility.

How does ML work?

ML uses algorithms to analyse data, learn patterns, and then make predictions or decisions based on new data. To work effectively, ML systems must be trained using carefully selected information. This training helps create an optimised model that identifies pertinent features in the data, and weights their relationships to understand how they relate to each other.

ML systems can be trained using either ‘labelled’ or ‘unlabelled’ data, or a combination of both. Labelled data is data that has been tagged or categorised in advance. This form of training is usually called ‘supervised learning’ because the model is trained under the supervision of labelled data. Each example in the training data set comes with a correct answer, or label, from which the model is supposed to learn. When unlabelled data is used, the model is programmed to identify patterns, groupings or structures on its own. This is often referred to as ‘unsupervised learning’.

Applications of ML

The ability of ML models to learn from data, identify changes, and update based on changes in underlying data enables a host of capabilities that would simply not be possible through traditional linear, deterministic systems. ML models can be used as elements of much larger DL models, or as data processing participants in a sequence of operations, and they underpin many applications including fraud detection, feedback analysis, image processing, summarisation, and more. Modern large language models (LLMs) are also examples of ML systems.

Deep learning

DL is a subset of ML that involves more complex model structures and architectures, including sophisticated NNs, to learn complex patterns and representations from large amounts of data. DL has complemented and expanded upon ML, but ML remains the first-choice candidate for simpler tasks and can be easier to implement with less data and lower computational power. DL, while more resource-intensive, excels in handling complex tasks and larger data sets.

How does DL work?

DL models initially detect simple features – edges in an image, for example – and gradually combine them to recognise more complex patterns, such as identifying a face or understanding speech.

Applications of DL

DL is used in advanced applications like biomedical research and autonomous driving, but also in image and speech recognition (SR), natural language processing (NLP), personalised recommendations, and more.

Speech recognition

SR, sometimes referred to as automatic speech recognition (ASR), is a field of ML dedicated to processing speech. SR includes both systems that convert speech into text (STT) and new speech-to-speech (S2S) systems.

How does SR work?

Computers only understand numbers, so the challenge with SR is to turn spoken words into numbers while keeping their meaning and context. To do so, SR models first convert speech into numeric representations called spectrograms, which show sound frequencies over time. DL models then analyse these spectrograms to identify sounds, words or sentences. In STT, this process results in written text. In S2S, the recognised words are further processed by another model that translates the text into a different language, which is then turned back into spoken words.

Applications of SR

SR has expanded considerably over recent years, especially in terms of voice recognition. This technology is now used in banking, personal agents like Siri and Alexa, and general SR functionality built into phones and cars, among other things. Modern SR applications include call analytics, emergency services, meeting summarisation and media subtitling, and more.

Computer vision

CV is a field of AI and ML that enables computers to interpret, analyse and understand visual information (images and videos) to perform tasks such as object recognition, facial recognition and scene understanding. Although CV has been researched since the 1960s, it has progressed considerably in recent decades due to a combination of developments in model architecture, hardware performance, and data quality and volumes.

How does CV work?

CV systems are intended to make image data comprehensible and interpretable so that it can be consumed and evaluated appropriately. These evaluations most commonly include classification, object detection, video analysis and image segmentation.

To perform these tasks, CV uses algorithms to break down images into pixels and process the pixels to detect edges, shapes and colours. The system then uses this information to recognise objects, people or scenes – similarly to how our brains process visual information – allowing computers to identify the content of images.

Applications of CV

CV has many applications in fields such as facial recognition, quality control, healthcare and medical imaging, surveillance, robotics, and more.

Natural language processing

NLP is a field within AI that focuses on processing human language. NLP combines computational linguistics and machine learning to analyse large amounts of natural language data and comprehend, interpret, translate, and generate language and language-related data. LLMs, which are in widespread use, are a subset of NLP, with NLP preceding them by several decades.

How does NLP work?

NLP uses algorithms to convert text into numerical representations that are then processed by an ML model. This process involves a series of steps including noise reduction, tokenisation, stop-words removal, stemming or lemmatisation, vectorisation, and embeddings. The model uses the weights learned during training to adjust the importance of different features in the input data, and considers various elements, including the order and context of words, to understand the overall meaning of the text and produce the required outputs.

Applications of NLP

NLP is used for a wide variety of applications and tasks related to language, including machine translation, document classification, sentiment analysis, parts-of-speech tagging, named-entity recognition, text summarisation and conversational AI.

For government departments, NLP can play an important role in managing and processing large volumes of human language data from sources like emails, letters and online forms, enabling efficient and meaningful analysis.

Generative AI

Generative AI is a subset of AI capable of generating text, images, video or other forms of output by using probabilistic models trained across various domains. Generative AI learns from large amounts of specially curated training data to discern and replicate complex patterns and structures. The output of generative AI models mimics the characteristics learned from the training data, enabling a range of novel applications. These include personalised content generation, advanced analysis and evaluation, and aiding creative processes.

Examples of publicly accessible generative AI tools are ChatGPT, Claude, Gemini and Dall-E. Generative AI is also becoming increasingly integrated into mainstream products. Examples include the Adobe Photoshop Generative Fill tool, AWS ChatOps Chatbot, Microsoft 365 Copilot and Google Duet AI.

How does generative AI work?

Generative AI uses large quantities of carefully selected data to train models so they may learn the underlying patterns and structure of data. A well-known example of generative AI is LLMs, which are large neural networks specifically trained on text and natural language data to generate high quality, text-based outputs. However, there are many other generative AI models that are not purely text based.

Once trained, generative AI models are capable of generating new content consistent with the features and relationships learned from the training data. When a user provides a prompt or input to an LLM, the model evaluates the probability of potential responses based on what it has learned from its training data. It then selects and presents the response that is likeliest to be the right fit for the given prompt.

Applications of generative AI

The applications of generative AI go beyond the simple generation of new text or images. For example, generative AI is used in medicine to test molecular structures for drug discovery, and in the financial sector to generate multimodal data surfaces approximating trading and market conditions in order to test safety, security, trading and trade surveillance models. Generative Adversarial Networks (GANs) are also used for creating synthetic data sets to train ML models, as well as in other areas such as deepfake detection, self-driving model ensembles, and text-to-image synthesis. You can explore more applications of generative AI in the Applications of AI in government section.

For more on how to build, fine-tune and use generative AI solutions, refer to our series of AI insights.

Agentic AI

Agentic AI refers to autonomous AI systems that can make decisions and perform actions with minimal human intervention. These agents are capable of understanding their environment, identifying the set of tools and functions at their disposal, and using those to take actions to achieve their objectives.

Agent-based systems use Foundation Models (primarily LLMs) to match their capabilities with their objectives. For example, an order processing system may have multiple agents autonomously capturing pricing and market-related data. When a request for an order is raised, the agentic system may utilise those prices or, seeing that they haven’t been updated recently, may use another agent to retrieve the latest price available.This all happens automatically in the background because the system knows which agents can perform each task.

These systems provide a simpler way to build powerful AI-driven solutions by focusing on the capabilities of each agent and letting the AI model itself figure out the best way to achieve the system’s objectives. The high level of abstraction involved in this technology makes it easier to create more efficient and effective systems that take full advantage of AI.

Ethics and societal impact

As research developments have increased the presence of AI in the public sector and everyday life, ethical and societal considerations remain essential to the adoption and use of AI.

Applications of AI in government offer important benefits, such as improving productivity and enhancing access to services. However, AI systems also have limitations and can have two-sided impacts if not used in responsible ways and with the appropriate safeguards in place.

You can read more on the legal, ethical and security implications of AI in the Using AI safely and responsibly section.

Applications of AI in government

AI has a broad range of capabilities and has been relevant to the work of government for many years. The ability of generative AI to process language and produce new text, images and code has further enhanced the potential applications of AI technology.

You can find an overview of how government bodies are using AI – including the central government’s plans for supporting the adoption of AI – in the report on the Use of artificial intelligence in government (2024) by the National Audit Office (NAO).

The following table presents some examples of potential applications of both AI and generative AI technologies in government.

| Application | AI | Generative AI |

| Speed up the delivery of services | Machine learning (ML) and optical character recognition (OCR) algorithms can support the processing of thousands of handwritten letters per day, reducing response times. | Can retrieve relevant organisational information faster to answer digital queries or route email correspondence to the right parts of the business. |

| Reduce staff workload | AI technologies for facial recognition and data analytics allow for the automatic control of passports in airports, freeing staff from this task. | Can suggest first drafts of routine email responses or computer code, acting as an autocomplete tool for algorithms. |

| Perform complicated tasks | ML can analyse very large data sets, identify trends and anomalies in complex historical data, and support data-driven decision making. | Can help review and summarise huge amounts of information, as well as identify and correct errors in long algorithms. |

| Perform specialist tasks more cost-effectively | Predictive analytics can identify future needs for resources, optimising budget allocations and planning. ML can detect fraudulent activities in different fields, preventing financial losses and protecting against cyber threats. | Can summarise documentation containing specialist language like financial or legal terms, or translate a document into several different languages. |

| Improve the quality of services | Recommender systems can help users navigate government web pages and find the information they need. AI can also analyse thousands of feedback messages and suggest service improvements. | Can improve the readability and accessibility of information on web pages. For example, by simplifying complex language, improving formatting and generating alternative text for images. |

The Identifying use cases for AI section can help you select appropriate applications of AI tools. For real-life examples of some of these applications, refer to the AI use cases in the public sector appendix.

However, AI systems still have limitations. You should make sure that you understand these and build appropriate testing and controls into any AI solutions.

Limitations of AI

The capabilities of AI are improving over time. However, they do not provide the answer to every problem. Some of the limitations of AI systems are:

- bias: AI systems lack consciousness and their outputs tend to replicate the bias present in the data they were trained on. For example, their performance can be affected by model bias – an innate deviation in the model giving rise to an error between predicted and actual values – and algorithmic bias – which refers to systematic inequality in outcome from a model. For more information, refer to the Review into bias in algorithmic decision-making (PDF, 9.9MB) and the Introduction to AI assurance (PDF, 1,149KB) guidance. There is more about the ethical implications of bias in the Fairness, bias and discrimination section

- data quantity and quality: AI systems heavily rely on the quality and quantity of data to drive accuracy. Insufficient data can lead to the model failing to generalise effectively, and, similarly, poor quality, biased or noisy data can lead to inaccurate outcomes if not managed correctly

- accuracy: it’s difficult to produce an AI system which provides 100% accurate outputs under all conditions. You must be clear about what objective measures you’re assessing the AI outputs against and any factors that impact them

- transparency and explainability: some AI models – for example, deep learning architectures and convolutional or recurrent neural networks – can be so sophisticated that it is challenging to trace how a specific input leads to a specific output

- cost and sustainability: depending on the tool you use, AI implementation can be complex, time consuming and have considerable compute costs. Ongoing investment is needed to maintain these systems, update them with new data if required, and ensure their performance remains stable over time. It’s important to consider the sustainability impact of deploying AI systems and check if there are better alternatives

Generative AI also has further specific limitations. You need to be aware of these limitations and have checks and assurance in place when using generative AI in your organisation.

- hallucination (confabulation): large language models (LLMs) and other probabilistic generative models are vulnerable to creating content that appears plausible but may actually be factually incorrect, as they generate content by returning the most likely output for a given input based on the patterns learnt from the data they were trained on, without an actual understanding of the content

- lack of critical thinking, personal experience and judgement: although some LLM-based AI systems can give the appearance of reasoning and their outputs may appear as if they come from a person, they are in no way sentient

- sensitive or inappropriate context: LLMs can generate offensive or inappropriate content if not properly guided, since they can replicate any bias or toxic material present in the data they were trained on

-

domain expertise: LLMs are not domain experts. They are not a substitute for professional advice, especially in legal, medical or other critical areas where precise and contextually relevant information is essential

- dynamic real-time information retrieval: some well-known LLMs such as ChatGPT, Gemini and Copilot are now able to include access to real-time internet data in their results. But there are still many LLMs that do not have real-time access to the internet or data outside their training set

Building AI solutions

This section outlines the practical steps you’ll need to take when building AI solutions, including defining the goal, building the team, managing business change, buying AI and building the solution. It supports:

- Principle 1: You know what AI is and what its limitations are

- Principle 3: You know how to use AI securely

- Principle 4: You have meaningful human control at the right stage

- Principle 5: You understand how to manage the AI life cycle

- Principle 6: You use the right tool for the job

- Principle 8: You work with commercial colleagues from the start

- Principle 9: You have the skills and expertise needed to implement and use AI

- Principle 10: You use these principles alongside your organisation’s policies and have the right assurance in place

However, following the guidance in this section is only part of what is needed to build AI solutions. You also need to make sure that you’re using AI safely and responsibly.

Building the team

Like any other digital service, developing AI projects requires more than just technology. You will need to work collaboratively in a multidisciplinary team with diverse expertise.

The government Service Manual offers step-by-step guidance on how to set up a service team for the development and management of digital services. At the outset of your AI project, you need to form a service team and identify key collaborations with other teams and departments.

Your minimum viable AI team must be able to:

- identify user needs and accessibility requirements

- manage and report to stakeholders and other teams, collaborating with different field experts

- design, build, test and iterate AI products, using agile methodologies

- ensure the responsible development of lawful, ethical, secure and safe-by-design AI services

- be able to collect, process, store and manage data ethically, safely and securely

- test with real users and measure the performance of the service

- support the live running of the service, iterate and retire it

You can use the Government Digital and Data Profession Capability Framework to identify skill gaps in your team, forecast workforce needs and create effective and consistent job adverts. Be aware that your capability needs will change during the project life cycle: use the Service Manual to understand the roles you will need in the different phases of your project.

You will need the right balance between technical and domain expertise, depending on the nature of your project. As well as building a team that contains the right skills, make sure it includes a diversity of groups and viewpoints to help you stay alert to risks of bias and discrimination. Your team should include or be able to collaborate with:

- business leaders and experts who understand the context and impact on users and services

- data scientists who understand the relevant data, how to use it effectively, and how to build and train and test models

- software engineers who can build and integrate solutions

- user researchers and designers who can help understand user needs and design compelling experiences

- legal, commercial and security colleagues, as well as ethics and data privacy experts, who can help you make your AI solution safe and responsible

Considering the current shortage of AI talent, consider adopting strategies that combine new hires, working with contractors or third parties, and internal upskilling.

Acquiring skills and talent

The professional skills required for working in the digital space are outlined in the Government Digital and Data Profession Capability Framework. You can find more information on building a digital and data team in the government Service Manual.

As AI skills are in high demand, their inclusion in the government’s talent strategy is critical. Currently, the Government Digital and Data Profession’s approach involves integrating AI modules and learning materials into existing talent offerings. You can also source AI skills through senior digital secondments and internal collaboration with allied professions such as data engineering.

Raising general awareness of AI throughout the Civil Service and upskilling current digital and data professionals on AI remains crucial. Every civil servant can take up to 5 days of learning per year and some departments allow more days than others. It’s important that the members of your team have the necessary time and resources to update their knowledge and skills. Data, analytics and digital professionals can also benefit from having sandboxes and spaces to experiment with AI in a safe and secure way.

The cross-government user research on AI conducted by the Central Digital and Data Office (CDDO) in 2023 and 2024 identified the 5 groups of learners below.

- Beginners: civil servants who are new to AI and need to be familiar with its concepts, benefits, limitations and risks to be able to work with AI tools safely, responsibly and efficiently. Learning for this audience should focus on having an improved understanding of AI.

- Technical roles outside government digital and data: civil servants in roles such as operational delivery and policy profession who are likely to use AI in their work for tasks such as information retrieval and text or image generation. Learning for this audience should provide the necessary knowledge and skills to make effective and responsible use of appropriate AI tools – with particular attention given to generative AI, its limitations and prompt engineering.

- Data and analytics professionals: civil servants who work on the collection, organisation, analysis and visualisation of data, with varying levels of expertise in AI. Learning for this audience should advance their understanding of AI with a focus on the implementation and use of AI to facilitate automated data analysis, the synthesis of complex information, and the generation of predictive models.

- Digital professionals: civil servants with advanced digital skills who are likely to work on the development of AI solutions in government. Learning for this audience should address the technical aspects and implementation challenges associated with fostering AI innovation, and provide opportunities to collaborate with leading industries and academic institutions.

- Leaders: decision makers and senior civil servants who are responsible for creating an AI-ready culture in government. Learning for this audience should provide resources and workshops that are accessible to non-digital and data professionals and focus on the latest trends in AI, including its potential impact on organisational culture, governance, ethics and strategy.

The AI Policy Directorate in the Department for Science, Information and Technology (DSIT) has published, in collaboration with Innovate UK and the Alan Turing Institute, AI Skills for Business Guidance. While designed for business, this guidance offers a helpful overview of the knowledge and skills needed to harness the opportunities and navigate the practical challenges of AI.

Learning resources

We’ve launched a series of free online learning resources for all civil servants. The resources cover multiple topics:

- fundamentals of AI and generative AI, including their capabilities and limitations

- understanding AI ethics

- the business value of AI

- an overview of the main generative AI tools and applications

- courses on working with large language models, machine learning and deep learning, natural language processing and speech recognition and computer vision

- a technical curriculum with courses leading to certificates in different AI fields

AI courses are freely available within Civil Service Learning, as well as a series of AI courses from the Government Campus which can be accessed through the learning frameworks.

Senior civil servants, grade 7s and grade 6s, can also sign up to the AI course within The Digital Excellence Programme. This was designed by CDDO and the Government Skills and Curriculum Unit (GSCU) to support leaders outside of the digital and data profession to become pioneers of the government’s digital transformation.

Practical recommendations

- Make full use of the training resources available, including those on Civil Service Learning.

- Build a multidisciplinary team with all the expertise and support you need.

- Use multiple talent acquisition strategies and upskill current digital and data professionals in your team.

- Consider providing data, analytics and digital professionals with sandboxes and safe spaces to experiment with AI securely.

Working collaboratively

You need to work collaboratively to develop effective AI solutions. Your team should establish and maintain relationships with internal and external stakeholders with varying levels of familiarity with AI technologies.

You must be ready to collaborate with other teams in your organisation to address occasional knowledge and capability gaps in your team. Building AI solutions requires industry knowledge, whereas scaling and deploying these solutions will require considerations from software and/or technical infrastructure teams. Engaging these teams early on creates clear requirements and parameters for the proposed solutions, and sets a path for success.

To avoid siloed approaches and duplication of work, look for opportunities for cross-government and industry events that will help your team stay updated on the latest developments and best practices.

For example, consider joining the AI community of practice. This offers monthly meetings for people working on or interested in AI in the public sector. You can sign up to this community by using the dedicated form.

Depending on the nature of your project, you should also consider working with industry experts and academic institutions to foster knowledge exchange and access to cutting-edge research. Additionally, engaging with broader civil society will help you understand people’s values, concerns, and priorities, ensuring your AI solution meets the needs of the public we serve.

Defining the goal

Like all technology, using AI is a means to an end – not an objective in itself. Whether planning your first use of AI or a broader transformation programme, you should be clear on:

- the goals you want to achieve

- the needs of your users

- where you can most effectively use AI technologies, and where you should avoid them entirely

For example, you can use AI to automate and streamline processes, optimise the use of resources, foster data-driven decision making, and improve the quality and accessibility of some services. Goals for the use of generative AI may include improved public services or productivity, increased staff satisfaction, cost savings or risk reduction.

The sections below will help you understand when AI might be the right tool for your project.

User research for AI

User research (UR) can be critical to the success of your AI project. It is an important mechanism for engaging with people both inside and outside government to understand their needs, values and priorities. It provides key insights into the way the humans who will use the product or service behave, think and feel. This insight helps us ensure we use AI to deliver tangible benefits to individuals and society as a whole. It’s an exciting opportunity to collaborate on the design of an experimental approach, including the development of metrics, which will support the continuous improvement of your AI solution.

Doing UR for an AI project helps you keep the human in the loop and understand the human intelligence that the AI will replicate or imitate. Through UR, you can observe how people complete tasks and solve problems, understand their attitudes, and uncover the cultural issues that may impact the adoption and use of an AI solution. These insights will help you to identify where and how a human needs to be involved. You can find information about how to do user research in the government Service Manual.

Developing AI products and services involves some specific activities that user research can be particularly useful for. Some of these are critical to your AI project and some supplement the existing data science process. These include:

- understanding if AI is the right tool: do early UR to observe and speak with people who are involved in processes, whether these are internal users or the general public. This can help you understand users’ problems and aspirations. At this stage, a user researcher can work with a business analyst to find efficiencies in business processes and measure dissatisfaction. This will help establish a baseline of metrics to measure your project outcomes against. UR insights can also help you to work out an appropriate level of model accuracy when working with subjective outputs, and understand biases in the existing human process

- defining performance metrics: user researchers can work with analytical colleagues to use insights from research to define model and system metrics and methodologies for measuring ongoing performance

- preparing data: understand what data your users use, and how they think and talk about an activity. This will help you find, categorise and summarise appropriate training data. User researchers can help with supervised learning if you need human judgement to produce correct labelling of your training data. To consider how bias can affect labelling, refer to the Fairness, bias and discrimination section

- synthesising data: user researchers can help review and assess the appropriateness of generated synthetic data by developing and managing a process for bringing subject matter experts (SMEs) into the loop. If you’re planning to use synthetic data, check the UK Statistic Authority’s Ethical considerations relating to the creation and use of synthetic data

- evaluating your model’s output: as well as methods like statistical evaluation, it’s good practice to test the accuracy and relevance of your model’s outputs with a sample of your users. Methods such as reinforcement learning from human feedback (RLHF) are important to consider when training models – for example, when ranking different outputs. Humans can be particularly helpful when working on tasks that are difficult to specify but easy to judge, such as producing text that lacks bias, toxicity or other harmful content

- measuring usability of the product: observing how users respond to and use the outputs of your AI system will help you understand if they can use it to confidently complete their tasks. Design this testing into the way the AI solution is monitored, so that you can better understand data drift through user behaviour and changes over time

- understanding attitudes to the product or system: UR will help you understand levels of trust, confidence, and how the solution is used for decision making. These insights are useful to understand why model metrics might look good but service metrics are less positive. Model metrics measure how well your technology is performing, whereas service metrics help you understand if users’ needs and business goals are being met, which can be very different. For example, a machine learning (ML) algorithm could have a very high accuracy rate, but the users of the wider system could misinterpret its output and follow an unexpected course of action or completely ignore its recommendation through lack of trust

- accessibility: it’s particularly important to work out whether any users are being excluded from using your product or service, either intentionally or unintentionally. UR with a realistic sample can help you identify groups that may be excluded and why

- identifying how the system is being used: your AI solution may be used in ways you did not originally intend. Understanding why and what is happening can help you better meet the needs of your users and identify both risks and opportunities for innovation

- monitoring the AI solution while in service: building regular UR into how the solution is managed will help you continuously improve your product

The skills needed to collaborate on the steps above are within the usual skillset of most experienced user researchers. A user researcher on an AI project will need to:

- engage with the technology

- be adaptable in their approach

- design studies with technical and analytical colleagues

- use attitudinal methods

- understand how to do research that supports change management

- design studies to assess how user behaviour changes over time. For example, developing strategies for creating continuous feedback loops

- have experience doing generative research

- do concept testing

- understand privacy and data ethics

- be able to communicate in non-technical language to explain the AI system from a user perspective

Practical recommendations

- Consult the government Service Manual’s sections on user research.

- Get your organisation’s user researchers involved in the project from the start so that research and evaluation is designed into the way the AI solution is supported.

- Work with user researchers to design into the product or service ways of continuously assessing how effectively the AI model meets user needs.

- Read the example use case GOV.UK Chat: doing user research for AI products.

Identifying use cases for AI

When thinking about how your organisation could benefit from AI, consider the possible situations or appropriate use cases. This must be led by business and user needs, pain points and inefficiencies, not what the technology can do.

Once you’ve identified the challenges and opportunities through user research, focus on use cases that can only be solved by AI or where AI offers significant advantages over existing techniques.

You can do this by considering whether traditional solutions might be unable to handle the volume, complexity or real-time nature of the task; or whether the problem relates to, for example, advanced pattern detection in large data sets, automating complex, dynamic decision-making processes, or providing personalisation. Evaluate the potential impact of implementing AI solutions on these problems using tools like cost-benefit analysis to consider improvements in efficiency, accuracy or cost reduction.

You should also assess the feasibility of implementing AI in your team. Are the necessary skills and infrastructure in place to implement and maintain AI solutions? Would you need to train current employees, hire new talent or partner with AI technology providers?

When deciding if you’re going to use AI, you should also consider the capabilities and limitations of AI, the use cases to avoid, and discuss your project with technical experts.

To consider potential use cases of AI in your organisation, check the:

- examples of applications of AI in government

- appendix with case studies on AI projectsrecently developed in the public sector

- projects recorded in the Algorithmic Transparency Recording Standard (ATRS) register

Use cases to avoid

Given the current limitations of AI, and their ethical, legal and social implications, there are use cases that are not appropriate and which should be avoided in the public sector. These include but are not limited to:

- fully automated decision making: be cautious about any use case involving significant decisions, such as those involving someone’s health or safety. For more detail, refer to the section on Human oversight

- high-risk or high-impact applications: AI should not be used on its own in high-risk areas which could cause harm to someone’s health, safety, fundamental rights or the environment

Follow the principles of using AI safely and responsibly to check if your use case involves significant risks related to bias, fairness, transparency, privacy or human rights.

Practical recommendations

- Define clear goals for your use of AI, and ensure they’re consistent with your organisation’s AI roadmap.

- Select use cases which meet a clear need and fit the capabilities of AI.

- Find out what use cases other government organisations are considering.

Understand the limitations of AI and avoid high-risk use cases, considering the principles of using AI safely and responsibly.

Creating the AI support structure

To ensure your organisation adopts AI smoothly, consider how AI will change the way your people and processes work. Check that you have the structures in place to support its adoption.

These structures do not need to be fully mature before your first project. Your experience in your first AI project will shape the way you organise these structures.

However, you should make sure that you have sufficient control over how you use AI and ensure all AI systems operate in a safe and responsible environment. If you do not already have them in place, you should establish the following:

- AI strategy and adoption plan: a clear statement of the way that you plan to use AI within your organisation, including the impact on existing organisation structures and change management plans

- AI principles: a simple set of top-level principles which embody your values and goals, and that can be followed by all the people building solutions

- AI governance board: a group of senior leaders and experts to set principles and review and authorise uses of AI which fit these principles

- AI communication strategy: your approach for engaging with internal and external stakeholders to gain support, share best practice and show transparency

- AI sourcing and partnership strategy: a definition of which capabilities you will build within your own organisation, and which you will seek from partners

- AI training: resources that your team can use to upskill, based on a learning needs analysis

You should also consider using:

- a change management team: a small team with access to senior leadership that can shape your approach

- a use cases register: a way of capturing use cases and prioritising which you would like to explore first

- monitoring systems: to gather feedback and quickly identify emerging risks throughout change processes and for the duration of the system’s life cycle

- review and change processes: to provide staff with sufficient time, information and tools to identify and adapt to risks that emerge during a change process

- fallback processes: to ensure that critical functionality and services can be maintained if a change must be reverted and/or an AI system terminated

Practical recommendations

- Identify the support structures you need for your level of AI maturity and adoption, or reuse support structures already in place for other technologies.

- Design an appropriate method of capturing and prioritising opportunities based on feasibility and business value.

- Develop a communication strategy for engaging a wider community of leaders and staff to explain AI, demonstrate activity and reduce resistance to change.

Buying AI

While AI is not new, the AI supply market is evolving rapidly. It’s important that you engage with commercial colleagues to discuss partners, pricing, products and services.

The Crown Commercial Service (CCS) can guide you through:

- existing guidance

- routes to market

- specifying your requirements

- running your procurement

They can also help you navigate:

- running your procurement in an emerging market

- regulation and policy

- aligning procurement and ethics

AI business cases

Creating the business case for your AI project is an important stage in the process of AI adoption as it gives decision makers the opportunity to assess the return of investment in both resources and costs.

Before writing your business case, you should engage with stakeholders and discuss your project to understand if AI needs to be used, and, if so, which solutions offer the best improvements in productivity.

There are several resources you can use to write a strong business case. Consider following the Treasury’s Green Book (2022) guidance to create a fully fledged, five-part business case. This may be a requirement depending on the scale of your project and investment.

Your organisation will likely have investment thresholds. Typically, any investment approaching £10 million will require a five-part business case. You must use the Green Book when that is the case. If the scale of investment is below this threshold, you should strongly consider using the guidance on agile business cases, which was developed by the Government Digital Service (GDS).

When working on your business case, note that it’s mandatory to assure all digital and technology spend above £100,000 for anything public facing and £1 million for anything else, through your assurance boards. The process for doing so will be subject to your organisation’s requirements. Follow GDS’s guidance on getting spend approvals.

In the following section, we summarise existing guidance on purchasing AI products and services. However, as the field is evolving rapidly this is not exhaustive, and engaging with commercial experts early in your project remains crucial.

Existing guidance

There is detailed guidance to support the procurement of AI in the public sector. You should familiarise yourself with this guidance and make sure you’re taking steps to align with best practice. The:

- Guidelines for AI procurement provide a summary of best practice when buying AI technologies in government, including preparation and planning, publication, selection, evaluation and award and contract implementation and ongoing management

- Digital, Data and Technology Playbook provides general guidance on sourcing and contracting for digital and data projects and programmes. All central government departments and their arm’s length bodies are expected to follow this on a ‘comply or explain’ basis. It includes specific guidance on AI and machine learning, as well as Intellectual Property Rights (IPR)

- Sourcing Playbook defines the commercial process as a whole and includes key policies and guidance for making sourcing decisions for the delivery of public services

- Knowledge asset management in government (The Rose Book) provides guidance on managing and exploiting the wider value of knowledge assets (including software, data and business processes). Annex D contains specific guidance on managing these in procurement

- Digital and technology spend control version 6 details in which instances your requirements will be subject to functional assurance of central government spending. Spend controls enable the UK government to achieve greater efficiency and better outcomes

- Procurement Policy Note (PPN) 02/24: Improving Transparency of Artificial Intelligence Use in Procurement provides optional questions to help identify suppliers’ use of AI in response to government procurements and in the delivery of services to government

Routes to market

Consider the available routes to market and commercial agreements and determine which one is most suitable based on your requirements.

However you decide to source your requirement, you must ensure you comply with procurement legislation. This is primarily the Public Contract Regulations 2015 and the Procurement Act 2023, which is set to come into effect in 2025. Commercial colleagues will be able to assist with this.

There are various routes to market to purchase AI systems. Depending on the kind of challenges you’re addressing, you may prefer to use a framework or a Dynamic Purchasing System (DPS). CCS offers several frameworks and DPSs for the public sector to procure AI.

A public-sector procurement route also exists, sometimes known as a ‘Find a tender service procurement’ due to the requirement to advertise on that platform. This may be appropriate for bespoke requirements or contractual terms, or where there is no suitable standard offering.

The table below summarises the differences between a framework agreement and a DPS. There’s more information on the CCS website and, on the use of frameworks, in the Digital, Data and Technology Playbook.

| Category | Framework | DPS |

|---|---|---|

| Supplier access | Successful suppliers are awarded to the framework at launch. Closed to new supplier registrations. Prime suppliers can request to add new subcontractors. |

Open for new supplier registrations at any time. |

| Structure | Often divided into lots by product or service type. | Suppliers filterable by categories. |

| Compliance | Thorough ongoing supplier compliance checks carried out by CCS, meaning buyers have less to do at call-off (excluding G-Cloud). | Basic compliance checks are carried out by CCS, allowing the buyer to complete these at the call-off. |

| Buying options | Various options, including direct award, depending on the agreements. | Further competition only. |

Note that a number of CCS agreements include AI within their scope.

Examples of DPSs include:

Examples of frameworks include:

- Big Data and Analytics

- Technology Products & Associated Services 2

- Technology Services 3

- Back Office Software

- Cloud Compute 2

In addition to commercial agreements, CCS has signed a number of Memorandums of Understanding (MoUs) with suppliers. These MoUs set out preferential pricing and discounts on products and services across the technology landscape, including cloud, software, technology products and services, and networks. You can access MoU savings through any route to market. To get support or find out more, email info@crowncommercial.gov.uk.

Specifying your requirements

When buying AI products and services, you’ll need to document your requirements to tell your suppliers what you need. To define what you need, you should engage with subject matter experts (SMEs) as soon as possible, and take time to consider the most appropriate type of AI solution for your project. This might be an off-the-shelf product, an existing technology with bolt-on AI elements (paid or free), outsourcing AI builds (if applicable), or co-creating AI with suppliers. The Digital, Data and Technology Playbook has guidance on commercial off-the-shelf (COTS) software licensing terms and build versus buy decisions.

When drafting requirements for AI, you should:

- start with your problem statement

- highlight your data strategy and requirements

- focus on data quality, bias (mitigation) and limitations

- underline the need for you to understand the supplier’s AI approach

- consider strategies to avoid vendor lock-in

- apply the Data Ethics Framework principles and consider the appropriate Data ethics requirements and questions (PDF, 62.7KB)

- mention any integration with associated technologies or services

- consider your ongoing support and maintenance requirements

- consider data format and provide suppliers with dummy data where possible

- provide guidance on budget to consider hidden costs

- consider intellectual property rights and who will have these if new software is developed

- consider any acceptable liabilities and appetite for risk, to match against draft terms and conditions, once provided

For more information, read the ‘Selection, evaluation and award’ section of the Guidelines for AI Procurement and CCS’s guide on How to write a specification.

Having prepared your procurement strategy, defined your requirements and selected your commercial agreement, you can proceed with your procurement. Your commercial team will lead this.

If you’re using an existing commercial agreement such as a framework or DPS, you’ll conduct a call-off in accordance with the process set out in the relevant commercial agreement. Non-framework or DPS procurements must comply with procurement legislation and relevant policy – for example, the Public Contract Regulations 2015, the Procurement Act 2023 and PPNs.

CCS offers buyer guidance tailored to each of its agreements, which describe each step in detail, including completing your order contract and compiling your contract.

Running your procurement in an emerging market

Commercial agreements

While AI is not new, it is an emerging market from a commercial perspective. As well as rapidly evolving technology, there are ongoing changes in the supply base and the products and services it offers. DPSs offer flexibility for new suppliers to join, which often complement these dynamics for buyers.

Any public sector buyers interested in shaping CCS’s longer term commercial agreement portfolio should express their interest by emailing info@crowncommercial.gov.uk.

Regulation and policy

Regulation and policy will also evolve to keep pace. However, there are already a number of legal and regulatory provisions which are relevant to the use of AI technologies. These include:

- A pro-innovation approach to AI regulation: government response: this details the government’s response to the white paper consultation published in March 2023, which set out early steps towards establishing a regulatory regime for AI, including 5 principles to guide responsible AI innovation in all sectors

- Portfolio of AI assurance techniques: this portfolio has been developed by the Responsible Technology Adoption Unit (RTAU), initially in collaboration with techUK. It’s useful for anybody involved in designing, developing, deploying or procuring AI-enabled systems. It shows examples of AI assurance techniques being used in the real world to support the development of trustworthy AI

- Introduction to AI assurance: this guidance has been developed by the Department for Science, Information and Technology (DSIT) to help private sector organisations better understand how to implement AI assurance to ensure the responsible development and deployment of AI systems. It’s designed to be accessible to a range of users, especially those who may not engage with assurance on a day-to-day basis. It introduces them to core assurance definitions and concepts and then how these can be applied to support the development and use of trustworthy AI

- AI Management Essentials: DSIT is developing guidance to support private sector organisations to engage in the development of ethical, robust and responsible AI organisational practice. This self-assessment tool will distil key principles from existing AI-related standards and frameworks and provide simple baseline requirements for government suppliers of AI products and services. After testing and consultation, DSIT is planning to work with the Cabinet Office to embed the tool in government procurement frameworks

This list is not exhaustive. For further guidance, refer to the Legal considerations section.

Aligning procurement and ethics

It’s important to consider and factor data ethics into your commercial approach from the outset.

There’s a range of guidance relating to AI and data ethics to support public servants working with data and/or AI. This guidance collates existing ethical principles, developed by government and public sector bodies. You can also refer to the: