Forensic science providers: validation (accessible)

Updated 22 July 2024

Issue 2

1. Executive summary

1.1.1 Forensic science is science applied to matters of the law. It is an applied discipline, meaning that scientific principles and practices are employed to obtain results that the courts can reasonably expect to be shown to be reliable.

1.1.2 Validation involves demonstrating that a method used for any form of analysis is fit for the specific purpose intended, i.e. the results can be relied on. It is the expectation of the Forensic Science Regulator (the Regulator) that all methods routinely employed within the Criminal Justice System (CJS), whether for intelligence or evidential use, will be validated prior to their use on live casework material.

1.1.3 The term forensic science provider has been replaced by the term ‘forensic unit’. The term is used to refer to all units that provide forensic science services, including small teams in larger organisations, internal suppliers, sole practitioners or large forensic science providers. It encompasses those units instructed by the prosecution or the defence, as well as those conducting forensic science activities as part of other investigative activities.

1.1.4 The requirement to use valid methods, and the responsibility for demonstrating that the forensic unit’s methods are fit for purpose, rest with the forensic unit itself. That said, this guidance recognises that data from the scientific literature, manufacturers or central agencies can form part of this determination.

1.1.5 The requirement is for each of the steps of the validation process detailed here to be completed, whether the forensic unit is producing the objective evidence against its requirements itself or objectively reviewing data produced by others for relevance, reliability and completeness. The forensic unit must assess whether the existing data are relevant to the defined end-user requirement and specification and/or whether further validation data are required. It may then be possible to produce verification data to demonstrate that the forensic unit is competent to perform the test/examination. This second stage is sometimes summed up as ‘demonstrating that it works in your hands’. In such cases, a forensic unit is expected to produce adequate objective evidence to show that the method works, to the required standards, at each site [footnote 1] where it is employed and in each configuration in which it is employed.
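The expectation of objective evidence for every site and every configuration in use amounts to a coverage check over each combination actually deployed. The sketch below is purely illustrative: the site and instrument names, and the idea of recording evidence as a set of (site, configuration) pairs, are hypothetical assumptions rather than a format prescribed by the Codes.

```python
def verification_gaps(deployments, evidence):
    """List every (site, configuration) in use that lacks recorded evidence.

    Hypothetical data model (not prescribed by the Codes):
    deployments: dict mapping a site name to the set of configurations used there.
    evidence: set of (site, configuration) pairs with objective evidence on file.
    """
    gaps = []
    for site, configurations in sorted(deployments.items()):
        for configuration in sorted(configurations):
            if (site, configuration) not in evidence:
                gaps.append((site, configuration))
    return gaps


# Hypothetical example: one method used at two sites, one of which runs it
# in two instrument configurations.
deployments = {
    "Site A": {"Instrument 1"},
    "Site B": {"Instrument 1", "Instrument 2"},
}
evidence = {("Site A", "Instrument 1"), ("Site B", "Instrument 1")}

print(verification_gaps(deployments, evidence))
# → [('Site B', 'Instrument 2')]
```

Any pair the function returns is a deployment for which the forensic unit would still need to show that the method ‘works in its hands’.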

1.1.6 The risk assessment element of this framework is an important way of ensuring that the validation study is scaled appropriately to the needs of the end-user, which for the most part is assumed to be the CJS rather than any particular analyst or intermediate user.

1.1.7 The Regulator’s Codes of Practice and Conduct for Forensic Science Providers and Practitioners in the Criminal Justice System (the Codes) require that the completed validation paperwork contains comparable features irrespective of the approach taken in the validation study, whether the method was developed in house or is an adopted method.

1.1.8 Part of that completed paperwork is a short statement of validation completion (i.e. two sides of A4), signed off on behalf of the forensic unit, which provides those making decisions on the use of the results with an executive summary of the validation and any key issues or caveats about a method.

1.1.9 Producing a short document illustrating what a method can and cannot do is a key requirement in the Codes and the Crown Prosecution Service’s ‘Core Foundation Principles for Forensic Science Providers’.

2. Issue

2.1.1 Issue 2 of this guidance was issued on 22 September 2020.

3. Modification

3.1.1 This is the second issue of this document.

3.1.2 Significant changes to the text have been marked up as inserts.

3.1.3 The modifications made to create Issue 2 of this document were, in part, to ensure compliance with The Public Sector Bodies (Websites and Mobile Applications) (No. 2) Accessibility Regulations 2018. [footnote 2]

3.1.4 The Regulator uses an identification system for all documents. In the normal sequence of documents this identifier is of the form ‘FSR-#-###’ where (a) the ‘#’ indicates a letter to describe the type of document and (b) ‘###’ indicates a numerical, or alphanumerical, code to identify the document. For example, the Codes are FSR-C-100. Combined with the issue number, this ensures each document is uniquely identified.

3.1.5 In some cases, it may be necessary to publish a modified version of a document (e.g. a version in a different language). In such cases the modified version will have an additional letter at the end of the unique identifier. The identifier thus becomes FSR-#-####.

3.1.6 In all cases the normal document, bearing the identifier FSR-#-###, is to be taken as the definitive version of the document. In the event of any discrepancy between the normal version and a modified version the text of the normal version shall prevail.
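The identifier scheme in 3.1.4 to 3.1.6 can be expressed as a pattern. This is a minimal sketch that assumes the code part is exactly three characters (as the ‘###’ placeholder suggests) and that a modified version adds a single trailing capital letter; ‘FSR-C-100W’ below is a hypothetical modified identifier, not a real document.

```python
import re

# Assumed pattern: "FSR-", one letter for the document type, "-", a
# three-character numerical or alphanumerical code, then an optional single
# letter marking a modified version. The code length is an assumption.
FSR_ID = re.compile(r"FSR-([A-Z])-([A-Z0-9]{3})([A-Z])?")


def parse_fsr_id(identifier):
    """Split an FSR identifier into its parts, or raise ValueError."""
    match = FSR_ID.fullmatch(identifier)
    if match is None:
        raise ValueError(f"not a recognised FSR identifier: {identifier!r}")
    doc_type, code, modifier = match.groups()
    return {
        "type": doc_type,
        "code": code,
        "modified": modifier is not None,
        # Per 3.1.6, the normal (unmodified) version is the definitive one.
        "definitive_id": f"FSR-{doc_type}-{code}",
    }


print(parse_fsr_id("FSR-C-100"))
# → {'type': 'C', 'code': '100', 'modified': False, 'definitive_id': 'FSR-C-100'}
```

For the hypothetical ‘FSR-C-100W’, the sketch reports a modified version whose definitive text is FSR-C-100, mirroring the rule that the normal version prevails in any discrepancy.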

4. Introduction

4.1 Background

4.1.1 In R. v. Sean Hoey, Justice Weir quoted from the House of Commons Science and Technology Committee’s report that:

[The] absence of an agreed protocol for the validation of scientific techniques prior to their being admitted in court is entirely unsatisfactory. Judges are not well placed to determine scientific validity without input from scientists.

4.1.2 Justice Weir went on to quote the UK Government’s response that:

Establishment of a regulator is one of the options to be considered, as is how the courts can be supported in appropriately weighing scientific evidence.

4.1.3 The Forensic Science Regulator (the Regulator) took up post in 2008 and published a protocol for method validation in the ‘Codes of Practice and Conduct for Forensic Science Providers and Practitioners in the Criminal Justice System’ (the Codes). This guidance document is published to support the Codes.

4.1.4 The Criminal Procedure Rules (CrimPR) require that the expert’s statement explicitly provides information to assist the court in determining whether the evidence should be admissible (Rule 19.4.h).

4.1.5 To support this rule, the Lord Chief Justice of England and Wales has amended the Criminal Practice Directions, providing the following factors which the court may wish to take into account in determining the reliability of evidence.

a. The extent and quality of the data on which the expert’s opinion is based, and the validity of the methods by which they were obtained.

b. If the expert’s opinion relies on an inference from any findings, whether the opinion properly explains how safe or unsafe the inference is (whether by reference to statistical significance or in other appropriate terms).

c. If the expert’s opinion relies on the results of the use of any method (for instance, a test, measurement or survey), whether the opinion takes proper account of matters, such as the degree of precision or margin of uncertainty, affecting the accuracy or reliability of those results.

d. The extent to which any material upon which the expert’s opinion is based has been reviewed by others with relevant expertise (for instance, in peer-reviewed publications), and the views of those others on that material.

e. The extent to which the expert’s opinion is based on material falling outside the expert’s own field of expertise.

f. The completeness of the information which was available to the expert, and whether the expert took account of all relevant information in arriving at the opinion (including information as to the context of any facts to which the opinion relates).

g. If there is a range of expert opinion on the matter in question, where in the range the expert’s own opinion lies and whether the expert’s preference has been properly explained.

h. Whether the expert’s methods followed established practice in the field and, if they did not, whether the reason for the divergence has been properly explained.

4.1.6 The Criminal Practice Directions could be considered to clarify the requirement of the end-user (i.e. the courts in England and Wales) that the method be valid, as well as certain features to be teased out in the validation. Time will tell how courts will interpret the directions and whether the other jurisdictions in the United Kingdom will adopt or give cognisance to them.

4.2 Nature of validation

4.2.1 The Regulator defines the validation of scientific methods in the Codes as:

The process of providing objective evidence that a method, process or device is fit for the specific purpose intended.

4.2.2 Validation is to ensure that a method produces results that can be relied on. The importance of ensuring that methods are validated before they are used in casework should, therefore, be self-evident. There are notable cases where the reliability and/or admissibility of evidence was at issue, for example R. v. Sean Hoey, R. v. Reed & Anor and R. v. Broughton.

4.2.3 If a forensic unit says that it has not validated, or cannot validate, a method, is it saying that it cannot provide evidence that the method is reliable? It would be wise for a forensic unit to consider how it can demonstrate reliability before it is questioned.

4.2.4 The Court of Appeal (Criminal Division) in R. v. Harris & Ors. made it clear that the courts are free to consider the admissibility of developments in scientific thinking, techniques or methods where formal validation studies are outstanding or incomplete. The courts remain free to consider all possible sources of evidence no matter how novel, but may rule scientific results inadmissible where the reliability of a method has not been demonstrated.

4.2.5 It is also worth noting that a method considered reliable in one setting may not measure up to the more stringent requirements that arise in the setting of a criminal trial. In Lundy v The Queen, the Judicial Committee of the Privy Council noted:

It is important not to assume that well established techniques which are traditionally deployed for the purpose of diagnosis can be transported, without modification or further verification, to the forensic arena where the use to which scientific evidence is put is quite different from that involved in making a clinical judgment.

4.2.6 The Criminal Practice Directions suggest what the courts may wish to consider should admissibility be at issue, and validity of the method is first on the list. With that in mind, the Codes require that the forensic unit ensures that the status of the validation for a product, method or service is clearly communicated to the customer and that this is, in turn, made clear to the courts.

4.3 How to use this guidance

4.3.1 The Regulator has outlined the requirements for method validation in the Codes. This guidance has been produced to provide background information to the Codes and to assist in the implementation of its requirements.

4.3.2 This guidance adopts a descriptive rather than a prescriptive style, although it does contain some lists of things that it might be wise to consider when formulating plans. It is accepted that there may be other ways of achieving the same goals, so it is implicit that a departure from the guidance may be entirely acceptable, or even advisable, if alternative approaches can give the same level of assurance in a more expedient way.

4.3.3 This is a guidance document and does not modify the requirements laid down in ISO17025, ISO17020, ISO15189, the International Laboratory Accreditation Cooperation’s document ILAC-G19:08/2014 or the Codes. In the event of any inadvertent conflict between the standards and this guidance then the standards prevail.

4.3.4 Validation is required whether the forensic unit implements ISO17025, ISO17020, ISO15189 or even no international standard. The requirement is that methods of inspection/examination/testing shall be fit for purpose and the method of demonstrating fitness for purpose is validation.

4.4 Further reading

4.4.1 Although this guide covers general issues, readers unfamiliar with the topic may wish to consult other material, including one or more of the following.

a. CITAC/Eurachem (2016) Guide to Quality in Analytical Chemistry – 3rd Edition.

b. Eurachem (2014) The fitness for purpose of analytical methods.

c. LGC (2003) In-House Method Validation: A Guide for Chemical Laboratories, ISBN: 978-0948926181.

5. What requires validation?

5.1 Scope

5.1.1 The International Laboratory Accreditation Cooperation (ILAC) organisation has the aim of harmonising laboratory and inspection accreditation practices internationally. The ILAC-G19:08/2014 publication Modules in a Forensic Science Process, generally referred to as ILAC-G19, defines the forensic science process to include the following.

a. Initial discussion regarding scene of crime attendance.

b. Undertaking initial actions at the scene of crime.

c. Developing a scene of crime investigation strategy.

d. Undertake scene of crime investigation.

e. Assess scene of crime findings and consider further examination.

f. Interpret and report findings from the scene of crime.

g. Examination, testing and presumptive testing (including appropriate case assessment).

h. Interpretation of the result of examinations and tests.

i. Report from examinations and tests including interpretation of results.

5.1.2 ILAC-G19:08/2014 says that:

Irrespective of whether the forensic unit implements ISO/IEC 17025 or ISO/IEC 17020, methods of examination/testing shall be fit for purpose. In demonstrating this, the forensic unit will need to refer to appropriate validation/verification data. (3.10)

5.1.3 This definition encompasses the idea that, even if a method is not carried out in a traditional laboratory, the courts expect that it can be demonstrated to be reliable. The term ‘forensic unit’ is now used across many of the Regulator’s documents because the term forensic science provider has long been used in policing as synonymous with an external provider, whereas the Regulator’s Codes and validation requirements apply equally to internal forensic units in forces. The term forensic unit is used in this document to refer to small teams in larger organisations, sole practitioners or large forensic science providers. Forensic units may be within law enforcement or independent; the use of valid methods is required irrespective of whether the forensic unit is instructed by the prosecution or the defence.

5.1.4 The Codes require that the completed validation paperwork contains comparable features irrespective of the approach taken in the study. That is, the collated paperwork contains the same material whether the whole of the validation study was conducted in-house or by the wider scientific community. The requirement is that the relevance and reliability of any existing validation is verified. How can the forensic unit demonstrate that the user requirement has been reviewed if this review cannot be produced to the accreditation body or to the court?

5.1.5 The process discussed in Section 7 shows the stages that apply whether it is a new validation study or a review/verification against an existing validation study. The process is intended to enable an expert to provide objective evidence that a method is fit for purpose, whether it was developed in house or is an adopted method.

5.1.6 There is an assumption in the Codes that there is little to be gained from retrospectively creating documents when the validated method is already within the schedule of a forensic unit’s accreditation. In such cases the Codes require that a comparable ‘library’ of documents is, or at least can be, compiled. [footnote 3] The existing validation will have something comparable for many of the sections, but do not neglect compiling the scientific papers, or other materials, that underpin a method as well as any papers critical of the method. It is expected that there will be a natural convergence towards completing the library as methods are updated, reviewed and modified, or new ones are introduced. Further detail is given in 7.10 Validation Library.

5.2 Standard versus non-standard methods

5.2.1 ISO/IEC17025:2017 (7.2.2.1) says that the “laboratory shall validate non-standard methods, laboratory-developed methods and standard methods used outside their intended scope or otherwise modified”, so it is worth discussing here whether there are standard methods in forensic science and, even if there were, what would be expected.

5.2.2 What constitutes a ‘standard method’ is not defined in ISO17025; however, some of its features are alluded to, suggesting that a standard method comes from a reputable organisation and that the published method:

a. Contains concise information on how to perform the tests;

b. Does not need to be supplemented or rewritten as internal procedures; and

c. Can be used as published by the operating staff in a laboratory.

5.2.3 Even if a method were to be officially recognised as ‘standard’ (although by whom it is unclear), the requirement to demonstrate that it is valid, appropriate for the purpose, and that the forensic unit can properly perform it and achieve the required performance remains (e.g. ISO/IEC17025:2017 - 7.2.1.5). ILAC-G19, which covers the standards that may be applied in forensic science, requires this irrespective of which standard is implemented, as do the Codes. Although this guidance cross-references documents relevant for accreditation, use of valid methods is a requirement irrespective of whether accreditation is required or not.

5.2.4 This guidance includes sections on Verification (6.2), Forensic unit competence (6.3) and assessing the Reliability of external objective evidence (6.4), which are relevant.

5.3 Method validation

5.3.1 The validation definition provided in 4.2.1 includes any method, process or device. For ease of reading, this guidance will refer only to the method in the text, even if it could also mean process, procedure, software tool, expert system, etc. It is generally the method, the use that something is put to, that can truly be validated rather than any sub-processes, component parts, devices or tools.

5.3.2 For instance, a pipette could be validated to show that it is fit for the purpose of moving a measured volume of liquid, but such a validation says little about the wider process it is being put to, say DNA profiling. In reality it is calibration that is carried out on such instruments, not validation. This is a useful distinction, as an instrument or software may be employed as part of a method but it is only those features that have an impact on the result that are likely to be required to be included in the validation, i.e. showing that it is fit for the purpose.

5.3.3 The focus for accreditation in the first edition of the Codes was on the laboratory,[footnote 4] but the second and subsequent editions include the crime scene in their scope. The requirement to demonstrate the reliability of scientific evidence clearly extends to any methodology, wherever it is used, when the operation has an impact on the results obtained. Therefore, validation should be the norm.

5.4 Evidential versus intelligence

5.4.1 Neither the Codes nor ILAC-G19 differentiate between methods for evidential, intelligence or screening use. All methods should be validated for the particular purpose they are intended for prior to implementation. Results provided as intelligence are likely to be acted on; the officer in the case will need to be able to support their actions and there will often be an expectation that the intelligence can be converted into evidence.

5.4.2 An aspect of a validation is to define what a method (or the output of a method) should be used for and any caveats that might apply, e.g. error rates. This does not mean that a test designed to be a presumptive test has to be tested as if it was an evidential method. The specification derived from the end-user requirement defines the purpose, validation provides objective evidence that it is fit for that purpose.

5.4.3 Provided any caveats that apply (e.g. an x% error rate) are clear when investigators are weighing up the other intelligence they have to support a decision, then that risk is minimised or at least controlled. However, if the end-user requirement is for an intelligence product, then the scientist’s report should be designed to assist intelligence analysts to assess, package and present the results correctly. The most obvious way of doing this is to be aware of how intelligence is graded within the National Intelligence Model (NIM) [18] [19] to produce standard intelligence products and consider the highest rating that an analytical result from the method ought to ever receive. [footnote 5]

6. Internal validation

6.1 Rationale

6.1.1 The requirements laid out in the Codes are for each of the steps of the validation process to be completed, whether the user is producing the objective evidence themselves or objectively reviewing data produced by others for relevance, reliability and completeness. The expert presenting the evidence will be the one who will have to convince the court that it is admissible. Unfamiliarity with the objective evidence that shows a method to be reliable could raise doubts that grow to undermine the court’s view of its reliability.

6.2 Verification

6.2.1 The term ‘verification’ is often used in conjunction with or as an alternative term for ‘validation’, which causes confusion.

6.2.2 ILAC-G19:08/2014 (3.10) refers to verification thus:

When a method has been validated in another organization the forensic unit shall review validation records to ensure that the validation performed was fit for purpose. It is then possible for the forensic unit to only undertake verification for the method to demonstrate that the unit is competent to perform the test/examination.

6.2.3 The Regulator defines verification thus:

Confirmation, through the assessment of existing objective evidence or through experiment, that a method, process or device is fit (or remains fit) for the specific purpose intended. There is an overriding requirement that there is evidence that the provider’s own competent staff can perform the method at the given location.

6.2.4 ISO/IEC17025:2017 defines verification as the “provision of objective evidence that a given item fulfils specified requirements”, as opposed to validation which is defined as “verification, where the specified requirements are adequate for an intended use”.

6.2.5 There is nothing particularly contradictory about any of the descriptions or definitions, although all those working in the forensic sciences would be best served by not trying to reinterpret the definitions again from first principles, as the descriptions in ILAC-G19:08/2014 and the Codes reflect how these matters are actually assessed in practice.

6.2.6 Before verification of performance, the forensic unit must review/assess/verify that the external/developmental validation:

a. was relevant to the way that the forensic unit intends to use the method (e.g. covering the equivalent end-user requirements and being used in the same manner); and

b. had been conducted in a scientifically robust manner.

6.2.7 Internal validation is probably a better term than verification, as it is less likely to be misconstrued. This is in contrast to the larger, more in-depth study, which is sometimes called a developmental validation. [footnote 6] The requirement is for validation; even seemingly established methods may need more experimental work to show that they meet the user requirement if the existing (developmental) validation study does not cover all the requirements. However, as the term verification is in common usage, it cannot be dropped entirely.

6.2.8 There is an overriding requirement for evidence that the forensic unit’s own competent staff can perform a method at a given location.[footnote 7] This is the requirement whether a developmental validation has been done by the forensic unit’s own research department or the method has been adopted. This topic is explored further in Section 6.3.

6.2.9 Objective evidence produced by another party to support a method implemented in a different manner, or for a similar but different use, might not be adequate. Even an identical implementation will require an assessment of the scope, reliability and rigour of the existing validation study, and even if instrumentation variation is controlled by external calibration, there will always remain local variation in staff skills and competence.

6.2.10 Therefore, verification can be thought of as demonstrating the following.

a. The existing objective evidence produced externally is relevant, available and adequate for the intended specific purpose, and that a method performs reliably at the given location with the forensic unit’s own staff; or

b. A method remains fit for the specific purpose following a minor change in the process, where the change does not require revalidation of the method. [footnote 8]

6.2.11 It is unlikely that the accreditation body will be satisfied with anything less than objective evidence produced:

a. By the forensic unit’s competent staff;

b. At the given location (or validated for crime scene work); and

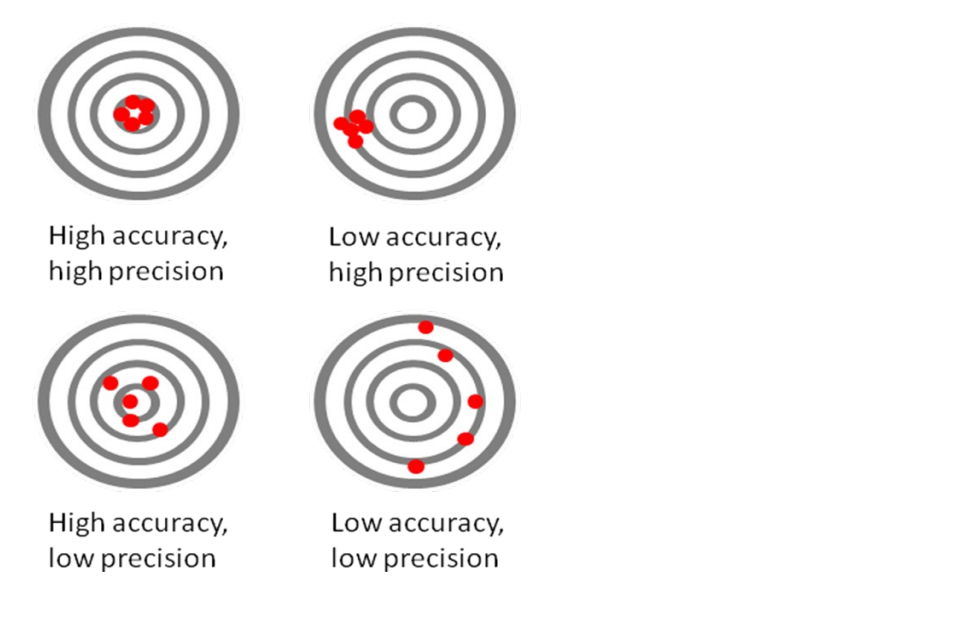

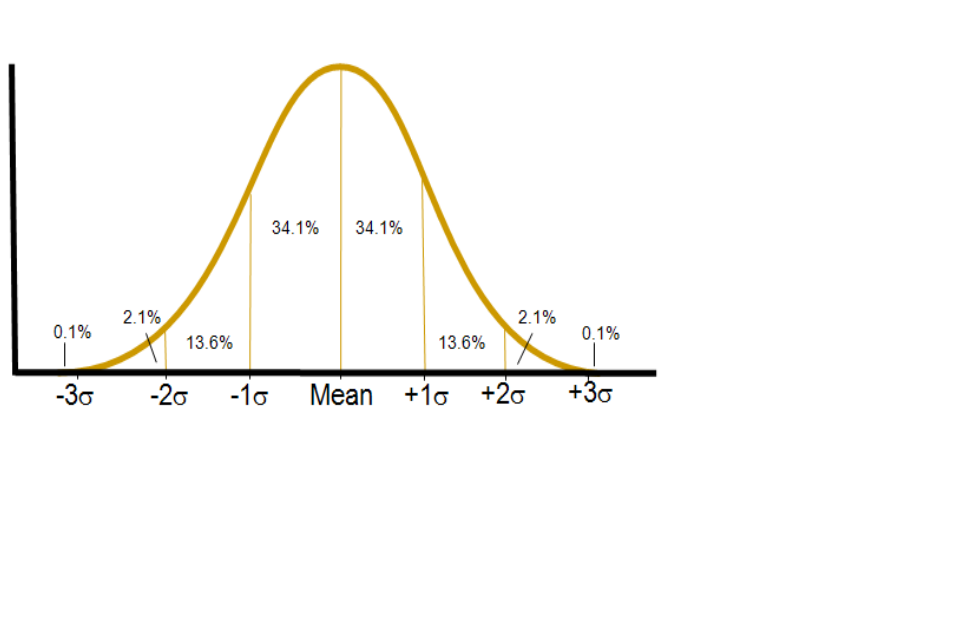

c. Showing the level of precision, accuracy, and probably the limit of detection that they can achieve following a documented review of the existing validation records.

6.3 Forensic unit competence

6.3.1 Technical competence is essential for delivering quality forensic science to the Criminal Justice System (CJS). The aim of accreditation is to provide independent assurance that a forensic unit has demonstrated the technical competence to produce valid and accurate data/results.

6.3.2 Therefore, the forensic unit using a method must produce objective evidence of fitness for purpose for features of this method that are likely to be influenced by any local variation in implementation. This is sometimes summed up as ‘demonstrating that it works in your hands’.

6.3.3 The objective evidence required should therefore show how well this has been achieved, as discussed in 4.2. However, the Codes require this check to be against the required specification for the specific use that a method is being employed for, rather than simply against existing published data. This allows for differences in the requirements; e.g. if the National Physical Laboratory published data using its sensitive equipment on a method, is the local requirement really for the same level of precision or accuracy?

6.3.4 Providing objective evidence for any given operational parameter relies on a thorough understanding of the performance parameters. There may be advice in the literature to assist in setting statistically significant sample sizes [footnote 9] or in supporting the decision arrived at, but it may be necessary to seek the advice of statisticians should nothing already be available. Sound statistical design may allow far less testing in the long run to achieve the same level of confidence in a range of operational parameters.
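As one illustration of how statistics can set a sample size, the sketch below uses a common textbook approach for pass/fail tests, the zero-failure binomial bound; it is not a method prescribed by the Regulator. It assumes independent trials, a binary correct/incorrect outcome and no errors observed. If the true error rate were p, the chance of n error-free trials is (1 − p)^n, so n is chosen to make that chance no larger than 1 minus the desired confidence.

```python
import math


def trials_for_zero_failures(max_error_rate, confidence=0.95):
    """Smallest n such that n error-free trials support 'error rate < max_error_rate'.

    Assumes independent pass/fail trials with no errors observed.
    """
    return math.ceil(math.log(1 - confidence) / math.log(1 - max_error_rate))


def upper_error_bound(n_trials, confidence=0.95):
    """One-sided upper confidence bound on the error rate after n error-free trials."""
    return 1 - (1 - confidence) ** (1 / n_trials)


print(trials_for_zero_failures(0.01))  # → 299
```

The result is close to the familiar ‘rule of three’ approximation (an upper bound of about 3/n after n error-free trials). If errors are observed, or the outcome is a measurement rather than pass/fail, a different design is needed, which is where a statistician’s input is valuable.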

6.3.5 If the original acceptance criteria are shown to be unachievable, a good validation study design should be able to show what levels of precision, accuracy, and possibly the limit of detection can be achieved. If, following root-cause analysis, the differences between stated and actual performance parameters cannot be addressed, or are too costly to address (e.g. different instrumentation), the impact and associated risks of this on the originally stated end-user requirement can then be assessed.

6.3.6 Modifying the end-user requirement, specification and method to have a different operational range may be acceptable. However, there needs to be a clear separation between development and validation to ensure that the final version of a method is the subject of the validation study.
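A minimal sketch of how replicate results might be compared with acceptance criteria for accuracy and precision. It assumes replicate measurements of a reference material with a known value, expresses accuracy as percentage bias and precision as relative standard deviation (RSD); the data and the criteria (±5 % bias, at most 1 % RSD) are hypothetical.

```python
from statistics import mean, stdev


def assess_replicates(results, reference_value, max_abs_bias_pct, max_rsd_pct):
    """Compare replicate results on a reference material with acceptance criteria.

    Bias is the mean's deviation from the reference value, as a percentage of it;
    precision is the relative standard deviation (RSD) of the replicates.
    """
    average = mean(results)
    bias_pct = 100 * (average - reference_value) / reference_value
    rsd_pct = 100 * stdev(results) / average
    return {
        "bias_pct": round(bias_pct, 2),
        "rsd_pct": round(rsd_pct, 2),
        "accuracy_met": abs(bias_pct) <= max_abs_bias_pct,
        "precision_met": rsd_pct <= max_rsd_pct,
    }


# Hypothetical replicates on a reference material with a value of 10.0 units.
print(assess_replicates([9.8, 10.1, 10.0, 9.9, 10.2], 10.0, 5.0, 1.0))
# → {'bias_pct': 0.0, 'rsd_pct': 1.58, 'accuracy_met': True, 'precision_met': False}
```

Here the precision criterion is not met, but the study still shows the precision actually achievable (about 1.6 % RSD), which is the information needed to assess the impact on the end-user requirement.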

6.4 Reliability of external objective evidence

6.4.1 Assessing the relevance and completeness of objective evidence produced by others in collaborative or developmental validation studies should be relatively straightforward if the requirements laid out in the Codes for each of the steps of the validation process have been completed. Assessing the reliability and relevance of the work of others is something that is sometimes a little less clear.

Publications

6.4.2 In considering the scientific model/theory in terms of its validity and the limits of applicability, publications in respected scientific journals can usually be relied on. Whether a paper is relevant to how a method is going to be used is another question, discussed later. Overall, publication is a beneficial route to transparency, given the various degrees of peer review of the science and/or the method that it offers.

6.4.3 However, occasionally even peer-reviewed papers relevant to the method are later shown to be flawed, so an up-to-date literature search should be carried out to ensure that questioned science is not relied on further. For example, a number of key concepts regarding fire scene investigation are repeated in the literature and in textbooks even though their scientific underpinning is now known to be weak or flawed.

6.4.4 Regardless of the nature of the publication it would be inadvisable to rely on any publication by an author whose scientific reputation has been undermined.

6.4.5 Forensic units often have a significant amount of ‘in-house’ information relating to the techniques they employ. This information may be relied on in a validation exercise if it is relevant and robust. However, it needs to be added to the validation library, which may need to be disclosed. The Regulator is very clear that ‘secret science’ is not in the interests of transparent justice.

Relevance

6.4.6 It is not expected that most validation studies would be considered novel enough to be published in the main journals. Even if it was considered for publication there is typically a considerable delay between the completion of a validation study, acceptance for publication and actual publication. It is therefore not a requirement that a validation study is published, but it is certainly easier for the forensic unit wishing to rely on it if it is.

6.4.7 A paper being published with validation in the title is certainly a good start for the forensic unit’s literature review. However, certain studies that may have great relevance may not be described as validation; conversely, some studies may be so described but are limited to demonstrating a very narrow range of characteristics. Some disciplines, and therefore the related journals, use the related terms differently and this should be borne in mind.

6.4.8 One of the key tasks is to determine if the published work is relevant to the method that the forensic unit intends to implement, and fulfils all/some/any of the requirements that have been identified. The house style of the journal may mean that the published work is part or a summary of the validation study. Is it clear that the method is being implemented in exactly the same way as the forensic unit intends to implement it, and on the same target material? The methods sections in such papers are often very brief and the level of detail needed to determine this may not be present, but the author may be willing to share it.

6.4.9 Published work deemed relevant and reliable can be used to supplement the performance criteria derived from the end-user requirement/specification that need to be verified to show a method works in the forensic unit’s hands.

Science

6.4.10 There is an expectation that where a method implements any scientific model or theory (or provides for the interpretation or evaluation of the results of a model or theory) the specification should address the following matters.

a. The nature of the scientific model/theory, including any required evaluation/interpretation implemented in the method, process or software. This would include considering the following.

i. The validity of the model/theory.

ii. The validity of the application of the model/theory in the method.

iii. Any assumptions incorporated within the model/theory.

iv. The validity of the assumptions and any limits on the application of the assumptions.

v. Limits on the application of the model/theory.

vi. The robustness of the model/theory on the basis of the information supporting it.

b. In light of the consideration of the model/theory the specification should set out any limits to the use of the method or any additional procedures and/or safeguards that should be implemented.

6.4.11 The extent of this consideration may vary considerably. Methods that apply commonly accepted scientific theories/principles in an area where such application is relatively routine will require far less assessment than methods that apply a new scientific model/theory or apply an existing model/theory in a novel area. However, it is important that an assessment is performed; even with well-established techniques, it is necessary to consider any issues or limitations.

7. Validation process in the Codes

7.1 Overview

7.1.1 The framework published in the Codes is intended to ensure that completed validation studies contain comparable features irrespective of the approach taken in the study. An outline of this process is set out in Figure 1.

Figure 1: Framework published in the Codes

- Determination of the end-user requirements and specification
- Review the end-user requirements and specification
- Risk assessment of the method
- Set the acceptance criteria
- The validation plan
- The outcomes of the validation exercise
- Assessment of acceptance criteria compliance
- Validation report
- Statement of validation completion
- Implementation plan

7.1.2 Following this process and compiling the paperwork is required by the Codes, whether conducting a validation study or verifying that validation studies conducted elsewhere are applicable.

7.1.3 The process shown in Figure 1 is essentially linear. However, as the requirements are developed into specifications and risks are assessed, earlier stages may need to be revisited, because clarification may be required from those specifying the requirements/specification and/or finalising the paperwork. Any such iteration should take place before the validation experimentation has begun. There needs to be a clear separation between development and validation to ensure that the final version of a method is the subject of the validation study.

7.1.4 The approach taken in the validation study will vary depending on the nature of the method, the manner in which it is to be used, and the assessed risks to the CJS. Further, it may be affected by the nature of the forensic unit performing the validation and by external requirements imposed on it (e.g. legal requirements or those arising through accreditation).

7.1.5 The correct operation of a method is the sum of its parts, so modifying any aspect of a method may influence several seemingly unconnected performance parameters. Once a performance parameter has been characterised, modifying a method/instrument to fix underperformance in a characteristic tested later may nullify all the previous testing. Performance characteristics may be quantitative characteristics or qualitative features, such as error reporting or the operation of certain safeguards, e.g. write-blockers[footnote 10] in digital forensics.

7.1.6 To help ensure that the validation study refers to the final method, the final version of the method should be uniquely identified from the outset of the validation work. It might also be wise to apply this to any external methods, components or systems that feed into the method, and to ensure that proper configuration management applies.
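One way to give a method version a unique identification, and to notice if any part of it changes after characterisation has begun (see 7.1.5), is to fingerprint the controlled files that define it. The sketch below is a minimal illustration only, assuming the method is defined by a set of files such as an SOP and an instrument configuration; the function and file names are hypothetical, and a forensic unit's own document control or configuration management system may already provide an equivalent.

```python
import hashlib
from pathlib import Path


def method_fingerprint(files: dict[str, bytes]) -> str:
    """Return a SHA-256 digest identifying one exact version of a method.

    `files` maps a (hypothetical) controlled-file name to its content.
    Names are sorted so the digest does not depend on insertion order.
    """
    digest = hashlib.sha256()
    for name in sorted(files):
        data = files[name]
        encoded = name.encode("utf-8")
        # Length-prefix both fields so file boundaries are unambiguous.
        digest.update(len(encoded).to_bytes(8, "big"))
        digest.update(encoded)
        digest.update(len(data).to_bytes(8, "big"))
        digest.update(data)
    return digest.hexdigest()


def load_files(paths: list[Path]) -> dict[str, bytes]:
    """Read controlled files from disk, keyed by file name."""
    return {p.name: p.read_bytes() for p in paths}


if __name__ == "__main__":
    v1 = {"SOP-001.txt": b"Extract with 200 ul buffer", "instrument.cfg": b"temp=56"}
    v2 = {**v1, "instrument.cfg": b"temp=60"}  # one hypothetical parameter changed
    print(method_fingerprint(v1) == method_fingerprint(v2))  # False: a new version
```

Recording the fingerprint at the start of each characterisation, and checking it again at the end, gives simple evidence that the same version of the method was tested throughout.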

7.1.7 The Codes expect that validation is complete prior to the introduction into casework, and this guidance places casework piloting within the implementation stage. Where use of casework material is essential in the validation, the validation plan should have clear gateway(s) between the verification of a forensic unit’s competence to deliver the method and the stages of casework use (see section 8 on the use of casework).

7.2 The end-user requirements

7.2.1 Any method providing information to the CJS should meet the requirements of the end-users. This part of the process is intended to capture all the requirements; the emphasis is placed on the end-user to ensure that the requirements of the more immediate users are not the only ones captured. An officer might want a quick and inexpensive drug test, but for the purpose for which they want to use it, the Crown Prosecution Service might expect it to be type-approved by the Home Office. A method that allows greater access to data might be welcomed with open arms by the users of the data, but if the court expects that continuity can be demonstrated then this end-user requirement trumps ease of use. In considering these requirements it is important to note that the end-users are not only the person/organisation that may be directly provided with the output from the method but the CJS as a whole. This includes, but is not limited to:

a. The investigative agencies (e.g. the police); [footnote 11]

b. The prosecution authorities (e.g. the Crown Prosecution Service, Public Prosecution Service or Crown Office and Procurator Fiscal Service); [footnote 12]

c. The defence;

d. The judiciary (see section 4.1.5);

e. The reviewing authorities (e.g. the Criminal Cases Review Commission); and

f. The public (including those serving as jurors).

7.2.2 It is not envisaged that many validations will require direct consultation with the wide range of CJS stakeholders and end-users; indeed, consulting actual jurors is generally unlawful. However, considering what such groups might expect from the operation and output of the method will assist in ensuring that it is fit for purpose. If the intended users of the method who help to capture the requirements also give evidence in court, they might have a very clear idea of what the courts might expect, particularly if prompted by lists such as those provided in the Criminal Practice Directions. The Criminal Practice Directions provide a list of several factors relating to validation that the court may take into account in determining the reliability of evidence (outlined in section 4.1.5).

7.2.3 Expert witnesses are routinely expected to satisfy the courts and to understand their obligation to assist the court. As a starting point, therefore, it is worth considering the following.

a. What propositions would the expert witness be expected to be able to address when they take the stand to report the results?

b. What caveats would they have to give and what counter claims could a legal expert legitimately make, based on the extent of testing that has been conducted?

c. Have the appellate courts made observations or stipulations for the presentation of this type of evidence, and have these been thought through in the end-user requirement so that they can be tested if required?

7.2.4 The Regulator has published information on the legal obligations related to expert evidence in England and Wales that covers a number of these areas.

7.2.5 Major breakthroughs, novel uses of existing science, or significant changes might warrant wider stakeholder consultations. In these cases it would be useful to inform the Regulator, who may advise on the most expedient method of ensuring that the CJS requirements are understood.

7.2.6 In considering the end-user requirements, forensic units should give consideration to possible sources of obligations on witnesses in the CJS. These include, but are not limited to, the following.

a. Provisions in primary legislation.

b. Provisions in secondary legislation.

c. Practice directions or equivalents (e.g. the Criminal Procedure Rules and the Criminal Practice Directions).

d. Disclosure.

e. Judgments of the courts.

f. Requirements established by prosecution authorities.

7.2.7 It should be borne in mind that there are different legal systems in operation within the UK.[footnote 13] When developing and/or validating a method, it may therefore not be possible to produce one specification that sets out the end-user requirements for all jurisdictions. Where the specification is limited in territorial scope, for instance due to a different legal limit, this should be stated in the specification.[footnote 14]

7.2.8 The end-user requirements can be determined by consideration of the context in which a method is to be used, including the following.

a. The use to which the information generated by a method will be put.

b. Any legal or regulatory provisions that will apply to the operation of a method or to the use of the information it produces.

c. The persons within the CJS who will directly use the output; and

d. The manner in which the output will be provided to the CJS.

7.3 Specification

7.3.1 The end-user requirement should contain the requirement from the perspective of the CJS including, of course, the intermediate users of a method all the way through to the expectations of the court (e.g. relevant case law). The specification adds the detail of what the requirement actually means and ultimately what is to be tested, encapsulating what this method is to do, the configuration, and even what the method can and cannot be used for.

7.3.2 Although one could also be thinking about the acceptance criteria at this stage, it is important to check that the requirements have been translated correctly into the specification; many terms may be understood differently depending on the background and discipline of those involved.

7.3.3 Some everyday terms may have a more specific, and subtly different, meaning in particular contexts. For instance, the end-user requirement for a new test method may be that it must be as sensitive as the method it is likely to replace. In diagnostic testing, sensitivity can be defined as the proportion of known positive reference samples that are correctly identified by a screening test. In analytical testing, however, the term may instead refer to the limit of detection/quantitation. Both definitions may apply if the test method is capable of both screening and producing an analytical result.
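To make the diagnostic meaning concrete, sensitivity is the number of known positive reference samples that the screening test calls positive, divided by the total number of known positives. A minimal sketch follows; the counts are hypothetical, and it does not cover the analytical sense (limit of detection/quantitation), which needs a different experiment.

```python
def diagnostic_sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of known positive reference samples correctly identified.

    sensitivity = TP / (TP + FN)
    """
    known_positives = true_positives + false_negatives
    if known_positives == 0:
        raise ValueError("at least one known positive reference sample is required")
    return true_positives / known_positives


# Hypothetical example: 95 of 100 known positives detected by the screening test.
print(diagnostic_sensitivity(95, 5))  # 0.95
```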

7.3.4 The Codes put special emphasis on ensuring that the end-user requirement is captured in the specification, as this was seen to be an area that had been overlooked. Of course, the interim and technical users’ requirements should continue to be captured and included in the specification.

7.3.5 At this stage the list in ILAC-G19:08/2014 (3.10) should be considered, even if the points listed were not explicitly raised in the end-user requirement capture exercise (see Table 1).

Table 1: Example of issues to be determined in quantitative and qualitative methods

Scope
- Intended purpose and limitations

Sampling
- Sampling strategy

Sample preparation
- Sample homogeneity

Testing
- Accuracy
- Precision
- Measurement uncertainty
- Matrix effects
- Interference
- Limit of detection
- Limit of quantification
- Linearity range
- Stability of measured compounds
- Specificity and selectivity
- Repeatability
- Reproducibility
- Robustness

Interpretation
- Reproducibility
- Robustness
- Performance characteristics
- Hypothesis and/or scenarios
- Databases
- Statistical evaluation
- Limitations of conclusions
- Scientific literature

7.3.6 This consideration of end-user and other requirements should lead to a specification that may also include the following.

a. The information to be determined by a method (e.g. the analytical results) or the opinion to be provided.

b. The nature of the results to be determined:

i. the values to be determined (e.g. mass or concentration);

ii. the units to be employed (e.g. SI units or ones dictated by legislation such as mg per 100ml);

iii. the required accuracy of the results (e.g. against a reference sample);

iv. the required precision of the results (e.g. value ± 3 standard deviations or appropriate verbal scale).

c. Information that is required in the output:

i. case-related information (e.g. case number and exhibit number);

ii. relevant statutory declarations (e.g. the concentration exceeded a legal limit);

iii. operator information;

iv. instrument information (e.g. traceability to a specific piece of equipment and/or software version);

v. results;

vi. conclusions;

vii. caveats (e.g. if the test is a presumptive test only).

d. Uses for which the output should be suitable:

i. comparison with existing results;

ii. inclusion in, or comparison with, existing databases.

e. Information that must not be in the output (e.g. likelihood ratios in footwear cases).

f. The format of the output.

g. Methods for delivery of the output.

h. Security requirements (e.g. security of electronic transmission).
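Items b.iii and b.iv above can be made concrete with replicate measurements of a reference sample. The sketch below assumes that accuracy is expressed as bias from the reference value and precision as the sample standard deviation, reported as mean ± 3 standard deviations; the function name and data are hypothetical, and a real specification would also state the units and the number of replicates.

```python
from statistics import mean, stdev


def summarise_replicates(values: list[float], reference: float) -> dict[str, float]:
    """Summarise replicate results against a reference value.

    bias:        mean minus the reference value (accuracy)
    sd:          sample standard deviation (precision)
    lower/upper: mean minus/plus 3 standard deviations
    """
    if len(values) < 2:
        raise ValueError("at least two replicates are needed to estimate a standard deviation")
    m = mean(values)
    s = stdev(values)
    return {"mean": m, "bias": m - reference, "sd": s, "lower": m - 3 * s, "upper": m + 3 * s}


# Hypothetical replicates (e.g. mg per 100ml) of a reference sample assigned 79.0.
print(summarise_replicates([80.0, 82.0, 78.0, 80.0], reference=79.0))
```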

7.4 Risk assessment

7.4.1 The risk assessment element of this framework is an important method of ensuring that the validation study is scaled appropriately to the needs of the end-user, which for the most part is assumed to be the CJS. Adherence to general legal obligations (e.g. health and safety and environmental protection laws) is taken as a given requirement but is not seen as part of a scientific study to validate a method.

7.4.2 The risk assessment process during validation is not about managing out and/or mitigating all the risks inherent in a method, as the method development stage should have largely dealt with this. It is about understanding the risks to ensure that the validation study correctly assesses whether the risk mitigation put in place works. There may be risks that cannot be managed out of the analytical stage, but many of these can be dealt with by the more human aspects of secondary checks, use of controls or even through the expert’s professional judgement as required in the validation of interpretive methods.

7.4.3 Risk assessment in the CJS often concentrates on the risk of a wrongful conviction, but a more complete list includes the following.

a. The risk of wrongful conviction(s).

b. The risk of wrongful acquittal(s).

c. The risk of obstructing or delaying investigation(s). [footnote 15]

7.4.4 The risk assessment should inform the forensic unit of the following.

a. The possible impact on the CJS of any errors in the method, associated materials or procedures, on the basis of the use to which the information generated by the method may be put.

b. Areas where, although the output is accurate/correct or the method operates in the expected manner, the output is ambiguous or may be misleading – especially to a non-scientist or person who does not understand the full operation of the method.

7.4.5 The assessment should address the possible impact of the risks. In performing this assessment, it will be relevant to consider the significance that may be assigned to the output of the method or the information derived from it, and the resultant impact that any error may have:

a. Where the evidential weight is likely to be high (e.g. DNA evidence) the impact of the risk is likely to be significant;

b. Where the evidential weight is likely to be low (e.g. glass evidence) the impact of the risk is likely to be relatively low.

7.4.6 The approach to risk assessment will vary depending on what the method is and how it interacts with the CJS. Issues that it may be appropriate to consider include the following.

a. Information accuracy;

i. Are there circumstances where the output from the method, or the information derived from it, could be inaccurate?

ii. Is the output from the method, or information derived from it, misleading or could it be misinterpreted?

iii. Is the output from the method, or information derived from it, such that it cannot be easily, or reliably, understood by persons without specialist knowledge or experience?

b. Information context;

i. Are there circumstances where the output, or information derived from it, could be misleading if not properly addressed in the context of the case?

c. No information;

i. Are there circumstances in which the method will find no information when the information is there?

ii. Where the method produces no result (e.g. no DNA profile) is the meaning of that properly understood and communicated to the CJS?

d. Delayed information;

i. Are there circumstances where the provision to the CJS of the information derived from the method could be delayed as a result of the operation of the method, e.g. because it requires confirmation before it is actionable?

ii. If there are such circumstances, what are the possible impacts and can any adverse impacts be avoided/minimised?

7.4.7 As part of the risk assessment there may be recommendations for modifying the specification, or for additional procedures and/or safeguards that should be implemented and carried through to the statement of validation. At this stage the validation experimentation has not begun, and it is important to ensure that the validation is based on the final method. Although the validation process is considered largely linear, this is a permitted loop back to earlier stages. The modifications to be referred back to those who proposed, set or signed off the requirements and specification may include, but need not be limited to, the following.

a. Restrictions on the circumstances in which the method will be used. These may include the:

i. Circumstances in which it will not be used;

ii. Circumstances in which it will not be used without some additional work;

iii. Input types on which it will not be used;

iv. Input material ranges for which it will not be used;

v. Output types, or results, which will not be provided to the CJS.

b. Restrictions on the members of staff who can employ the method.

c. Warnings/caveats to be applied to the information provided to the CJS.

7.4.8 If the method can be augmented in this way, then a new version of the specification and/or risk assessment should be produced and numbered, and this version of the method clearly identified. However, if the proposed limitations, adjustments or caveats undermine the business case for the method, the validation study may end here. If the study has to close at this stage, the forensic unit should learn lessons from the work done and, if the obstacle was technology maturity or something else that might change, it may wish to put in place a way of horizon scanning for future opportunities to revisit the project.

Assessment methods

7.4.9 The risk analysis is intended to assist in identifying the critical aspects of the method that need to be tested. Method development should have included risk analysis and mitigation and the validation is to ensure that the method does indeed manage these risks. During method development a failure modes and effects analysis (FMEA) is an effective way of analysing the consequences of technical specification failure, as is the Japanese method of mistake proofing known as Poka-Yoke.

7.4.10 A method is more than the analytical test or instrument; it includes the human element in the procedure, which may include any error-trapping, e.g. peer review, second checks. A good validation study and acceptance criteria would cover the management of the risks identified.

7.4.11 Most risk assessment methodologies have been developed for specific purposes and have terminology, and associated guidance, tailored to that purpose. For instance, methodologies used in project management guide the assessor toward options such as accepting or transferring the risk, which are not appropriate prompts for risks to the CJS.

7.4.12 There is a considerable amount of published literature on the various risk assessment methodologies. However, FMEA is used here as one example of how to get the most out of a risk assessment method in the context of method validation.

7.4.13 There are two main types of FMEA: design FMEA, for product or method development, and process FMEA, which is what is discussed here. Process FMEA is one of a number of similar methods best used with process mapping; it looks at each step in the method under assessment and prompts the assessor to describe what could go wrong, how this failure would affect the function of that step, the root cause(s) of or reasons for the failure, and what controls are currently in place to catch, detect or prevent it. Table 2 is a typical standard process FMEA template.

Table 2: Failure mode and effects analysis (FMEA) example template

- Process/step/input
- Potential failure mode
- Potential failure effects
- Severity (Low 1-5 High)
- Potential failure causes
- Occurrence (Rare 1-5 Often)
- Current controls: prevent/detect (short verbal description and cross-reference to control in SOP)
- Detection (Detect 1-5 Miss)
- Risk profile number (Sev × Occ × Det)
- Actions (for validation study and/or reference to assurance method)

7.4.14 The benefit of a structured approach, over the abstract top-level idea of the risk being ‘a miscarriage of justice’ for the method as a whole, is that it prompts tangible, and therefore testable, risks to be captured. The structured approach is intended to assist completeness, but also to help ensure that what matters is tested; it can help to avoid embarking on large amounts of testing that add little to the assurance of the method.

7.4.15 Identifying risks does not mean they will occur; each step in the process may usually succeed, but there may also be multiple possible failure modes at each step. Take a simple sample recovery step. Such a step might have various potential contamination failure modes, primarily of the type that could impact the ability to obtain a usable result and therefore to achieve justice for a victim. There will be controls, and those will be tested, but the purpose of this step is to think the failure modes through so that the testing addresses them.

7.4.16 Each of these failure modes might have multiple failure effects and possible causes. If this is the case, another method may also be needed to help tease out the issues. Cause-and-effect analysis using an Ishikawa diagram, more commonly called a fishbone diagram, might be appropriate; the idea is simply to guide those capturing the risks not to settle for a single effect or cause for each failure mode. Never forget that the users and trainers of similar methods will have a wealth of ideas, but so might the scientific literature, previous error investigations or even the Regulator’s lessons learnt documents (e.g. Lessons learnt, issue 01/2019).

7.4.17 The next stage is to capture the severity of the ‘failure’, the likelihood of it happening and the likelihood of it being detected before it can occur, pass to the next stage or become unrecoverable.

7.4.18 The scores in FMEA are multiplied together. Typically ‘Severity’ and ‘Occurrence’ are rated 1 to 10, with 10 being the highest rating; the example in Table 2 uses the shorter range of 1 to 5, which is probably adequate for most purposes unless the scoring definitions allow the assessor to differentiate easily across a wider range. However, so that a lack of detection contributes to a higher final risk priority number, ‘Detection’ is scored inversely: the higher figure means the failure is unlikely to be detected before the damage is unrecoverable.
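The arithmetic in 7.4.18 can be sketched as follows, assuming the 1 to 5 scales of Table 2 with ‘Detection’ scored inversely (5 means the failure is likely to be missed). The risk profile number is Severity × Occurrence × Detection, so it ranges from 1 to 125. The class name, field names and example rows are hypothetical; the point is only that ranking by this product shows where validation effort is most needed, not that any particular threshold is prescribed.

```python
from dataclasses import dataclass


@dataclass
class FmeaRow:
    step: str
    failure_mode: str
    severity: int    # 1 (low) to 5 (high)
    occurrence: int  # 1 (rare) to 5 (often)
    detection: int   # 1 (likely detected) to 5 (likely missed): inverse scale

    def risk_number(self) -> int:
        """Severity x Occurrence x Detection, as in the Table 2 template."""
        for name in ("severity", "occurrence", "detection"):
            score = getattr(self, name)
            if not 1 <= score <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {score}")
        return self.severity * self.occurrence * self.detection


def rank(rows: list[FmeaRow]) -> list[tuple[int, FmeaRow]]:
    """Return rows paired with their risk numbers, highest first."""
    return sorted(((r.risk_number(), r) for r in rows), key=lambda pair: pair[0], reverse=True)


rows = [
    FmeaRow("Reagent dispensing", "Wrong volume dispensed", 3, 3, 2),
    FmeaRow("Sample recovery", "Contamination from reused tool", 5, 2, 4),
]
for number, row in rank(rows):
    print(number, row.step, "-", row.failure_mode)
# 40 Sample recovery - Contamination from reused tool
# 18 Reagent dispensing - Wrong volume dispensed
```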

7.4.19 It is important to understand the nature of the testing; for instance, in a destructive test, detecting a failure only after the sample has been consumed should not be scored as though it lowers the risk priority. It is good practice to produce a scoring matrix in any risk assessment process that ranks risk, and to include the unrecoverable issue in the definitions, with examples of what this might mean in that discipline (e.g. contamination of a sample).

7.4.20 The assessment of ‘Severity’ should assume the absence of any controls that would capture the failure. The scoring matrix should guide the assessor to understand the nature of the failure mode. The worst-case scenario might be a miscarriage of justice, but that might be an abstract concept when assessing the impact of dispensing a reagent or packaging an item. Although harder to estimate, a sub-optimal or false negative result may contribute to a failure to achieve justice for a victim or a failure to correctly identify exculpatory evidence. All of these may contribute to a miscarriage of justice; they are simply more subtle failure modes than a false positive. The scoring matrix should be designed to cover the types of failure that process steps might have in the given discipline.

7.4.21 There should be a cross-reference to the specific control in the written procedure of the method being validated, with a sufficiently meaningful description that those familiar with the type of technique, but less familiar with the specific method, can follow it. Including both facilitates checking and increases objectivity, since a control without a cross-reference is potentially an abstract concept rather than a tangible control.

7.4.22 Various templates for design FMEA prompt a reassessment of the severity, probability and detection scores after the ‘Action’, which the forensic unit may find useful. However, as the purpose of process FMEA in method validation is not to amend the method, this may be less relevant and the extra effort at this stage may not be warranted. What is important to capture is the ‘Action’ for the validation study.

7.4.23 The ‘Action’ in the validation context is whether to test for the issue and/or the efficacy of the control as part of the validation study, or whether a different assurance approach has been identified (which can be specifically cross-referenced). If the FMEA template is being reused from the design phase (i.e. from method development), it might already have an action column used for process improvement; in that case, add a column for ‘Assurance method test/ref’ here.

7.4.24 Cross-referencing to the procedure and identifying which controls are to be examined during the validation provides the rationale for, and documentation to support, that decision; this is clearly useful for troubleshooters, auditors and external assessors. The example given here is for a process FMEA; however, much of what is discussed would be good practice in any risk assessment process.

7.5 Review and setting the acceptance criteria

7.5.1 The specification should be reviewed to ensure that all the recommendations made following the risk assessment are addressed. This process should lead to an agreed specification, against which the validation is performed using specific and measurable, testable or observable acceptance criteria. This can clearly involve a loop back to the setter of the requirements or the sponsor; the intent is to reach an agreed specification, but if agreement cannot be reached then the validation study might be at an end, and alternative methods might need to be sought or method development might be required.

7.5.2 Unlike the initial end-user requirement, the specification and related acceptance criteria are expected to be technical in nature with the stated quantitative and qualitative requirements. Some of the features may be mandatory, with certain performance criteria, whereas others may be only desirable.
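The distinction between mandatory and desirable features can be carried through to the acceptance check. The sketch below is a minimal illustration, assuming each criterion can be expressed as a pass/fail test on a single measured value; the class, function, criterion names and thresholds are hypothetical examples, not recommended values. A missing result raises KeyError rather than being silently treated as a pass.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    name: str
    mandatory: bool                  # True: must pass; False: desirable only
    passes: Callable[[float], bool]  # a specific, measurable test on one result


def assess(criteria: list[Criterion], results: dict[str, float]) -> tuple[bool, dict[str, bool]]:
    """Return (all mandatory criteria met, outcome for every criterion).

    A criterion with no recorded result raises KeyError, so nothing is skipped.
    """
    outcomes = {c.name: c.passes(results[c.name]) for c in criteria}
    accepted = all(outcomes[c.name] for c in criteria if c.mandatory)
    return accepted, outcomes


criteria = [
    Criterion("sensitivity", True, lambda v: v >= 0.95),
    Criterion("turnaround_hours", False, lambda v: v <= 24),
]
accepted, outcomes = assess(criteria, {"sensitivity": 0.97, "turnaround_hours": 30})
print(accepted)  # True
print(outcomes)  # {'sensitivity': True, 'turnaround_hours': False}
```

Reporting the desirable outcomes alongside the overall decision keeps the record of what was achieved, even where a desirable feature was not met.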

7.5.3 This is the stage at which what is to be tested from the specification is defined: analytical and functional requirements are identified for testing, and the means of assessing non-functional requirements is decided. If a requirement has been translated into the specification without being fully defined, and reads ‘must be as sensitive as the system it replaces’, then it needs to be properly defined here. Looping back to check with the originator of the user requirement is sound, but note that this person might not always have the technical knowledge to define the specification clearly.

7.5.4 The experimental design needs to be properly thought through to ensure that the results used for the acceptance testing are statistically significant, and therefore valid. If a specific error rate is specified in one or more of the acceptance criteria, then the minimum number of samples needed to demonstrate it needs to be clearly defined. If several replicate measurements are required to obtain a statistically significant result against the acceptance criteria, these need to be planned for.
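One common way to size such an experiment, offered as an illustration rather than a requirement of the Codes, is the zero-failure (success-run) calculation. If n independent samples all give correct results, the error rate p can be claimed to be below the target at one-sided confidence C when (1 - p)^n <= 1 - C, so n = ln(1 - C) / ln(1 - p), rounded up. This assumes a binomial model, independent and representative samples, and no observed errors; if errors are observed, more samples are needed. At 95% confidence the result is close to the familiar ‘rule of three’ approximation, n ≈ 3/p. The function name is hypothetical.

```python
import math


def min_samples_zero_errors(max_error_rate: float, confidence: float = 0.95) -> int:
    """Smallest n such that n error-free results show, at the given one-sided
    confidence, that the true error rate is below max_error_rate.

    Solves (1 - p)^n <= 1 - C for n (binomial model, zero observed errors).
    """
    if not 0 < max_error_rate < 1 or not 0 < confidence < 1:
        raise ValueError("error rate and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(1 - max_error_rate))


print(min_samples_zero_errors(0.05))  # 59
print(min_samples_zero_errors(0.01))  # 299
```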

7.6 Validation plan

7.6.1 There should be a validation plan, based on the agreed specification and risk assessment, which sets out the programme of tests to be performed and the acceptable results that should be achieved. The plan should ensure that all of the following requirements are met by the validation exercise.

a. The validation plan should test the proper operation of the method over the range for which the forensic unit considers it appropriate to employ the method.

b. For mandatory requirements, for all acceptable permutations of input material and method operations, the validation plan should establish that:

i. the method performs as set out in the specification; and

ii. the output complies with the specification.

c. For mandatory requirements, where the order in which work is undertaken may have an impact on the operation of the method then, for each order in which the work may be undertaken, the plan should establish that:

i. the method performs as set out in the specification; and

ii. the output complies with the specification.

d. For desirable requirements, for all acceptable permutations of input material and method operations, the plan should establish whether:

i. the method performs as set out in the specification; and

ii. the output complies with the specification.

e. The plan should establish that all safeguards in the method operate as expected.

f. It should show that all safeguards established in relation to the use of the method (but not contained within the method) operate as expected.

g. It should also show that all risks identified in the risk assessment have been considered and addressed.

7.6.2 It is important to note that the robustness of a validation exercise is determined by the proper consideration of the permutation of factors that may impact on the operation of a method. The use of a large number of samples without proper consideration of permutations is not likely to provide robust validation. It may in fact give a false sense of completeness.
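A simple way to make the permutations explicit, rather than relying on sample numbers, is to enumerate them. The sketch below uses hypothetical factors, levels and examination steps: `itertools.product` gives every combination of input factors (7.6.1 b and d), and `itertools.permutations` gives every order in which work may be undertaken (7.6.1 c). In practice the specification may exclude some combinations, and any reduced subset used when full enumeration is impractical would need to be justified.

```python
from itertools import permutations, product

# Hypothetical factors that the specification says may affect the method.
factors = {
    "matrix": ["blood", "saliva"],
    "amount": ["low", "mid", "high"],
    "instrument": ["A", "B"],
}

# Every combination of factor levels (7.6.1 b and d).
conditions = [dict(zip(factors, levels)) for levels in product(*factors.values())]

# Every order in which hypothetical examination steps may be undertaken (7.6.1 c).
steps = ["photograph", "tape_lift", "swab"]
orders = list(permutations(steps))

print(len(conditions))  # 12
print(len(orders))      # 6
print(conditions[0])    # {'matrix': 'blood', 'amount': 'low', 'instrument': 'A'}
```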

7.6.3 Where a method is a component of a larger system or service (e.g. analytical equipment) the validation plan for the method may be incorporated in the plan for the system/service. In this case it still should address the issues raised in the Codes and this guidance.

7.6.4 The validation plan can take account of validation undertaken by other reputable organisations or scientists where the results of that work are:

a. Appropriate and relevant, e.g. the method, matrices and processes are comparable with those used by the laboratory; and

b. In the public domain.

7.6.5 The use of such work does not eliminate the need to demonstrate that a method works correctly when employed by the forensic unit at the given location. This would normally include demonstrating the precision, accuracy and probably the limit of detection that the forensic unit can achieve.
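As an illustration of one part of that local verification, the sketch below estimates a limit of detection from replicate blank measurements using one common convention: the mean of the blanks plus three standard deviations of the blanks. The convention, the function name and the blank values are assumptions for illustration; other definitions are in use, and the specification should state which one applies.

```python
from statistics import mean, stdev


def limit_of_detection(blank_signals: list[float], k: float = 3.0) -> float:
    """Estimate LOD as mean(blank) + k * sd(blank); k = 3 is an assumed convention."""
    if len(blank_signals) < 2:
        raise ValueError("at least two blank measurements are required")
    return mean(blank_signals) + k * stdev(blank_signals)


# Hypothetical blank signals from the forensic unit's own instrument.
print(round(limit_of_detection([1.0, 1.2, 0.8, 1.0]), 3))
```

The result is in the same units as the blank signals; converting it to a concentration would need the method's calibration.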

7.6.6 The quality of information provided to the CJS is not solely dependent on a method. It may also be affected by other materials associated with the use of a method. These include, but are not limited to, the following.

a. The instruction manuals/media for a method.

b. The training material for users of a method.

c. The quality management procedures implemented in relation to the use of a method.

d. The information provided to those within the CJS.

7.6.7 Either within the validation exercise, or as a separate exercise, there should be a consideration of whether the associated materials that have been generated are of sufficient scope and quality to ensure that the information provided to the CJS is fit for purpose.

7.6.8 Either within the validation exercise or as a separate exercise, there should be a consideration of all legal issues related to the development, testing and implementation of a method. The aim is to determine whether there are any issues that may be used to undermine the use of the method within the CJS.

7.6.9 In particular, consideration should be given to the following.

a. The appropriate use of personal information. [footnote 16]

b. Observance of human rights. [footnote 17]

c. The appropriate handling of human tissue. [footnote 18]

d. Restrictions on DNA profiling. [footnote 19]

e. The legal rights and obligations related to casework material (see section 6 on casework material). [footnote 20]

7.6.10 This assessment should consider not only the method in isolation but also its use with any product/service of which it forms a part.

7.7 Validation exercise

7.7.1 This guidance is provided to assist in developing the validation study. A set of examples is provided in section 5.3, and several of these parameters are discussed here.

7.7.2 A robustness/ruggedness assessment may be required to show that a method operates in an acceptable manner throughout the range of conditions in which the forensic unit states that it should be used. This is certainly required if the method is intended for crime scene work, but it is also a sensible step for all laboratory methods as it assists troubleshooting.

7.7.3 The validation study should not attempt to compensate for areas of operation that have less-developed methods of demonstrating effectiveness (such as those dealing with qualitative output) by merely producing extra data on the aspects that are well understood. Where professional judgement and technical ability are required, assessing the competence of individuals, together with participation in collaborative and proficiency trials, may be a relevant approach.

7.7.4 As discussed in 5.1.4, validation should be of the final method rather than an extension to method development.

7.7.5 The validation exercise should be performed to standards that ensure that the results are of a sufficient quality to establish the reliability of a method, if necessary, in court.

7.8 Assessment of acceptance criteria

7.8.1 The assessment of acceptance criteria is intended to inform whether a method should be implemented in its existing form, modified with additional procedures and/or safeguards and revalidated, or even not implemented at all.

7.8.2 If criteria that were considered mandatory prove to be unachievable, this assessment offers the opportunity to review those criteria (or their rating) with their proponent, or with the customer, to decide what the next steps should be before finalising and writing up the report. As with all records, a record of this review should be maintained for traceability.

7.8.3 If a method needs to be modified with additional procedures and/or safeguards, the effectiveness of these may also need validating. Where this is the case, the following steps would be wise.

a. A new specification should be prepared. This should be subject to version control.

b. A new risk assessment should be undertaken and a report prepared. Again, this should be subject to version control.

c. There should be an assessment of whether, in light of the new specification and risk assessment:

i. the validation exercise that has been performed to date is sufficient;

ii. additional work is required; or

iii. the validation study needs to be started afresh.

d. The results of this work should be set out in the validation report.

7.9 Validation report

7.9.1 The completed report would be expected to supply the following information.

a. Identify the organisations or persons who produced and/or were involved in producing and/or authorising the:

i. end-user requirement; [footnote 21]

ii. specification;

iii. risk assessment; and

iv. validation exercise.

b. Identify, where relevant, the work of other organisations that are relied on within the validation.

c. Identify the method that is the subject of the validation exercise. The version of the method should also be identified.

d. State the date of the report.

e. Identify the specification against which the validation plan has been developed and the validation exercise performed. This may be included as an annex.

f. Identify the risk assessment against which the validation plan has been developed and the validation exercise performed. This may be included as an annex.

g. Identify the validation plan against which the validation exercise was performed. This may be incorporated in the report either as an annex or within the relevant sections of the report.

h. Provide the results of the validation exercise. This should include a full description of the following.

i. The investigations/experiments performed.

ii. The results of the investigations/experiments. [footnote 22]

iii. Whether the method operated as expected for each requirement set out in the specification.

iv. If the method has not operated as expected there should be an analysis of whether that failure (either individually or in conjunction with any other failures) is sufficient to prevent the method being employed in the CJS or whether (a) the output is still fit for purpose without any further intervention or (b) the output is still fit for purpose if additional procedures/safeguards are implemented.

i. Make recommendations as to what steps need to be taken to determine that the method is operating as expected when implemented. These recommendations should be sufficient to ensure the proper operation of the method at each site at which it is implemented, and for all staff using the method.

j. State the extent to which the method meets the specification and the extent to which the issues raised in the risk assessment have been addressed appropriately.

k. Make a recommendation as to whether the method should:

i. be implemented in the existing form;

ii. be implemented in the existing form with additional procedures and/or safeguards (see also Section 7.8.3);

iii. be implemented in a modified form (see also Section 7.8.3); or

iv. not be implemented.

7.10 Validation library

7.10.1 The forensic unit should have available a ‘library’ of documents relevant to the validation of a method. The Codes require this library to include the following, although its content need not be limited to these items.

a. The approved specification for the method.

b. Any associated supporting material, such as academic papers or technical reports that were used to support or provide evidence on the applicability of the method. [footnote 23]

c. The approved risk assessment for the method.

d. The approved validation plan for the method.

e. The validation report.

f. The record of approval, so that approval can be demonstrated and the application of direction 19A.5d of the Criminal Practice Directions V can be supported.

g. The statement of validation completion.

7.10.2 Where the validation relies on material published by others, the forensic unit should keep a copy as part of the library to ensure that the information remains readily accessible. This is even more important if the source of the material is not permanent, e.g. material published on the internet (beware of dead hyperlinks).

7.10.3 Where the method implements a scientific model and/or theory, or an interpretation or evaluation model, the library should include a record of information supporting the use of the model/theory. The amount of material required will depend on the novelty and application of the technique.

7.10.4 The validation library should be maintained by the forensic unit to cover the period for which:

a. The method is in use; and

b. Legal challenges to the output from the method may arise.

7.10.5 The production of a validation library may initially be viewed as a burden. However, attempting to collate this information in response to a legal challenge, perhaps many years after the introduction of the method, is likely to be far more difficult than collating the material reviewed at the time of validation. This is likely to be particularly true if the staff involved in the initial validation have left the organisation.

7.10.6 Methods that are still in use are expected to be reviewed periodically and/or as part of the internal audit programme. It is therefore expected that the literature review is included in each review of the method and updated if required. The purpose of the update is to ensure that any emerging scientific critique of the method, any issue with the underlying principles and any relevant appellate court judgements have been considered. If the forensic unit becomes aware of such critique at any time, whether or not the method is still in use, it should consider the potential impact on current or past casework.

7.11 Statement of validation completion

7.11.1 The aim of this statement is to provide those making decisions on the use of the results with an executive summary of the validation and key issues regarding a method. It is intended to be a short (i.e. two sides of A4) summary that includes the following.

a. That the method has been approved, by whom, and the nature of that approval.

b. The scope of the validation performed.

c. Any reservations or restrictions on the use of the method.

d. Any caveats about the use of the method or the information derived from it.

e. Whether the method is within the scope, or being brought within the scope, of any accreditation held by the forensic unit.

f. Key applications for the method.

g. The circumstances in which the use of the method is inadvisable.

7.11.2 The Criminal Practice Directions provide several factors relating to validation that the court may take into account in determining the reliability of evidence, as outlined in section 4.1.5. The statement of validation completion is intended to provide a generic summary of the method, but there is no reason why it could not address these factors, with any case-specific exceptions noted. The Regulator will monitor how the appellate courts use these Directions, and modify the guidance and/or the Codes accordingly.

7.11.3 The manager responsible for validation should ensure that the following have been established.

a. The validation exercise has shown that the information provided to the CJS is fit for purpose.

b. All recommendations in the validation report have been addressed or will be addressed prior to the implementation of the method.

c. There are no risks to the CJS that have not been properly addressed.

d. The associated material is sufficient to ensure that the information provided to the CJS is fit for purpose.

e. There are no legal issues that would:

i. prevent, or undermine, the use of the method, or its output, as evidence; or

ii. mean that the use of the method, or output from it, would pose an unacceptable risk to the CJS.

f. There is no information available that would raise significant doubts about whether the information generated/modified by the method is fit for purpose.

7.11.4 The forensic unit should ensure that where approval is given it specifies the circumstances in which the method may be used, any limits/conditions related to the use, and information that must be supplied to the CJS in relation to the method.

7.11.5 The date, name, signature and role of the person authorising the use of the technique in casework should also be included.

7.11.6 Since the statement of validation completion became a requirement, the Crown Prosecution Service has added a further requirement for a Q&A-style document setting out the strengths and weaknesses of the scientific procedures offered. As noted in section 7.11.2, the Criminal Practice Directions outline questions that might arise should admissibility be challenged, so forensic units would be wise to consider these requirements together. It would be sensible to produce all of these documents at this stage, although it is accepted that, if the method is not going to be adopted immediately, this could fall under the implementation stage.

7.12 Implementation plan

7.12.1 The introduction of a new validated method may require an incremental approach that sees it deployed in a number of increasingly demanding roles. These may include, but need not be limited to, the following.

a. Use in a controlled (e.g. laboratory) environment on test data/information.

b. Use in a controlled environment on casework data/information.

c. Use in a pilot exercise or other controlled use on casework data/information.

d. Inclusion on the schedule of accreditation.

e. Use in casework.

7.12.2 The implementation of a new method is often part of a process of improving the service provided to customers. Equally, the validation may have been triggered by a method modification made as a result of a corrective action. The Codes state that there should be some assessment of the impact that implementing a new method (or methods) could have on past cases.

7.12.3 The validation exercise is likely to have been undertaken in a controlled environment and, perhaps, by staff with particular skill in the area. When deployed in routine use, a method is likely to be used in a less controlled environment. The Codes list a number of issues that might affect the approval for implementation of a new or modified method.