Large-scale computing: the case for greater UK coordination (HTML version)

Published 20 September 2021

A review of the UK’s large-scale computing ecosystem and the interdependency of hardware, software and skills. This report provides evidence to support the establishment of a coordination function and sets out the key policy areas to consider.

- Key concepts in large-scale computing

- Users and uses of large-scale computing

- The global stage, data and hardware

- UK infrastructure, software and skills

- Achieving the UK’s potential in large-scale computing

Key Facts

Top 500

The United Kingdom currently has 12 systems in the Top500 lists of global high-performance computing systems. The 12 systems represent 2.4% of global installations and 1.4% of total performance capacity.

Cloud computing

In 2018, 74% of high-performance computing sites were found to run some of their workloads in the cloud.

New use cases

New uses of large-scale computing include smart cities, emergency preparedness and resilience, energy networks, public health and digital twins.

The UK’s highest-ranking machine

The Met Office supercomputer is the UK’s highest-ranking machine at #37.

Skills in large-scale computing

The UK has the largest software industry in Europe. The UK software industry contributed £70.3 billion in direct value-added GDP in 2016 and directly employs nearly 700,000 workers.

Glossary of Terms

See Appendix A for full glossary of terms.

| Term | Meaning |

|---|---|

| Access models | A method by which users can access the capability of a computing system (for example, cloud computing) |

| Algorithms | A set of instructions that, when given to a computer, are used to solve problems |

| Artificial intelligence | A broad area of computer science concerned with the use of computer systems to carry out functions analogous to human cognition |

| Cloud computing | A rapidly emerging access model where computing infrastructure is accessed on-demand via the internet |

| Exascale | A high-performance computing system that is capable of at least one Exaflop per second (a system that can perform more than 10^18 floating point operations per second) |

| Large-scale computing | Computer systems where processing power, memory, data storage and networks are assembled at scale to tackle computational tasks beyond the capabilities of everyday computers. Often involves the widespread use of parallelisation (see Appendix A). An umbrella term encompassing terms such as high-performance computing, high-throughput computing, supercomputing and novel computing paradigms |

| Petascale | A high-performance computing system that is capable of at least one Petaflop per second (a system that can perform more than 10^15 floating point operations per second) |

| Quantum computing | An experimental form of computing which utilises the probabilistic qualities of quantum mechanics. It has the potential to massively accelerate certain computational tasks (also see Appendix C) |

Preface

Large-scale computing (LSC) has revolutionised our lives in fields of national importance such as weather and climate modelling, and financial services. It is an important enabler of R&D as demonstrated by DeepMind’s remarkable recent breakthrough on protein folding. Computing power also underpins key technologies such as Machine Learning and Digital Twins.

The pace of innovation in LSC is accelerating rapidly, as illustrated by the fact that the highest performing system today is 175,000 times more powerful than the largest system 20 years ago. This brings with it new opportunities and emerging applications in areas such as fusion energy, energy networks, digital twinning, and public health. With new opportunities come challenges that the UK must address if it is to realise the true potential of LSC and the Prime Minister’s ambitions for the UK as a Science Superpower.

This report describes the LSC landscape in the UK and sets out the building blocks required to create a world-class computing ecosystem. While quantum computing is likely to be of critical importance, we are some years from commercialisation and therefore it is not covered in much detail. The core of this report is based around seven challenge areas and options to overcome each: the need for better national coordination; nurturing a healthy UK ecosystem; ensuring we have the right mix of hardware, skills, and software; minimising energy consumption; and the UK supply chain.

LSC forms part of the UK’s national infrastructure and many of the key opportunities and challenges span multiple sectors. The Government therefore has a significant role to play in nurturing and supporting the UK ecosystem, both as a consumer and a funder. Our primary recommendation is for the establishment of a team within Government to take policy responsibility for large-scale computing and address the challenges that are identified in the report. Industry, as a major user and innovator of large-scale computing, will be part of the solution and will need strong ties to the coordination team within Government.

This report has been prepared through interviews with officials, experts and industry stakeholders from across the ecosystem under the direction of a steering group comprising departmental Chief Scientific Advisers. I would like to thank them all for their invaluable contributions.

Sir Patrick Vallance

Government Chief Scientific Adviser

Executive summary

There is a strong case for continued public investment in large-scale computing. However, this needs to be coupled with improved long-term strategic planning, driven by close collaboration between Government, academia, and industry.

Large-scale computing is an essential tool for solving industrial and scientific problems. It is used to analyse highly complex or data-intensive problems involving simulation, optimisation, data processing and artificial intelligence (AI). Large-scale computing supports a range of R&D-intensive sectors and is used as a research tool at many of the frontiers of science. Several large-scale computing systems, such as those used for weather forecasting, form part of the UK’s critical national infrastructure. Computing needs vary substantially across sectors, with users having different requirements in terms of both capability and support requirements:

- in the public sector, service reliability is a key consideration, alongside cybersecurity and the physical location of infrastructure

- academia requires access to systems of a range of sizes and architectures owing to the diversity of programs run

- industrial users of large-scale computing use a wide range of access models. Private sector use of public systems often includes a requirement to publish results, and cybersecurity is a key concern

- small and medium enterprises (SMEs) often do not have an awareness of the range of business problems that large-scale computing can solve or the technical requirements to use it. In addition, SMEs often require support in adapting their software to run on large systems

The UK is a global leader in a number of computing domains, including in areas of software development, computational modelling, data analytics, cybersecurity, AI and machine learning. [footnote 1], [footnote 2] However, the UK’s large-scale computing infrastructure lags behind other major global economies and would benefit from a refreshed, longer-term focus that takes into account interdependencies such as skills and software. As of November 2020, China and the US had 214 and 113 of the top 500 computer systems globally, and France and Germany had 18 and 17. In contrast, the UK had 12. [footnote 3] The UK’s share of global high-performance computing capacity has decreased by three-fifths over five years, falling to 2.0% in 2019. [footnote 4]

Large-scale computing is a fast-changing field, where systems have a working life of five to eight years before becoming obsolete. We are entering an era of unprecedented hardware diversification, driven by the development of new computing architectures for specific tasks, in particular for AI research. Advances in data science have also driven demand for large-scale computing. As a society, we are generating far more data than ever before, and without the means to analyse it, its value is left unrealised.

Cloud computing has become an increasingly popular access model. Cloud access provides opportunities for new users to reap the benefits of many (but not all) forms of large-scale computing by offsetting upfront costs with cost of use. Users can assess their needs and choose a cost model to suit, whether that be on-site hosting or access via the cloud. Commercial cloud service providers are increasing their range of capabilities. This is still a nascent sector, and the market is currently dominated by a limited number of leading providers based in the US.

There is a strong case for continued public investment in large-scale computing. Access to world-class computing capabilities enhances UK competitiveness across a number of sectors. Lack of sufficient access to computing can create bottlenecks within research, development, prototyping and testing. The cost of leading-edge systems is greater than any single institution or business can afford. The UK Research and Development Roadmap describes supercomputing as a key part of our digital research infrastructure. [footnote 5] Large-scale computing plays an essential role underpinning UK R&D, helping to achieve ambitious ‘moonshot’ challenges, such as zero emission air travel. [footnote 6]

Investment in computer hardware alone will not be sufficient. To achieve the full potential of large-scale computing and allow the UK to compete at an international level, longer-term strategic planning is needed. Better coordination is required between Government and the publicly and privately funded research sectors. This report focusses on the functions that will be necessary for the UK to address these challenges and seize on the opportunities brought by large-scale computing.

Global trends spark important strategic questions about the types of national investments the UK should make. Our vision is to establish a dedicated oversight group tasked with providing effective coordination to the large-scale computing ecosystem and its users, to level up Britain as a science superpower.

Recommendations

1. National coordination. At present, there is no overall national roadmap for large-scale computing, and there is no single team within Government that provides coordination or carries out wider strategic assessment of UK needs. This has resulted in a wide variety of different procurement practices across departments.

- We recommend establishing a team within Government to provide policy leadership of large-scale computing. This team would be responsible for developing a rolling long-term roadmap for large-scale computing. This roadmap should cover the whole UK computing ecosystem, including software, skills, and user needs. This would help to improve resource sharing between organisations and provide a conduit for industry engagement. Finally, this team could advise Government on computing procurement, helping the public sector become a more intelligent customer.

2. Future systems. Access to leading-edge computing is vital for many academic and industrial fields. Future computing capabilities will open up new application areas and help to solve problems that are currently intractable. Projects are underway around the world to develop exascale computing, which would deliver systems roughly 140 times faster than the UK’s fastest system as of November 2020. These systems could mark a step-change in certain capabilities. Meanwhile, novel computing architectures could greatly accelerate specific applications such as training AI algorithms.

- The UK should maintain access to leading-edge computing capability, as well as a diverse range of system architectures. For certain applications, academia and the public and private sectors will require access to exascale systems. Exascale capabilities can be achieved through a balance between international collaboration and procuring new domestic systems.

3. User needs and the future ecosystem. Large-scale computing users have a range of different requirements, from hardware to access and support. Making large-scale computing accessible to more users would help to solve new business and research challenges. However, many barriers can prevent potential users from accessing these resources, such as a lack of awareness, necessary expertise, or appropriate access models.

- The roadmap for computing should be designed to meet the diverse requirements of users. This could include targeted support for SMEs to access large-scale computing, as well as taking steps to ensure that the UK marketplace for cloud computing is competitive.

4. Software. High-quality software is fundamental to realising the benefits of investments in computing. Software must be fit for purpose and be regularly tested, updated, and archived. The UK is a world leader in software development; to maintain this, software development must keep pace with advances in hardware.

- Best practices for developing robust and verifiable software should be adopted across industry, academia, and Government. In academia, testing, sharing, and archiving of software should become an essential part of the research process, ensuring that scientific results can be replicated. New computing architectures will necessitate considerable reengineering of software.

5. Skills. There is an acute shortage of large-scale computing professionals, including system architects, data engineers, system operations professionals and software engineers. Exploitation of advanced computing requires skilled cross-disciplinary teams. Within Government and academia, career paths for computing professionals are often not well-defined.

- Career pathways should be developed to help attract, support, and retain these skill sets. Job security, salary structures and progression opportunities should be improved to retain talent. The pipeline of talent could be bolstered through expanded apprenticeships and professional training programmes.

6. Energy and sustainability. Electricity is a significant proportion of the overall running costs of large-scale computing. Computing facilities are large consumers of energy, and they can put significant demands on regional electrical grids.

- Total cost of ownership should be considered during procurements, as well as whole life-cycle carbon emissions. A long-term roadmap could help ensure that computing needs are factored into electrical grid planning.

7. The UK supply chain. The global large-scale computing sector has become increasingly consolidated through recent mergers and buyouts. To promote innovation, it is important to maintain a sector that is diverse and competitive. Most of the vendors of large-scale computing systems, and their associated hardware, are based outside of Europe. By some estimates, the global market is forecast to grow to £38 billion by 2022 [footnote 7]. However, due to a lack of domestic capability the UK is not currently well-placed to capitalise on this growth.

- A long-term roadmap for procurement could provide clarity to the UK computing sector, helping to encourage domestic investment. A strong domestic sector would strengthen the UK’s influence in shaping global hardware trends and standards. This would also provide a platform for capitalising on export opportunities brought about by growing global demand for large-scale computing.

1. Introduction

Since the early nineteenth century, the UK has played a leading role in developing the field of computing. UK scientists have played key roles in developing the first mechanical computer (the Difference Engine), the first programmable computer (Colossus) and the first commercial computer (Ferranti Mark 1). The UK was also home to many of the pioneers of computing, including Babbage, Lovelace, Flowers, and Turing.[footnote 8]

Early computing systems often filled large rooms and required several operators. Although modern personal computers have become much smaller, there are a number of specialist applications that still require warehouse-sized large-scale computing systems. Over several decades, we have seen exponential growth in system performance: the largest high-performance computing system today is 175,000 times more powerful than the largest system 20 years ago.
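To give a feel for what a 175,000-fold increase over 20 years means, the short sketch below converts it into an average annual growth factor and a doubling time. It assumes growth was smooth and exponential across the whole period, which is a simplification; the function name `growth_summary` is illustrative only and does not come from the report.

```python
import math


def growth_summary(total_factor: float, years: float) -> dict:
    """Summarise a constant-rate exponential growth path.

    Assumption: performance grew at the same rate every year, which real
    systems do not do exactly.
    """
    annual_factor = total_factor ** (1.0 / years)  # growth per year
    doublings = math.log2(total_factor)            # number of times performance doubled
    return {
        "annual_factor": annual_factor,
        "doublings": doublings,
        "doubling_time_years": years / doublings,
    }


summary = growth_summary(total_factor=175_000, years=20)
print(f"Annual growth factor: {summary['annual_factor']:.2f}x")
print(f"Doubling time: {summary['doubling_time_years'] * 12:.1f} months")
```

Under that assumption, the figure corresponds to performance growing by roughly 1.8 times each year, or doubling about every 14 months.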

1.1 Scope and outline of this report

This report discusses the large-scale computing landscape in the UK. It outlines several building blocks required for creating a world-class computing ecosystem and the coordination mechanisms needed to support this. The following areas are within the scope of this report:

- high-performance computing (HPC) and high-throughput (data intensive) computing (HTC), which throughout the report are encompassed by the term ‘large-scale computing’

- access models for large-scale computing

- large-scale data handling, storage, and networking

- the wider ecosystem around large-scale computing, including software, skills, and users

Outside of the scope of this report are:

- personal computing and workloads carried out on personal computers

- issues around data governance and ethics, except to the extent that they influence technical decisions concerning infrastructure provision

- cloud-based computing workloads that do not require HPC or HTC capability

- digital services such as video streaming and e-commerce

This report has been prepared through interviews with stakeholders from across the large-scale computing ecosystem.

Figure 1: Large-Scale Computing in the UK: Report Structure

| Section | Chapter | Description |

|---|---|---|

| Introduction | | |

| Issues and Context | Chapter 2 | Users and access models of large scale computing infrastructure |

| Issues and Context | Chapter 3 | The international backdrop. Big data and hardware developments. |

| Issues and Context | Chapter 4 | The UK’s large scale computing capability and global position |

| Reforms | Chapter 5 | Lifting the UK’s large scale computing capability |

The first four chapters of this report set out the key concepts and issues surrounding large-scale computing, discuss the main uses of this infrastructure and describe the UK position within the broader global landscape. Following this, chapter five outlines seven building blocks for strengthening the large-scale computing ecosystem in the UK.

1.2 Key concepts in computing

Broadly, computing infrastructure can be grouped into three key functions which all computer systems require. These are data processing, data storage and data transfer, as explained here.

Figure 2: Three broad systems involved in computing

| System | Description |

|---|---|

| Data processing | Computer processors carry out computations as instructed by computer software. There are several processor types, the most widely used being central processing units (CPUs). |

| Data storage | The data needed for and processed by computer programs must be stored somewhere. Storage comes in many types, from short-term non-permanent storage for active use by processors to longer-term permanent storage for use at a later date. |

| Data transfer | Data transfer, known as ‘networking’, occurs at many different scales within computing. Data is transferred both within systems (for example, between processors and short-term storage) and between systems (for example, between data centres on different sites). |

1.3 Large-scale computing

Some computing tasks are so large or complex that they go beyond the capabilities of personal computers. These computational tasks can provide challenges in some, or all, of the following areas:

- processing: very large amounts of processor time are required

- storage: very large amounts of data storage are required

- transfer: very large amounts of bandwidth are required for data transfer

Large-scale computing systems address these bottlenecks through parallelism: the simultaneous, coordinated use of multiple processors, storage media and communication links. Through parallelism, computational problems are split into smaller elements that are run concurrently on a number of processors, greatly reducing the overall time required. Large-scale computing is often subdivided into HTC and HPC.

This infrastructure typically has a high cost to purchase and operate, and a typical system has a working life of just 5 to 8 years before becoming obsolete. The rapid rate of hardware development means that systems are quickly outclassed, and the performance improvement offered by new systems, combined with the high operational and power costs of existing systems, results in short system lifetimes. A system is at its most competitive during its first twelve months, so preparations to ensure rapid uptake of new resources are essential to maximise return on investment.

1.3.1 Architecture and set-up

Large-scale computing systems tend to be bespoke and specialised, being designed by vendors to efficiently solve a certain class of problems. Different sets of problems have different processing, memory, and networking requirements. Therefore, the design of the data processing, data storage and data transfer functions varies. A schematic description of a typical system is shown in Appendix B.

1.3.2 Software development and system operation

Running large-scale computing workloads requires software that has been designed to run efficiently in a highly parallel way – where workloads are divided into many smaller tasks that can be processed separately, with results being combined upon completion. This is more challenging than writing software that is designed to process instructions sequentially (‘in serial’).
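The divide, process and combine pattern described above can be sketched in a few lines. This is a minimal illustration only, not how production codes are written: the function names are hypothetical, the workload (a sum of squares) is a stand-in for a real computation, and a thread pool is used purely to show the structure. In CPython, threads do not speed up processor-bound work of this kind, and real large-scale codes typically distribute work across processes and nodes instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n: int, parts: int) -> list[range]:
    """Divide the integers 0..n-1 into `parts` contiguous chunks of near-equal size."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + step + (1 if i < extra else 0)
        chunks.append(range(start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(chunk: range) -> int:
    """The independent task: each worker processes only its own chunk."""
    return sum(i * i for i in chunk)


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Split the problem, process the chunks concurrently, then combine the results."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)  # the combine step


print(parallel_sum_of_squares(1_000_000))
```

Here the chunks never need to exchange data, which is the easy case. The difficulty referred to in the text arises when tasks depend on each other's intermediate results and must communicate while running.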

Writing parallelised programs requires the software engineer to understand the system architecture and control the flow and distribution of data between individual processors. Significant training and support, in terms of both software development and system access, is required before inexperienced programmers can efficiently make use of parallel computing at scale. Domain scientists also play an essential role, mapping the real-world scientific problems to computational solutions.

Operating large-scale computing systems is also a complex task. Highly skilled system administrators and software developers are required to configure, tune, and deploy software for use on large-scale computing infrastructure. Typically, very few jobs will use 100% of a system, so multiple jobs are often run simultaneously using differing proportions or partitions of a system. Efficient job allocation is therefore essential to achieve cost-effective utilisation of systems. This creates complex scheduling and dependency challenges.
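As a rough illustration of the job allocation problem, the sketch below packs jobs that each request a number of nodes into successive time slots on a fixed-size system, using a simple first-fit-decreasing heuristic. It rests on strong assumptions that do not hold in practice: every job is taken to run for the same length of time, and priorities, dependencies and run-time estimates are ignored. The `Job` class, the function names and the example figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    nodes: int  # number of nodes the job requests


def pack_jobs(jobs: list[Job], total_nodes: int) -> list[list[Job]]:
    """First-fit-decreasing: place each job in the first slot with enough free nodes."""
    slots: list[list[Job]] = []
    free: list[int] = []  # free nodes remaining in each slot
    for job in sorted(jobs, key=lambda j: j.nodes, reverse=True):
        if job.nodes > total_nodes:
            raise ValueError(f"{job.name} requests more nodes than the system has")
        for i, spare in enumerate(free):
            if job.nodes <= spare:
                slots[i].append(job)
                free[i] -= job.nodes
                break
        else:
            # No existing slot has room, so open a new one.
            slots.append([job])
            free.append(total_nodes - job.nodes)
    return slots


def utilisation(slots: list[list[Job]], total_nodes: int) -> float:
    """Fraction of available node-slots that are actually occupied."""
    used = sum(job.nodes for slot in slots for job in slot)
    return used / (len(slots) * total_nodes)


jobs = [Job("A", 70), Job("B", 50), Job("C", 40), Job("D", 20)]
slots = pack_jobs(jobs, total_nodes=100)
for number, slot in enumerate(slots, start=1):
    print(number, [job.name for job in slot])
print(f"Utilisation: {utilisation(slots, 100):.0%}")
```

Even in this toy case some nodes sit idle, which is why scheduling policy has a direct bearing on the cost-effectiveness of a system.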

1.3.3 Computational workloads for large-scale computing

Different types of large-scale computing programs run efficiently on different types of systems. A commonly used distinction is between HPC and HTC, as compared in the table below.

Table 1: Comparison of high-throughput computing and high-performance computing.

| | High-Throughput Computing | High-Performance Computing |

|---|---|---|

| Optimised for | Capacity – completing a large number of tasks | Capability – completing sizeable tasks quickly |

| Typical applications | Analysing and processing large volumes of data | Modelling and simulating complex systems |

| Coupling of code | Loose (individual nodes communicate with each other infrequently) | Tight (individual nodes communicate with each other frequently) |

Typically, HPC workloads generate data while HTC workloads analyse data generated elsewhere. However, the distinction between HTC and HPC workloads is not clear-cut, and the two terms tend to be defined inconsistently. Furthermore, with the growing use of large-scale data analytics within modelling and simulation, some argue that a convergence is underway. Indeed, both types of workload can make use of similar large-scale computing systems, though it is more efficient to use system architectures that are tailored to the needs of the application.

A well-designed large-scale computing system, tailored for particular science workflows, can deliver comparable science outputs to systems with much higher theoretical performance but that are less balanced in terms of memory, network bandwidth and processing power. For example, the high memory per node of the new DiRAC-3 (Distributed Research using Advanced Computing) Memory Intensive service means that it will be capable of performing cosmological simulations (see section ‘Users of large-scale computing’) on 49,000 cores that require 200,000 cores when run on the European PRACE (Partnership for Advanced Computing in Europe) machines. Therefore, rather than making a binary distinction between HPC and HTC, it is more helpful to recognise that there is a range of workload types which might require large-scale computing (see section ‘Users and uses’).

2. Users and uses

Strategic decision making that considers user needs and future uses will have the greatest impact on society. Long-term planning and coordination should work to make large-scale computing accessible to more users, encouraging uptake, competitiveness and innovation.

The types of problems that are solved using large-scale computing fall into four broad areas:

- simulation of large or complex systems

- system optimisation

- processing and analysing very large volumes of data

- AI and machine learning

Problems do not always fall into a single one of the four areas above, and there is some degree of overlap between these categories.

2.1 Users of large-scale computing

Large-scale computing is a fundamental tool in many disciplines, enabling verification of experimental results, modelling of otherwise inaccessible systems or processing of massive quantities of data.[footnote 9][footnote 10]

There is a wide range of applications for large-scale computing across public sector bodies, academia, and industry. A selection of applications is shown below.

- Chemicals and materials: Chemistry and materials science are significant users of ‘in silico’ chemical modelling, for example using simulations to understand the structures and properties of materials, modelling molecular interactions, and designing new drugs

- Weather and climate modelling: Large-scale computing is crucial for high-resolution weather and climate modelling. As well as the Public Weather Service, accurate weather data supports the transport, agriculture, energy, and defence sectors. Large-scale computing also underpins climate modelling to support the work of the Intergovernmental Panel on Climate Change (IPCC)

- Life sciences: Large-scale computing enables the processing and analysis of large biological datasets, such as population health data or human genome sequences. This advances understanding of biological systems, for example through vascular system modelling, and supports drug development for novel pandemics like COVID-19 [footnote 11]

- Engineering and product development: Businesses can improve some of their research, development, and prototyping activities through use of large-scale computing. For example, in the consumer products sector it can enable product stability tests to be carried out in minutes rather than weeks. [footnote 12] In the aerospace sector it can facilitate more rapid and cost-effective prototyping of engines [footnote 13]

- Nuclear stockpile stewardship: In the Ministry of Defence, large-scale computing provides modelling and simulation capabilities to support research into the performance and reliability of nuclear warheads [footnote 14]

- Financial services: Large-scale computing supports a range of applications in the financial services industry. Simulation techniques including the Monte Carlo method can be used for risk characterisation and optimisation. Emerging techniques such as AI and deep learning can inform investment decisions

- Fundamental physics: Large-scale computing is crucial for high-energy physics experiments such as those undertaken at the European Organization for Nuclear Research (CERN). Through the modelling of particle collisions, analysis of data from particle accelerators and comparison of both simulated and real data, large-scale computing supports new insights into particle physics

- Cosmology: Simulations of galaxy formation and evolution are vital for generating the scientific return for UK investments in major international telescopes and satellite missions. They provide insight into the origin and evolution of all the structures in the universe

- AI and Machine Learning: Training complex and advanced deep learning models requires the fast processing of large data sets, which large-scale computing allows. In recent years there has been exponential growth in the computing power required to train the most demanding AI algorithms

- Defence: Large-scale computing underpins defence-led modelling and simulation, ranging from low-level physics modelling up to the analysis of mission effectiveness via campaign models

- The internet: Large-scale computing can be used to analyse internet traffic, which is useful for informing internet policy, identifying and preventing outages, defending against cyberattacks, and designing more efficient computing infrastructure. [footnote 15] Most social media, search tools and web services are powered by large-scale computing, which underpins their business models, investment planning and advertising revenues
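The Monte Carlo method mentioned under financial services above lends itself to large-scale computing because every simulated scenario is independent of the others. The sketch below estimates a one-period value-at-risk figure by simulation. It is illustrative only: the assumption of normally distributed returns, the function name `monte_carlo_var` and all parameter values are hypothetical and are not taken from the report.

```python
import random


def monte_carlo_var(
    value: float,
    mean_return: float,
    volatility: float,
    confidence: float = 0.95,
    trials: int = 50_000,
    seed: int = 0,
) -> float:
    """Estimate one-period value-at-risk by simulating portfolio returns.

    Assumption: returns are normally distributed, which understates the
    chance of extreme losses in real markets.
    """
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    # A loss is the negative of the simulated change in portfolio value.
    losses = sorted(
        -value * rng.gauss(mean_return, volatility) for _ in range(trials)
    )
    # The loss that is not exceeded in `confidence` of the simulated scenarios.
    index = min(int(confidence * trials), trials - 1)
    return losses[index]


var_95 = monte_carlo_var(value=1_000_000, mean_return=0.0005, volatility=0.02)
print(f"Estimated 95% value-at-risk: {var_95:,.0f}")
```

Because the trials do not interact, they can be divided across as many processors as are available and the resulting losses merged at the end, which makes this a loosely coupled workload.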

2.2 Emerging users and use cases

Developments in large-scale computing such as exascale computing (see section ‘Exascale computing’), as well as new, more accessible, access models are expected to open up a range of new use cases, including:

- smart cities: Recent advances in sensor technology and Internet of Things (IoT) devices have stimulated the development of small, cheap mobile-enabled sensors that collect large quantities of data. Large-scale computing can be used to process and create insights from the large volumes of data produced by smart cities

- emergency preparedness and resilience: There is growing interest in the use of large-scale computing to improve responses to emergency situations. For example, the FireGrid project is exploring the use of computing to inform and coordinate the response to fire emergencies. [footnote 16] During the COVID-19 pandemic, large-scale computing resources have been used for epidemiology, bioinformatics, and molecular modelling to inform policy decisions and accelerate the discovery of treatments [footnote 17]

- fusion energy: The Government’s Ten Point Plan for a Green Industrial Revolution has set a grand challenge to deliver fusion energy to the grid in the 2040s. This time scale eliminates conventional, real-world, test-based, iterative design as an option, instead requiring reactor designs to be developed ‘in silico’. This requires a paradigm shift in the way complex products are designed, exploiting exascale computing and state-of-the-art data-driven engineering design technologies

- energy networks: Power grids are being modernised through the introduction of IoT sensors, including smart meters. The data that these sensors provide can be used for real-time data analysis and optimisation through large-scale computing [footnote 18]

- public health: Healthcare providers are producers of enormous quantities of data, which historically have been difficult and costly to analyse. Large-scale computing facilitates research into medical data, giving insights into population health and improving public health interventions [footnote 19]

- computer-aided diagnostics: Through advances in image recognition and machine learning, large-scale computing can be used to provide near real-time analysis of medical scans. The Industrial Strategy Challenge Fund challenge ‘From data to early diagnosis and precision medicine’ is focussed on this area [footnote 20]

- processing of unstructured data: Advances in data science, AI and natural language processing could create opportunities to use large-scale computing in new fields. In the humanities and social sciences, there are large quantities of unprocessed, unstructured data from sources including books, videos, and social media. Large-scale computing can facilitate processing of this data, providing new insights in a range of fields

- digital twinning: Increasing computing power and the collection of real-time data from sensors open up opportunities for the creation of digital replicas of physical objects in the built and natural environments. Studying the replica of the object allows for prediction of real-world behaviour and the early identification of problems. Efforts such as the National Digital Twin Programme facilitate the development of digital twins [footnote 21]

The use of large-scale computing can offer substantial benefits to new users, with increased diffusion of large-scale computing capabilities possibly playing a key role in addressing the UK’s productivity gap. [footnote 22] However, uptake amongst many potential SME users remains limited due to multiple barriers: [footnote 23]

-

awareness and expertise: SMEs may lack awareness of the benefits that large-scale computing can bring, as well as the specialised skills required to make use of it and to map their real-world challenges to computational solutions. Intermediaries can play a critical role in raising awareness and offering specialised support, which together provide a pathway to greater SME utilisation of large-scale computing

-

finance: The high levels of investment required in infrastructure and human resources to establish large-scale computing capabilities and the perceived high-risk of such projects can exclude SMEs with relatively small overall R&D budgets and limited access to finance. Access to shared or on-demand cloud-based resources may therefore be an important enabler of uptake by SMEs

2.3 Access models

The range of user requirements from large-scale computing leads to a variety of different access models. Some of the key considerations are:

-

frequency and variability of use: Usage requirements will determine which access model is most convenient and economical. Some users have infrequent or sporadic demand for large-scale computing, whereas others rely on it continuously for business-critical processes, requiring back-up, redundant systems to maintain very high levels of uptime

-

level of support required: While some experienced users will be able to make use of large-scale computing facilities relatively independently, others may require a high degree of support to both understand the use cases of large-scale computing and to effectively write software for these systems

-

intellectual property and security concerns: Some users make use of software or data that is sensitive or proprietary, which introduces limitations on which access models are viable

2.3.1 “Classical” access models

Historically, access to large-scale systems has been achieved through the following access models:

-

on-site hosting: Large companies and some public sector institutions often find it most efficient to host their own large-scale computing systems in-house. This represents a significant capital expense, but can be cost-effective if they have reasonably steady, high demand for large-scale computing. On-site hosting can also mitigate some security concerns

-

“bid-for-time” shared systems: This access model is frequently employed for large systems across academia. Research councils or institutions fund systems for the use of the whole community, and users often bid for time through an assessment of scientific merit. A requirement to publish findings in peer-reviewed journals helps to ensure that allocated time is well used. Industry can gain access through research collaborations or on a pay-per-processor-hour basis

-

grid or scavenger computing: In this access model, computing resources across multiple institutions and geographic locations are brought together via a network as a “grid”. [footnote 24] Due to their distributed nature, these systems tend to be used for high-throughput, easily parallelisable workloads. In some cases, grids can be assembled from idle or underutilised computing resources in a process called cycle-scavenging. In this way, distributed computing projects like Folding@home (which aims to help scientists develop new therapeutics) have enabled scientific challenges to be tackled using personal computers [footnote 25]

2.3.2 Large-scale computing in the cloud

Cloud computing is a significant access model for a broad range of computing tasks. It refers to on-demand access to computing infrastructure owned by a cloud service provider (CSP) via the internet.[footnote 26] This report covers specifically HPC and HTC in the cloud, although there is also a range of other uses of cloud computing.

Cloud access has the potential to become a major provisioning model for large-scale computing. A key distinction from other access models is in the way computing resources are procured, generally as an on-demand service with a flexible pay-as-you-go model, without requiring users to make up-front capital investment or participate in a merit-based bidding process.

The typical features of different access models are described below. For comparison, a non-cloud access model is also shown:

Table 2: Features of different large-scale computing access models.

| | Public cloud | Managed private cloud | On-premises hosting (non-cloud) |
|---|---|---|---|
| Owner operator | CSP owns infrastructure and manages system | CSP manages system and often owns the hardware | End user purchases and manages system |
| Location | Hosted at the CSP’s data centre | Typically hosted at the CSP’s data centre | Hosted by the user |
| Cost model | Pay-per-use | Ongoing subscription to CSP | Upfront capital and ongoing running costs |
| Exclusivity | Resources shared between many users | User has sole access | User has sole access |
| Hardware | Selection of hardware configurations may be available | Bespoke hardware designed for user needs | Bespoke hardware designed for user needs |
| Scalability | Scalable (additional processor cores can be made available for resource-intensive tasks) | Limited scalability within initial bespoke hardware, highly scalable to non-bespoke public cloud infrastructure | Not easily scalable (tasks are limited to the hardware specifications of the system) |

Hybrid models, utilising a mix of public cloud, private cloud, and on-site hosting, are also available. These models can be especially useful for managing peaks and troughs in demand.
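The trade-off between the pay-per-use and upfront-capital cost models in Table 2 can be illustrated with a simple break-even calculation. The following Python sketch is illustrative only: the function names and every price in it are hypothetical assumptions chosen to show the arithmetic, not figures from this report.

```python
def on_premises_cost(capital_cost, annual_running_cost, years):
    """Total cost of owning a system: upfront capital plus running costs."""
    return capital_cost + annual_running_cost * years


def cloud_cost(core_hours_per_year, price_per_core_hour, years):
    """Total pay-per-use cost: the user pays only for core-hours consumed."""
    return core_hours_per_year * price_per_core_hour * years


def break_even_core_hours(capital_cost, annual_running_cost,
                          price_per_core_hour, years):
    """Annual usage above which owning becomes cheaper than pay-per-use."""
    total_owned = on_premises_cost(capital_cost, annual_running_cost, years)
    return total_owned / (price_per_core_hour * years)


# Hypothetical figures, chosen only to illustrate the calculation.
CAPITAL = 2_000_000   # upfront purchase, in pounds
RUNNING = 300_000     # power, cooling and staff per year, in pounds
PRICE = 0.05          # cloud price per core-hour, in pounds
LIFETIME = 5          # years of service

threshold = break_even_core_hours(CAPITAL, RUNNING, PRICE, LIFETIME)
print(f"Owning is cheaper above {threshold:,.0f} core-hours per year")
```

Under these assumed prices, a user with steady, high demand sits above the threshold and may favour on-premises hosting, while a user with sporadic demand sits well below it. Real comparisons would also need to account for factors such as data egress charges and staff costs.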

Large-scale computing in the cloud offers a number of potential advantages:

-

cost-per-core model: Instead of large, up-front capital costs, users pay ongoing subscriptions based on their usage. System upgrades and system management become the responsibility of the CSP rather than the user. This may open up large-scale computing to new users who are unable to afford the upfront costs of large-scale computing systems. The cost-per-core of a cloud service provider drives purchase decisions and keeps the market competitive

-

enabling flexible demand: As CSPs pool resources between many users, the cloud model allows for fluctuating levels of demand from individual users. Users with their own in-house systems can make use of “cloud bursting” where computing workloads are dynamically allocated to the cloud to meet spikes in demand [footnote 27]

-

access to different hardware architectures: CSPs can offer access to a range of hardware architectures across their data centres. Through this, users can run programs on different computing architectures tailored to specific applications

-

support to users: Cloud computing can offer ‘large-scale computing-as-a-service’, where users with limited expertise can submit jobs via an easy-to-use portal. [footnote 28] This may help overcome skills barriers that can limit uptake of large-scale computing
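The “cloud bursting” idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular scheduler or CSP implements it: the function `allocate_jobs`, the first-fit policy and the job sizes are all hypothetical.

```python
def allocate_jobs(job_core_demands, on_site_cores):
    """Place each job on-site while capacity remains; burst the rest to cloud.

    job_core_demands: list of core counts, one per queued job.
    Returns (on_site_jobs, cloud_jobs) as lists of job indices.
    """
    free = on_site_cores
    on_site, cloud = [], []
    for index, cores in enumerate(job_core_demands):
        if cores <= free:
            on_site.append(index)
            free -= cores
        else:
            cloud.append(index)  # spike in demand: send this job to the CSP
    return on_site, cloud


# Hypothetical queue: 1,400 cores of demand against 1,000 on-site cores.
queue = [400, 300, 500, 200]
local, burst = allocate_jobs(queue, on_site_cores=1000)
print("on-site jobs:", local)
print("burst to cloud:", burst)
```

A production scheduler would also weigh data transfer time, cost and security constraints before moving a workload off-site.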

However, there are several limitations and challenges around cloud computing:

-

performance at scale: The capability of commercial cloud systems to run complex workloads cost-effectively has been improving over time. However, it does not yet meet the needs of the most demanding users, who require access to world-class large-scale computing systems, nor can it currently match the capability of traditional large-scale computers. The procurement and operation of these leadership-class computing systems is often prohibitively expensive. Furthermore, cloud facilities are often not equipped with the very high-speed interconnect networks required to run certain tightly coupled programs (see section ‘Computational workloads for large-scale computing’) [footnote 29], [footnote 30]

-

virtualisation: Virtualisation is the foundation of cloud computing. To utilise cloud computing, workloads must be able to run on virtual machines (emulations of a physical computer). While there is a growing trend towards virtualisation in many organisations, it can also require significant adaptation of infrastructural set-up and associated specialist skills. Virtualisation also adds a layer of abstraction between the user and the physical hardware, which can reduce efficiency

-

cloud “lock-in”: When using CSPs it is possible to become locked-in due to tight technical integration and reliance on a particular CSP’s services. Additionally, the present cost-model for cloud computing includes significant costs for data egress (transferring data out of a CSP’s data centre), which can make it prohibitively expensive for some users to switch suppliers. The Government Digital Service provides guidance on pragmatically managing this lock-in [footnote 31]

-

cybersecurity: Cloud provision often involves moving data and code to remote servers for processing and storage. Many uses of large-scale computing systems involve sensitive data or software. In such cases, users need high confidence in the security of data during transfer, processing, and storage

-

critical national infrastructure (CNI): For some users, continuous system availability is mission critical to deliver an essential service (for example, managing a national weather service, power grid or smart city infrastructure). In such cases, in-house management or private cloud models may be preferred

-

regulation: The nature of the supply side of large-scale computing is changing, with the use of CSPs on the rise. Should a cloud service become so heavily used that it resembles CNI, it would need to be regulated to assure appropriate use, security and resilience. There is currently no mechanism to classify large-scale computing infrastructure as CNI, which could lead to misuse and deter future users of the technology

-

support, software development and skills: While cloud provision may open up access to new users, it does not eliminate the need for skilled, in-house computing professionals [footnote 32]

The CSP market is currently dominated by three US-based providers: Amazon Web Services (AWS), Microsoft Azure and Google Cloud. A 2019 paper by Hyperion Research, sponsored by AWS, shows the extent of market consolidation: “AWS is the primary CSP for 58% of surveyed HPC user organizations that run workloads in the cloud, more than double the percentage for the number two vendor (23%) and more than seven times higher than the third-place competitor (8%).” [footnote 33]

Despite the challenges mentioned above, cloud computing provides increasingly viable solutions for the lower end of large-scale computing applications. It is a particularly promising access model for users who could not previously justify the large capital investments, inflexibility and related risks associated with other access models. The growth of cloud computing therefore represents one mechanism through which access to large-scale computing by SMEs could be expanded. For example, the Fortissimo project has created a successful marketplace for European SMEs to access large-scale computing resources, expertise, software, and tools on a pay-as-you-go basis. [footnote 34]

According to 2018 analysis by Hyperion Research sponsored by Google, 74% of large-scale computing sites were found to run some of their workloads in the cloud, compared to just 13% in 2011.[footnote 35] However, despite the rapid proliferation, these users still only use the cloud for a relatively small proportion (~10% on average) of their workloads. In the coming years, we can expect cloud computing to increasingly complement and augment, rather than fully replace, existing access models for large-scale computing.[footnote 7]

3. The global backdrop

Many nations around the world have seen strategic planning lift their large-scale computing capability. The rapid growth of aggregated data and IoT will form the feedstock for innovation from countries with adequate capacity.

3.1 The international landscape

Large-scale computing is a global endeavour, with most advanced economies making national investments in large-scale computing to support academic research, private sector research and development and national security. Global trends are leaning towards scale up, international centralisation and collaboration of large-scale computing capability. However, there are a number of related issues still to be analysed such as governance, international use agreements, regulation and cybersecurity.

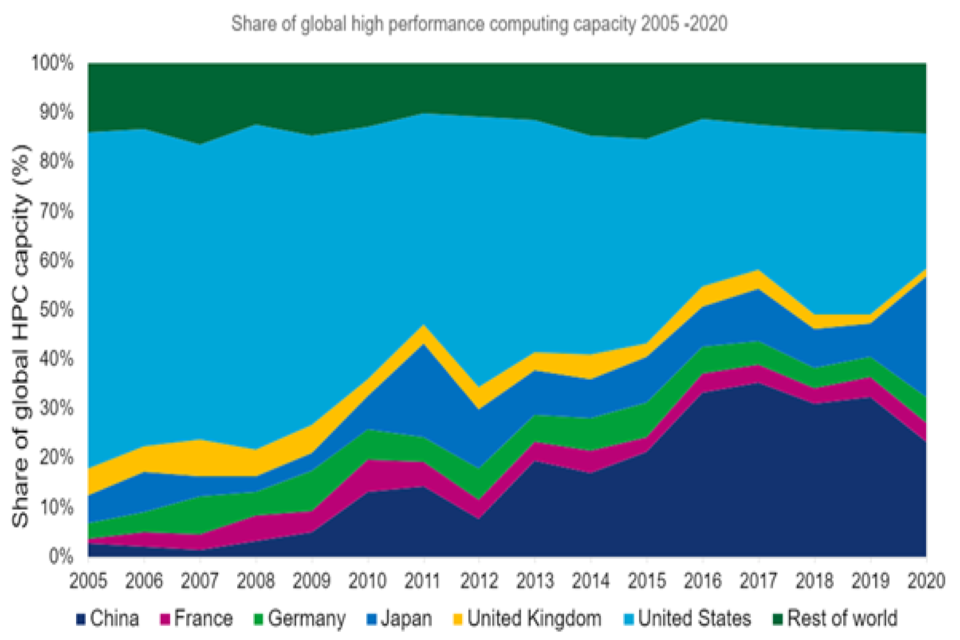

Figure 3: Global high-performance computing capacity (2005 to 2020).

The capabilities of large-scale computing have grown substantially year on year. Since 2005, there has been a 696-fold increase in the aggregate performance of the world’s 500 most powerful HPC systems. The world’s fastest HPC system, ‘Fugaku’ at the RIKEN Centre for Computational Science in Japan, has a benchmark performance of 442.0 petaflops, and is 22 times more powerful than the expected peak performance of ARCHER2, which is set to replace ARCHER shortly as the UK’s National Supercomputing Service.
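The growth figures in the paragraph above can be converted into an annual growth rate and a doubling time. The short Python sketch below does this arithmetic; it assumes a 15-year span (2005 to 2020, as in the Figure 3 caption), and the variable names are illustrative.

```python
import math

FOLD_INCREASE = 696   # aggregate Top500 growth quoted in the text
YEARS = 2020 - 2005   # assumed span, taken from the Figure 3 caption

# Compound annual growth factor and the corresponding doubling time.
annual_factor = FOLD_INCREASE ** (1 / YEARS)
doubling_time = math.log(2) / math.log(annual_factor)

FUGAKU_PFLOPS = 442.0   # benchmark performance quoted in the text
RATIO_TO_ARCHER2 = 22   # "22 times more powerful"
implied_archer2_pflops = FUGAKU_PFLOPS / RATIO_TO_ARCHER2

print(f"annual growth factor: {annual_factor:.2f}")
print(f"doubling time: {doubling_time:.2f} years")
print(f"implied ARCHER2 peak: {implied_archer2_pflops:.1f} petaflops")
```

On these assumptions, aggregate performance grew by roughly 55% per year, doubling about every 1.6 years, and the comparison with Fugaku implies an expected ARCHER2 peak of about 20 petaflops.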

Computing is increasingly seen as an international benchmark for scientific superiority, which has led to an increasing emphasis on ranking and league tables. A key trend of the last decade has been the emergence of China as a major global player in HPC, alongside the US (Figure 3). China has expanded its performance share of the global top 500 systems from 2.6% in 2005 to 32.3% in 2019.

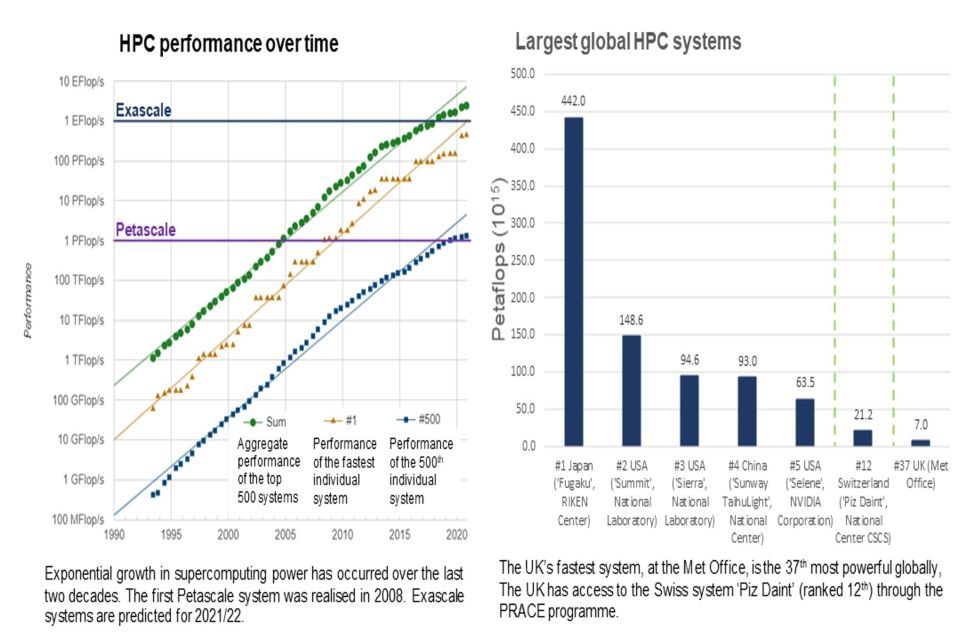

The aggregate performance of the top 500 systems has already exceeded the exascale threshold, and it will not be long before the top system reaches this benchmark. With the current top system representing such a step change in performance, the UK and PRACE systems seem to lag behind (see Figure 4).

Figure 4: Largest global HPC systems

Two graphs showing HPC performance and largest global HPC systems.

3.2 The data revolution

Unprecedented volumes of data in a wide variety of forms are being produced globally. Large-scale computing resources are essential to process and analyse this data to gain insights and extract value. As well as the often-discussed growth of social media data, current and emerging sources of this data include:

- large science projects like the Large Hadron Collider (LHC) and the Square Kilometre Array (SKA)

- increased gathering and collation of health data from patient records, medical imaging, and genome sequences

- data from IoT sensors and the development of smart networks and cities

- growing digitisation of library, archive, and museum materials

- increasingly detailed results from scientific and industrial simulations

3.2.1 New opportunities and challenges

The National Data Strategy sets out our vision to harness the power of responsible data use to boost productivity, create new businesses and jobs, improve public services, support a fairer society, and drive scientific discovery, positioning the UK as the forerunner of the next wave of innovation. One specific opportunity to highlight is that, as a result of the increasing availability and diversity of data, a range of novel application areas for large-scale computing are emerging (see section ‘Emerging users and use cases’). Meanwhile, the growing availability of computing power is considered a major driver of advances in AI and machine learning, enabling the training of more complicated algorithms on ever growing datasets. [footnote 36] These new use cases have potentially significant implications for the large-scale computing ecosystem, changing the balance of computing infrastructure required through an increase in demand for both HPC and HTC systems. Many potential new users may not have the prerequisite awareness or skills to seek out or make use of large-scale computing resources.

As well as increasing demand for resources, in some areas there is also a convergence of big data analytics and AI with more traditional modelling and simulation applications of large-scale computing. [footnote 37] Emerging applications may make use of both analysis and simulation concurrently. As data volumes become greater, network and input-output bandwidth may become an increasingly significant bottleneck for making use of large-scale computing resources for data analytics. Having sufficient and readily accessible data storage facilities to enable the long-term preservation and use of valuable data is also essential. The Department for Digital, Culture, Media and Sport (DCMS) has developed a National Data Strategy (NDS) which aims to ensure the security and resilience of the infrastructure on which data use relies.

A move towards more real-time data processing and analysis may help to keep long-term storage and network requirements more manageable. [footnote 38] Edge computing (computing carried out near the source of data) is likely to play an important role in enabling these developments and the real-time insights they could provide.[footnote 39] These developments may see large-scale systems embedded within distributed networks of computing resources (for example, IoT sensors, edge processors) where one of the key challenges will be “data logistics”. [footnote 40]

3.2.2 Privacy, security and governance implications

The NDS sets out the importance of safeguarding responsible data use and ensuring the security and resilience of our data assets, alongside seeking to capitalise on the opportunities and yield the benefits associated with it. There are clear public benefits accrued by the analysis of large volumes of data by users across academia, industry, and the public sector. However, the sensitive nature of much of this data, such as patient records or genome sequences, raises concerns around its maintenance and transfer. Cybersecurity and encryption will become more important to protect data and give users reassurance.

This includes exploring and implementing new approaches for data governance and data sharing. Edge computing could improve security by enabling more distributed networks which are more resilient to attack or disruption and reduce both the movement of sensitive data and the need to hold it in centralised databases. [footnote 41] Authentication, Authorisation and Accounting Infrastructure (AAAI) can enable secure and transparent access to complex and geographically distributed datasets through federated authorisation and authentication systems. The UK Research and Innovation (UKRI) Infrastructure Roadmap included AAAI as a key consideration for e-infrastructure. [footnote 42] The widespread implementation of these federated networks under a common framework could greatly improve the ability to extract value from sensitive data. The Government has committed to publishing a new National AI Strategy later this year, which will aim to build on the excellent progress the UK has made through the AI Sector Deal, in order to realise the potential economic, productivity and societal opportunities presented by AI.

3.3 Developments in computing hardware

3.3.1 Exascale computing

The current largest global HPC systems operate in the ‘petascale’ range, 10^15 to 10^17 floating point operations per second (FLOPS). Exascale systems are those that are capable of 10^18 floating point operations per second. Exascale systems will lead to a step change in capability for specific applications through a substantial increase in capacity.
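The orders of magnitude involved can be made concrete with a small Python sketch. It makes a simplifying assumption that any system at or above one petaflop but below one exaflop counts as petascale; the function name `scale_of` is illustrative.

```python
PETA = 10**15   # one petaflop, in floating point operations per second
EXA = 10**18    # one exaflop, in floating point operations per second


def scale_of(flops):
    """Name the scale of a system from its floating point operations per second."""
    if flops >= EXA:
        return "exascale"
    if flops >= PETA:
        return "petascale"
    return "below petascale"


fugaku = 442.0 * PETA    # Fugaku's 442.0 petaflops, as quoted in section 3.1
print(scale_of(fugaku))
print(EXA / fugaku)      # number of Fugaku-class systems equal to one exaflop
```

On this definition Fugaku remains a petascale system, and a little more than two such systems would together reach one exaflop of benchmark performance.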

While milestones such as exascale are useful, principal consideration should be given to the use cases of the infrastructure (the real-world problems that the system will solve) and the improvement they offer over previous systems, rather than simple benchmarks of hardware performance. Most systems achieve a small percentage of their theoretical maximum performance under real-world testing.

The first exascale systems are expected to be running in 2021 or 2022, with China, Japan, the European Union, and the US all developing exascale initiatives. The European High-Performance Computing Joint Undertaking (EuroHPC) is the European Union’s exascale initiative. Operating from 2018 to 2026, three pre-exascale systems (including one with a theoretical peak performance of 550 petaflops) and five smaller petascale systems are planned, with two exascale systems being delivered circa 2023. [footnote 43], [footnote 44] The UK is not a member of EuroHPC. [footnote 45] Achieving exascale capability will require sustained efforts in developing the necessary skills, software and infrastructure. The US Exascale Computing Project includes funding for software and application development on a similar scale to, and in coordination with, their hardware initiatives. [footnote 46]

Networking, storage and archiving will have to be scaled-up to account for the increased data required and produced. New models of data movement, processing and storage may be needed to prevent new bottlenecks. An emerging example is the link between digital twin technology and digital threads – the bank of data used when developing a digital twin. This technology needs warehousing of data that is not yet available in the UK. To take full advantage of digital twins, the UK will need to develop a Tier-0, Big Data Centre that will curate data assets either as part of a system for data analytics, or as a long-term archive.

Improvements in power efficiency will also need to be achieved; extrapolation of power consumption from current top-tier systems would lead to untenable energy costs. [footnote 47] The US Department of Energy (DOE) has set a cap of 40MW for its own exascale projects. [footnote 48] This power demand also has implications for both running costs (each 1MW of power demand adds c. £1 million per year to running costs) and environmental impact (see section ‘Challenge 6: Energy and sustainability’). Electrical grid infrastructure is also a key consideration, as large amounts of power are required for single sites, which can limit where systems can be located.
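The running-cost rule of thumb above can be worked through in a few lines of Python. This is a sketch only: it applies the report's approximate figure of £1 million per megawatt-year to the 40MW cap purely as an illustration, and the implied unit price assumes the whole amount is spent on electricity drawn continuously. The function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365
COST_PER_MW_YEAR = 1_000_000   # pounds; the "c. £1 million" figure in the text
DOE_CAP_MW = 40                # US DOE cap quoted in the text


def annual_power_cost(power_mw, cost_per_mw_year=COST_PER_MW_YEAR):
    """Annual running cost attributable to a continuous power demand."""
    return power_mw * cost_per_mw_year


def implied_price_per_kwh(cost_per_mw_year=COST_PER_MW_YEAR):
    """Electricity price implied by the rule of thumb, in pounds per kWh."""
    kwh_per_mw_year = 1000 * HOURS_PER_YEAR   # 1 MW drawn for a year, in kWh
    return cost_per_mw_year / kwh_per_mw_year


print(f"£{annual_power_cost(DOE_CAP_MW):,} per year at the 40MW cap")
print(f"£{implied_price_per_kwh():.3f} per kWh implied")
```

On these assumptions, a system running at the 40MW cap would add around £40 million per year to running costs, and the rule of thumb corresponds to a unit price of roughly 11 pence per kWh.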

Expected uses for exascale systems [footnote 49]

Exascale systems will lead to a step change in capability for specific applications, both dramatically improving existing computational work and allowing for expansion into previously intractable areas. The increased capacity of exascale systems enables higher resolution modelling of more complex systems over longer time periods, better uncertainty characterisation and the processing and analysis of very large datasets.

Some of the areas which will benefit from exascale systems are:

-

weather and climate modelling: the production of more accurate, more reliable and longer-term forecasts and climate models at a greater granularity of scale

-

quantum mechanics: more precise modelling of particle phenomena and the exploration of previously intractable subatomic physics problems, such as calculating neutrino mass

-

materials science advances: increasing the accuracy, size and timescales of materials simulations, enabling modelling of previously unfeasible systems

-

tackling astrophysics questions: simulation at the scale of cosmological events and simulation of gravitational processes which could help solve fundamental questions about the universe

-

digital twins: enabling the detailed simulation of digital replicas of complex physical objects or processes, such as entire aircraft engines, to optimise performance while reducing development and testing costs.[footnote 50]

-

understanding fundamental biology: high-resolution modelling of molecular interactions and processes, supporting drug development and enzyme design

3.3.2 Diversification of hardware technologies

The power of computer processors has increased exponentially over the last 50 years. However, fundamental physical limits in transistor density are now being reached. Computing approaches have become increasingly tailored to application needs, with processors being designed for particular uses.[footnote 51]

This trend is leading to diversification in system architectures. Heterogeneous systems, which use more than one kind of processor, are becoming more commonplace with hardware accelerators, such as Graphics Processing Units (GPUs), Tensor Cores or Tensor Processing Units (TPUs) and Field-Programmable Gate Arrays (FPGAs), increasingly being used to augment CPUs (see Appendix C for more information). 29% of the global top 500 HPC systems use GPU accelerators. [footnote 52] GPUs are particularly adept at performing machine learning tasks. [footnote 53]

Other new computing paradigms are emerging which may introduce additional diversity into the hardware landscape such as:

-

neuromorphic computing mimics the function of the human brain through the use of electrical ‘spikes’ rather than constant current. It is particularly adept at modelling the human brain and performing machine learning tasks

-

quantum computing uses the properties of quantum mechanics to perform computations. It has a number of potential applications across machine learning, as well as in cryptography, modelling quantum systems, and solving linear systems of equations

We can expect to see increased diversification in system makeup as hardware becomes less general purpose and is more tailored to the application in question. This is likely to further complicate procurement decisions, with systems increasingly being built to solve specific types of problems and transfer of workloads between systems becoming more difficult.

Hardware diversification also has implications for software development. Employing different types of hardware accelerators requires development of new software or extensive updates to existing software. This trend towards increased hardware diversification will require software that can run more flexibly across different architectures. Co-design of large-scale computing systems, while difficult to implement, offers many benefits in terms of delivering stakeholder-driven design goals, significantly reduced risk of non-performance and ultimately a better end result that offers better value for money. It also delivers additional benefits in terms of supporting the UK skills-building agenda in key digital areas and providing opportunities to innovate in areas such as sustainability.

3.3.3 Green computing

Globally, running computing infrastructure consumes vast quantities of energy. Carbon emissions are generated from the production and disposal of large-scale computing hardware, and during operation through the powering and cooling of systems. The proportion of overall greenhouse gas emissions from ICT infrastructure is expected to increase sharply over the next few decades. [footnote 54], [footnote 55]

Driven by technological developments, the power efficiency of computation has historically doubled every year and a half. [footnote 56] However, as the scale of systems has grown, so too has their energy footprint. El Capitan at the Lawrence Livermore National Laboratory, one of the planned US exascale systems, will have an energy budget of 30 to 40 MW; equivalent to the electricity consumption of 70,000 to 90,000 UK homes. [footnote 57], [footnote 58]
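Two pieces of arithmetic sit behind the paragraph above: the compounding effect of efficiency doubling every year and a half, and the household consumption implied by the “equivalent homes” comparison. The Python sketch below works through both; it assumes the system draws its full power budget continuously, and the function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365


def efficiency_gain(years, doubling_period=1.5):
    """Factor by which computations per unit of energy grow over `years`."""
    return 2 ** (years / doubling_period)


def implied_home_consumption_mwh(power_mw, homes):
    """Annual electricity use per home implied by an 'equivalent homes' figure."""
    return power_mw * HOURS_PER_YEAR / homes


print(efficiency_gain(15))                        # gain over 15 years
print(implied_home_consumption_mwh(30, 70_000))   # lower end of the range
print(implied_home_consumption_mwh(40, 90_000))   # upper end of the range
```

Both ends of the quoted range imply a little under 4 MWh of electricity per home per year, so the comparison is internally consistent. A doubling period of 1.5 years compounds to roughly a thousand-fold efficiency gain over 15 years.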

While representing only a small relative percentage of global power consumption and emissions, there is growing interest in how the expansion of large-scale computing can continue to be delivered in a manner that is sustainable and in line with Paris Agreement targets. An example of this is the recent procurement by the Met Office of a supercomputer from Microsoft, which will deliver 18 times the capability of the current system over ten years and will be run on 100% renewable energy. The Green500 list tracks the top 500 supercomputers in the world ranked by energy efficiency rather than computing power.[footnote 59]

There are several means through which energy consumption and waste can be minimised, such as optimising resource utilisation and innovative cooling infrastructure. These approaches, as well as reducing carbon emissions, can also save money. The Royal Society’s ‘Digital Technology and the Planet’ report highlights that the technology sector is in a position to lead by example and manage its own carbon footprint. Not only can large-scale computing optimise utilisation, it can also contribute to the decarbonisation of the energy grid through scheduling – allocating tasks to times of peak renewable energy generation. With the UK hosting COP26 and assuming presidency of the G7, there is an opportunity to demonstrate leadership in the digitisation of the net zero transition.

3.3.3.1 Optimising utilisation

Optimised utilisation means that more research can be delivered for the same carbon footprint, thus the research output per tonne of carbon dioxide increases. Examples of how this could be achieved include improved software development practices (see section ‘Challenge 4: Software’); minimising data movement and unnecessary storage; dynamic workload scheduling (including “bursting” workloads to the cloud during spikes in demand) and ensuring resource allocation is matched to workload requirements. [footnote 60]

A range of research avenues are also being explored to improve system efficiency, such as:

-

designing novel system architectures to reduce energy usage. The Horizon 2020-funded ECOSCALE project is studying integrating FPGAs (see Appendix C) and CPUs in architectures that reduce data transfer requirements

-

mixed-precision computing (see Appendix C) can reduce energy consumption by minimising the wasteful use of overly precise calculations [footnote 61]

-

flexible applications, which can tolerate a variable power supply, could allow the use of renewable energy to power systems. [footnote 62] This approach could both reduce running costs and assist with electricity grid management

It is worth noting that employing the above approaches requires either developing new software or making extensive updates to existing programs.
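As an illustration of flexible applications that follow renewable supply, the Python sketch below shifts movable work into the hours with the highest forecast renewable generation. It is a minimal sketch under stated assumptions: the function `schedule_flexible_jobs`, the one-hour job slots and the forecast values are all hypothetical, and real schedulers would also consider deadlines, priorities and grid signals.

```python
def schedule_flexible_jobs(renewable_forecast_mw, job_hours):
    """Pick the hours with the highest forecast renewable generation.

    renewable_forecast_mw: forecast generation for each consecutive hour.
    job_hours: number of one-hour job slots that can be shifted freely.
    Returns the chosen hours in chronological order.
    """
    ranked = sorted(range(len(renewable_forecast_mw)),
                    key=lambda hour: renewable_forecast_mw[hour],
                    reverse=True)
    return sorted(ranked[:job_hours])


# Hypothetical forecast (MW) for eight consecutive hours.
forecast = [5, 8, 20, 35, 40, 30, 12, 6]
print(schedule_flexible_jobs(forecast, job_hours=3))
```

With this forecast, the three job slots are placed in the fourth to sixth hours, when generation peaks.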

3.3.3.2 Cooling infrastructure

Large-scale computing produces significant amounts of heat during operation. Energy is continuously required to cool systems to keep them within their operating temperatures; for traditional air-cooled systems this is a significant proportion of the overall power consumption of these systems. There are a number of strategies that can be employed to reduce the power costs and environmental impact of cooling:

- infrastructure can be sited in locations where there is a surplus of renewable energy [footnote 63]

- improvements can be provided by continuous monitoring and use of analytical techniques such as machine learning. [footnote 64] The layout of computing infrastructure can also be designed for thermodynamic efficiency, which can be aided by computer simulation [footnote 65]

- warm wastewater from system cooling can be used in district heating schemes

4. The UK’s position in the global landscape

Investment in hardware alone cannot bring about the most effective use of systems. The UK is a leader in software development and has sought-after, skilled professionals. Despite this, demand outweighs supply, and the workforce lacks the diversity required to produce software that encompasses the needs of society.

The UK has a reputation as a highly skilled and innovative economy, which makes it an attractive place to carry out research and to do business. This is underpinned by strong legal and regulatory frameworks, as well as protections for intellectual property. A world-class national large-scale computing ecosystem can build on this foundation, enhancing the attractiveness of the UK for research and business investment. Meanwhile, a strong position globally can ensure that UK priorities in terms of privacy, cybersecurity and openness are incorporated into international standards and initiatives. It can also strengthen the UK’s role in tackling pressing global challenges, for example by supporting research into climate change, pandemics, and extreme weather events.

The UK’s large-scale computing ecosystem is complex and interlinked, with a wide range of users and providers. It relies on a diverse and accessible physical infrastructure base, with large-scale computing systems of varying size and type. High-quality software and skilled workers are necessary to ensure that these systems can run effectively.

The UK is recognised for its capabilities in computer science and software development, and has strengths across several computing domains, including AI, computational fluid dynamics and bioinformatics. The UK has a highly skilled large-scale computing workforce; however, demand for computing professionals currently outstrips supply.

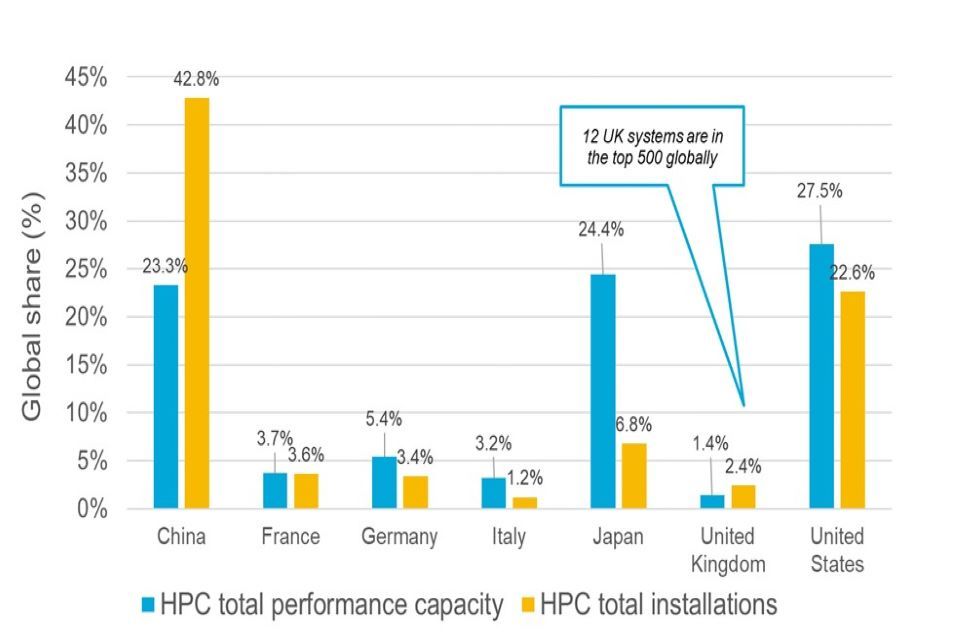

Within HPC, the UK currently possesses 1.4% of overall capacity world-wide (Figure 5). The UK’s largest system, at the Met Office, is the 37th most powerful in the world. [footnote 66] The UK has access to the 12th largest global system, based in Switzerland, through the research consortium PRACE. On the hardware side, the UK supply chain is limited, with few domestic vendors or hardware suppliers.

Graph showing HPC total performance capacity and HPC total installations

Figure 5: Share of global HPC capacity (selected countries)

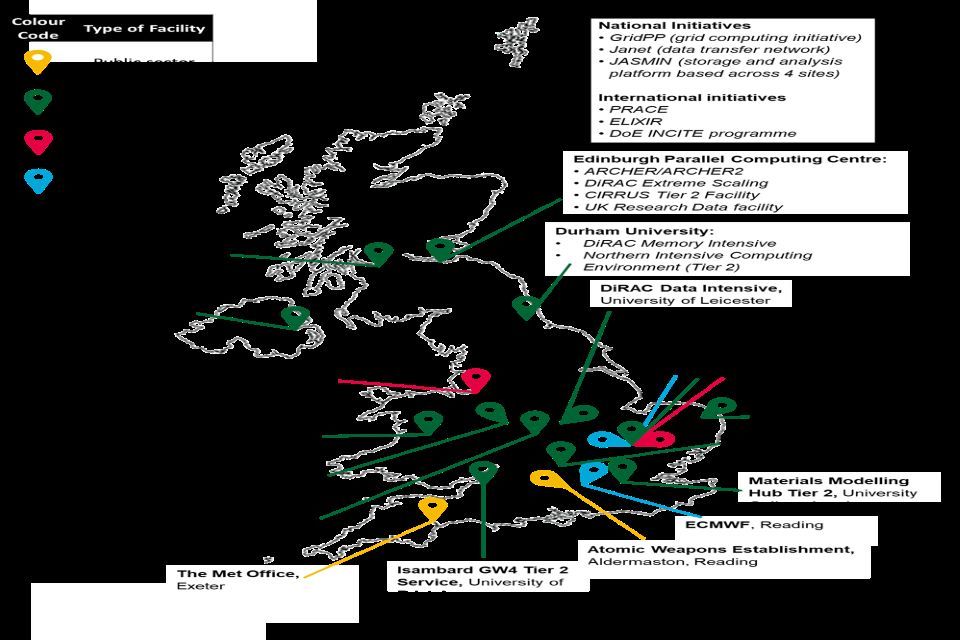

Figure 6: UK large-scale computing landscape map. The map includes UK supercomputers in the global top 500 and other large-scale computing infrastructure of a national or regional significance.

4.1 Current UK infrastructure

The UK’s large-scale computing infrastructure comprises a range of systems of varying sizes and hardware architectures to support a range of different applications (Figure 6). Large-scale computing infrastructure comprises not just computing systems, but also storage facilities, networks, and other supporting facilities.

Large-scale computing infrastructure can broadly be divided between public sector, academic and industry systems. However, these boundaries are fluid, and many sharing arrangements exist. The UK also has access to international large-scale computing systems through partnerships and sharing agreements.

A summary of some of the UK’s key large-scale computing infrastructure is outlined below. Many universities, companies and research institutes also host their own in-house facilities which are not listed. UKRI has recently carried out a landscape analysis of the UK’s research and innovation infrastructure, which includes large-scale computing. [footnote 67]

4.1.1 Public sector

Some of the largest large-scale computing users are in the public sector and their facilities represent critical national infrastructure.

- Met Office: The Met Office has the UK’s fastest supercomputer alongside two smaller systems for resilience. [footnote 68] Portions of these computers are made available to the academic community. [footnote 69] A new system has been approved and is expected to come online in 2022, delivering a six-fold increase in capability, with a further three-fold uplift planned later in the 2020s. [footnote 70]

- Atomic Weapons Establishment (AWE): AWE runs HPC to support nuclear warhead stewardship. [footnote 71]

In addition to these larger systems, smaller high-security systems also exist at the Defence Science and Technology Laboratory (DSTL) to support a wide range of diverse defence and security workflows. The Ministry of Defence is keen to investigate opportunities for extending use of these systems to UK SMEs and academic organisations to better exploit the UK’s technical expertise for sensitive workflows.

4.1.2 Academic research infrastructure

A range of national and regional systems are in place to facilitate access for users across the UK academic community. A selection is described below:

National large-scale computing systems:

- ARCHER2: Forms the general-purpose national supercomputing service for industry and academic researchers in the UK, based at the Edinburgh Parallel Computing Centre (EPCC). The transition from ARCHER to ARCHER2 is currently underway. This system is designed to be “capable on average of over eleven times the science throughput of ARCHER” [footnote 72]

- DiRAC: Provides more targeted large-scale computing services for the Science and Technology Facilities Council (STFC) theory community, with a range of tailored computational infrastructure based across four sites for data-intensive, memory-intensive and “extreme-scaling” workloads [footnote 73]

- EPSRC Tier 2 systems: The Engineering and Physical Sciences Research Council (EPSRC) currently funds a mixture of Computing Centres of Excellence to provide a diversity of computing architecture at a mid-level of performance capability. In the latest round, in 2019, £34 million was invested in nine centres: [footnote 74]

  - Cambridge Service for Data Driven Discovery (CSD3) (led by the University of Cambridge)

  - the Materials and Molecular Modelling Hub (led by University College London)

  - JADE, the Joint Academic Data Science Endeavour (led by the University of Oxford)

  - Sulis: An EPSRC platform for ensemble computing delivered by HPC Midlands+ (led by the University of Warwick)

  - GW4 Tier 2 HPC Centre for Advanced Architectures (led by the University of Bristol)

  - Northern Intensive Computing Environment (led by Durham University)

  - Baskerville: a national accelerated compute resource (led by the University of Birmingham)

  - Cirrus Phase II (led by the University of Edinburgh)

  - Kelvin-2 (led by Queen’s University Belfast and Ulster University)

Regional large-scale computing systems:

- Supercomputing Wales: Provides computing facilities for academic users from Cardiff, Swansea, Bangor, and Aberystwyth Universities. Part-funded by the European Regional Development Fund (ERDF) through the Welsh Government, with support from university partners [footnote 75]

- ARCHIE-WeSt: Funded by EPSRC and operating in partnership with the Universities of Glasgow, Glasgow Caledonian, West of Scotland, and Stirling, it provides large-scale computing services to academia, industry and the public sector in the west of Scotland [footnote 76]

HTC facilities:

- Earlham Institute: A Biotechnology and Biological Sciences Research Council (BBSRC) funded research institute focussed on genomics and computational biosciences. It hosts a range of large-scale computing resources tailored to the specific needs of bioinformatics workflows [footnote 77]

- EMBL-EBI: The European Bioinformatics Institute (EMBL-EBI) is a UK-based component of the European Molecular Biology Laboratory (EMBL) and aims to “help scientists realise the potential of ‘big data’ in biology”. As well as hosting a wide range of databases, the institute also possesses significant HTC capacity, some of which is made available to the research community through a range of analysis tools [footnote 78]

- GridPP: GridPP is the UK’s contribution to the Worldwide Large-Hadron Collider Computing Grid (WLCG) to allow the processing and analysis of data arising from particle physics experiments at CERN. Funded by STFC and established in 2001, it brings together computing resources and expertise across 18 institutions and has allowed the UK to play a leading role in the WLCG [footnote 79]

Data transfer, storage, and analysis infrastructure:

- JASMIN: A storage and analysis platform based across four sites provided by the Natural Environment Research Council (NERC) and STFC for climate and earth sciences applications [footnote 80]

- Janet network: Using 8,500 km of fibre optic cabling, Janet provides high-speed networking to the academic community. [footnote 81] Janet is connected to other similar research networks across Europe through GÉANT (the Gigabit European Academic Network) [footnote 82]

- UK Research Data Facility (RDF): Based at the EPCC, the EPSRC- and NERC-funded facility provides high-capacity disk and tape storage for data from national large-scale computing facilities, ensuring its long-term preservation [footnote 83]

4.1.3 International access

The UK currently has access to a range of world-class large-scale computing systems through international agreements. Some of the key partnerships are below.

- PRACE: The Partnership for Advanced Computing in Europe has 26 member countries and provides shared large-scale computing infrastructure across Europe. [footnote 84] Access to leading-edge systems is allocated based on scientific merit. The programme is being continued through 2021, but its long-term future, and how it will relate to the European High-Performance Computing (EuroHPC) Joint Undertaking, remains uncertain

- ELIXIR: The European life-sciences infrastructure for biological information (ELIXIR), with its central hub on the Wellcome Genome Campus, Cambridge, brings together life-science research institutions from across Europe to provide support in sharing, storing, and analysing biological data. [footnote 85] This includes a dedicated scheme of work to integrate large-scale computing infrastructure for life-sciences across Europe as part of a federated system. [footnote 86]

- ECMWF: The European Centre for Medium-Range Weather Forecasts is a multinational collaboration currently based in Reading, whose primary role is to provide forecasts to member states in the 10-day range. It hosts two systems in the UK, although this large-scale computing capacity is moving to Bologna, Italy

- Department of Energy INCITE Program: Access to the world’s most powerful supercomputers at the US Department of Energy’s (DOE) network of national laboratories is available to UK researchers and companies. [footnote 87] Applicants must demonstrate that their work tackles problems at the frontiers of science and engineering, and that their software can effectively scale to make use of large-scale computing systems

4.1.4 Industry

Some industry users maintain in-house large-scale computing capacity, making use of individual large-scale systems, grid computing approaches or cloud access. Industry users may also obtain access to the above public systems, either through bidding for use on the basis of scientific merit or by paying for system time.

Many industry users work with the Hartree Centre, an STFC-funded centre tasked with helping UK industry to take advantage of large-scale computing opportunities. The Hartree Centre offers access to computational resources including a data analytics platform, two GPU-accelerated hybrid systems for big data or AI applications, and Scafell Pike, an HPC system capable of delivering 1.8 petaflops of performance (RMAX, LINPACK benchmark). [footnote 88] It also provides training, develops software tools, and engages in collaborative R&D to support uptake in industry.

NVIDIA, inventor of the GPU, is currently building a new supercomputer called Cambridge-1, which promises to achieve 400 petaflops of “AI performance” through its GPU accelerators and 8 petaflops of LINPACK benchmark performance. The system is intended to form part of its AI Centre of Excellence in Cambridge and is expected to be operational by the end of 2021. It will focus on supporting joint industry research targeted at AI applications in the health and life sciences sectors, while machine time will also be donated to university researchers and start-ups. [footnote 89]

4.2 Software and skills

4.2.1 The UK software base

The UK has the largest software industry in Europe. The UK software industry contributed to direct value-added GDP of £70.3 billion in 2016, and it directly employs nearly 700,000 workers (around 2.2% of the UK workforce). [footnote 90]

It is difficult to overemphasise the interdependent nature of software, skills, and hardware. Without the necessary skills, good software cannot be written or maintained. Without the necessary software, hardware cannot be made use of effectively. The UK is recognised for its capabilities in computer science and software development, alongside strengths in the research domains that make use of large-scale computing. 92% of academics make use of some type of research software, with 69% regarding it as fundamental to their work. [footnote 91]