Test development handbook 2024

Updated 8 May 2025

1 Introduction

The national curriculum was introduced in 2014. This curriculum was assessed in mathematics, English reading and English grammar, punctuation and spelling for the first time in 2016. The key stage 1 (KS1) statutory phonics check was introduced in 2012.

Following the Standards for Educational and Psychological Testing[footnote 1], this handbook describes how the tests of this curriculum at key stage 2 (KS2) and the year 1 phonics screening check were developed for the 2024 test cycle and presents validity and reliability evidence.

The 2024 optional end of KS1 tests followed the same development process.

1.1 Purpose of this document

This handbook has been produced by the Standards and Testing Agency (STA) to explain how the 2024 tests and checks of the national curriculum were designed, developed and delivered. STA is an executive agency of the Department for Education (DfE). STA’s national assessment functions are regulated by the Office of Qualifications and Examinations Regulation (Ofqual). This is in line with Ofqual’s regulatory framework for national assessments.

1.2 Who is this document for?

This document is primarily for a technical audience. It is published for reasons of openness and transparency. It will be of interest to those who develop tests or are involved in assessment, including in schools.

2 The tests

All eligible pupils in England who are registered at maintained schools, special schools or academies, including free schools, are assessed at the end of year 1 in phonics and at the end of KS2 in:

- mathematics, including the multiplication tables check (MTC)[footnote 2]

- English reading

- English grammar, punctuation and spelling

The tests designed to assess children at the end of KS1 became optional in the academic year 2023 to 2024.

2.1 Purpose and uses of the tests

The main purpose of statutory assessment is to ascertain what pupils have achieved in relation to the attainment targets outlined in the national curriculum.

The intended uses of the outcomes, as set out in the Bew report and in the 2017 consultation response on primary assessment and accountability, are to:

- hold schools to account for the attainment and progress made by their pupils

- inform parents and secondary schools about the performance of individual pupils

- enable benchmarking between schools and monitoring of performance locally and nationally

The purpose of the phonics screening check is to confirm that all children have learned phonic decoding to an age-appropriate standard and to identify where additional support for children is necessary. For this reason, the phonics screening check is a ‘check’ rather than an ‘assessment’, although for brevity in this document, it will sometimes be described alongside other tests as an assessment.

2.2 Development of the tests

The national curriculum test models were developed concurrently with the 2014 national curriculum to align with its aims, purposes and content. They apply to English and mathematics for pupils aged 11.

The phonics screening check is taken by pupils at the end of year 1.

2.3 The test and assessment frameworks

STA developed test frameworks based on the national curriculum for each subject. These frameworks define the content and cognitive domains for the tests. Care is taken within each test to ensure only the skills necessary to that test are assessed, so the tests are fair and valid.

The year 1 phonics screening check assessment framework sets out the content and cognitive domain for the phonics screening check.

The purpose of the frameworks is to guide the development of the tests. By providing consistent parameters for the development and construction of the tests, the test and assessment frameworks allow valid, reliable and comparable tests to be constructed each year.

The frameworks were written primarily for those who write test materials. They have been made available to a wider audience for reasons of openness and transparency. STA developed the frameworks in consultation with the DfE curriculum and assessment teams, panels of teachers and subject experts, to refine their content, confirm their validity and make them fit for purpose.

The frameworks provide information about what the tests cover. The frameworks do not provide information on how teachers should teach the national curriculum or assess pupils’ progress.

Each test framework contains:

- a content domain, setting out what can be assessed through the test

- a cognitive domain, outlining the demands of the test and the cognitive skills required for the subject

- a test specification, which:

  - gives details of test format, item types, response types, marking and the balance of marks across the content and cognitive domains

  - explains how the test outcomes will be reported

- the expected standard performance descriptors for each subject (not for the phonics screening check)

2.3.1 Development of the cognitive domain

The cognitive domains make explicit the thinking skills and intellectual processes required for each test. Each item (the smallest unit of a question for which a mark is awarded) is rated against the relevant components of the cognitive domains. Taking this information into account during test construction keeps the tests comparable from year to year in terms of the cognitive skills and intellectual demand of the items.

The cognitive domains were initially developed through a literature review. The resulting models for each subject allow items to be rated across different areas of cognitive demand.

To validate the cognitive domains, panels of teachers reviewed the test frameworks. They were asked to comment on the extent to which the cognitive domain set out the appropriate thinking skills for the subject and age group. Pairs of test development researchers (TDRs) also independently classified items against the cognitive domain and their classifications were compared.

Refinements were made to the cognitive domains based on both the inter-rater consistency between TDRs and the comments gathered from the teacher panels. This ensured the cognitive domains published in the test frameworks were valid and usable.
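
The handbook does not state which statistic was used to measure inter-rater consistency. As an illustration only, the sketch below computes simple proportion agreement and Cohen's kappa (an assumed choice of statistic) for 2 raters who each classify the same items. The rating labels, variable names and function name are hypothetical.

```python
from collections import Counter


def agreement_and_kappa(rater_a, rater_b):
    """Return (proportion agreement, Cohen's kappa) for two raters' labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must classify the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: based on how often each rater used each label
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return observed, 1.0  # both raters used one identical label throughout
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical ratings of 8 items on an assumed 3-point demand scale
tdr_1 = ["low", "low", "mid", "high", "mid", "low", "high", "mid"]
tdr_2 = ["low", "mid", "mid", "high", "mid", "low", "high", "low"]
observed, kappa = agreement_and_kappa(tdr_1, tdr_2)
```

Kappa is lower than raw agreement because it discounts the agreement that 2 raters would reach by chance given how often each uses each label.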

2.3.2 Development of the performance descriptors for the key stage 2 tests

Performance descriptors describe the typical characteristics of pupils whose performance is at the threshold of the expected standard. Performance descriptors for each test were created by subject-specialist test developers, in conjunction with teachers and curriculum experts, using a variety of sources. The performance descriptors were reviewed and validated by a panel of teachers. They were published in the test frameworks in June 2015. This meant that schools were able to refer to them before the first year of the tests in 2016.

An exercise was sent to attendees of the standard-setting meeting for the 2016 tests, in which between 72% and 95% of participants indicated that they agreed or strongly agreed that the performance descriptors contained sufficient detail for mathematics, English reading and English grammar, punctuation and spelling. These agreement ratings provide validity evidence that the performance descriptors are fit for purpose.

2.3.3 Development of the contents standards for the phonics screening check

The contents standards for the phonics screening check were developed by phonics experts and are set out in the assessment framework. At 2 standard-setting exercises, each involving around 25 teachers, the standard they describe was agreed as an appropriate expected standard for children at the end of year 1.

3 The test development process

The test development process is based on an item-banking model. Each item is taken through a series of phases to establish whether it is valid, reliable and meets the purposes of the test. Items meeting these criteria are considered for possible inclusion in a live test. Any suitable items not used in a live test are kept in the item bank for potential use in future live tests. Live tests must meet the test specification in the test framework for each subject.

The test development process takes between 2 and 3 years to complete. An overview is provided in Figure 1 and Figure 2. Full details of each stage are provided in sections 4 to 13. Depending on how predictable an item’s performance is, it may not go through the entire process.

3.1 STA test development staff

Test development is conducted by STA’s in-house test research and development division. This team comprises assessment experts, psychometricians, TDRs, test designers and project staff. STA’s technical specialists have a range of relevant experience, including work on a number of assessment programmes, expertise in the theories and techniques of assessment, and classroom experience.

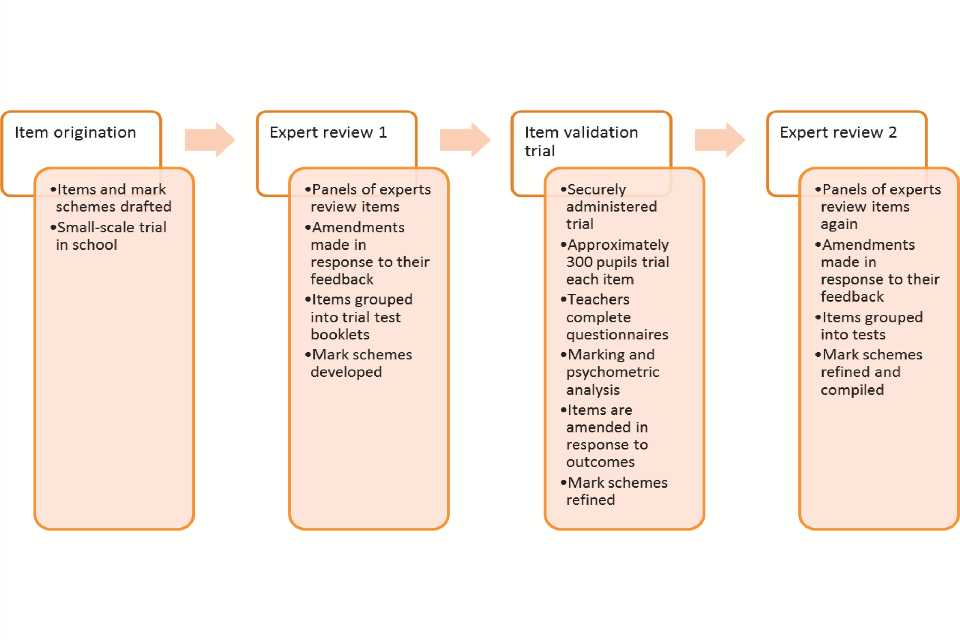

Figure 1: Overview of test development processes

Transcript of Figure 1: Overview of test development processes

Stage 1 - Item origination:

- Items and mark schemes drafted

- Small-scale trial in schools

Stage 2 - Expert review 1:

- Panels of experts review items

- Amendments made in response to their feedback

- Items grouped into trial test booklets

- Mark schemes developed

Stage 3 - Item validation trial:

- Securely administered trial

- Approximately 300 pupils trial each item

- Teachers complete questionnaires

- Marking and psychometric analysis

- Items are amended in response to outcomes

- Mark schemes refined

Stage 4 - Expert review 2:

- Panels of experts review items again

- Amendments made in response to their feedback

- Items grouped into tests

- Mark schemes refined and compiled

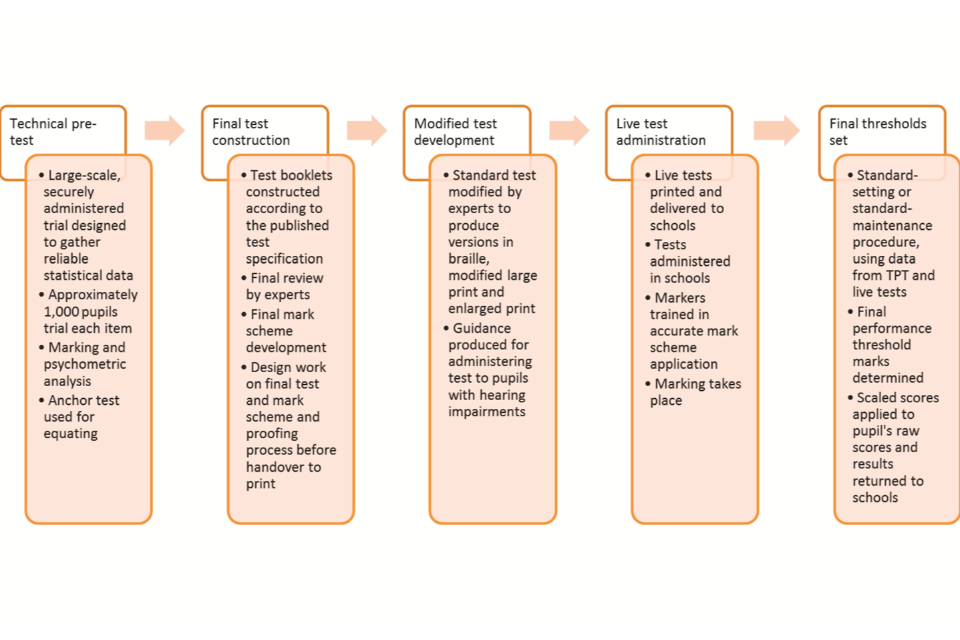

Figure 2: Overview of test development processes (continued)

Transcript of Figure 2: Overview of test development processes (continued)

Stage 5 - Technical pre-test:

- Large-scale, securely administered trial designed to gather reliable statistical data

- Approximately 1000 pupils trial each item

- Marking and psychometric analysis

- Anchor test used for equating

Stage 6 - Final test construction:

- Test booklets are constructed according to the published specification

- Final review by experts

- Final mark scheme development

- Design work of final test and mark scheme and proofing process before handover to print

Stage 7 - Modified test development:

- Standard test modified by experts to produce versions in braille, modified large print and enlarged print

- Guidance produced for administering test to pupils with hearing impairments

Stage 8 - Live test administration:

- Live tests printed and delivered to schools

- Tests administered in schools

- Markers trained in accurate mark scheme application

- Marking takes place

Stage 9 - Final thresholds set:

- Standard-setting or standard-maintenance procedure, using data from the technical pre-test (TPT) and live tests

- Final performance threshold marks determined

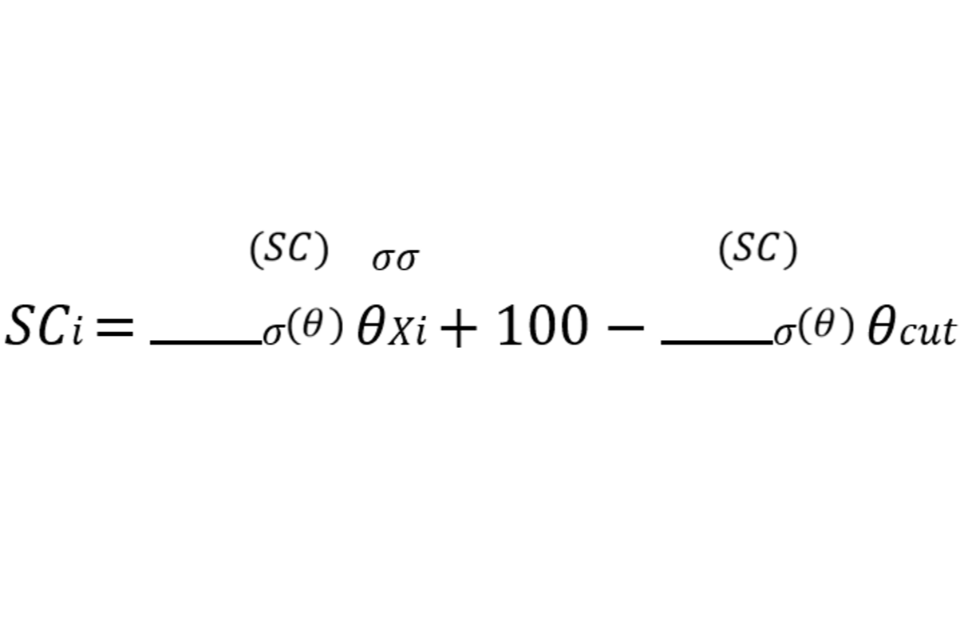

- Scaled scores applied to pupils’ raw scores and results returned to schools

3.2 STA’s item bank

Test materials and data are stored securely in a searchable database, known as an item bank, which can only be accessed by designated STA staff using DfE computers. The security of the item bank is one aspect of overall test confidentiality, which is essential for the integrity and validity of the tests.

STA developed its item bank database in August 2012. It was designed to improve the efficiency and effectiveness of the test development process and stores all information about items in development.

An item-banking test development model means that items, questions or texts are trialled and held in a single repository, available for selection to meet the test specification. The use of an item-banking test development model contributes significantly to the efficiency of test construction and the validity and reliability of the tests, by:

- enabling STA to meet the test specification criteria precisely, because test papers are constructed using information from trialling

- ensuring items that do not perform well in trialling are not included in the live tests

- allowing selection of items in any combination to create the optimum test that meets the test specification

In addition, over time, the item bank will hold a volume of items that are appropriate and ready for inclusion in a live test. Provided the item bank has sufficient materials, some stages of the process may not be needed each year, resulting in cost savings.
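
As a rough illustration of selecting trialled items from a single repository against a specification, the sketch below filters out items that performed poorly in trialling and then checks a candidate selection against target marks per content area. The field names, the facility limits and the target marks are assumptions made for the example. They are not taken from STA's item bank or the test frameworks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BankedItem:
    item_id: str        # hypothetical identifier
    content_area: str   # hypothetical content-domain label
    marks: int
    facility: float     # proportion of available marks gained in trialling


def usable_items(bank, min_facility=0.1, max_facility=0.9):
    """Exclude items whose trial facility falls outside the assumed limits."""
    return [i for i in bank if min_facility <= i.facility <= max_facility]


def marks_by_area(selection):
    """Total the marks in a selection for each content area."""
    totals = {}
    for item in selection:
        totals[item.content_area] = totals.get(item.content_area, 0) + item.marks
    return totals


def meets_specification(selection, target_marks):
    """True when the selection's marks per area equal the assumed targets."""
    return marks_by_area(selection) == target_marks


bank = [
    BankedItem("A1", "number", 1, 0.72),
    BankedItem("A2", "number", 2, 0.55),
    BankedItem("A3", "geometry", 1, 0.97),  # too easy under the assumed limits
    BankedItem("A4", "geometry", 2, 0.41),
    BankedItem("A5", "statistics", 1, 0.63),
]
candidates = usable_items(bank)
target = {"number": 3, "geometry": 2, "statistics": 1}
selection_ok = meets_specification(candidates, target)
```

A real specification would constrain more than marks per content area (for example, cognitive domain and response type), but the same pattern of filtering on trial data and then checking totals would apply.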

4 Item origination

Item origination is the initial development process for items (and texts for English reading). This first stage of development can be outsourced to external suppliers, managed by STA staff, or undertaken in-house. For phonics, there is a database of grapheme-phoneme correspondences that can be used in the check.

4.1 Planning for item writing

STA conducts regular reviews of all materials in the item bank to ensure enough items are available to cover the full programme of study for each subject. This takes into account all aspects of the test specification, such as coverage of the cognitive domain. STA decides whether to work with external suppliers or to proceed with internal item writing to provide the items required.

4.2 External item writing

STA holds a framework of item-writing agencies, who are external suppliers with experience of developing assessment material and educational resources. There are currently 6 item-writing agencies on the item-writing framework. This approved list of suppliers is renewed every 4 years.

Item-writing agencies from the framework may bid for work when invited by STA. The agencies are directed to publicly available documents such as test frameworks, past tests and sample materials to exemplify item format and design requirements. Information is also provided relating to the conventions of question wording. Further guidance about the scope for innovation and development of new items or item formats may be provided to prospective item-writing agencies. The provision of this guidance ensures continuity of approach between different item-writing suppliers and also between years of testing.

Bids are scored according to cost and the technical requirements below:

- expertise in item development

- project management

- example materials

The contract is awarded to the bidder who achieves the highest overall score. After the award of the contract, the lead test developer for the project and a project manager work with the item-writing agency to oversee the materials being developed.

Through a series of supplier meetings and project plans, initial ideas for item writing are discussed, requirements are clarified and refined, and guidance is provided on draft items and mark schemes. The lead TDR for the project provides the main review of items, taking into account comments from curriculum advisors.

The project manager ensures the overall delivery of contractual requirements. It is through this dialogue with suppliers that the majority of guidance about item writing is provided as curricular or assessment issues arise.

There are 2 principal points of review during the item-writing contract, during which all items under development will be scrutinised by the subject’s test development team in consultation with curriculum advisors, where appropriate. The first of these reviews takes place before the small-scale trial, which is conducted in schools by the item-writing agency. The second review takes place immediately after the small-scale trial (see section 4.5).

Following these reviews, specific revisions are requested to ensure the items meet the requirements of the test framework and national curriculum and are suitable for inclusion in national curriculum assessments.

For English reading, an additional text selection meeting is held during the early stages of the contract. During text selection, TDRs, curriculum advisors and teachers review proposed texts and agree which are suitable to be used. Proposed texts are also submitted to an external equity advisor review group and to the modified test agency (MTA) to ensure the subject matter is appropriate for all pupils. This provides evidence that texts included in the test are fair and accessible.

During the item-writing contract, design templates are provided to the supplier and a dialogue is maintained between the design team at STA and the item-writing agency.

Details of item-writing agencies are provided in Appendix A: technical appendix.

4.3 Internal item writing

Item writing is sometimes conducted within STA. This internal item writing may be targeted to:

- address gaps in the balance of items in the item bank

- develop specific item types for research purposes

- create items to address new requirements

For more straightforward areas of the curriculum, such as the arithmetic paper in mathematics, item writing is routinely undertaken internally.

Items are drafted by subject-specialist TDRs. Depending on the nature of the item-writing project, they may do this with reference to specific published sources, international tests or research evidence. Sometimes a familiar item format is used, with changes made to the target word or context. Indicative mark schemes are developed alongside the items. Once drafted, the items and mark schemes are subject to several stages of internal review. Some items will be considered suitable without changes; for others, amendments may be proposed. Some items are removed at this stage, which may necessitate further item writing.

Following amendments to items, a draft is circulated to curriculum advisors who provide comments via a written report. Subsequent amendments are made before the items proceed to a small-scale trial in schools. Very straightforward items, such as phonics, spelling or simple arithmetic, do not need to go through this small-scale trial.

4.4 Initial mark schemes and guidance

Mark schemes are developed, reviewed and revised alongside the initial drafts of items. At this early stage, these mark schemes outline the general correct answers. Example pupil responses are gathered from subsequent large-scale trials to exemplify acceptable points or to add acceptable responses to the mark schemes. Early development of the mark schemes is an important part of the process. It ensures that effort invested in developing test items is not wasted on items that are later deemed unmarkable.

Since the phonics screening check is an oral check, there are no pupil responses to go through to exemplify acceptable points. However, scoring guidance is published to outline all acceptable pronunciations.

4.5 Small-scale trialling

Where appropriate, items are trialled with a small number of pupils to provide an indication of the clarity of question wording and text accessibility. This provides the first small-scale evidence of validity for each item. Test development research staff conduct trials of items originated in-house. Item-writing agencies carry out trials for the materials sourced externally.

Approximately 60 pupils of the target age group, from at least 2 schools, trial each test item. Consideration is given to variables such as school type, geographical location and attainment when selecting schools for trialling. This ensures that, as far as is possible within a small sample, a range of characteristics is represented. During the trials, pupils complete booklets of items. Some pupils are interviewed to gather their qualitative feedback. Teachers also comment on the materials.

After the trial, pupil responses are analysed for a variety of features such as:

- the proportion of correct responses

- omission rates

- common responses and errors

- apparent misunderstandings of the item or text content

This small-scale information is communicated, alongside the pupil and teacher feedback, in an internal report, which gives further recommendations for amendments to items and the indicative mark schemes.
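
The response features listed above can be summarised with simple counts. The sketch below is illustrative only: it assumes each pupil's response to an item is recorded as a string, with `None` for an omitted response. The function name and the sample data are hypothetical.

```python
from collections import Counter


def summarise_item(responses, correct_answer, top_n=3):
    """Summarise one item's trial responses.

    `responses` holds one entry per pupil; None marks an omitted response.
    Proportions are taken over all pupils who were given the item.
    """
    if not responses:
        raise ValueError("no responses to summarise")
    total = len(responses)
    attempted = [r for r in responses if r is not None]
    n_correct = sum(r == correct_answer for r in attempted)
    # Tally incorrect responses so that recurring errors stand out
    wrong = Counter(r for r in attempted if r != correct_answer)
    return {
        "proportion_correct": n_correct / total,
        "omission_rate": (total - len(attempted)) / total,
        "common_errors": wrong.most_common(top_n),
    }


# Hypothetical responses from 10 pupils to an item whose answer is "24"
responses = ["24", "24", "42", None, "24", "42", "20", "24", None, "24"]
summary = summarise_item(responses, correct_answer="24")
```

With a sample of around 60 pupils these figures are indicative only, which is why they are read alongside the pupil interviews and teacher comments.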

Once recommendations have been discussed and agreed with STA test developers, the final version of the item is submitted for inclusion in the item bank, along with item classification tables detailing item characteristics. The item then becomes available for potential selection for a larger-scale trial.

4.6 Item classification

At the end of the item origination process, items are securely stored within STA’s item bank database. Here they are assigned a unique identifier code and additional item metadata is stored. This is used to classify the items and monitor the characteristics of selected items against the test specification.

Item metadata may change as the item moves through the development process and any changes are recorded in the item bank. Further details of the classifications used can be found in the test frameworks.
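
One way to picture an item record whose metadata changes are logged is sketched below. It is not a description of STA's database: the class, the field names and the identifier format are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ItemRecord:
    item_id: str                                  # unique identifier (format assumed)
    metadata: dict = field(default_factory=dict)  # current classification values
    history: list = field(default_factory=list)   # (stage, key, old value, new value)

    def update(self, stage, key, new_value):
        """Change one metadata value and record the change against a stage."""
        old_value = self.metadata.get(key)
        if old_value == new_value:
            return  # nothing changed, so nothing is logged
        self.metadata[key] = new_value
        self.history.append((stage, key, old_value, new_value))


item = ItemRecord("M-0001", {"content_area": "number", "marks": 1})
item.update("expert review 1", "marks", 2)
item.update("expert review 1", "marks", 2)  # same value again: not logged
item.update("item validation trial", "response_type", "short answer")
```

Keeping the old and new values together with the stage makes it possible to see how an item's classification changed between trials.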

5 Item design

Item design refers to an item’s appearance and the conventions used to maintain design consistency within, and between, items.

5.1 Interfaces and processes

Design begins during item origination (see section 4). It is an important consideration throughout the test development process because of its contribution to pupil experience, item accessibility and item validity, including the avoidance of construct irrelevant variance (see section 6.3.3).

Item-writing agencies produce items, including graphical elements, according to STA style and design guides. Items written in-house are typeset and designed by STA’s test design team. Design guidance is given to item-writing agencies to ensure aspects of design, such as font use and layout, are applied to items as early as possible and before being presented to pupils. In this way, item design is consistent throughout the trialling and does not influence the answers pupils may give, contributing to the reliability of trialling data. There is also comprehensive guidance around suitable contexts and themes.

Following each expert review (see section 6), STA may amend item wording, layout or graphical elements. The design team carries out amendments to the items and commissions additional images from external illustrators as necessary. Since items may change significantly during development, flexibility and common standards are important. STA’s designers work on materials using specific software to produce editable documents capable of being amended at each test development stage.

Once amended, items are placed in booklets for use during in-school trialling and live testing. The booklet format is developed in conjunction with the print and logistics, marking and trialling teams to ensure materials are compatible with live test and trialling requirements and specifications. From the first stage of trialling (the item validation trial), materials are produced as print-production ready. They are compatible with the scanning specifications of STA’s live marking supplier, so they do not require amendment for use in live tests. Mark schemes are developed as Word documents throughout the development process, as this allows easy alteration by TDRs. Prior to being used in live test administration and entering the public domain, mark schemes are typeset.

5.2 Key considerations

Item design can affect validity. Items that are poorly laid out are difficult to comprehend and access.

All STA test materials are developed to conform to relevant design principles, such as:

- ensuring the purpose of each task is clear, with due consideration given to readability and legibility

- ensuring tasks address assessment criteria explicitly, without unnecessary prescription

- avoiding a requirement for pupils to demonstrate skills that are not essential to the subject being tested

In addition, materials are produced so that they are suitable for large-scale print reproduction, scanning, on-screen marking and final distribution online. STA produces materials that meet the final print specifications using:

- colour swatches (Pantone Matching System)

- fonts (Adobe OpenType font library)

- design software (Adobe Creative Cloud and Adobe PDF)

Stylistic conventions (such as the use of bold, spacing of elements of an item, appearance of tables) are detailed in STA design guidelines. This ensures consistency within and between the items of a particular subject, and also between subjects as far as is practicable.

Consistent use of writing style and design guidelines is also important for the item bank development process (see section 3).

5.3 Style and design guides

Style and design guidelines provide a basis for item development so that items from different item-writing agencies are consistent. The guidelines are active documents, updated to reflect changes in the use of language and design resulting from feedback from the trialling and development process.

5.4 Design of graphical elements

Items include a variety of graphical elements, including photographs, illustrations (including diagrams), tables and graphs. Photographs and illustrations can be produced by an item-writing agency or commissioned from an external design supplier. Photographs of pupils are only taken and used when parental permission has been sought and obtained. Copyright of photographs and illustrations is always transferred to STA if they are commissioned from an external agent. Records of these copyrights are maintained and stored in case of future queries.

Tables and graphs are produced as an integral part of an item alongside the text and are subject to their own design constraints. The appearance of tables, graphs and diagrams is standardised within subjects and efforts have also been made to standardise them between subjects. Where differences exist, they are a consequence of subject-specific requirements.

5.5 Copyright

The use of third-party copyright materials is limited as much as possible. STA is able to use third-party copyright materials relatively freely for the purposes of assessment, in accordance with Section 32(3) of the Copyright, Designs and Patents Act 1988. However, final test materials, once used in live testing, are available free of charge to schools in the collection of past test materials on GOV.UK under Open Government Licence (OGL).

Under the terms of the OGL, materials are available for open use, which may infringe the copyright of third-party copyright holders. Therefore, where possible, images and illustrations are directly commissioned for the purpose of testing, so the copyright is transferred to STA.

Materials that cannot be directly commissioned have their third-party source recorded by the relevant subject team and acknowledged on the final test materials. This information is included in a ‘Third-party copyright report’ that accompanies the materials when released under OGL.

6 Question and item review

Item review is the process of checking the content, wording and layout of questions and items to ensure appropriateness, validity, clarity and accuracy.

6.1 Stages of review

Items and their mark schemes are reviewed throughout the test development process by a wide team of internal and external parties to ensure they assess the national curriculum appropriately. Items are first reviewed through informal trialling during initial development, as described in section 4. They then enter the expert review process.

The expert review process involves independent quality assurance of test materials. It is critical to the validity evidence that supports the tests. Reviews usually take place:

- before the item validation trial (IVT) – expert review 1

- before the technical pre-test (TPT) – expert review 2

- after the live test is constructed – expert review 3

The expert panels review and comment on the suitability of test items, identify possible issues and suggest improvements. The dates and composition of the panels who took part in the expert review of materials at each stage of development are detailed in Appendix A: technical appendix.

Security is of critical importance during the expert review process and all those involved in the process are required to sign confidentiality agreements.

6.1.1 Panel definition and set-up

Three separate expert panels review the items at both expert review 1 and expert review 2. Each panel is provided with guidance about the purpose of their review and the intended outcomes of the meeting.

Panel members are recruited through emails to schools, local authorities, subject associations and universities. The panels are regularly updated with new reviewers.

The expert review panels are chaired by a TDR.

6.1.2 Teacher panel

Teacher panels are designed to gather feedback on how materials reflect current classroom practice. The panels comprise practising teachers, headteachers, or teachers who have recently left the profession. They have experience of teaching the target age group for the test, meaning that STA is gathering relevant and valid advice. The panels consist of individuals of differing experience, from differing school types and geographical locations, so that a range of views is gathered. The panels represent, as far as is possible within a small group, the range of teachers and school types that will encounter the live tests. The panel is asked to comment on:

- how the items reflect current classroom practice

- whether the materials are of appropriate difficulty for the age group and ability range

- whether the materials are a suitable assessment of the programme of study

- the format, design and layout of items

The teacher panel receives the materials for review in secure conditions on the morning of the meeting. The panel is given time to read and work through the items and mark up their copies. This process allows members to review the materials as they would on test day. Members are asked to comment on the suitability of draft materials and coverage of the curriculum, and to suggest any improvements that would make it a fairer assessment. After reading the materials, the panel works as a group to comment on each individual item.

6.1.3 Test review group

The test review group (TRG) comprises:

- subject specialists

- local authority advisors

- multi-academy trust advisors

- markers

- practising teachers and headteachers who have previously served on teacher panels

Approximately one third of TRG panel members are changed each time, so that each person stays on the panel for approximately 3 years.

The group comments on:

- how the items reflect classroom practice

- the technical accuracy of the content

- whether the materials are of appropriate difficulty for the age group and ability range

- whether the materials are a suitable assessment of the programme of study and the programme of study references are accurate

- the difficulty of test items in comparison with previous years

Both TRG and inclusion panel members (see section 6.1.4) receive test materials, mark schemes and a report template at least one week before the meeting takes place. They review each item, identify any issues and consider improvements in advance of the meeting. At the meeting, they provide feedback on each item, listen to each other’s perspective and attempt to reach a consensus on each item.

6.1.4 Inclusion panel

The inclusion panel is composed of experts in teaching pupils with special educational needs and disabilities (SEND) and English as an additional language (EAL). Typically, the panel includes at least one each of:

- an expert in teaching pupils with visual impairments

- an expert in teaching pupils with hearing impairments

- an educational psychologist

- those with expertise in teaching pupils with autistic spectrum disorders

- those with expertise in teaching pupils with dyslexia

- those with expertise in teaching pupils with EAL

- an equity advisor

The panel reviews the accessibility of the standard test for pupils with this range of needs.

6.1.5 Decision making after external review

Resolution meetings are held shortly after expert review meetings 1 and 2. Resolution meetings are chaired by the test developer leading that particular test, and are attended by:

- other TDRs or research assistants for the subject

- the deputy director, head of test development research or head of assessment research and psychometrics

- a psychometrician or senior psychometrician

- curriculum advisors

- a project co-ordinator

- an assessment researcher (optional)

The meetings consider all of the evidence from the expert review panels and resolve all the issues raised through the expert review stage, deciding what changes will be made to the materials as a result. Evidence from item validation trialling may also be considered if it is available.

The changes suggested by different reviewers may contradict each other. The lead TDR is responsible for deciding which recommendations to implement, taking all the evidence into account. If no solution can be found, the item is likely to be removed from the test development process.

6.1.6 External review at the end of the development process

A single expert panel reviews the items at the end of the test development process (expert review 3). It comprises members of the teacher panel, test review group and inclusion panel. The purpose of expert review 3 is to review the suitability of the constructed test, with specific consideration of overall difficulty and breadth of coverage of the content domain.

At expert review 3, the panel receives the test booklets, mark schemes and guidance documents on the morning of the meeting. The panel is given time to read and work through the test booklets before providing whole-group feedback on the suitability of the test. Test materials are not sent out in advance of expert review 3. This is to ensure the live test remains secure and to allow panellists to review the materials as they would on test day. The dates and composition of the panels who reviewed materials at expert review 3 are detailed in Appendix A: technical appendix.

6.2 Curriculum and assessment advisors

Each subject and key stage usually has one curriculum advisor reviewing materials and one assessment advisor supporting development processes. Curriculum advisors are recruited to support the quality assurance of items throughout the test development process. They work alongside STA’s TDRs to ensure the tests provide an accurate, valid and appropriate assessment of the curriculum. Assessment advisors support TDRs in areas such as item writing and checking for enemies (questions that could give the answers away to other questions).

Curriculum and assessment advisors need to have:

- substantial and recent expertise in their chosen subject at the relevant key stage

- an understanding of the national curriculum, its structure and ongoing strategy of improvement

- an understanding of adjacent key stages within their chosen subject area, such as an understanding of KS1 or key stage 3 for a role that focuses specifically on KS2

- an understanding of the national curriculum with respect to teacher assessment and the exemplification of national standards that support reliability, validity and consistency

- an understanding of the issues being considered in any recent and forthcoming national curriculum and assessment reviews and how they may impact on the assessments

- the ability to communicate effectively with stakeholders, providing constructive feedback at meetings

6.2.1 Procurement process

Curriculum advisors are appointed through an open competitive procurement exercise advertised on Contracts Finder.

Applicants are required to respond to a series of questions to assess their skills and expertise. Responses are scored by the relevant subject team from the test development research unit. Contracts are awarded to those with the highest overall scores.

6.2.2 Input to test development

Curriculum advisors typically review materials at the following stages of test development:

- initial drafts of questions and mark schemes from item-writing agencies

- expert review 1, including participation in resolution meeting 1 and project board 1 (see section 10)

- expert review 2, including participation in resolution meeting 2 and project board 2

- final test review meeting

- project board 3

- mark scheme finalisation meeting

- marker training materials development meetings

The test development stages are summarised in section 3.

6.3 Main areas for item review

The factors taken into account by STA and its reviewers are explained below.

6.3.1 Programme of study

Items are reviewed to ensure the programme of study references, as categorised in the content domain of the test specification, are applied accurately. This helps STA monitor the overall balance of curriculum coverage in the tests.

6.3.2 Test specification

Items and tests are considered against the criteria laid out in the test specification, such as:

- item type

- cognitive domain

- context of questions

6.3.3 Construct irrelevant variance

Construct irrelevant variance (CIV) occurs when pupils interpret an item in an unintended way. As a result, their performance on the item measures something other than that intended by the test. This means that the item can become unexpectedly easy or difficult and does not contribute to the aim of the test.

Reviewing an item for CIV involves looking for unintended ways in which a pupil might respond to the question and deciding whether these are valid misinterpretations of the item.

6.3.4 Distractors in selected response items

Selected response items (for example, multiple-choice items) require careful review of distractors (incorrect answer options). There are many aspects to be considered for distractors, which include:

- the comparative length and complexity of language of distractors and correct answer options

- whether distractors are plausible in the context of the question – for example, that they work semantically and syntactically as answers to the question

- whether any of the distractors could be regarded as alternative correct responses

6.3.5 Accessibility of questions

All reviewers consider the needs of pupils with SEND and EAL, and some reviewers are specifically appointed for their expertise in one of these areas. STA applies the principles of universal design, where the standard versions of the tests are designed to be accessible to as many pupils as possible.

Modified versions of the tests are developed for pupils with certain types of SEND (see section 11). However, there are a significant number of pupils with SEND who will use the standard version of the tests.

6.3.6 Other considerations

In addition to the factors explained in detail above, reviewers take account of the following when considering the suitability of an item:

- accuracy

- clarity of question text

- formatting of item text

- clarity of instructions

- appropriateness of contexts

- clarity and accessibility of diagrams, photos and illustrations

- layout of questions

- number of marks

- appropriateness of difficulty level

- comparability with questions from previous years

- compliance with house style

7 Trialling

STA conducts trials with samples of pupils to assess whether items and their associated coding frames (see section 7.5.1) are valid assessments of pupils’ knowledge and skills.

Administration of test trials, including coding, is outsourced to approved suppliers. However, STA maintains overall management of the trials to ensure they are conducted in a robust, reliable and confidential manner. The trials take place at the same point in the academic year as the live tests, to ensure that pupils have completed the programme of study.

Information from trialling is used to make decisions about whether an item enters the next stage of the test development process.

This section provides an overview of the IVT and TPT. Informal trialling also occurs during item origination, as described in section 4.

7.1 Item validation trial

An IVT is the first large-scale trial in the test development process. The purpose of the IVT is to determine each item’s suitability and general difficulty for the target age group and ability range. Qualitative and quantitative data is collected to inform the development of each item and its mark scheme. Exceptions to this may include items for which item properties are well established – for example, phonics and some arithmetic items.

For IVT, items are grouped into trial booklets that reflect coverage in the live test. Each IVT item is administered to approximately 300 pupils. The sample of schools that participate in an IVT is as representative as possible of the characteristics of the national cohort in terms of school attainment and region. The dates and numbers involved in the IVTs are provided in Appendix A: technical appendix.

There are 3 possible outcomes for each item following the IVT:

- The item is progressed to the next stage of the development process with no amendments.

- The item is progressed to the next stage of the development process with some amendments.

- The item is archived because the evidence collected indicates that the item is not valid, reliable or of an appropriate level of difficulty and cannot be improved.

7.1.1 IVT trial design

Not all items for all subjects require an IVT, as certain item types and formats are more predictable in terms of performance and therefore go straight to TPT. All English reading texts and items are taken through an IVT.

IVT booklets do not mirror the live test specification, but are designed to enable a large volume of items to be trialled.

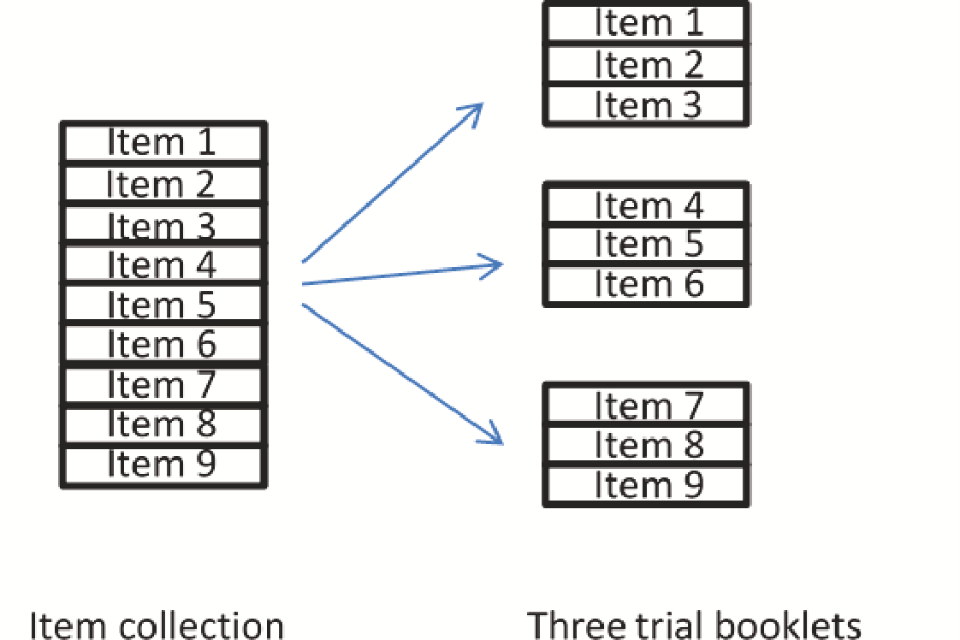

An example of a trial design is presented in Figure 3. In the example, a collection of 9 items is used to make 3 trial booklets, with each item appearing once in the entirety of the trial.

Figure 3: IVT design
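
The design in Figure 3 can be sketched in a few lines of Python. This is an illustration only, not STA's tooling: the item identifiers and the function name `build_ivt_booklets` are hypothetical, and the sketch assumes the items divide evenly between booklets.

```python
# Hypothetical item identifiers; the handbook does not name individual items.
items = [f"item_{n}" for n in range(1, 10)]  # a collection of 9 items


def build_ivt_booklets(items, n_booklets):
    """Split items into booklets so that each item appears exactly once."""
    size, remainder = divmod(len(items), n_booklets)
    if remainder:
        raise ValueError("items must divide evenly between booklets")
    return [items[i * size:(i + 1) * size] for i in range(n_booklets)]


ivt_booklets = build_ivt_booklets(items, 3)

# Count how many booklets each item appears in across the whole trial.
appearances = {item: sum(item in b for b in ivt_booklets) for item in items}
```

Here `appearances` records one appearance per item, which is the defining property of the IVT design described above.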

7.2 Technical pre-test

The TPT is the largest trial in the test development process. The purpose of the TPT is to gather detailed statistics to help support the final test construction process. Qualitative and quantitative data is collected about each item to determine suitability for a live test. The outcomes from the TPT are used to inform the final mark scheme construction. Statistics are used to link relative difficulty between tests from one year to the next as a comparability measure. This is achieved by including common ‘anchor’ items or booklets (see section 7.3.1) which are used in consecutive TPTs.

For a TPT, items are grouped into trial booklets that reflect coverage in the live test specification. Each TPT item is administered to approximately 1,000 pupils. The sample of schools that participate in the TPT is as representative as possible of the national cohort of pupils, in terms of school attainment and region. The dates and numbers involved in the TPTs are provided in Appendix A: technical appendix.

There are 3 possible outcomes for the items following a TPT:

- The item is approved for use in a live test.

- The item is not approved for use in a live test and is amended and re-trialled.

- The item is not approved for use in a live test and is archived because the evidence collected indicates that the item is not valid, reliable or of an appropriate level of difficulty, and cannot be improved.

All items included in live tests will have been subject to at least one TPT. The TPT will also have included anchor items or booklets to link standards across years, with the exact approach varying between subjects.

7.2.1 TPT trial design

The TPT trial design is more complex than that used for IVT. The same item appears in more than one booklet within the suite of trial materials. This helps to quantify the effect of any differences in overall ability between the groups of pupils completing the different booklets.

Designing the trial so that items appear in more than one trial booklet also minimises the possibility that the performance of the item has been influenced by other items in the same booklet (item ‘context’) or by where the item appeared in the booklet (item ‘position’). The aim is that each item is trialled twice.

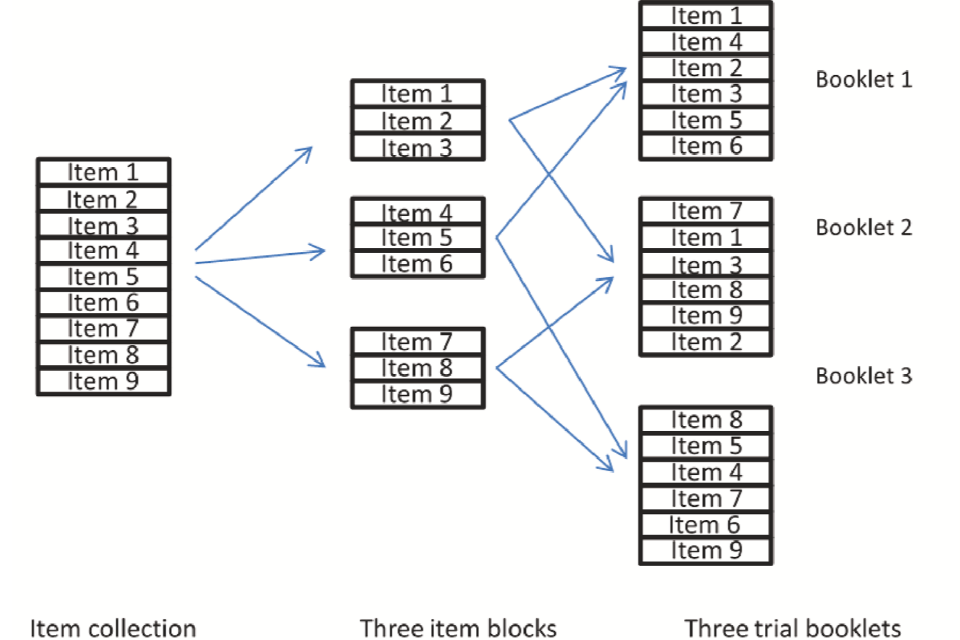

Figure 4 shows a simplified example of a TPT design. This design ensures that each item appears twice at different positions in the trial. The trial design dictates that any booklet within the trial is attempted by 500 pupils, so that each item will be trialled by 1,000 pupils in total.

Figure 4: A sample TPT design

In Figure 4, a collection of 9 items is divided into 3 item blocks. The 3 blocks are used to make 3 trial booklets by combining any 2 blocks. Items appear twice in the trial booklets at different positions and in the context of other items. The TPT can vary in size depending on the number of items that need to be trialled.
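
The simplified design in Figure 4 can be sketched as follows. This is an illustration under stated assumptions rather than STA's actual trial design tool: the item identifiers and the function name `build_tpt_booklets` are hypothetical, and the cyclic pairing of blocks is one assumed way of ensuring that every block appears once at the start of a booklet and once at the end.

```python
# Hypothetical item identifiers, divided into 3 blocks of 3 items.
items = [f"item_{n}" for n in range(1, 10)]
blocks = [items[0:3], items[3:6], items[6:9]]

PUPILS_PER_BOOKLET = 500  # figure taken from the description of Figure 4


def build_tpt_booklets(blocks):
    """Pair each block with the next one (cyclically).

    Every block is used once in first position and once in second position,
    so each item is trialled twice, at different positions and alongside
    different items.
    """
    n = len(blocks)
    return [blocks[i] + blocks[(i + 1) % n] for i in range(n)]


tpt_booklets = build_tpt_booklets(blocks)

# Position of each item within every booklet that contains it.
positions = {
    item: [b.index(item) for b in tpt_booklets if item in b] for item in items
}

# Each booklet is attempted by 500 pupils, so 2 appearances give 1,000 pupils.
pupils_per_item = {
    item: PUPILS_PER_BOOKLET * len(pos) for item, pos in positions.items()
}
```

In this sketch, every item has 2 distinct entries in `positions` and a value of 1,000 in `pupils_per_item`.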

For KS2 English reading, the texts are treated like items. They can be moved into different combinations following the TPT, but will always follow the order of ‘easy’, ‘mid’, ‘hard’ in terms of demand. Texts are moved into different combinations on the basis of a qualitative review by test developers and an expert review panel.

7.3 Trial booklet construction

Trial booklets for IVT and TPT are constructed according to the chosen trial design and test specification for each subject.

For TPTs, statistical data gathered in the IVT is also reviewed to ensure the trial booklets are of an appropriate difficulty level.

Within each test booklet, items are generally ranked in difficulty order, with the exception of English reading where items appear according to the chronology of texts. However, at this stage it is not uncommon to have some easy items throughout the booklets. This can help ensure that low-attaining pupils are not discouraged too early on but are motivated to continue even when the overall difficulty of the items may be increasing.

Booklets are designed and scrutinised to ensure:

- there is no overlap of items that may prompt or support the answer to other items

- each contains an appropriate range of different item types, as set out in the test specification

- contexts that involve names of pupils include a mix of pupils of different genders and ethnicities

7.3.1 Anchor test

Anchoring is the process that compares the performance of pupils in the trial on a standard (‘anchor’) test or set of anchor items that are administered as part of the TPT every year. This process is used to maintain standards year on year. The performance on the anchor items is used to ensure equivalent relative difficulty between successive live tests. The anchor items are selected according to the test specification for each subject so that they are representative of a live test.

The anchor items used in the TPT for the 2016 tests will continue to be used for subsequent TPTs. Some of these were developed alongside the live test materials and went through the same development stages (see section 3). Some anchor items from previous years that met the requirements of the new national curriculum were reused.

Anchor tests are kept secure to ensure they will not have been seen by pupils involved in trialling in advance of the trial. English reading test TPTs use an external anchor booklet. External anchoring of TPTs involves having an additional test, or test booklet, which is attempted by a specific sample of pupils during the TPT window.

An alternative to external anchoring is to include anchor items within the trial booklets (internal anchoring). This model was used for the TPTs in mathematics and English grammar, punctuation and spelling.

Anchor items need to be indistinguishable from the items being trialled, so that pupils approach all items in the same way. The anchor items are marked in the same way as trial items in the TPT.

Anchor items need to be reliable, and the coding frames (described in section 7.5) must be unambiguous so they can be coded consistently from year to year. By keeping the administration and coding of the anchor test consistent, the performance of items should not vary considerably. If STA detects variance, test developers and psychometricians will investigate possible causes. Reasons could include:

- differences in the sample

- the position or context of an item in the test paper

- changes in the curriculum being taught

- unforeseen differences in administration or marking

When differences are detected, the anchor item concerned is not used to link standards and may be replaced for future years.

7.4 Conducting the trials

7.4.1 Trialling agencies

Trialling agencies procured from the DfE trialling agency framework administer the trials.

To be included on the framework, agencies must:

- meet security standards

- demonstrate sufficient resources and experience

Details of the trialling agencies can be found in Appendix A: technical appendix.

7.4.2 Recruiting trial schools

From 2016, participation in trials has been a statutory requirement for schools. The samples for the trials are designed to be representative of the national cohort.

Samples were stratified by school attainment and region to ensure the sample reflects the population of assessing schools in these factors. The trialling agency then contacted local authorities and schools to recruit a suitable sample. Once the samples of schools had been recruited, chi-square tests for each stratifier were conducted to test the representativeness of the samples. This indicated that the achieved samples met STA’s specification.
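
A chi-square check of this kind can be sketched as below. The stratum labels, proportions and sample counts are invented for illustration. The 5% significance level is also an assumption, as the handbook does not state the level STA uses. The function name `chi_square_statistic` is hypothetical.

```python
def chi_square_statistic(observed, expected_proportions):
    """Chi-square goodness-of-fit statistic for one stratifier."""
    total = sum(observed.values())
    statistic = 0.0
    for stratum, count in observed.items():
        expected = total * expected_proportions[stratum]
        statistic += (count - expected) ** 2 / expected
    return statistic


# Invented figures: share of schools nationally in each attainment band,
# and the number of schools recruited to the trial in each band.
national_proportions = {"low": 0.25, "middle": 0.50, "high": 0.25}
achieved_sample = {"low": 22, "middle": 53, "high": 25}

# Standard 5% critical value of chi-square with 2 degrees of freedom
# (3 strata minus 1). The 5% level is an assumption of this sketch.
CRITICAL_VALUE_DF2 = 5.991

statistic = chi_square_statistic(achieved_sample, national_proportions)
no_evidence_of_mismatch = statistic < CRITICAL_VALUE_DF2
```

With these invented counts the statistic is 0.54, well below the critical value, so the test finds no evidence that the sample differs from the national distribution on this stratifier. A non-significant result does not prove the sample is representative. It only indicates that no mismatch was detected.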

7.4.3 Trial administration

Trial administrators are recruited and trained by the trialling agency. The trialling agency also prints and collates the trial booklets and securely distributes these to the trial administrators shortly before the trial starts. Trial administrators then conduct the trials in schools. Administration is intended to mirror live test administration.

Questionnaires are used to collect qualitative information from teachers and administrators about aspects of the test booklets and their performance. Any incidents that occur during the trialling are also noted on the administrator questionnaire, so that these can be taken into account when reviewing data from the trial.

The trialling agency produces a final report for the trial. This is composed of:

- an administration report detailing the achieved trial samples, the representativeness of the samples as per STA’s specification, and a summary of the trial

- a data report collating findings of the teacher and administrator questionnaire data

7.5 Trial coding

During trialling, STA codes pupils’ scripts rather than marking them, for both the IVT and the TPT, because coding provides in-depth quantitative information on how items have performed. Those reviewing pupil responses (coders) give a code to each specific response, so that test developers can identify the number of pupils in the trial giving that response.

Coding is therefore used to capture more detailed information on aspects of question performance than is possible using a standard mark scheme approach. At the analysis stage, these codes are converted into marks so the items can be scored. Coding can be done on paper or on screen.
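
The conversion of codes into marks at the analysis stage can be illustrated with a minimal sketch. The coding frame, code numbers and responses below are all invented. STA's actual coding frames are not reproduced in this handbook.

```python
from collections import Counter

# Hypothetical coding frame for a one-mark item: code -> (description, mark).
coding_frame = {
    1: ("correct response", 1),
    2: ("common incorrect response", 0),
    3: ("borderline response judged not creditworthy", 0),
    9: ("omitted", 0),
}

# Invented codes awarded to 8 pupils' responses to this item.
coded_responses = [1, 2, 1, 3, 9, 1, 2, 2]

# Coding keeps the detail: how many pupils gave each type of response.
code_counts = Counter(coded_responses)

# At the analysis stage, each code is converted to a mark so the item can be scored.
marks = [coding_frame[code][1] for code in coded_responses]
mean_score_percentage = 100 * sum(marks) / len(marks)
```

The same data therefore supports both views of the item: `code_counts` shows that the common incorrect response was given as often as the correct one, while `marks` gives the score data used in later statistical analysis.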

A wide range of codes may be used. Some examples of what codes are used to identify are outlined below:

- common, but incorrect, responses

- response types that are borderline creditworthy or non-creditworthy

- creditworthy responses beyond the key stage or programme of study

- misconceptions STA wishes to identify

- analysis of the performance of incorrect answer options in multiple-choice items

This is not carried out for the phonics screening check, as pupils respond to that check orally with teachers inputting the scores as they proceed through the check.

7.5.1 Development of coding frames

During item origination, items are developed with initial mark schemes. STA then converts these to coding frames, which are refined during the early stages of the process (see section 7.5.3).

7.5.2 Recruitment of trial coders

Trial coders are expected to have relevant teaching experience or experience of marking KS2 tests. It is also desirable that the majority of trial coders have some experience in previous rounds of trial coding.

Each coding team is headed by a lead coder, supported by a deputy lead coder. These senior coders are responsible for training coders and quality assurance of coding. They also deal with coding queries, passing these to TDRs for a decision where necessary.

7.5.3 Pre-coding

Before coding for each trial begins, pre-coding takes place, in which senior coders review the coding frames against a small sample of actual pupil responses from the trial. The purpose of pre-coding is:

- to test the draft coding frame on actual scripts and to find a range of responses that could act as exemplars in the coding frames

- to identify unexpected responses and to ensure they are catered for in the coding frame or coder training materials

- to make amendments to the coding frames ready for coder training

- to select scripts to develop any coder training materials

- to select practice scripts

- for TPT only, to select standardisation scripts and seeding or benchmarking scripts (see section 7.5.5)

For both IVT and TPT, a minimum of 100 scripts from the trial are provided for review at pre-coding. Onscreen coding may allow far more than this to be available.

At the end of pre-coding:

- coding frames, training materials and practice materials have been agreed and finalised

- for TPT only, standardisation scripts and seeding or benchmarking scripts have been agreed and finalised

- where applicable, themed response tables have been checked for agreement with amended coding frames and finalised for use

- coding decisions are finalised and passed to the trialling agencies

7.5.4 Training coders

The senior coders, trialling agency and STA work collaboratively to deliver coder training events, which contribute to coding reliability by making sure that all coders receive the same training.

On the training day, the trial coders familiarise themselves with the materials with direction and support from their lead coder. They work through each item in the coding frame methodically, using the training exemplars selected at pre-coding. If any issues are identified that were missed at pre-coding, the coding frames are amended to ensure consistent and reliable coding. Coders then apply the coding frames to the practice materials selected during pre-coding. For TPT only, coders also complete the standardisation scripts to ensure they are able to apply the coding frame accurately and consistently prior to the start of the actual coding.

7.5.5 Quality assurance during coding

Quality assurance processes are dependent on the mode of coding.

7.5.5.1 Paper-based coding (IVT)

At IVT, TDRs oversee the entire coding process, including coder training and quality assurance. TDRs check 1 in 5 booklets of each coder’s allocation. If a coder error is found, the frequency of quality assurance by TDRs is increased. TDRs will also provide the coder with additional guidance on accurate application of the coding frame for the affected question or questions. In some instances, double coding is conducted as an additional check. Where necessary, the senior coders carry out targeted recoding to address problems that are identified.

7.5.5.2 Technical pre-test coding (TPT)

At TPT, the quality assurance process begins with coders completing standardisation items, for which the codes have been agreed at pre-coding. The lead coders check each coder’s responses to ensure the coding frame has been applied accurately, and to clarify any points of contention.

After the successful completion of standardisation, trial coding commences. As a quality assurance check, senior coders check 20% of each coder’s allocation. If coder error is found, further training is given and the frequency of quality assurance increases.

For onscreen coding, seeding items are included in each coder’s allocation to ensure that their coding remains accurate. Like the standardisation items, these have been pre-coded and coders must allocate the same codes that were decided at pre-coding.

For paper-based coding, benchmarking scripts are included in each coder’s allocation at a predetermined point. Like the standardisation items, these have been pre-coded and coders must allocate the same codes that were decided at pre-coding.

Senior coders feed back regularly to their teams to discuss issues as they arise. Coders are encouraged to raise any problematic responses to the lead or deputy lead coder for advice and discussion.

Coders could be stopped from coding all or part of a test paper, based on evidence from standardisation and ongoing quality assurance. This decision would be taken following discussion between the lead coder, TDR and trialling agency. Targeted re-coding is then undertaken as required.

7.5.6 Qualitative evidence collection

Coders are asked for feedback during and after coding to contribute to the qualitative evidence associated with each item. In particular, coders:

- highlight exemplars that raised particular issues for the coding frame so that they can be included in live training or used to inform changes for the next step in the process

- highlight exemplars that could be used in the final mark scheme

- feed back on the coding frame, training materials and items themselves

- feed back on processes to enable continuous improvement

Some of the factors that STA considers when reviewing coder feedback include:

- if there are responses to a question that could be considered correct but are not credited by the coding frame

- if there are responses to a question that are difficult to code because they are not sufficiently addressed by the coding frame

- if there is a mismatch between the item and coding frame

- if there is sufficient differentiation of mark points in open-response multi-mark items

- if the coding frame is rewarding answers where it is not clear that the pupil fully understands the concept or skill being assessed

This information will feed into decisions on whether the item proceeds to the next stage of test development unchanged or whether changes are required to improve the item.

8 Analysis of trialling data

Statistical analysis of the items is carried out following each trial to inform the test development process. This analysis is to evaluate the performance of the items rather than the pupils.

From this analysis, STA gathers crucial, objective information about the difficulty of each item and how well it measures the test construct. It also highlights any potential flaws such as bias towards different groups of pupils or issues with the mark scheme. The types of analysis fall broadly under 2 areas of test theory:

- classical test theory (CTT)

- item response theory (IRT)

8.1 Classical analysis

Most CTT-based analysis is aimed at identifying how much information the observed score tells us about an individual’s true score. A true score is a CTT concept interpreted as the average of observed test scores over an infinite number of administrations of the same test (per pupil). Classical analysis encompasses a range of statistics at item, booklet and test level.

Booklet or test-level information includes:

- score distributions

- measures of internal consistency reliability

- comparisons of mean scores by gender
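
As an illustration of an internal consistency measure, the sketch below computes Cronbach's alpha, one widely used statistic of this type. The handbook does not state which reliability statistic STA reports, so the choice of alpha is an assumption, and the score matrix is invented.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a matrix of item scores (rows = pupils, columns = items)."""
    n_items = len(scores[0])
    item_variances = [
        pvariance([row[i] for row in scores]) for i in range(n_items)
    ]
    total_variance = pvariance([sum(row) for row in scores])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Invented marks for 5 pupils on 4 one-mark items.
scores = [
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(scores)  # 0.8 for this invented data
```

Values closer to 1 indicate that the items in the booklet are behaving more consistently with one another. A real booklet would contain far more items and pupils than this toy example.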

Item-level statistics include:

- the mean score expressed as a percentage of marks

- the percentage of pupils achieving each possible score

- the percentage of pupils who omitted the item

- the percentage of pupils who omitted the item and all remaining items in the booklet

STA also examines the correlation between the item score and the total score, which has been corrected to exclude the item. This gives an indication of how well the item differentiates between pupils of differing ability.
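
The item-level statistics above can be sketched as follows. The response matrix is invented, `None` is used here as a hypothetical marker for an omitted item, and the function names are illustrative rather than STA's.

```python
from math import sqrt

# Invented marks for 5 pupils on 4 one-mark items; None marks an omitted item.
responses = [
    [1, 1, 1, 0],
    [1, 0, 1, None],
    [0, None, 1, None],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
# Omitted items score zero.
scores = [[0 if mark is None else mark for mark in row] for row in responses]


def mean_score_percentage(scores, item, max_mark=1):
    """Mean item score expressed as a percentage of the marks available."""
    return 100 * sum(row[item] for row in scores) / (max_mark * len(scores))


def omit_percentage(responses, item):
    """Percentage of pupils who omitted the item."""
    return 100 * sum(row[item] is None for row in responses) / len(responses)


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / sqrt(spread_x * spread_y)


def corrected_item_total(scores, item):
    """Correlate the item score with the total score excluding that item."""
    item_scores = [row[item] for row in scores]
    rest_scores = [sum(row) - row[item] for row in scores]
    return pearson(item_scores, rest_scores)
```

For the first item in this invented data, `mean_score_percentage(scores, 0)` is 60.0 and `corrected_item_total(scores, 0)` is approximately 0.72. Excluding the item from the total prevents the item from correlating with itself, which would otherwise inflate the statistic.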

8.2 Item response analysis

IRT refers to a group of statistical models in which the probability of pupil success on an item is a function of various item parameters, depending on the model. IRT allows the comparison of items that have not appeared in the same test together and pupils who have taken different tests, by putting them all onto a single common scale, linked by common items or pupils. IRT is more complex than classical analysis and correspondingly more difficult to carry out, requiring specialist software.

IRT assumes there is an underlying latent construct being measured that is continuous in nature. An item characteristic curve plots the probability of a correct response as a function of the latent construct. Item characteristic curves are summed together to plot a test characteristic curve for each test (see Appendix A: technical appendix).

Another important concept in IRT is that of item information. This provides a display of item contribution to the latent construct and depends to a large extent on the differentiation power of the item along the latent construct continuum. Item information function plots can also be summed together to plot a test information function for each test (see Appendix A: technical appendix).

IRT has a number of assumptions that must be statistically tested to ensure its use is appropriate:

- that the items in the test fit the IRT model

- that the underlying latent construct is unidimensional

- that the items in the test are independent of each other

8.3 Distractor analysis

Pupil responses are coded for both trials rather than marked. Numeric codes are assigned to represent how the pupil responded. Distractor analysis is used to analyse the response codes to provide more information about how the pupils answer the items – for example, in multiple-choice items, distractor analysis shows how often incorrect options were selected.
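
A minimal sketch of distractor analysis for a single multiple-choice item is shown below. The option labels, the correct answer and the responses are invented for illustration.

```python
from collections import Counter

OPTIONS = "ABCD"
CORRECT_OPTION = "B"  # hypothetical answer key for this item

# Invented option codes recorded for 10 pupils.
responses = ["B", "A", "B", "C", "B", "D", "A", "B", "A", "B"]

counts = Counter(responses)

# Percentage of pupils selecting each option.
percentages = {option: 100 * counts[option] / len(responses) for option in OPTIONS}

# The distractors are the incorrect options.
distractors = {
    option: share for option, share in percentages.items() if option != CORRECT_OPTION
}
most_chosen_distractor = max(distractors, key=distractors.get)
```

In this invented data, half of the pupils chose the correct option and option A attracted 30% of responses. A distractor chosen by very few pupils, or by more pupils than the correct answer, would prompt closer review of the item.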

8.4 Differential item functioning

Differential item functioning (DIF) is a statistical analysis carried out to flag differences in item performance based on group membership. This analysis is useful when looking at all of the evidence for test construction. However, DIF can only indicate that there is differential item performance between groups (for example, boys and girls, or EAL and non-EAL pupils) that have the same overall performance. It cannot determine the cause of the differential performance.

Further qualitative exploration of an item leading to a reasoned, substantive explanation for the DIF is required before an item could be considered biased. It is important to acknowledge that group differential performance does not, on its own, indicate bias or lack of fairness.
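
One common way of flagging DIF is the Mantel-Haenszel procedure, sketched below for a one-mark item. The handbook does not state which DIF method STA uses, so this choice is an assumption, and the group labels, matching scores and data are invented. Pupils are matched on overall score, and the odds of success for the 2 groups are compared within each score band.

```python
from collections import defaultdict


def mantel_haenszel_odds_ratio(records):
    """Common odds ratio across score bands.

    records: iterable of (group, matching_score, correct), where group is
    'reference' or 'focal'. A value near 1 suggests no DIF. Assumes at least
    one band contains reference-incorrect and focal-correct pupils.
    """
    tables = defaultdict(lambda: {"reference": [0, 0], "focal": [0, 0]})
    for group, score, correct in records:
        tables[score][group][0 if correct else 1] += 1  # [correct, incorrect]

    numerator = denominator = 0.0
    for table in tables.values():
        ref_right, ref_wrong = table["reference"]
        focal_right, focal_wrong = table["focal"]
        n = ref_right + ref_wrong + focal_right + focal_wrong
        numerator += ref_right * focal_wrong / n
        denominator += ref_wrong * focal_right / n
    return numerator / denominator


def make_records(group, score, n_correct, n_incorrect):
    """Helper to build invented records for one group in one score band."""
    return [(group, score, True)] * n_correct + [(group, score, False)] * n_incorrect


# Invented data: both groups perform identically within each score band.
no_dif = (
    make_records("reference", 1, 2, 2) + make_records("focal", 1, 1, 1)
    + make_records("reference", 2, 3, 1) + make_records("focal", 2, 3, 1)
)
# Invented data: matched pupils in the focal group do worse on the item.
with_dif = make_records("reference", 1, 3, 1) + make_records("focal", 1, 1, 3)
```

For these invented datasets the odds ratio is 1.0 for `no_dif` and 9.0 for `with_dif`. As the text above makes clear, a flagged item is a prompt for qualitative review, not evidence of bias in itself.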

8.5 Analysing qualitative data

Qualitative evidence is gathered throughout the trial administration and trial coding stages. Feedback is evaluated alongside the data, recorded in the item bank and used to inform item and coding frame refinement.

8.5.1 Summary of types of evidence gathered

Throughout each trial, administrators have the opportunity to observe pupils taking the tests. They are asked to report any findings to STA via a questionnaire completed at the end of the administration window.

Teachers whose classes participate in the trial also have the opportunity to review the materials and observe their pupils sitting the tests. They also complete a questionnaire.

The questionnaires provide an opportunity for those involved in the trialling to give their opinions on the suitability of the questions in terms of accessibility, ease of reading, clarity and layout, manageability and timing.

Pupils’ comments also feed into the trial administrator and teacher questionnaires.

8.5.2 Revising items and coding frames

Qualitative data can support information provided by quantitative data and highlight issues with accessibility or reasons why pupils may have misinterpreted a question. The data can also highlight where a majority of pupils struggle with vocabulary used in the tests.

However, because it is subjective and because of the relatively small numbers responding to the questionnaires, some caution is required when using qualitative data to make judgements on whether changes should be made to questions and coding frames.

9 Test construction

Test construction is the process by which questions are put together to form test booklets that meet the test specification set out in the test framework (see section 2).

TDRs and psychometricians are responsible for constructing the tests based on the evidence from trialling. After the live tests have been constructed, they are reviewed at expert review 3 and by curriculum advisors to ensure the tests are fit for purpose and meet the test specification.

9.1 Item finalisation

All questions considered for a live test have been through a TPT, which has provided evidence that they are functioning well. Items going forward to the live test should not be amended after the TPT as this could affect pupil performance on the item and render the data held unreliable.

An item finalisation meeting is held following each trial. At this meeting, test developers and psychometricians confirm which items from the trial are suitable for inclusion in the next round of trialling or in a live test. Items that are deemed suitable must:

- have appropriate facilities (difficulty levels)

- differentiate between groups of pupils of differing ability

- be accessible in terms of language, layout and illustrations
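
The first 2 criteria in the list above are usually expressed as classical item statistics. The sketch below shows one common way to calculate them: facility as the mean score divided by the marks available, and discrimination as the correlation between the item score and the score on the rest of the test. The function names and the choice of an item-rest correlation are assumptions for illustration. This section does not specify the exact statistics or thresholds used.

```python
from statistics import mean


def facility(item_scores, max_mark):
    """Mean item score as a proportion of the marks available (0 to 1)."""
    return mean(item_scores) / max_mark


def pearson(xs, ys):
    """Pearson correlation, or None if either variable has no variance."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None
    return cov / (var_x * var_y) ** 0.5


def discrimination(item_scores, total_scores):
    """Correlation between the item score and the rest-of-test score."""
    # Remove the item's own marks so it is not correlated with itself.
    rest_scores = [total - item for item, total in zip(item_scores, total_scores)]
    return pearson(item_scores, rest_scores)
```

A higher facility indicates an easier item. A higher discrimination value indicates that pupils who score well on the rest of the test tend to score well on the item.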

Both quantitative and qualitative data from the trial are reviewed to report on each item’s reliability and on its validity as an assessment of the programme of study references it targets.

Any items that do not function well, or that had poor feedback from teachers or pupils, are either removed from the pool of available items for selection or amended to be re-trialled for future use.

Occasionally, it is not possible for the items in the live test to be identical to the trialled TPT versions. Small changes may be required at the test construction phase, for example to keep the gender and ethnicity of pupils named in the papers balanced.

While there is a small chance this could affect the performance of a question in the live test compared to its performance in the TPT, it is unlikely to be significant and is monitored through the live analysis. Such changes are only made when essential.

9.2 Constructing the test

STA’s psychometricians use question and item metadata to construct a draft test that meets the test specification, and which optimises the measurement precision within the appropriate range on the ability scale. A test construction meeting is then held to select the items for IVT, TPT or live test booklets. At this meeting, test developers use the psychometricians’ initial selection as a starting point. The meeting’s participants consider the proposed booklets, taking into account:

- item type

- presentational aspects

- question contexts and coverage

- whether there are any conflicts between what is assessed within test booklets and across the test as a whole

At this stage, items may be swapped in or out of the test to improve its overall quality and suitability.

Phonics items are trialled in blocks of 4 and have to be constructed into a live test in those blocks.

Once the questions have been chosen for each test, they are put in an appropriate order. For phonics, mathematics and English grammar, punctuation and spelling, more accessible questions are put at the start of the test and more demanding questions towards the end so that the test paper increases in difficulty. For English reading, although an attempt is made to put easier questions earlier in the test, the questions are required to follow the chronology of the texts, so some easier questions will appear later in the booklet. The tests usually start with one or 2 easy questions to allow pupils to familiarise themselves with the context and conditions.
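
The ordering rule described above can be sketched as a simple sort. The item fields 'facility' and 'text_position' are hypothetical names used for the example. The sketch also ignores the other considerations weighed at the test construction meeting, such as placing one or 2 easy questions first.

```python
def order_items(items, follow_text_order=False):
    """Return items in a draft booklet order.

    items: list of dicts with 'id' and 'facility' and, for English reading,
    'text_position' (where in the text the question is answered).
    """
    if follow_text_order:
        # English reading: questions follow the chronology of the texts.
        return sorted(items, key=lambda item: item["text_position"])
    # Other subjects: higher facility means easier, so easier items come first.
    return sorted(items, key=lambda item: item["facility"], reverse=True)
```

With follow_text_order set to True, an easier question can appear later in the booklet, as described for English reading.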

The overall test is reviewed in the combination of booklets that will form the complete test. This is to check for overlaps – for example, in the English grammar, punctuation and spelling tests, it would not be appropriate for one of the spelling words also to appear in the question booklet.
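
A check of this kind could be partly automated. The sketch below flags any spelling word that also appears in the text of a question booklet. The function name and the simple word-matching rule are assumptions, and a check like this would support the review rather than replace it.

```python
import re


def spelling_overlaps(spelling_words, booklet_text):
    """Return the spelling words that also appear in the booklet text."""
    # Compare whole words in lower case; inflected forms are not matched.
    words_in_booklet = set(re.findall(r"[a-z']+", booklet_text.lower()))
    return sorted(word for word in spelling_words if word.lower() in words_in_booklet)
```

An empty list means no exact overlaps were found. Related forms, such as plurals, would still need to be checked by a reviewer.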

9.3 Reviewing and confirming the test

The tests are subject to a further review process after test construction. They are reviewed first by the test development team and the psychometrician to ensure none of the test constraints have been missed during the test construction meeting.

External stakeholders then review proposed live tests at expert review 3. Information about this panel is provided in section 6 and the details of those who participated in expert review 3 are in Appendix A: technical appendix.

Live tests are then presented to project board 3 (see section 10). Once the project board is satisfied that the tests have met the test specification and are of sufficient quality, the tests are signed off to go into production.

9.4 Quality assurance and proofreading

The tests go through a rigorous quality assurance process before the materials are signed off to print. There are 3 major handover stages. At each stage, a range of people proofread the tests and mark schemes to ensure all the material is accurate and free from errors. Between stages, any errors found are corrected and rechecked.

The tests and mark schemes are proofread by people with particular areas of expertise, including professional editorial proofreaders and subject experts. They make sure that:

- there are no typographical errors

- house style is applied consistently

- grammar is correct and reflects the grammatical rules and conventions tested in the English grammar, punctuation and spelling tests

- ISBN numbers, product codes and barcodes are correct

- requirements are met for the scanning of papers, data capture and marking

- there is consistency across subjects and key stages

- there are no content overlaps

- the tests give pupils all the information they need to answer the questions

- test content is factually correct and would stand up to scrutiny

- the mark schemes and tests reflect each other

- the mark schemes are usable, correct and adhere to established conventions

- the number of marks is correct

- items are labelled correctly

All of these checks occur at least once throughout the handover stages.

TDRs also proofread the materials at every stage and have overall responsibility for the quality of the tests. They are responsible for collating the comments from each proofing round and ensuring any amendments are made.

10 Governance

Overall governance of each test in development is managed through fortnightly checkpoint meetings involving the whole project team for that subject, plus relevant members of STA’s senior management team. Outcomes of these meetings are reported to monthly delivery and technical subprogramme boards as appropriate, chaired by a deputy director and attended by members of STA’s senior management team. Escalation is through normal STA routes, including the Executive Management Board.

In addition, project boards take place at 3 points in the test development process:

- before IVT

- before TPT

- before the materials go live

The purpose of the project board is to:

- review documentation on the quality of the items and tests

- ensure there is sufficient material available to carry on to the next stage of development

- ensure, for tests going live, that the correct process has been followed, the tests meet the specification and the materials are fit for purpose

The project board will consider the evidence carefully before approving the test materials for trial or live testing.

Statutory national testing is regulated by Ofqual, whose representatives may observe technical boards or project boards.

10.1 Project board 1

Project board 1 takes place shortly after the resolution meeting for expert review 1 (see section 3 for a summary of the test development process). The purpose of the meeting is to formally approve materials proceeding to the IVT.

At project board 1, the following evidence is considered:

- trial booklets

- a summary of the process to this point

- an overview of evidence from expert review 1 and any feedback from the curriculum advisors on the materials

- coverage of items in the trial against the test framework

- coverage of items within the item bank to ensure areas not covered in the trial are provided for in the item bank

10.2 Project board 2

Project board 2 takes place shortly after the resolution meeting for expert review 2. The purpose of the meeting is to formally approve materials for inclusion in the TPT.

At project board 2, the documentation comprises:

- trial booklets

- an outline of the TPT model being used

- a summary of the process to this point

- an overview of evidence from expert review 2 and the curriculum advisors

- coverage of materials in the trial against the test framework

- coverage of items within the item bank to ensure areas not covered in the trial are provided for in the item bank – in total, the materials in the trial and the item bank must be able to produce a live test that meets the specification
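
The coverage requirement in the final point above can be sketched as a set comparison. The data layout, the function name and the reference labels in the usage note are hypothetical. A real check would also need to consider marks, item types and the other constraints in the test specification.

```python
def uncovered_references(specification, trial_items, bank_items):
    """Return specification references covered by neither the trial nor the item bank.

    specification: iterable of content references required by the framework.
    trial_items, bank_items: iterables of dicts with a 'references' list.
    """
    covered = set()
    for item in list(trial_items) + list(bank_items):
        covered.update(item["references"])
    return sorted(set(specification) - covered)
```

For example, with a specification of ["ref-1", "ref-2", "ref-3"], a trial covering "ref-1" and an item bank covering "ref-2", the function returns ["ref-3"], showing a gap that would need to be filled before a live test could be constructed.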

10.3 Project board 3

Project board 3 takes place shortly after expert review 3. The purpose of project board 3 is to formally approve the materials going forward as the live test. Any recommendations for changes to the constructed test following expert review 3 must be made at project board 3.

Project board 3 reviews the validity evidence for the test, including whether the correct process has been followed. Project board 3 ensures that the proposed test meets all aspects of the specification. If these conditions are met, the materials can be approved as fit for purpose.

The dates of the project boards are given in Appendix A: technical appendix.

10.4 Standards confirmation and maintenance

The final major governance meeting is the standards confirmation or standards maintenance meeting, where the scaled score conversion tables are signed off for the tests (see section 13). The dates of these meetings are in Appendix A: technical appendix.

11 Test administration

STA is responsible for developing test guidance and managing the processes related to test administration and access arrangements, overseeing the print, delivery and collection of paper-based national curriculum tests and overseeing the marking of KS2 tests.

11.1 Test orders

Maintained schools and academies, including free schools, are sent standard test materials based on their census and pupil registration data.

Independent schools choosing to participate in the phonics screening check and KS2 tests must place test orders.

Schools order modified versions of the tests for pupils who require them.

Details of the numbers of test materials sent to schools are provided in Appendix A: technical appendix.

11.2 Pupil registration

All pupils enrolled at maintained schools, special schools and academies, including free schools, who complete the KS1 and KS2 programme of study in the current academic year, must be registered for the tests. This includes pupils who are working below the overall standard of the tests and ultimately will not take them, and pupils who are working at the overall standard but cannot access the tests.

11.3 Delivery of test materials

Test materials are delivered to schools in April.

The headteacher, or a delegated senior member of staff, checks the contents of their delivery against the delivery note to ensure the correct number and type of test materials have been received. Test papers must not be opened until the test is about to be administered on the day or in the window specified in the test timetable.

Headteachers ensure the security of the tests is maintained so that no pupil has an advantage. Schools follow the instructions in STA’s test administration guidance on keeping test materials secure and treat them as confidential from the point they are received in school until the end of the relevant test administration period.

11.4 Administering the tests

All tests are administered on the days specified in the statutory timetable.

For KS2 tests, headteachers are responsible for deciding the start time of the test, but all pupils take each test at the same time. The phonics screening check is administered to pupils individually throughout the month of June.

If it is not possible for all pupils to take a KS2 test at the same time on the day specified, schools may administer one or more sittings.

If it is not possible for all pupils to take a test on the day specified, schools apply for a timetable variation. If approved by STA, a timetable variation allows an individual pupil, or a partial or whole cohort, to take the test up to 5 school days after the scheduled day of the test. Schools must wait for approval from STA before administering the test on another day.
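
The 5 school day limit can be illustrated with a short date calculation. The sketch assumes that school days are weekdays on which the school is open and takes any closure dates as an input. The function name and the example date in the usage note are chosen for illustration only.

```python
from datetime import date, timedelta

MAX_VARIATION_SCHOOL_DAYS = 5  # limit stated in the paragraph above


def latest_variation_date(scheduled_day, closure_days=()):
    """Return the last day a test could be taken under a timetable variation.

    Assumes school days are Monday to Friday, excluding closure_days.
    """
    closures = set(closure_days)
    current = scheduled_day
    remaining = MAX_VARIATION_SCHOOL_DAYS
    while remaining > 0:
        current += timedelta(days=1)
        # weekday() is 0 for Monday to 4 for Friday.
        if current.weekday() < 5 and current not in closures:
            remaining -= 1
    return current
```

For a test scheduled on Monday 13 May 2024 with no closures, the function returns Monday 20 May 2024. The calculation does not replace the requirement to wait for approval from STA.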