AI 2030 Scenarios Report HTML (Annex C)

Updated 28 April 2025

AI 2030 Scenarios

Helping policy makers plan for the future of AI

January 2024.

1. Executive Summary

1.1 Introduction

2022 saw AI break into the public consciousness with the release of Large Language Models (LLMs) including the OpenAI chatbot ChatGPT, and text-to-image models such as DALL-E 2. These tools were suddenly available to millions of people to interact with in simple language, no code required, and could be used for a range of tasks.

This moment significantly increased attention on developments in AI. But there is significant uncertainty around what the future holds, in particular at the frontier. We don’t know what the most advanced models will be capable of, who will own them, how safe they will be, how people and businesses will use them, and what the geopolitical context will be. These uncertainties will interact in unpredictable ways to create a specific future in which policy makers will operate.

GO-Science has developed five scenarios for developments in AI between now and 2030. They are designed to help policy makers explore the potential implications of different AI futures. We hope they help to develop policies that are resilient to risks, and to seize opportunities for the economy, society, and public services.

This report sets out evidence on a set of critical uncertainties, our AI 2030 scenario narratives, and the findings of a public engagement commissioned to explore public perceptions of the scenarios.

An AI generated image showing a bustling futuristic trade centre.

An AI generated image showing a school classroom, with a teacher teaching a class of children about AI.

An AI generated image showing a high-tech living room, with autonomous cleaning robots and smart technology.

Figure 1: AI generated images illustrating possible aspects of a positive AI future. See note below on AI generated content.

1.2 Background and scope

Scenarios are a tool to help policy makers explore uncertainty and identify a resilient course of action that can withstand a range of future conditions. They are not predictions.

We have developed five scenarios, exploring developments in AI up to 2030. Events at a global level are described, but the focus is on implications for the UK.

The scenarios focus on Frontier AI: the most capable and general models available in 2030. The models we consider Frontier AI today are only a small subset within the broad field of machine learning and AI. Many other approaches to, and applications of, AI exist. AI is not a singular thing. However, Frontier AI models have created a new, uncertain dynamic due to the pace of their improvement, their adaptability across multiple tasks, and their availability to anyone to interact with in natural language. These scenarios explore how this could play out to 2030.

The scenarios are designed to help policy makers, industry, and civil society test actions that might mitigate risks and navigate to a more favourable future. Policy makers need to be able to stress test new policy ideas against different plausible futures, so we have explicitly avoided including any such government interventions in the scenarios. As a result, they are all challenging, with difficult policy issues to tackle.

This does not mean a more positive AI future is implausible, or that the risks set out in this report are inevitable. There are many beneficial outcomes that could arise from AI developments, some of which are included in the scenarios. However, most experts we spoke to agree that policy intervention will be needed to ensure risks are effectively mitigated and benefits realised. As a result, we have deliberately avoided including any scenarios that are largely benign or favourable.

The scenarios are informed by the latest evidence and expert judgements. In drafting this Foresight report, and the Future Risks of Frontier AI paper, GO-Science consulted over 70 experts from industry, academia and policy making to ensure the scenarios and key findings were as robust and evidence based as possible.

These scenarios have some important limitations. They were developed and reviewed swiftly by experts to feed into the UK AI Safety Summit in November 2023, meaning certain evidence or perspectives may have been missed. Given the pace and unpredictable nature of AI development, some aspects of the scenarios may also soon become dated. Whilst they have been designed for use in different policy contexts, they may not be suitable in the form presented here for all AI policy questions.

1.3 The AI 2030 scenarios

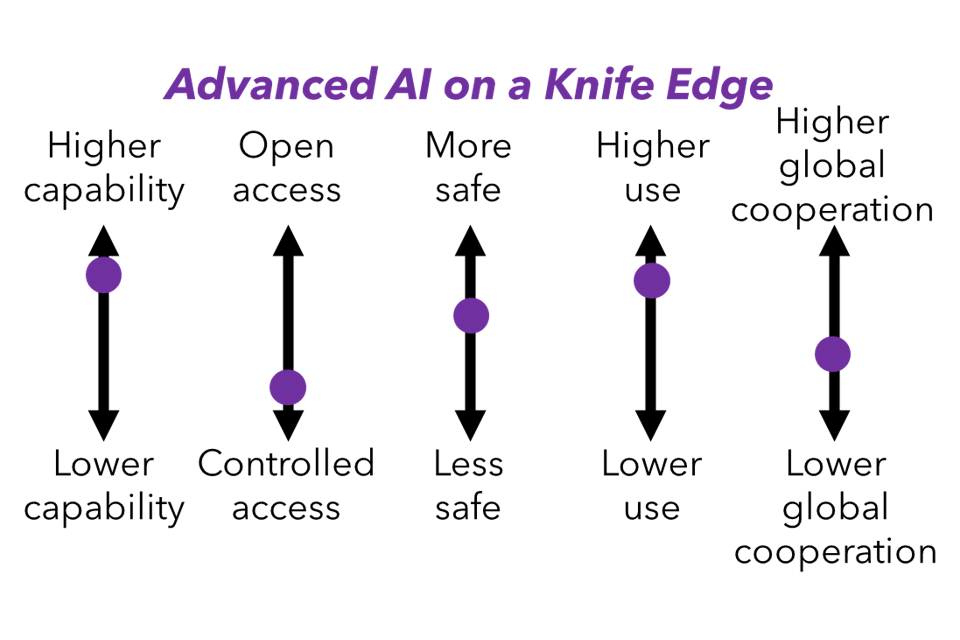

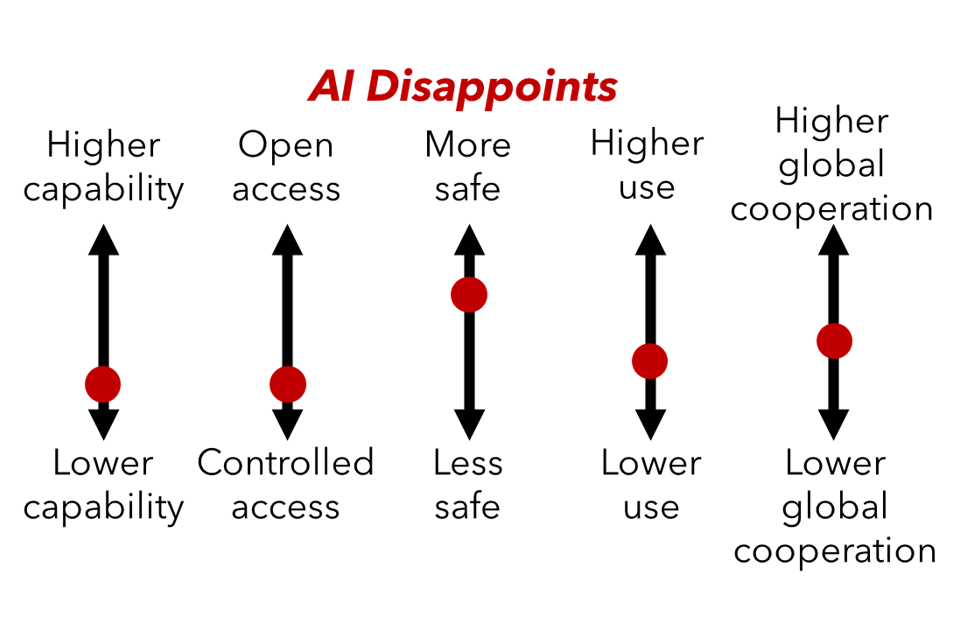

The AI 2030 scenarios are built using five critical uncertainties: factors that are both important to the future of AI development and highly uncertain (Figure 2).

Figure 2: The five critical uncertainties

- Capability: What ability will AI systems have to successfully achieve a range of goals? Will this include interaction with the physical world? How quickly will the performance and range of capabilities increase over time?

- Ownership, access, and constraints: Who controls systems? How accessible are they? What infrastructure and platforms are used to deploy systems? What constraints are there on the availability of AI systems?

- Safety: Can we build safe AI-based systems, assuring their validity and interpretability? How robust are systems to changes in deployment context? How successfully does system design ensure AI behaviour aligns to societal values?

- Level and distribution of use: How much will people and businesses use AI systems? What for and why? Will they be consciously aware they are using AI, or not? How will people be affected by AI misuse? How will use affect the education and jobs people do?

- Geopolitical context: What wider developments have there been at a global level that will influence AI development and use? Will there generally be more cooperation on big issues, or more conflict?

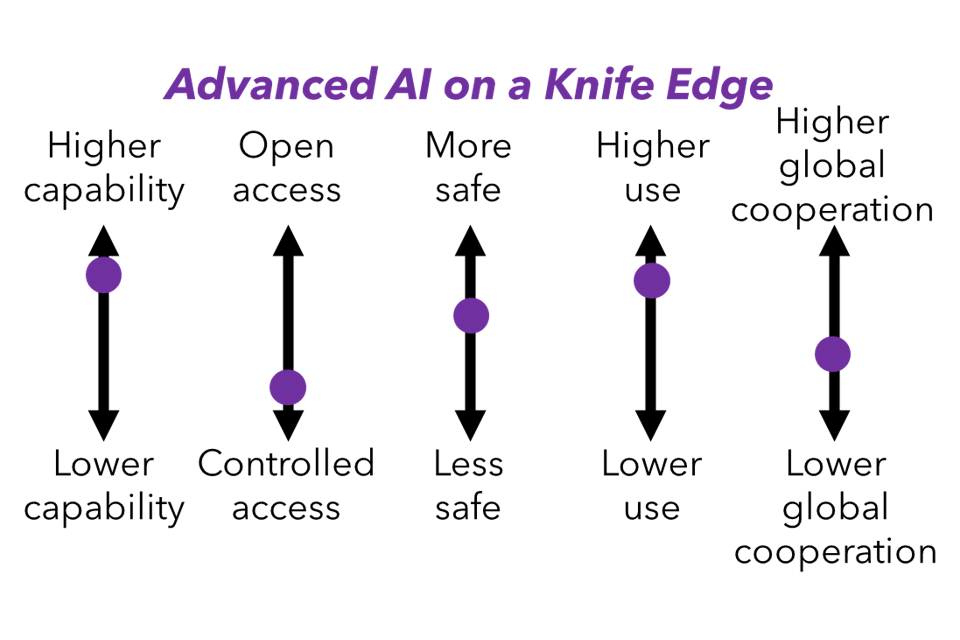

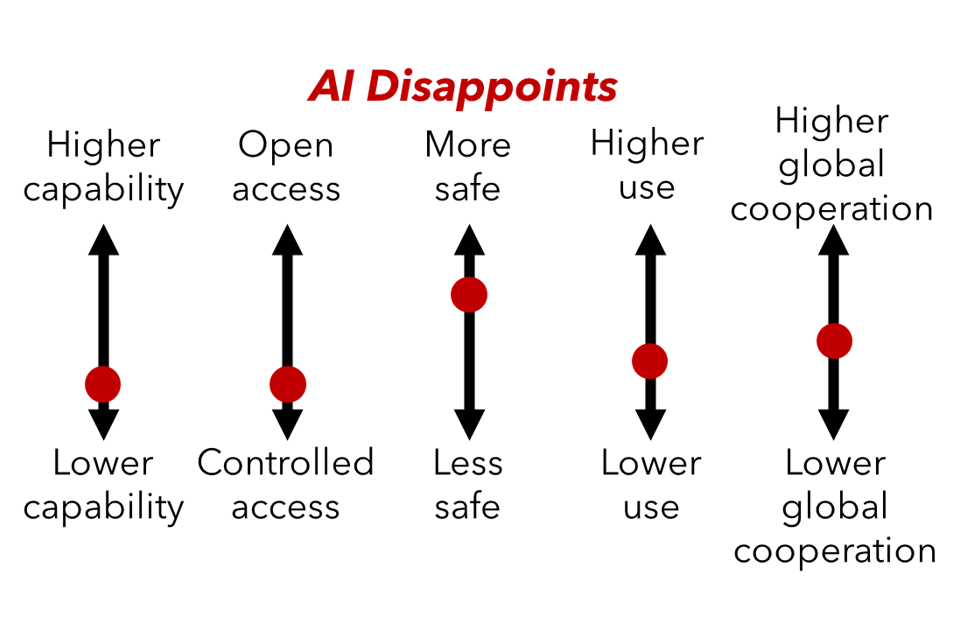

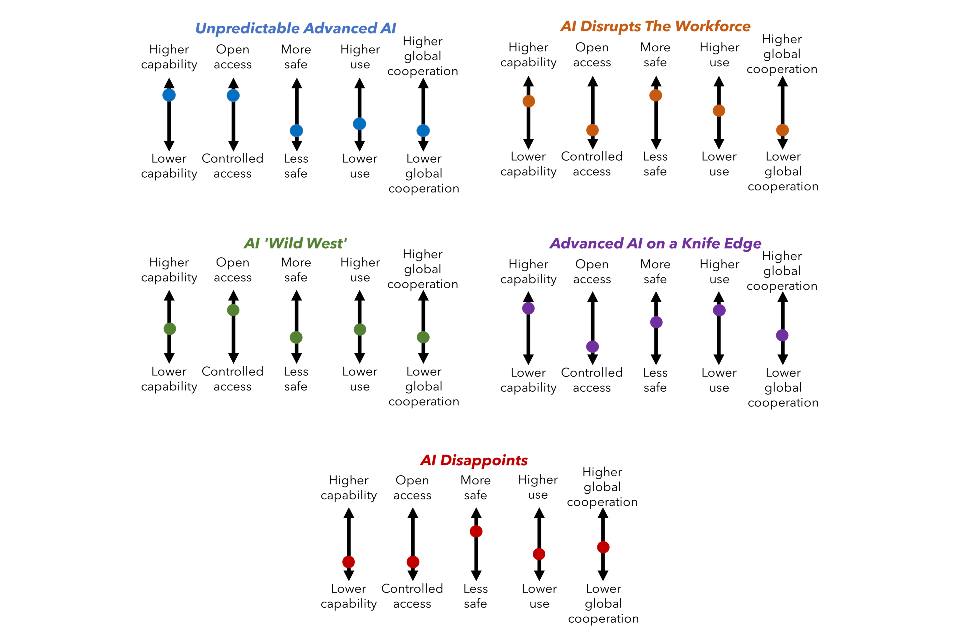

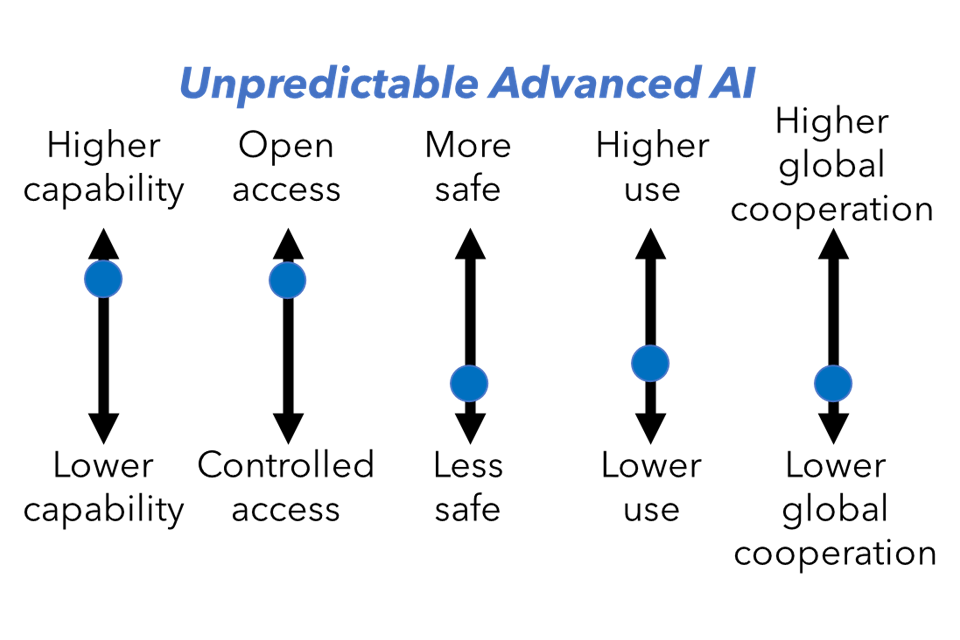

The scenarios are described by a set of narratives. These cover outcomes across the critical uncertainties, and a range of implications across the economy, society and for people’s daily lives. A high-level vision for a positive AI future is also provided. Below are brief summaries of the scenarios alongside a slider chart to illustrate the critical uncertainty outcomes in each case.

1.4 Scenarios summary

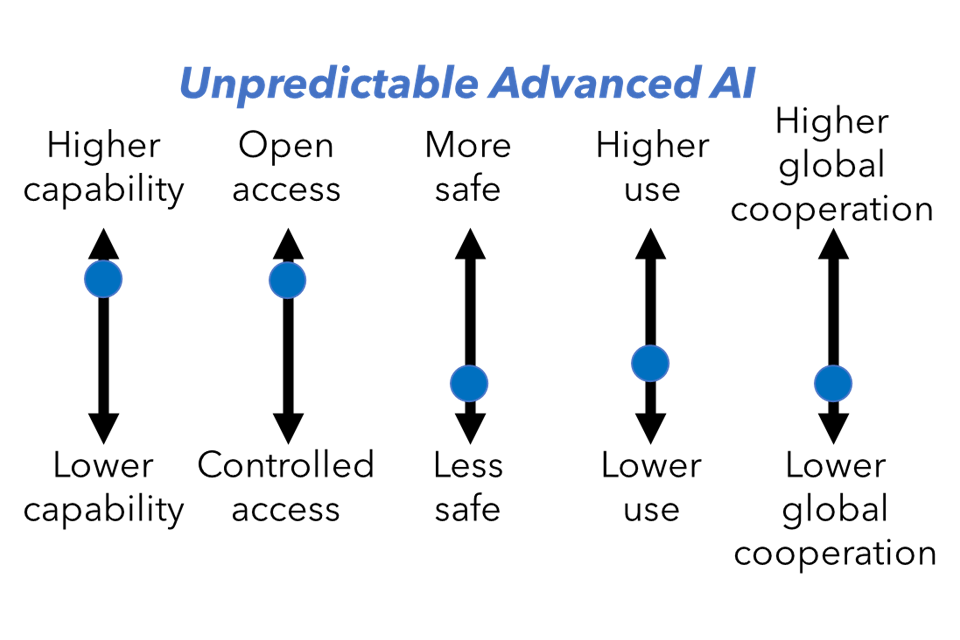

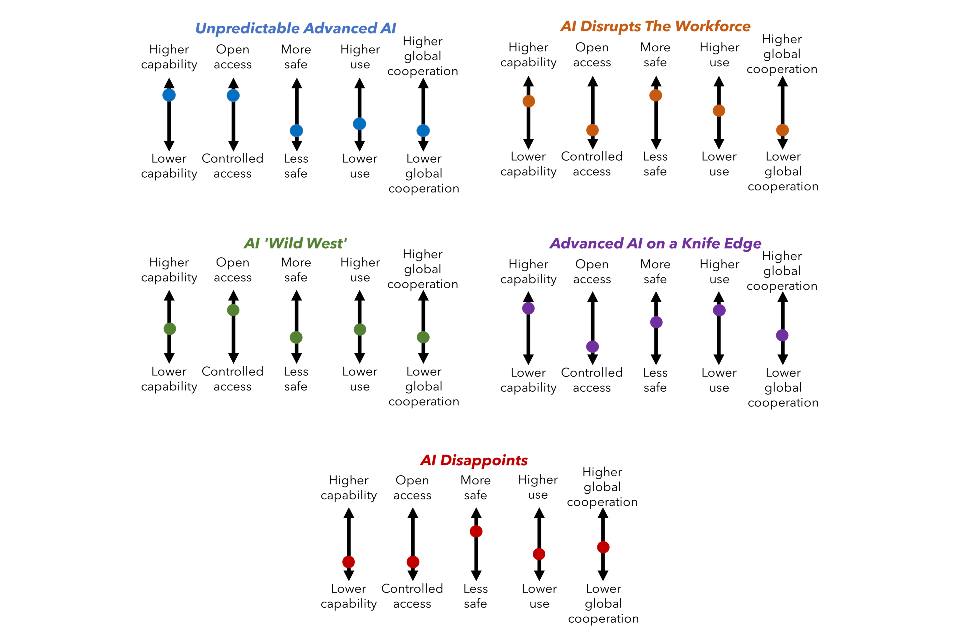

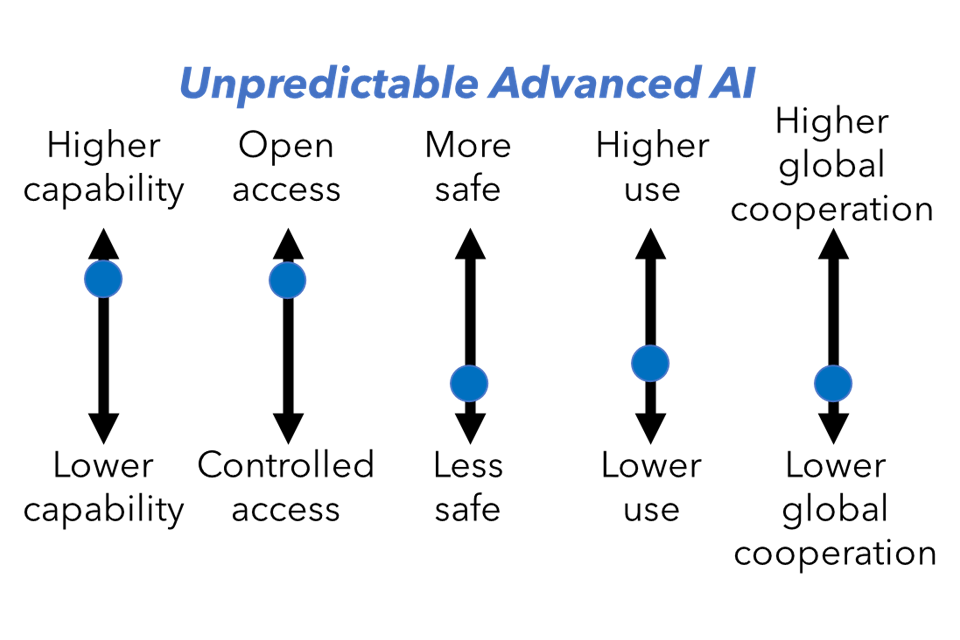

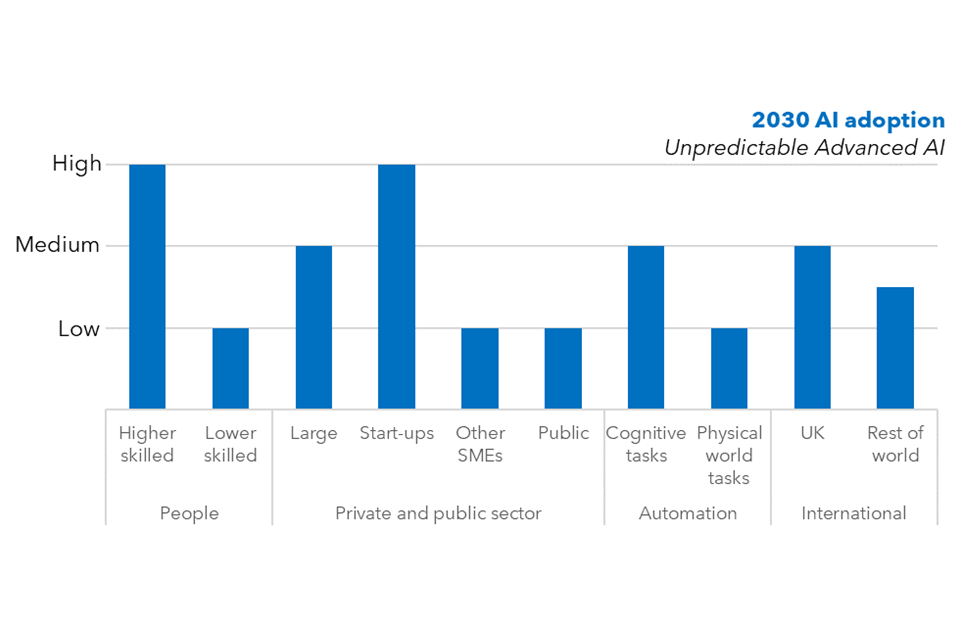

Unpredictable Advanced AI

Highly capable but unpredictable open source models are released. Serious negative impacts arise from a mix of misuse and accidents. There is significant potential for positive benefits if harms can be mitigated.

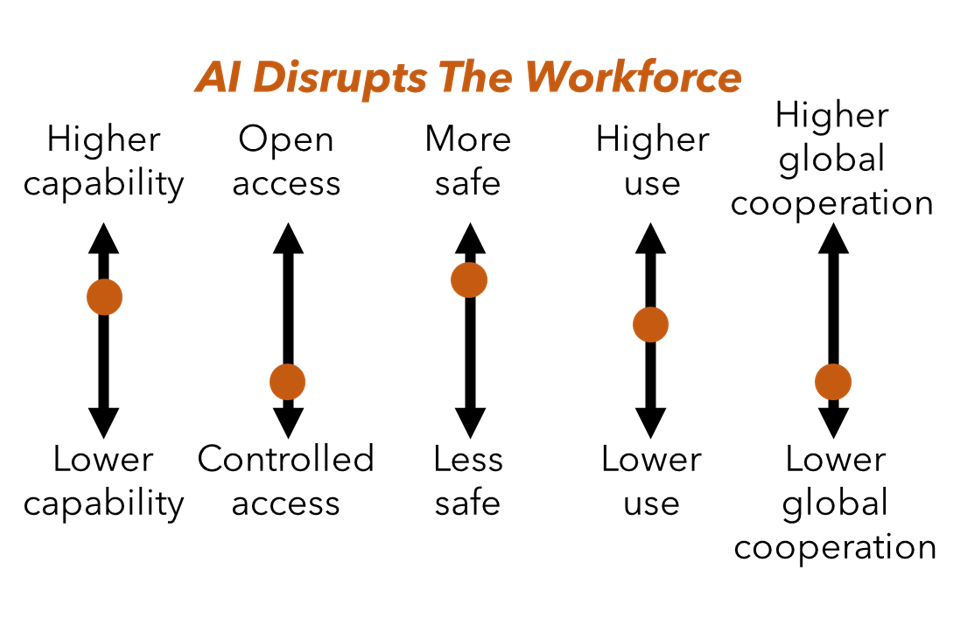

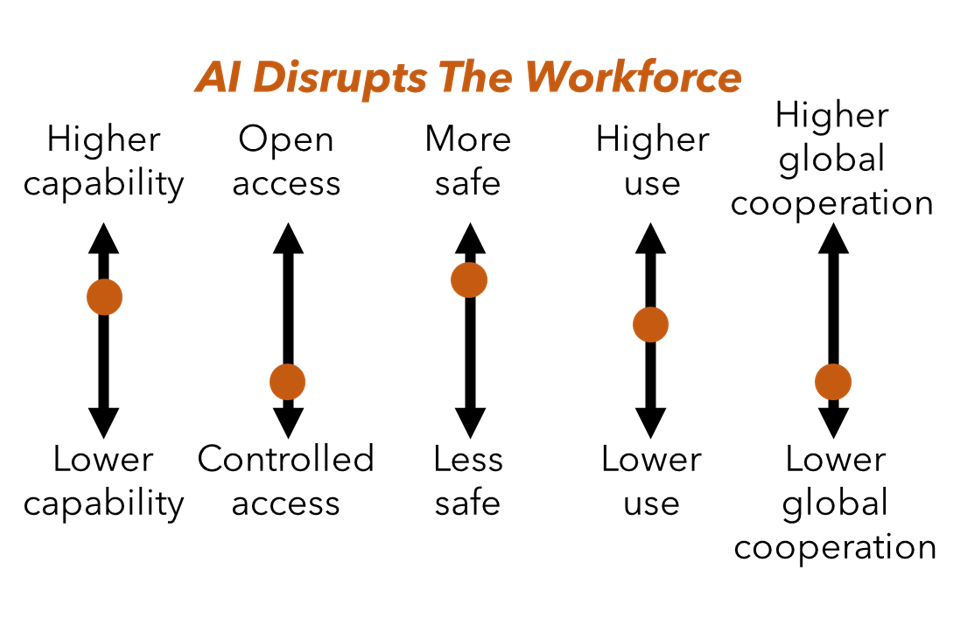

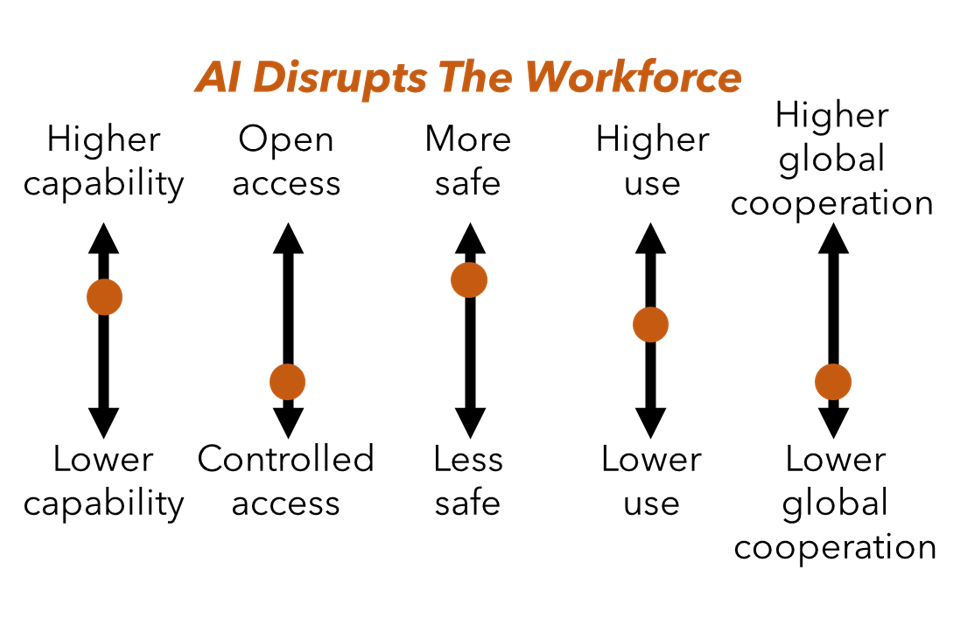

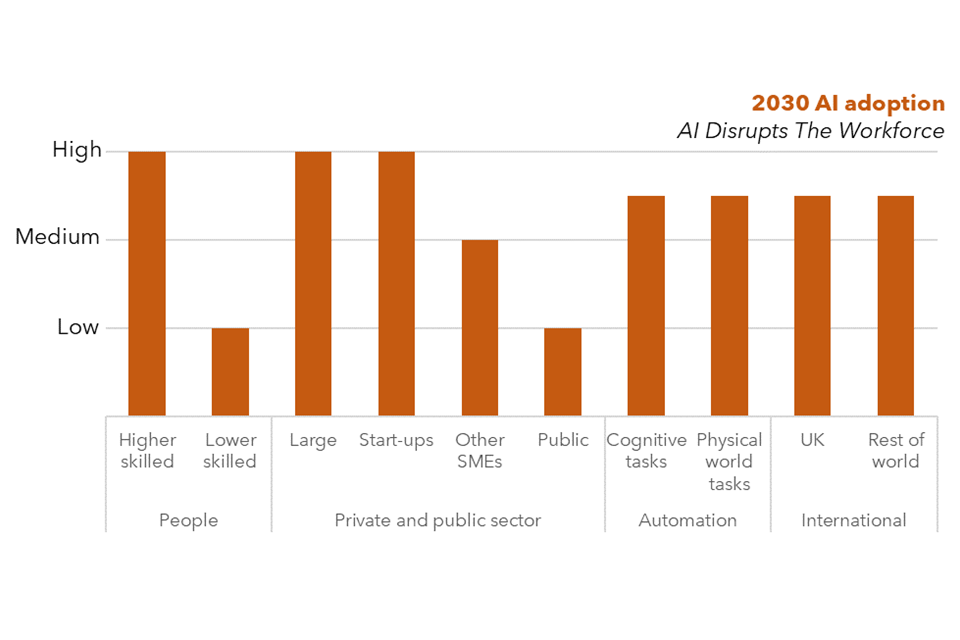

AI Disrupts the Workforce

Capable narrow AI systems controlled by tech firms are deployed across business sectors. Automation starts to disrupt the workforce. Businesses reap the rewards, but there is a strong public backlash.

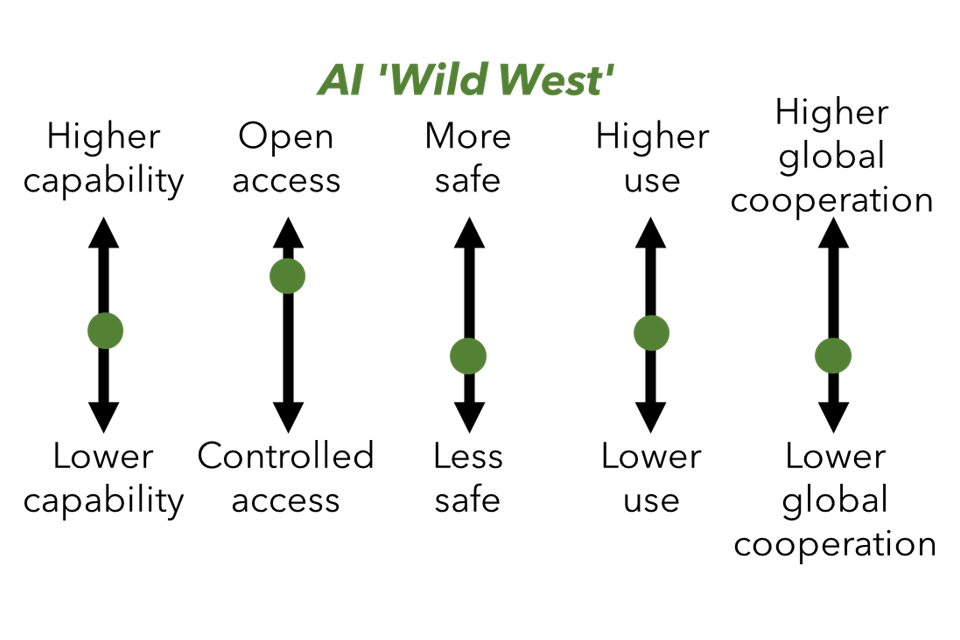

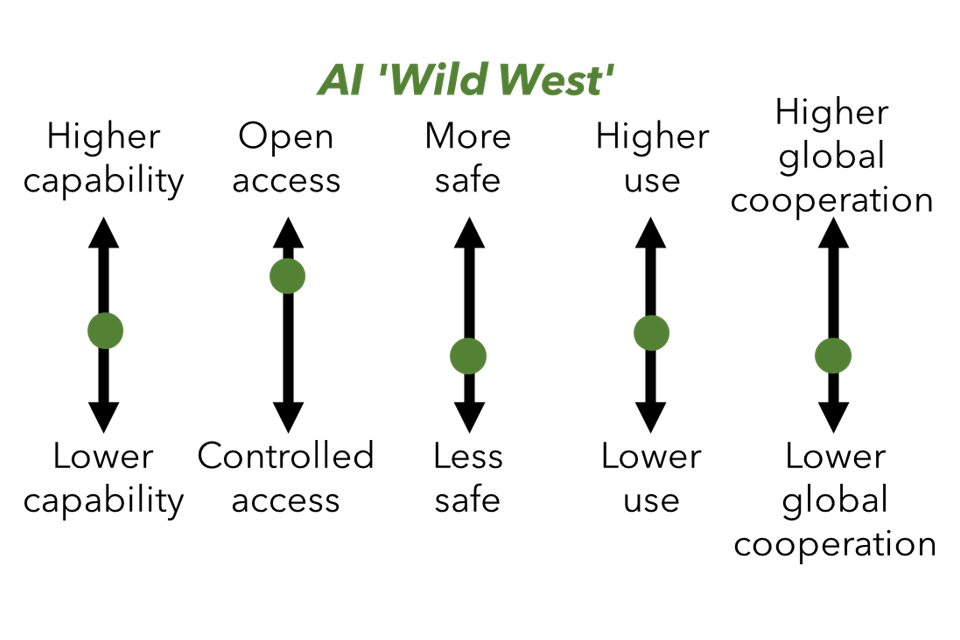

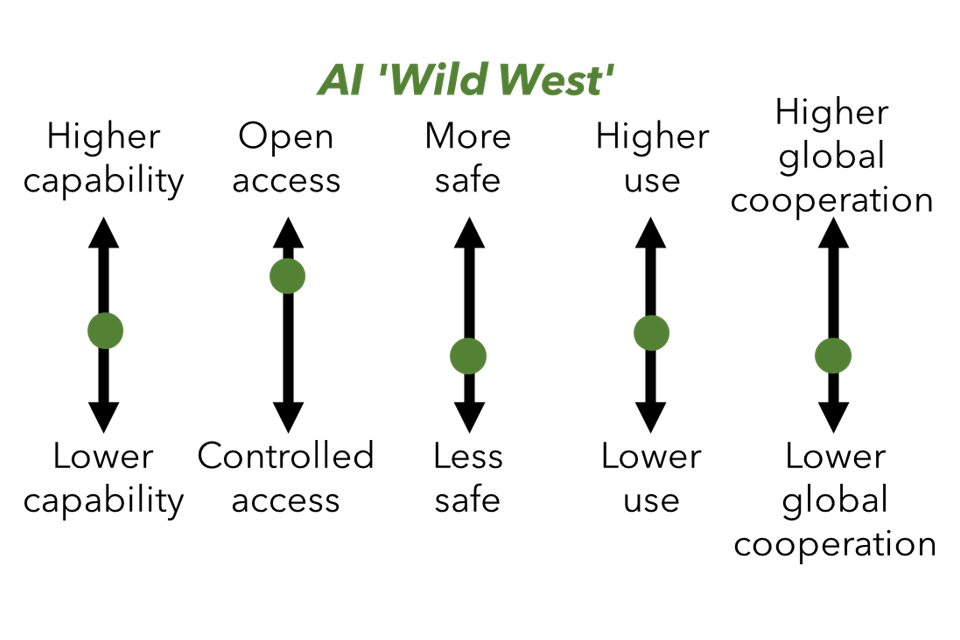

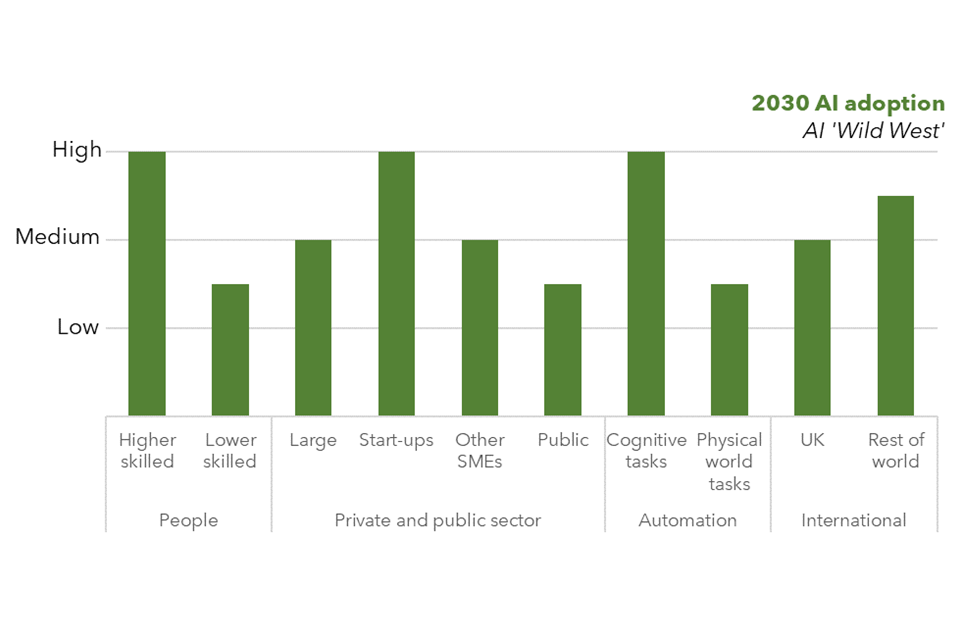

AI ‘Wild West’

A wide range of moderately capable systems are owned and run by different actors, including authoritarian states. There is a rise in tools tailored for malicious use. The volume of different AI systems is a problem for authorities.

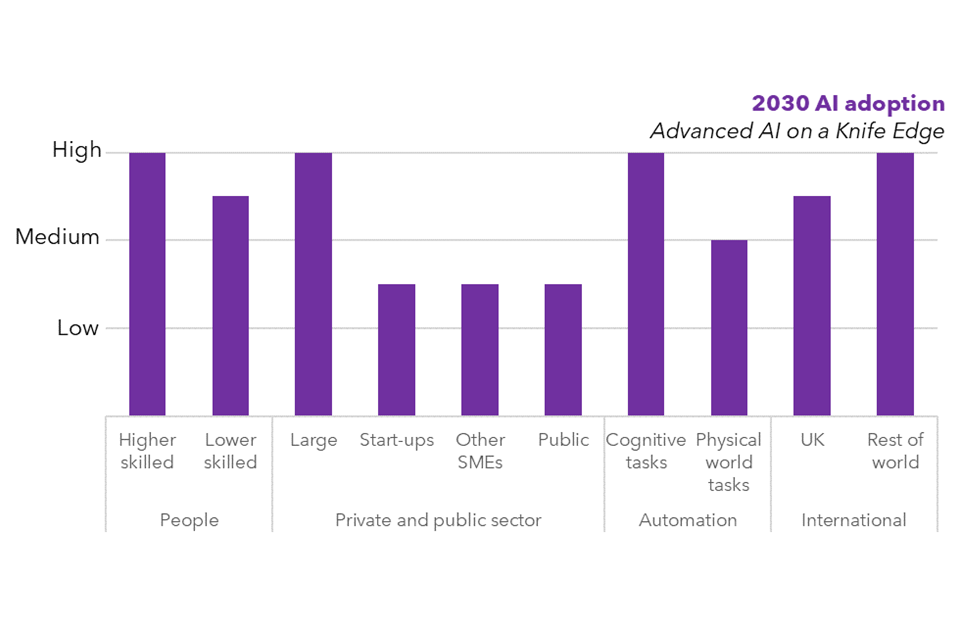

Advanced AI on a Knife’s Edge

Systems with high general capability are rapidly becoming embedded in the economy and people’s lives. One system may have become so generally capable that it is impossible to evaluate across all applications.

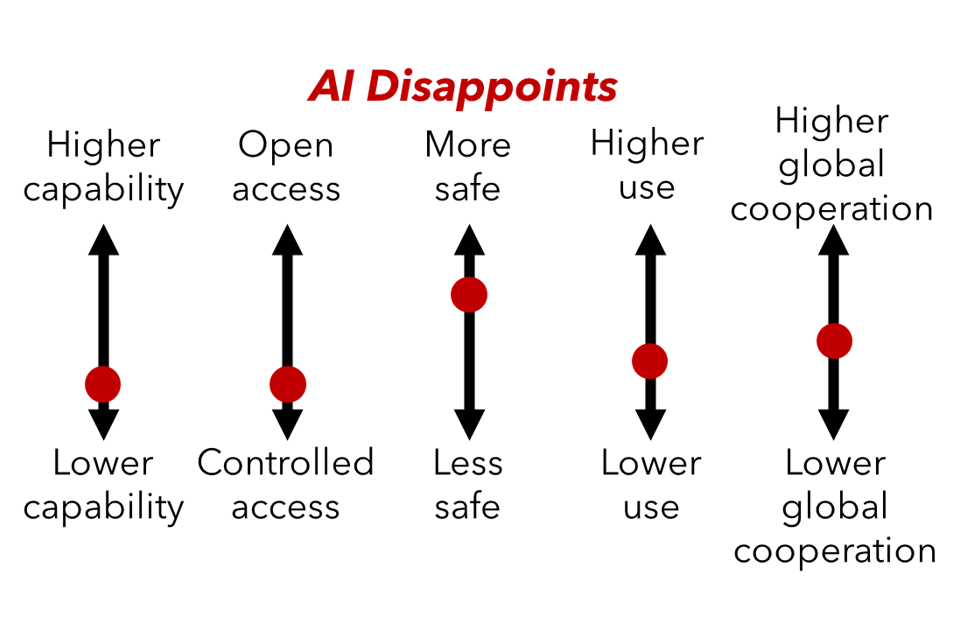

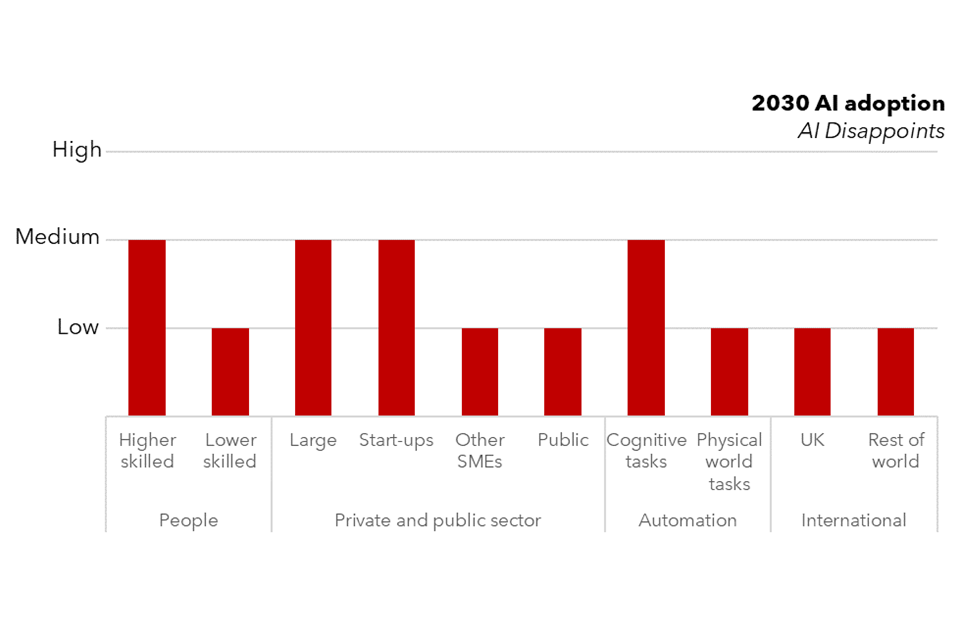

AI Disappoints

AI capabilities have improved somewhat since the early 2020s, but more slowly than many expected. Investors are disappointed and looking for the next big development. There is a mixed uptake across society.

This graphic brings together the five individual graphics from above. The data from these graphics is available below:

Scenario Sliders in relation to Critical Uncertainties, Scored 1-5 (Low to High)

| Scenario | Capability | Access | Safety | Use | Global Cooperation |
|---|---|---|---|---|---|
| Unpredictable Advanced AI | 5 | 5 | 1 | 2 | 1 |
| AI Disrupts the Workforce | 4 | 1 | 5 | 3 | 1 |
| AI ‘Wild West’ | 3 | 5 | 2 | 3 | 2 |
| Advanced AI on a Knife’s Edge | 5 | 1 | 4 | 5 | 2 |
| AI Disappoints | 1 | 1 | 4 | 2 | 3 |
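For readers who want to work with the slider data directly, the table above can be transcribed into a small data structure. The following is a minimal Python sketch, not part of the GO-Science methodology. The helper names `score_range` and `distance` are hypothetical, and the distance measure (sum of absolute score differences) is an illustrative assumption rather than anything used in the report.

```python
# Slider scores transcribed from the table above (1 = low, 5 = high).
# Scenario names use plain apostrophes for ease of typing.
UNCERTAINTIES = ["Capability", "Access", "Safety", "Use", "Global Cooperation"]

SCORES = {
    "Unpredictable Advanced AI": [5, 5, 1, 2, 1],
    "AI Disrupts the Workforce": [4, 1, 5, 3, 1],
    "AI 'Wild West'": [3, 5, 2, 3, 2],
    "Advanced AI on a Knife's Edge": [5, 1, 4, 5, 2],
    "AI Disappoints": [1, 1, 4, 2, 3],
}


def score_range(uncertainty):
    """Return (lowest, highest) score for one uncertainty across all scenarios."""
    i = UNCERTAINTIES.index(uncertainty)
    values = [row[i] for row in SCORES.values()]
    return min(values), max(values)


def distance(a, b):
    """Hypothetical helper: sum of absolute score differences between two scenarios."""
    return sum(abs(x - y) for x, y in zip(SCORES[a], SCORES[b]))


if __name__ == "__main__":
    # Show how widely the scenario set spans each critical uncertainty.
    for name in UNCERTAINTIES:
        low, high = score_range(name)
        print(f"{name}: {low} to {high}")
```

A helper like `score_range` gives a quick check on how much of each slider the scenario set covers, which may be useful when deciding which scenarios to use in a stress-testing exercise.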

1.5 Public engagement

AI development will not happen in isolation. The direction AI takes will be strongly influenced by how citizens use it, shape it, and are affected by it. GO-Science, with the support of the Centre for Data Ethics and Innovation (CDEI), commissioned the research company Thinks to carry out a public engagement based on the five scenarios. The research objectives for the engagement were to understand:

- public perceptions of the scenarios, including the risks, opportunities and plausibility of each;

- which elements of the scenarios members of the public feel strongly about, particularly their key concerns; and

- what factors could underpin mitigations to reach a more positive future scenario.

Given the rapid nature of our project, this engagement was conducted on a smaller scale and more quickly than would ideally be the case. Experts we spoke to agreed that large-scale, deliberative public dialogue will be needed to inform the development of AI safety systems and regulations, which could build on the findings of our study.

Frontier or Foundation?

There are several terms used for the largest AI models deployed today:

- Large Language Models (LLMs) - machine learning models trained on large datasets that can recognise, understand, and generate text and other content.

- Generative AI - AI systems that can create new content (images, text etc). The ability to create defines this type of AI.

- Foundation Models - machine learning models trained on very large amounts of data that can be adapted to a wide range of tasks. Adaptability defines this type of AI.

- Frontier AI - AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models.

These terms overlap (see contents for a full glossary). Today’s Frontier models are mostly trained with language data. They can generate text and, with fine tuning, be the basis for multiple more specialist models. Arguably, a model like GPT-4 meets all four definitions. However, what constitutes Frontier will change over time and may not always be based on the same architecture and training data as today’s LLMs.

1.6 Key findings

The evidence gathering, scenario analysis and public engagement that underpin this report have helped to identify the key findings below. The findings from this Foresight report build on those of the Future Risks of Frontier AI paper [1], published by GO-Science in October 2023. They draw on a common evidence base and expert engagement.

AI in future will, like today, be a mix of different models developed and operated by many different actors. This will include narrow models trained for very specific purposes. AI is not a singular thing. However, this report, and the findings below, focus on the most advanced and general models at the stage of AI referred to as Frontier AI.

- Future Frontier AI systems could have widespread positive impacts for society, including improvements in productivity and living standards, better public services, and scientific breakthroughs in health or energy. However, most experts we spoke to agreed that policy makers will need to act to fully realise these benefits, whilst mitigating the potential risks.

- The risks posed by future Frontier AI will include the risks we see today, but with potential for larger impact and scale. These include enhancing mass mis- and disinformation; enabling cyber-attacks or fraud; reducing barriers to accessing harmful information; and producing harmful or biased decisions.

- New risks could also emerge. What Frontier AI will be capable of by 2030 is highly uncertain but will shape the risks it poses. Risks will also depend on wider uncertainties in access to AI systems, ownership, safety measures, use by people and businesses, and geopolitics.

- As Frontier AI systems become more generally capable, it will become difficult to evaluate their performance and safety across all possible applications. Approaches to evaluation that address this dynamic will be needed.

- Use of highly capable narrow AI systems could also present policy makers with challenges, for example if used in widespread automation. This will need to be considered, alongside more general AI systems, when developing risk management policies.

- Over the next few years, a few large technology companies are likely to dominate Frontier AI. Longer term there is more potential for a shift away from this dynamic. However, in scenarios where highly advanced AI systems remain controlled by a few companies, they will hold a huge amount of power.

- Highly capable open source AI systems could also emerge. In scenarios where that happens, effective regulations or laws may need to cover any potential developer or user of these systems, not just the original creators.

- Negative impacts from future Frontier AI could be driven by a range of factors. These include rapid changes in AI capability, malicious use, ineffective safety systems, and wider societal consequences of well intentioned use. Policy makers will therefore need to consider interventions that can prevent or mitigate impacts associated with all these factors.

The scenarios highlight some low regret areas for policy makers that could help navigate towards a more favourable AI future:

- Addressing bias - uses of AI will be held back by any bias or unfairness, whether perceived or actually held within models. Measures to identify, minimise and mitigate bias in Frontier AI models are likely to be part of any favourable future.

- AI skills and awareness to support the safe use of AI - all scenarios look more favourable if more people and organisations in the UK are well placed to use the latest advances in AI safely and effectively.

- Stronger international collaboration - a consistent theme of the scenarios is that it’s harder to manage challenges if the mitigations governments put in place are not global. It follows that building trust, and a global approach to AI, is likely to be part of navigating to a more positive future.

Navigating towards a favourable future for AI will also require policy makers to consider choices and trade-offs. Some highlighted by the scenarios include:

- How openly accessible - open source models could accelerate innovation, involve a wider community in identifying issues, and reduce market concentration. Are these benefits worth the risks that come from anyone being able to access and adapt a powerful Frontier AI system?

- Pace of change - faster development should mean greater benefits, sooner, in terms of productivity and breakthroughs. But there are many voices in favour of slowing down to give regulatory mechanisms a chance to keep up.

- Who benefits - a consistent theme of the scenarios is the risk to those (individuals or sectors) who are left behind. How to share the benefits of future AI between citizens and its creators, and the role of government in managing this, are likely to be hotly debated out to 2030.

- Members of the public we spoke to felt that safety is paramount. There was a consensus among public engagement participants that safety was a higher priority than other factors like AI capability. Participants found risks easier to imagine and more tangible, whilst key opportunities for the future were harder to grasp and value.

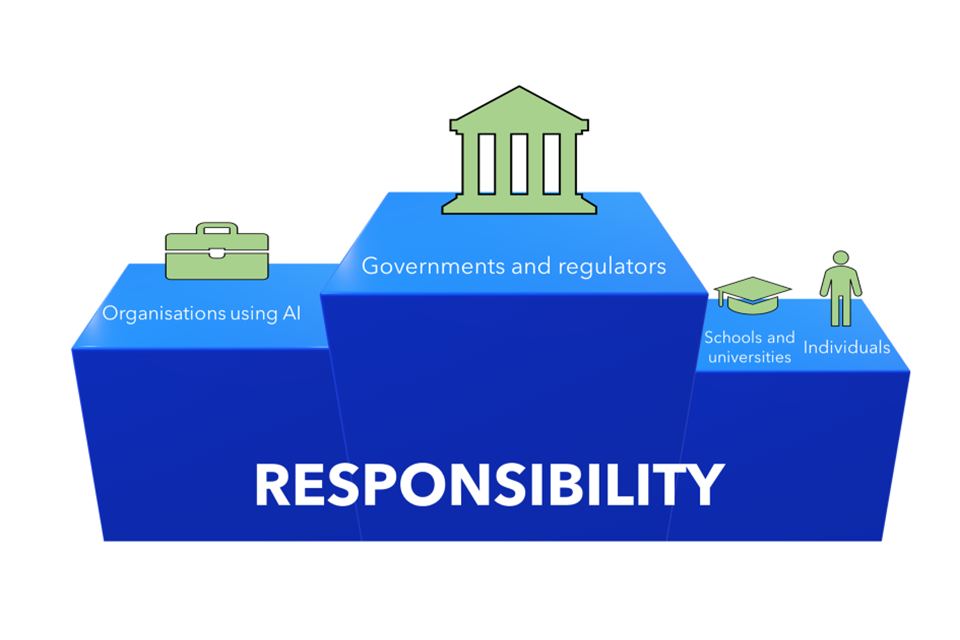

- Members of the public we spoke to felt that government and regulators are responsible for ensuring the safe use and development of AI, as well as safeguarding jobs. They also felt that organisations using AI were responsible for following regulation and behaving ethically, in order to build trust with the public.

1.7 How to use this report and the scenarios

These scenarios have been written ‘policy off’ - they don’t describe any potential policies from the UK government that might be introduced to shape the future of AI. They all include challenges and problems for policy makers to solve.

Of course, none of the scenarios will describe the real future, which will likely feature elements of all these worlds. Another, more positive future is possible, and it is implausible to imagine the UK government does nothing between now and 2030 to steer towards it.

We shouldn’t overstate the influence a single government, any government, has over developments. But policies enacted now could help navigate toward a more favourable future than any presented in these scenarios. We recommend three different types of exercise to support development of such policies:

- Governments using this work to identify the future they do want for AI. Then working back from there to develop a plan to navigate towards this future. We call this backcasting.

- Exploring how different policy responses perform in each scenario, and how they might need to be adapted to achieve their objectives in different contexts. We call this stress-testing.

- Testing plans and assumptions against unanticipated shocks to ensure they are sufficiently resilient to a range of possible outcomes.

The primary audience for this report is government departments and expert stakeholders engaged in developing AI policy. Other organisations, such as businesses and local authorities, may also find these scenarios helpful for planning and strategy development.

2. Introduction

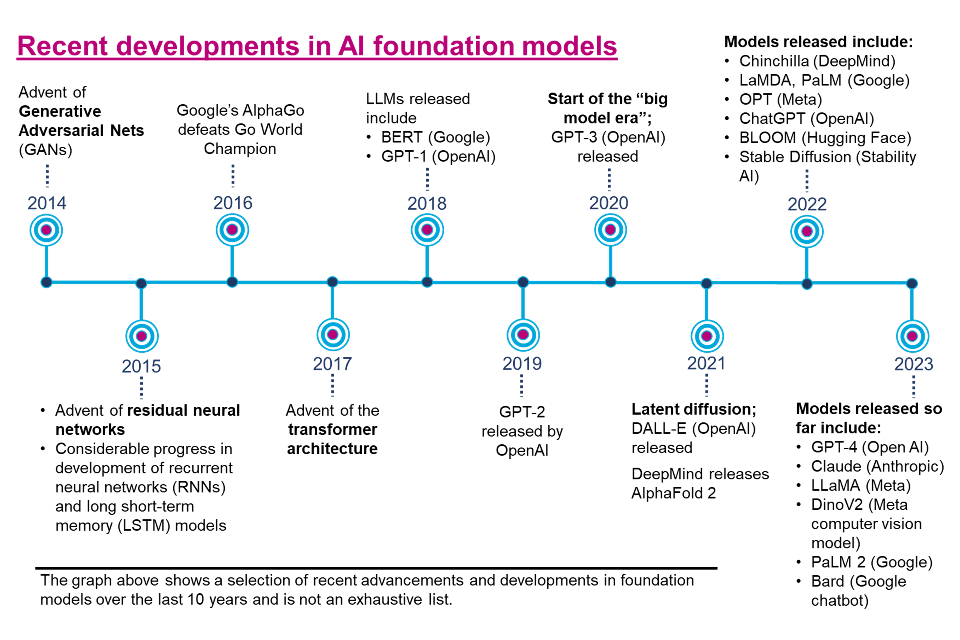

Recent developments in AI

Artificial intelligence (AI) is a broad and rapidly expanding field, encompassing the creation of machines that are capable of tasks otherwise requiring human intelligence to perform, for example problem solving and decision making. Multiple techniques are often used in combination to deliver a specific AI capability.

Historically, AI has been limited to systems able to carry out 1 or a small range of related tasks. Over the past 15 years, notable advances have come from machine learning approaches, using large neural networks and complex training methods that allow AI systems to learn how to perform a task from data.

More recently, this has led to the development of foundation models, trained using large amounts of data and compute, and capable of performing a range of tasks. This includes generative AI systems such as LLMs, capable of recognising, understanding, and generating text and other content.

An AI generated image depicting 2 people using AI, while working from home.

Figure 4: Generated with DALL-E 3. We prompted the tool to depict people using AI from home. See note below on AI generated content.

Technical developments, such as the advent of transformer architectures and approaches to reinforcement learning (RL), have also contributed in recent years to the creation of increasingly capable models at the Frontier of AI.

This report uses the government’s chosen definition of Frontier AI: a model that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models. As of October 2023, this primarily encompasses foundation models consisting of huge neural networks using transformer architectures. However, there is uncertainty over the form that Frontier AI systems could take in future.

Alongside Frontier AI becoming increasingly capable and multimodal, AI tools are being made more accessible to the general public. The ability to access models through online platforms using natural language interfaces has brought AI into the public consciousness. Within 5 days of OpenAI releasing its LLM-based chatbot ChatGPT to the public, the platform had reportedly reached 1 million users, and this grew to 100 million users within 2 months.

Other Frontier AI companies have followed suit, releasing their AI models for use by the general public. Google have used their LaMDA family of LLMs, and more recently their PaLM LLMs, to power their Bard chatbot, whilst Meta have revealed plans to launch a range of chatbots with different personas, powered by their Llama 2 LLM. Recent advancements have also extended to the creation of multimodal models, such as GPT-4, the first GPT model capable of responding to prompts of both text and images.

Data from this graphic shown below:

| Date | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 |
|---|---|---|---|---|---|---|---|---|---|---|
| Developments | Advent of Generative Adversarial Nets (GANs) | Advent of residual neural networks. Considerable progress in development of recurrent neural networks (RNNs) and long short-term memory (LSTM) models | Google’s AlphaGo defeats Go World Champion | Advent of the transformer architecture | LLMs released include: BERT (Google), GPT-1 (OpenAI) | GPT-2 released by OpenAI | Start of the ‘Big Model Era’, GPT-3 (OpenAI) released | Latent Diffusion: DALL-E (OpenAI) released, DeepMind releases AlphaFold 2 | Models released include: Chinchilla (DeepMind), LaMDA and PaLM (Google), OPT (Meta), ChatGPT (OpenAI), BLOOM (Hugging Face), Stable Diffusion (Stability AI) | Models released so far include: GPT-4 (OpenAI), Claude (Anthropic), LLaMA (Meta), DINOv2 (Meta computer vision model), PaLM 2 (Google), Bard (Google chatbot) |

Such Frontier AI models also now demonstrate limited capabilities in planning and reasoning, memory, and mathematics. A more comprehensive list of current Frontier AI capabilities can be found in the GO-Science Future Risks of Frontier AI paper [1].

Beyond Frontier AI, other AI systems and tools are being applied in a range of contexts, such as protein structure prediction and cancer imaging and diagnosis. Machine learning technology is already embedded into many online services we use every day, including social media, maps, streaming services, insurance, and online shopping. Opportunities and benefits from use of AI are highly likely to grow in impact and scope as systems become more capable in the future.

Current market structure

AI is developed and deployed by many companies and institutions, big and small. However, developments in Frontier AI are dominated by a small number of companies. These include OpenAI, Google DeepMind and Anthropic, who have the resources to train the largest models.

Although smaller companies and the open source AI community have the potential to disrupt this market structure in future, barriers such as access to compute, data and funding would need to be overcome or become less important (see Chapter 3).

Risks from AI

Whilst the increasing capability of Frontier AI offers numerous potential positive impacts, it also presents significant risks. These include increasing mass disinformation and deepfakes, enabling cyber-attacks, reducing barriers to access harmful information, and enabling fraud.

Current Frontier AI models are also susceptible to amplifying existing biases within their training data and providing potentially harmful and discriminatory responses. Training on large swathes of UK and US English internet content can mean that misogynistic, ageist, and racist content is overrepresented in the training data.

This is by no means an exhaustive list of the current risks from AI. A number of high-quality reports cover this topic in more detail, including those from HMG, the Alan Turing Institute, Parliament, the IMF, CSET, OWASP, Stanford University, and the OECD.

Over the past year, several leading figures in the field have expressed concern at the pace of recent developments and the unpredictable trajectory of future AI capability.

In March 2023, a large group of prominent AI experts, researchers and entrepreneurs signed an open letter calling for a six-month pause on the training of AI systems more powerful than GPT-4.

The heated debate on the potential for AI to present catastrophic or existential risk to humans led to calls for governments around the world to consider this a global priority.

An AI generated image depicting a group of experts signing a letter agreeing upon AI safety and safe development.

Figure 5: Generated with DALL-E 3. We prompted the tool to depict a diverse group of experts signing a letter. See note below on AI generated content.

However, many experts consider existential risks to be very low likelihood, with few plausible routes to being realised. They suggest there should be more focus on addressing current AI risks, which are set to become more acute with increasing AI capability and access. Such differing expert views present policymakers with a high degree of uncertainty.

In this context of rapid change and rising concerns, GO-Science has developed this report to help policymakers plan and develop resilient AI policies and strategies.

2.1 Scope of this report

The future of AI development is surrounded by significant uncertainty. This includes uncertainty around the development of future capabilities and safety systems, as well as how people and businesses use AI and the resultant impacts both in the UK and globally. We have developed a set of scenarios to help explore these interacting uncertainties.

The scenarios are not predictions. Each scenario explores the events leading up to a plausible future in 2030. Plausible in this context means that the experts we spoke to generally felt these scenarios could happen given the bounds of uncertainty.

The scenarios are designed to help UK policy makers, industry and civil society explore AI risks and opportunities. Developments at a global level are described, but the focus is on implications for the UK. Policy makers can use these scenarios to test strategies and policies designed to navigate to a more favourable AI future for the UK’s economy, society, and public services. To make the scenarios usable for this purpose, we have intentionally avoided introducing new government interventions in the scenarios.

One consequence of this design choice is that all scenarios are challenging, with difficult policy issues to tackle. This does not mean a more positive AI future is implausible, or that the negative impacts described are inevitable. There are many beneficial outcomes that could arise from AI developments, some of which are included in the scenarios. However, most experts we spoke to agree that policy intervention will be needed to ensure risks can be comprehensively mitigated. As a result, we have explicitly avoided including any scenarios that are largely benign or favourable.

The scenarios focus on Frontier AI: the most capable and general models available in 2030. However, the average AI systems in use could be significantly more capable than today. The scenarios therefore also consider some developments away from the Frontier.

2.2 A note on AI generated content

This report includes text artefacts and images that have been fully or partially generated by AI tools. This represents an experiment, designed to illustrate some of the current capabilities of AI.

Whilst we think this content does demonstrate some impressive AI capabilities, we also acknowledge variation in quality of some of the outputs. In particular, the AI tools we used struggled with concepts like diversity when depicting groups of people. They also produce errors, for instance a group of experts signing a table.

We decided to include these problematic images to help highlight these current biases and deficiencies. This content has been labelled as AI generated to alert the reader.

3. Methodology

This chapter sets out the methodology used to develop the AI 2030 scenarios and the key principles that informed this development.

3.1 Introduction

What are scenarios?

These scenarios are stories that describe alternative ways in which the uncertainties surrounding AI might play out in the future. Each scenario explores how different conditions might support or constrain delivery of policy and strategy objectives. Scenarios are never predictions, rather a way to imagine different versions of the future, explore how they could be brought about, identify the risks and opportunities they represent and help to guide decisions on what we should do now as a result.

The scenarios are not mutually exclusive and there is likely to be some overlap. The real future is likely to contain elements of all five scenarios to varying degrees.

What are the principles for our scenarios?

The principles that underpin these scenarios are:

- Plausible: Scenario end states should feel like they could happen by 2030. This means uncertainties had to be combined in a coherent way, considering their likely interactions. To ensure plausibility, each scenario narrative also had to consider what might happen between now and 2030 to reach the end state.

- Stretching: As the scenarios are being developed to stress-test government strategy, they should feel stretching and, in some cases, uncomfortable or negative, diverging from what policy makers consider to be the business as usual trajectory.

- External to government action: These scenarios have been designed without UK government intervention, i.e. we have intentionally avoided imagining any new regulations. UK policymakers can therefore use them to test a range of policy ideas and government actions in order to work out how best to mitigate the downsides of each scenario and navigate to a more favourable future. The potential outcomes in a more positive AI future are described in Chapter 4, but as many experts told us that reaching such a future will require policy interventions, this is not included in our scenario set.

3.2 Methodology

Our scenario development approach is a hybrid of the qualitative workshop-based approach set out in the Futures toolkit and a more technical General Morphological Analysis of how multiple related variables could plausibly combine. This hybrid approach allowed us to benefit from the qualitative insights of a large group of experts, whilst exploring complex interactions between uncertainties in a more structured way. We are grateful for the input received from over 70 experts from academia, industry, and government departments across various workshops and subsequent rounds of peer review. The methodology comprises four stages:

- Critical Uncertainties. Critical uncertainties are factors that are both important to the future of AI development and highly uncertain. They provide the building blocks for our scenarios and were developed using a combination of desk research and expert workshops. An initial list of 27 sub-uncertainties was identified. These were then clustered together into the five broad-brush critical uncertainties that were used to develop the scenarios. Table 1 shows the final five critical uncertainties and the sub-uncertainties that were clustered together to create them.

Table 1: Summary of critical uncertainties, descriptors, and sub-uncertainties

| Uncertainty | Description | Sub-uncertainty |
|---|---|---|
| Capability | What ability do AI systems have to successfully achieve a range of goals? Can they interact with the physical world? Does the level of success and range of goals achieved increase over time? | • Capability • Generality • Understanding the physical world • Autonomy and agency • Self-improvement • Developments in other technologies |
| Ownership, access, and constraints | Who owns systems? How do they deploy them and give access? What infrastructure and platforms are used to deploy systems? What constraints are there on availability of AI systems? | • Market structure • Access: closed vs open source models • System design and complexity • Levels of investment • Access to data and other inputs • Compute constraints |
| Safety | Can we build safe AI-based systems, and assure the validity and interpretability of their internal models? How robust are these systems to changes in deployment context? How successfully do their design processes ensure that their behaviour aligns to societal values? How much have we allowed AI to control systems that could impact our safety? | • Controllability • Model interpretability • Changes in deployment context • Alignment / potential for bias • Control over critical systems |
| Level and distribution of use | How much will people and businesses use AI systems? What will they use them for and why? Will they be consciously aware they are using AI, or will these systems be more subtly embedded in products and services? How does use of AI across society affect the education and training people undertake and the jobs people do? To what extent do other factors like levels of trust in institutions influence AI development and use? | • Integration into daily practices of members of the public • Level of public access, knowledge and skills inequality • Integration into business practices, products and services • UK professional skills • Public involvement in shaping how AI is used • Public sentiment • Level of AI misuse |
| Geopolitical context | What wider developments have there been at a global level that will influence AI development and use? | • International cooperation on AI • Links to cooperation on other global issues, particularly climate change • Level of conflict in the world • Reliance on globalised supply chains versus re-shoring |

- Axes of Uncertainty. For each critical uncertainty, we constructed a high-level Axis of Uncertainty consisting of 2 plausible but extreme 2030 outcomes for the uncertainty. These represent an unrealistically binary simplification, but this was necessary to help narrow down to a manageable set of scenarios, before reintroducing complexity and detail at the end of the process. Table 2 shows the final set of axes.

Table 2: Final set of axes of uncertainty

Axes of uncertainty

| Lower capability: Gradual improvement in generative AI and other specific tasks. System limited to ‘call and response’ with human oversight. Not self-improving | Higher capability: Initial AGI. Can act without human input, including in the physical world. Self-improving and can set own goals. AI can connect to other new technology. |

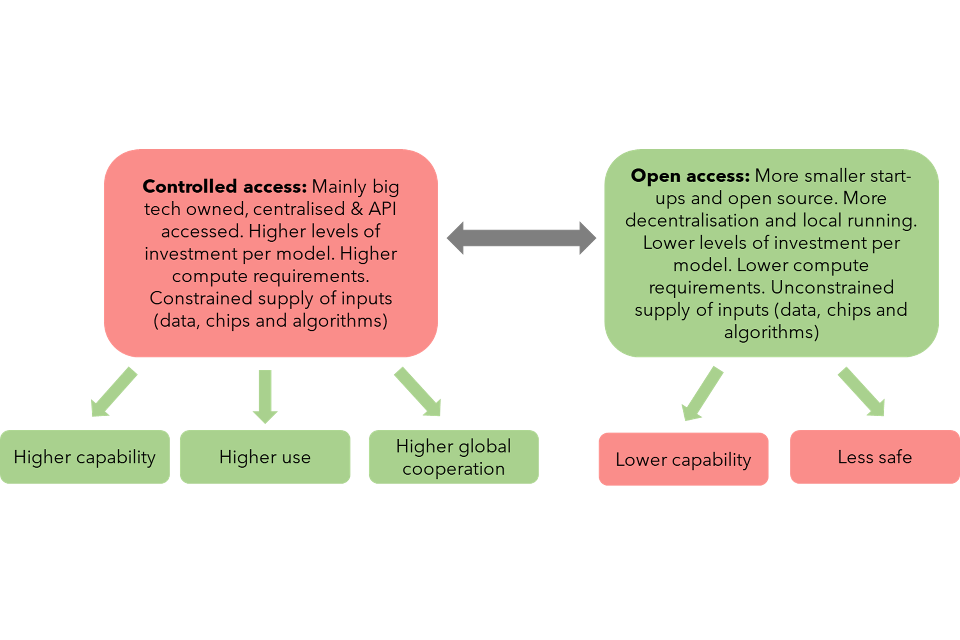

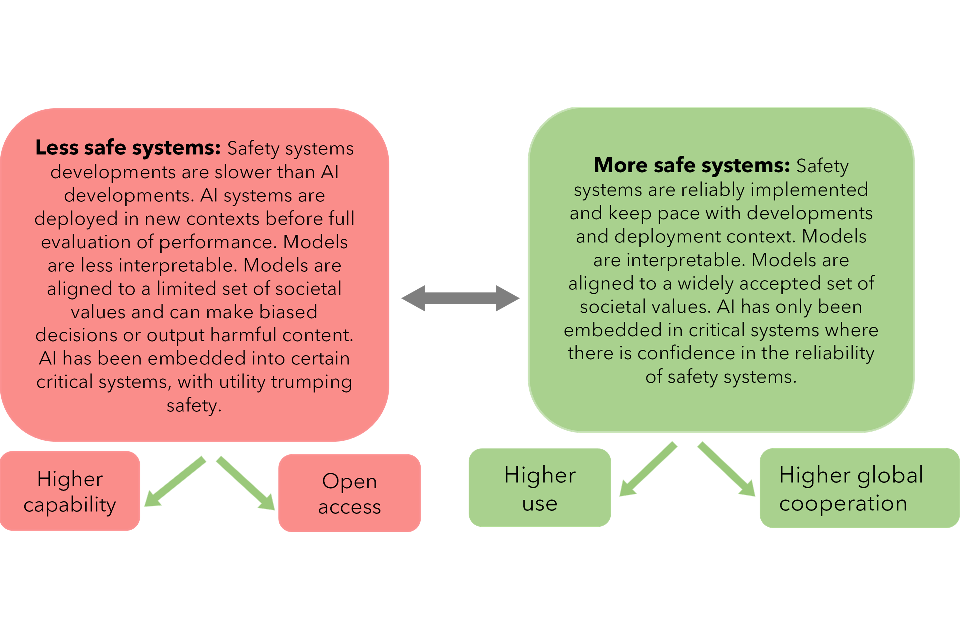

| Controlled access: Mainly big tech owned, centralised & API accessed. Higher levels of investment per model. Higher compute requirements. Constrained supply of inputs (data, chips and algorithms) | Open access: More smaller start-ups and open source. More decentralisation and local running. Lower levels of investment per model. Lower compute requirements. Unconstrained supply of inputs (data, chips and algorithms) |

| More safe: Safety systems are reliably implemented and keep pace with developments and deployment context. Models are interpretable. Models are aligned to a widely accepted set of societal values. AI has only been embedded in critical systems where there is confidence in the reliability of safety systems. | Less safe: Safety systems developments are slower than AI developments. AI systems are deployed in new contexts before full evaluation of performance. Models are less interpretable. Models are aligned to a limited set of societal values and can make biased decisions or output harmful content. AI has been embedded into certain critical systems, with utility trumping safety. |

| Higher use: AI systems are embedded in many daily practices. Businesses develop attractive AI-based products, and use of AI in these products is disclosed to the public. Government and businesses seek public views in AI-use decision making. This contributes to positive public sentiment on AI. | Lower use: AI systems are less embedded in daily practices. Businesses develop few attractive AI-based products, and use of AI in these products is opaque to the public. Government and businesses ignore public views in AI-use decision making. This contributes to negative public sentiment on AI. |

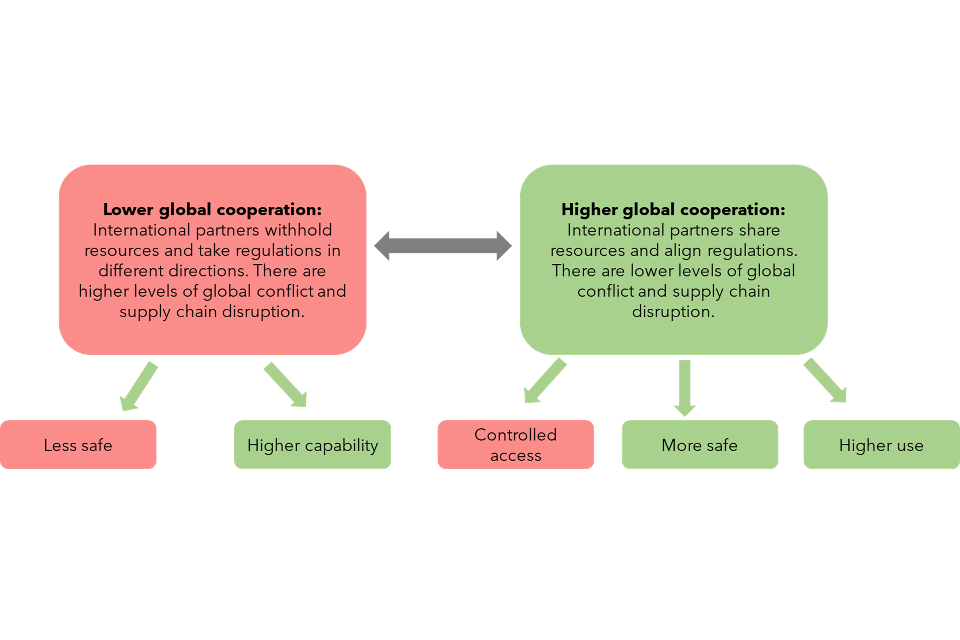

| Higher global cooperation: International partners share resources and align regulations. There are lower levels of global conflict and supply chain disruption. | Lower global cooperation: International partners withhold resources and take regulations in different directions. There are higher levels of global conflict and supply chain disruption. |

- Narrowing down the possible scenarios. Even just using the extremes of each axis of uncertainty, there are 32 combinations of outcomes and possible scenarios. We needed to narrow these down to a more manageable number. To do so, we used a process of impact mapping and morphological analysis to test each axis of uncertainty outcome against every other outcome to identify combinations that were particularly implausible or incoherent and which could be eliminated. The result was a shortlist of 13 possible scenarios, which were then voted on by experts to help identify the final five scenarios deemed to be sufficiently diverse and interesting.

- Scenario narrative development. Each of the five scenarios is made up of different combinations of outcomes for the five critical uncertainties to create a coherent, plausible, and diverse set. The final stage of the process involved developing fuller scenario narratives, informed by expert input and our own assessments of plausible outcomes for all the critical uncertainty combinations. Detailed scenario narratives are provided in Chapter 5.
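The narrowing-down stage can be illustrated with a minimal sketch. It enumerates the 32 combinations that arise from five two-sided axes and removes any combination containing a pair of outcomes judged incoherent. The axis labels follow Table 2, but the `INCOHERENT_PAIRS` set is purely hypothetical and chosen for illustration: it does not reproduce the expert judgements behind the report's shortlist of 13, so the resulting count differs.

```python
from itertools import combinations, product

# Two extreme outcomes per axis of uncertainty (labels follow Table 2).
AXES = {
    "capability": ("lower", "higher"),
    "access": ("controlled", "open"),
    "safety": ("more safe", "less safe"),
    "use": ("higher", "lower"),
    "cooperation": ("higher", "lower"),
}

# HYPOTHETICAL pairwise judgements, for illustration only.
# Each entry is a pair of (axis, outcome) values assumed to be incoherent together.
INCOHERENT_PAIRS = {
    frozenset({("capability", "higher"), ("access", "open")}),
    frozenset({("safety", "less safe"), ("use", "higher")}),
}


def all_scenarios(axes):
    """Return every combination of one outcome per axis as a dict."""
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*axes.values())]


def is_coherent(scenario, incoherent_pairs):
    """A scenario is kept only if none of its outcome pairs is flagged."""
    outcomes = list(scenario.items())
    return not any(
        frozenset(pair) in incoherent_pairs
        for pair in combinations(outcomes, 2)
    )


scenarios = all_scenarios(AXES)
shortlist = [s for s in scenarios if is_coherent(s, INCOHERENT_PAIRS)]

print(len(scenarios))  # 2 ** 5 combinations
print(len(shortlist))  # combinations surviving the hypothetical filter
```

In practice the pairwise judgements would come from the workshops, and a graded consistency score rather than a hard yes or no could be used to rank the surviving combinations before any vote.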

4. Evidence and uncertainties

4.1 Introduction

As the capabilities of AI systems rapidly improve, researchers and policymakers are working to monitor and measure these developments and their impacts. But difficulties in measuring such rapid changes mean that there is considerable uncertainty about the scale and nature of these impacts, and how they will evolve in the future.

This section summarises the evidence base that has informed our scenarios, as well as highlighting the areas where there is significant residual uncertainty. We focus on the five Critical Uncertainties introduced above, but first cover more certain drivers of change.

Drivers of change with more certainty

Whilst the Critical Uncertainties form the building blocks for our scenarios, there are also other, more certain drivers of change for AI development that will shape the majority of plausible futures. The following factors have been identified by engagement with a range of experts from academia and industry, in combination with a review of the existing literature in this area.

These factors are not all mentioned explicitly in each scenario narrative, but it can be assumed that the changes described below are, to some extent, present in the background of all five of our scenarios.

Frontier models will continue to get more capable over the next few years. The trend in recent years has been that increased amounts of compute and training data have yielded increased capability of models, referred to as scaling laws. Whilst these improvements have not always followed a neat linear trajectory, and have sometimes been unexpected, bigger models have, in general, been more capable. With industry experts suggesting that the next iteration of Frontier models will be trained using an order of magnitude more compute than current systems, it looks certain that these models will see some level of capability improvement.

As well as becoming more proficient at existing capabilities, like content creation and computer vision, experts also expect future Frontier AI systems to demonstrate new capabilities, such as increased multi-modality, creating more long-form structured text, and carrying out data analysis and visualisation. Current and potential future capabilities are discussed in more detail in the Capability section below and in the GO-Science Future Risks of Frontier AI paper1.

Although some experts have voiced concerns that compute or data shortages or the end of scaling laws could constrain Frontier AI developments, this was not anticipated to be an immediate concern.

A few AI companies are likely to remain at or close to the frontier of AI. The leading, most capable AI models are currently developed by big tech companies such as OpenAI, Google DeepMind and Anthropic who have access to the funding, skills, data and compute required. It is likely that a select group of AI laboratories will carry on developing the Frontier models as academia and open source communities continue to be unable to access the same levels of funding.

While big companies will likely dominate the AI frontier, open source models, like Falcon or Mistral, cannot be discounted. If such an open source model were to approach the capability of an AI system like GPT-4, it would be a powerful tool for developers outside these big companies to adapt. However, even if notable advancements were to originate from the open source community, the leading AI companies would quickly be able to catch up.

Existing harms are already being exacerbated by AI, and this is likely to get worse. Harms from activities like spreading mis- and disinformation, deception, or creation and dissemination of other harmful content are already being increased by use of AI tools. Digitally manipulated images are also being used for political purposes, and there are concerns that deepfakes and misinformation could threaten democratic processes.

Evidence has shown that AI can produce compelling disinformation that can be harder to spot than disinformation produced by humans. As AI systems continue to become more widely available and more capable, many experts believe that this will lower the bar for such activities and increase their impacts.

Machine learning technology is already embedded into many online services, and this is likely to continue. Integration of AI into services such as healthcare and banking has already taken off in recent years. Many of the online tools we use every day rely on integrated machine learning algorithms to automate and personalise services, including social media, maps, streaming services, insurance, and online shopping (discussed in more detail in the Level and distribution of use section below). As AI systems become increasingly capable and readily available, it is somewhat inevitable that more private and public services will incorporate these tools and technologies.

Critical uncertainties

As described in Chapter 3, we have identified five broad critical uncertainties for AI development: ‘Capability’, ‘Ownership, access, and constraints’, ‘Safety’, ‘Level and distribution of use’, and ‘Geopolitical context’. These form the building blocks of our scenarios. Each is made up of a series of sub-uncertainties, described in more detail below. Each section also includes ‘wildcards’, more radical possible outcomes associated with the critical uncertainty, alongside a high-level description of its relationship to the other critical uncertainties.

| Capability: What ability will AI systems have to successfully achieve a range of goals? Will this include interaction with the physical world? How quickly will the performance and range of capabilities increase over time? |

| Ownership, access, and constraints: Who controls systems? How accessible are they? What infrastructure and platforms are used to deploy systems? What constraints are there on the availability of AI systems? |

| Safety: Can we build safe AI-based systems, assuring their validity and interpretability? How robust are systems to changes in deployment context? How successfully does system design ensure AI behaviour aligns to societal values? |

| Level and distribution of use: How much will people and businesses use AI systems? What for and why? Will they be consciously aware they are using AI, or not? How will people be affected by AI misuse? How will use affect the education and jobs people do? |

| Geopolitical context: What wider developments have there been at a global level that will influence AI development and use? Will there generally be more cooperation on big issues, or more conflict? |

4.2 Capability

Overview

Capability can be defined as the range of tasks or functions that an AI system can perform and the proficiency with which it can perform them. This includes how the level of success and range of goals achieved increases over time, as well as the extent to which these systems are able to interact with the real world.

Whilst today’s Frontier AI models are significantly more capable than their predecessors, there is ongoing debate over whether they display some capabilities, such as planning or reasoning. And whilst it is almost certain that Frontier AI will get more capable over the next few years, there is significant uncertainty over which capabilities will be developed and when.

This in turn means that the risks posed by future Frontier AI systems are highly uncertain. However, experts highlighted several capabilities, that if realised, could increase the likelihood of future AI systems posing a serious risk. These included: agency, autonomy, self-improvement, and the ability to evade human oversight and shut down.

Sub-uncertainties

Generality

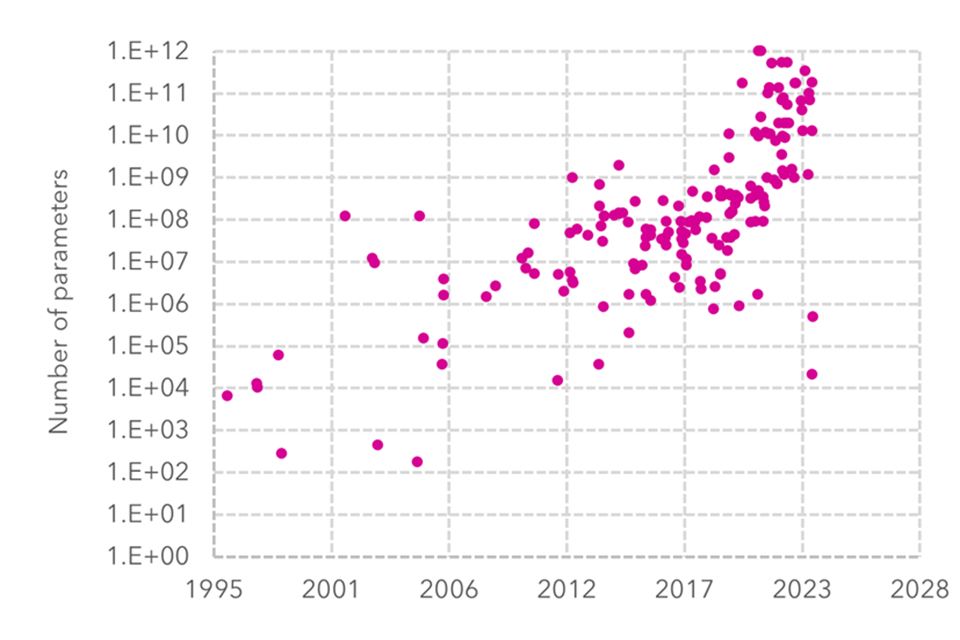

Historically, AI systems have tended to be trained on a narrow dataset to carry out one or a small range of related tasks. More recently, there has been a shift towards using complex training approaches and increasing amounts of data and compute to develop large and powerful neural networks. The graph below illustrates the trend of an increasing number of parameters in leading AI systems.

The last decade has seen a clear trend of increasing model size and compute yielding improved capabilities and performance on benchmark tests – so-called “Scaling Laws”28. In addition to models showing improved accuracy in tasks they have been trained for, such as next word prediction, new capabilities have also “emerged” as models have increased in size, resulting in improved generality (see box below).

Figure 6: The number of parameters (variables adjusted during training to establish how input data gets transformed into desired output) in notable AI systems from 1995-2023. Source: Epoch (2023)

Data from this graphic shown below:

Number of Notable Systems by E+ Parameter

| Date | E+00 | E+01 | E+02 | E+03 | E+04 | E+05 | E+06 | E+07 | E+08 | E+09 | E+10 | E+11 | E+12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1995-2001 | 1 | 3 | 1 | ||||||||||
| 2001-2006 | 1 | 1 | 3 | 1 | 3 | 2 | |||||||
| 2006-2012 | 1 | 4 | 5 | 1 | |||||||||
| 2012-2017 | 2 | 5 | 10 | 22 | 3 | ||||||||
| 2017-2023 | 6 | 9 | 18 | 22 | 13 | 10 | 5 | ||||||
| 2023-2028 | 1 | 1 | 1 | 2 | 5 | 1 |

Emergence: a disputed paradigm

As foundation models have increased in size, a range of “emergent” capabilities have been reported. This refers to a skill that the model was not explicitly designed to do, and was not present in smaller models, “emerging” above a certain scale. One example of this is the ability to perform addition, subtraction, and multiplication.

There is ongoing debate as to how many capabilities truly “emerged”. Some experts cite early experiments suggesting “emergent” capabilities can be explained by models following instructions and pattern matching in their training data (in-context learning and memorisation respectively). Further, it was suggested to us that many emergent abilities are at least within the expected domain of the model in question (for example, producing text).

However, while it remains plausible that unexpected capabilities could emerge as models get bigger, the capabilities and generality of future Frontier AI systems will be highly uncertain and making detailed predictions will be challenging.

Some experts believe we are on a trajectory towards artificial general intelligence (AGI), a system capable of human-level or higher performance across most cognitive tasks. In 2023, researchers at Microsoft were struck by the capabilities exhibited by GPT-4 across a variety of domains and tasks, enough to posit the existence of “sparks of artificial general intelligence”. How close we are to AGI remains the subject of debate. Many experts are sceptical about it arriving soon, and without new model architectures. Others, including some who build current Frontier Models, are more bullish.

Given the potential future risks that a highly advanced, general AI system could pose, many believe it is a possibility that should be planned for. The ‘Safety’ section below discusses challenges in evaluating the performance and safety of a highly advanced, general AI system across all possible applications.

Whilst many labs are aiming to develop increasingly general systems, experts we spoke to also expect the development of capable but narrow AI systems to continue. Such systems can provide cutting-edge performance for specific applications and use cases, for example, protein structure prediction using AlphaFold. Narrow AI systems, fine-tuned to perform specific tasks, may also be easier to integrate into business processes.

How much more general will Frontier AI systems be by 2030? Will unexpected capabilities emerge? What will the role of advanced narrow AI systems be?

The rest of this section covers other capabilities that one might expect from a highly general future AI system.

Computer vision

Computer vision covers a range of tasks from image labelling to object classification which are becoming increasingly well developed in today’s Frontier AI systems. Large multimodal datasets have led to adaptable models that can respond to text and images, such as GPT-4, SAM, CLIP, PaLM-E, and DINO. Continuing to improve these capabilities is likely to be important for developing more general Frontier AI systems in future, although there remains uncertainty over whether computer vision capability will scale in line with increasing size of multimodal datasets.

Planning and reasoning

Current Frontier AI models have displayed limited planning and reasoning abilities, for example passing exams that require problem solving. However, there is ongoing debate about the extent to which they show true abstract reasoning or are matching to patterns in their training data. Novel architectures, training, and fine-tuning approaches are already being explored to enhance planning and reasoning capabilities. However, there is uncertainty over which of these novel approaches, if any, will prove the most successful for future Frontier AI systems.

Enhanced memory

Current Frontier AI models do not have an explicitly built-in capability to remember previous tasks, outcomes and users. Enabling models to query up to date databanks or increasing the length of user inputs can confer some of the useful properties of memory but often at the expense of accuracy and cost. Several approaches to improving AI memory are being pursued, although it is uncertain which will be most successful. These include developing cheaper and more effective ways of processing large prompts, as well as connecting models to banks of relevant information, known as retrieval augmented generation.
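As a rough illustration of retrieval augmented generation, the sketch below selects the stored passages that best match a question and places them in the prompt ahead of the question. It is a toy under stated assumptions: the word-overlap scoring, the `DOCUMENTS` list and the function names are all hypothetical, real systems typically use learned embeddings and a vector index, and no language model is actually called.

```python
import re

# Hypothetical "bank of relevant information" for the example.
DOCUMENTS = [
    "The transformer architecture was introduced in 2017.",
    "AlphaFold predicts protein structures.",
    "RoboCat is a self-improving agent for robotics.",
]


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Prepend the retrieved passages to the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


question = "When was the transformer architecture introduced?"
print(build_prompt(question, DOCUMENTS, k=1))
```

The trade-off noted above is visible even here: a larger `k` gives the model more to draw on, but lengthens the prompt and so raises processing cost.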

Understanding the physical world

Another key uncertainty is the extent to which AI systems will be successfully integrated with robotic systems to interact with the physical world. As an example, Google DeepMind’s Robotic Transformer 2 (RT-2) system, underpinned by their PaLM-E language model, has displayed some capability to recognise and move physical objects when prompted. Developing AI systems with an understanding of the physical world remains a key outstanding challenge and is likely to be crucial for the future application of Frontier AI systems for the design and optimisation of physical processes (e.g. manufacturing). This will also require the ability of AI systems to apply concepts such as space, time, gravity, and understand how different objects interact.

Experts have suggested that training models on an increasingly wide range of multimodal data (e.g. images and video) could accelerate the degree to which AI systems can understand the real world.

At what rate will this capability improve by 2030? How will it interact with developments in the generality vs narrowness of Frontier AI systems?

Autonomy and agency

Developments in foundation models over the last 18 months have prompted efforts to develop more autonomous digital systems. This encompasses the ability to plan and complete multiple sequential tasks to achieve a given goal without direct human oversight. Increased autonomy and agency of AI systems has been facilitated by the development of “wrappers” that harness the capabilities of LLMs for more complex multi-step problem solving and decision-making. Notable examples include apps such as LangChain and Auto-GPT. The latter is powered by GPT-4 and breaks down its goal into sub-tasks before using tools on the internet to achieve these without human oversight. As one example, when asked to build an app, it is claimed it was able to: work out what software and content is needed, secure access to the tools, write code, test it, and review the results. This was reported, on an unverified blog, to have been completed with Auto-GPT asking and answering its own questions until the task was complete. However, other users note that Auto-GPT frequently gets stuck in a loop of questions and answers.

Currently, autonomous agents possess a small range of capabilities and can only independently carry out relatively basic tasks. However, research is ongoing in this area to develop the necessary platforms and infrastructure to connect agents to a wide range of tools. Improving other capabilities such as memory, planning and reasoning, and self-correction is likely to be crucial to delivering truly autonomous AI agents.

As outlined in the “Generality” section above, research is ongoing into the development of improved planning and reasoning and memory capabilities but there is uncertainty over which approaches will be most successful, and how they can be integrated into truly autonomous AI agents in future. Will such agents be available by 2030?

Self-improvement

Some experts believe that in future, AI models could become able to suggest improvements or autonomously self-improve, but how this capability could emerge remains unclear. Some have suggested that using AI to produce training datasets or provide feedback to models during reinforcement learning could provide an important initial step towards self-improvement. Researchers at Google DeepMind have recently developed a self-improving AI agent for robotics called RoboCat that can self-generate new training data to improve its technique.

It is also plausible but uncertain that future AI systems may be able to autonomously edit and fine-tune their own code and training. Some experts view the ability of future AI systems to edit their own code as a potential pathway to an “intelligence explosion” event along the trajectory towards AGI although there is significant uncertainty about how this could occur. Suggestions that AI systems are already capable of improving on the code-writing ability of humans currently remain disputed and this form of self-improvement has so far required human oversight.

Changes in model architecture

As well as uncertainty over the capabilities that may emerge in the next generation of Frontier AI, there is also uncertainty over the development of new model architectures. The advent of the transformer architecture in 2017 paved the way for the Frontier LLMs in existence today. Scaling of data and compute used to train LLMs has in recent years resulted in new and unexpected capabilities. However, it is plausible that a new architectural development could be an alternative accelerant for the development of increasingly advanced AI systems in future.

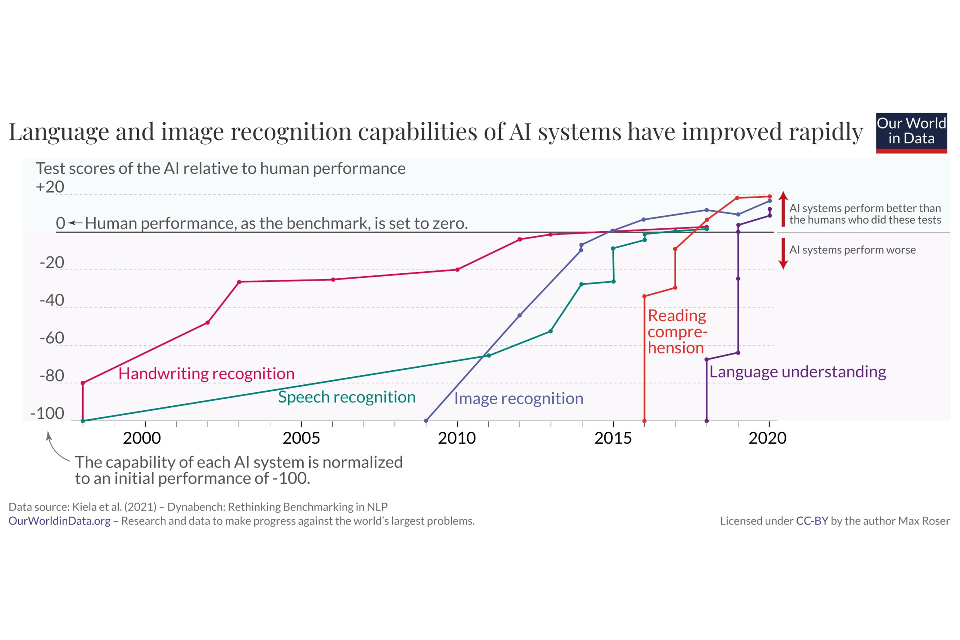

Figure 7: The change in capabilities of AI systems, 1998 – 2020. Developments in “deep learning” (2012) and transformer architecture (2017) resulted in step changes in capability. Source: Kiela et al. (2021) – Dynabench: Rethinking Benchmarking in NLP.

Data from this graphic shown below:

Test Scores of AI Relative to Human Performance, human performance as a benchmark is set to 0

| Date | Handwriting Recognition | Speech Recognition | Image Recognition | Reading Comprehension | Language Understanding |
|---|---|---|---|---|---|
| 1998-2000 | -70 | -95 | N/A | N/A | N/A |
| 2000-2005 | -35 | -90 | N/A | N/A | N/A |
| 2005-2010 | -25 | -75 | -80 | N/A | N/A |
| 2010-2015 | -5 | -55 | -30 | N/A | N/A |
| 2015-2020 | 5 | 5 | 10 | 20 | 10 |

Developments in other technologies

The emergence of new AI capabilities could be facilitated by developments in other technologies. For example, improvements in hardware and robotic platforms could enable the integration of machine learning with embedded systems, which can reduce latency and increase the capability of machine learning in edge devices, such as smart watches and cameras.

The fields of neuroscience and AI are heavily intertwined and continue to drive each other forwards. Future developments in applied neuroscience could provide more insight into how the brain performs computation, leading to advances in neuromorphic computing and the design of more complex machine learning models. Developments in other emerging technologies, such as quantum computing and future telecoms, could also affect developments in AI.

Relationships to other critical uncertainties

This section provides a few key examples of how different outcomes for the ‘Capability’ critical uncertainty could be correlated with outcomes on other critical uncertainties, including how these correlations might be contingent on other factors. This information was developed using outputs of the scenario development workshops (see Chapter 2).

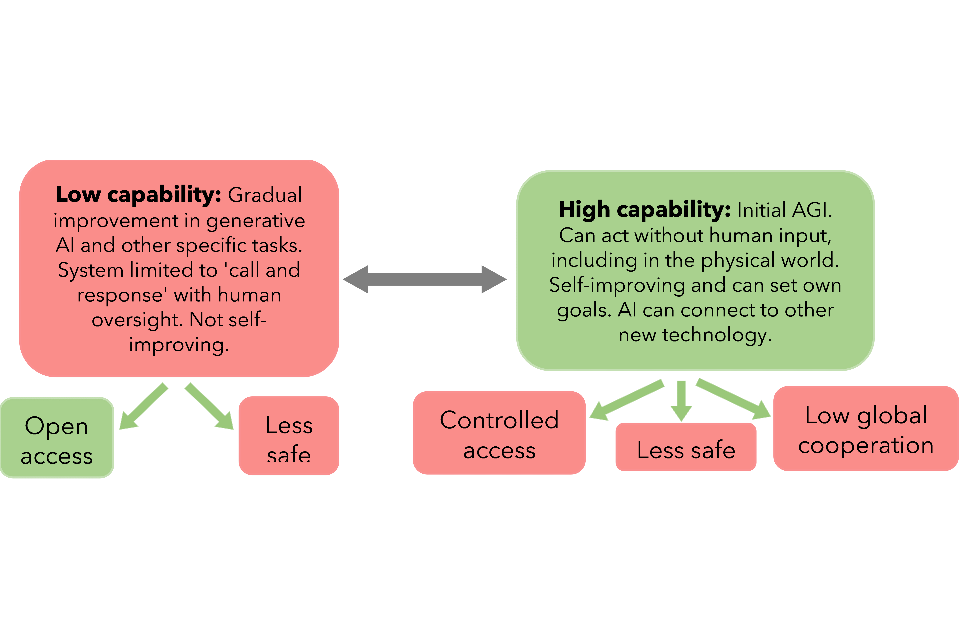

| Low capability: Gradual improvement in generative AI and other specific tasks. System limited to ‘call and response’ with human oversight. Not self-improving. Experts considered lower capability to be most strongly coherent with: Open access: If capability development is slow (suggesting no big new breakthroughs and scaling laws running out), open source will catch up to big labs. Less safe: Although many safety concerns arise from the potential for increasing capability, others related to misuse could still grow in a low capability world. This could be particularly problematic if there is a diverse array of lower capability AI systems that are harder to regulate. | High capability: Initial AGI. Can act without human input, including in the physical world. Self-improving and can set own goals. AI can connect to other new technology. Experts considered higher capability to be most strongly coherent with: Controlled access: A world with higher capability is more likely to be one in which big tech firms have accelerated developments. It is also likely that this would be a world with high data and compute requirements, and where firms control access to these capable systems. Less safe: Safety and regulations tend to lag behind capability – the faster the capability improvement, the more this will be the case. Low global cooperation: It’s plausible that development of high capability systems could drive an ‘arms race’ dynamic. |

Wildcards

Wildcards are radical outcomes associated with each critical uncertainty. These are considered possible, but not likely, on a 2030 timescale. Policy makers could use these as possible ‘shocks’ to test their plans against (see Chapter 6).

Superintelligence

An AI system is developed that is capable of autonomous and recursive self-improvement. This results in an intelligence explosion event, leading to the emergence of an ‘artificial superintelligence’, which exceeds the cognitive performance of all humans at all tasks. Whilst the possibility of such an outcome is disputed, particularly on a 2030 timescale, its impacts could be so significant that there may be value in policy makers considering such a potential shock.

Neurological integration

With advances in AI capability and complementary hardware, people start to embed AI to improve their own capabilities. This begins with wearable tech but escalates to people using technological implants designed to enhance mental and physical performance. This has the potential to divide society, both in terms of inequality, with those who can afford to enhance their own capabilities benefiting most, as well as a broader philosophical debate about whether this signals a change in what it means to be human.

4.3 Ownership, constraints and access

Overview

The Frontier AI industry is heavily concentrated, with a handful of technology firms such as OpenAI, Google DeepMind and Anthropic developing and controlling the leading models. As systems become increasingly capable and complex, there is uncertainty over whether computational and data requirements will hinder the ability of smaller companies and the open source community to keep pace with Frontier AI firms.

The accessibility of Frontier AI to developers and users, driven by both open source and closed-source technologies, has significantly increased in recent years. It remains to be seen how future AI systems will be deployed and how access will be controlled.

Sub-uncertainties

Market structure

The current Frontier AI market is dominated by a small number of private sector organisations, including technology giants such as Meta and Google DeepMind. Their dominance is evidenced by the fact that 76 AI startups were acquired by five technology companies between 2010 and 2021, with Apple and Google responsible for 60% of these acquisitions.

A recent report commissioned by the UK Department for Science, Innovation and Technology (DSIT) estimated that large firms generated 71% of all UK AI revenues despite comprising only 4% of the total number of UK AI firms. The future market structure of the Frontier AI ecosystem is uncertain; however, continued market concentration could pose risks around unequal access to, and impacts from, AI. This is particularly true if the leading models continue to be closed-access. Open source developments could help to diversify the Frontier AI market, although they are often dependent on big tech corporations.

Access

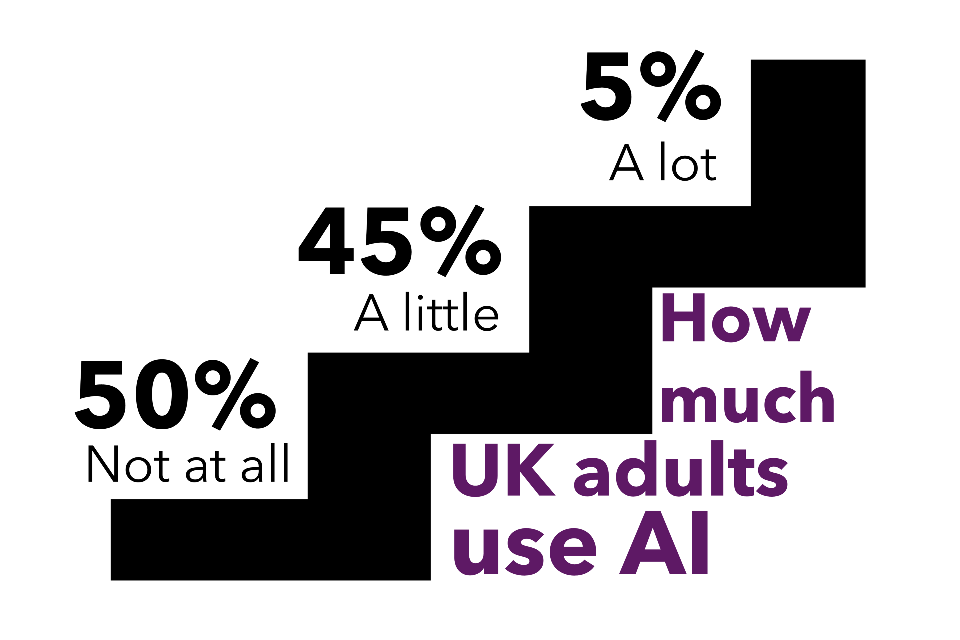

Over the last year, the public release of generative AI systems, including LLM chatbots such as OpenAI’s ChatGPT, has paved the way for rising public access to and use of AI applications. ONS data from May 2023 indicate that 5% of adults in the UK use AI a lot and 45% use AI a little in their day-to-day life. A third of adults also report using chatbots in the past month. However, access to Frontier AI services is increasingly being controlled using paywalls and the rise in the number of people experimenting with AI tools for fun appears to be slowing. Following reports in February 2023 suggesting that ChatGPT was the fastest-growing app in history, visits to the ChatGPT website subsequently declined for three consecutive months between May and August 2023. Analysis has also suggested that the average amount of time spent by users on the website declined monthly from March through to August 2023.

Closed vs open source models

The majority of Frontier AI models released to date by the leading companies, such as OpenAI, Google DeepMind and Anthropic, have been closed access. The underlying code and parameters such as weights and biases have remained confidential. However, the increasing availability of data and computational resources has bolstered the open source AI community.

In May 2023, an unverified leaked memo, allegedly from an unnamed senior software engineer at Google DeepMind, expressed concern that open source models were quickly catching up to the capabilities of closed-source models produced by the likes of Google and OpenAI. Meta have the resources to compete with other Frontier organisations and continue to release open source models of greater scale and capability (e.g. Llama2). Other open source models such as Falcon are also showing signs of closing the gap to the leading closed models.

Making a system accessible to many developers or users may increase the risk of misuse by malicious actors compared to private systems. However, open systems support scrutiny by a larger developer community who could spot biases, risks, or faults, and systems can be tailored for specific user needs. Alternatively, if the Frontier AI ecosystem comes to be dominated by one, or a small number of, closed models, this could heighten the potential for safety failures (e.g. prompt injection attacks) or for undetected biases to be propagated across multiple use cases.

Whilst it seems almost certain that open source models will continue to improve in future, it is uncertain whether they will be able to keep pace with the closed models released by Frontier labs. If capability continues to scale with data and compute, it appears unlikely that the open source community will be able to access the same levels of funding, data and compute that a select few companies have access to.

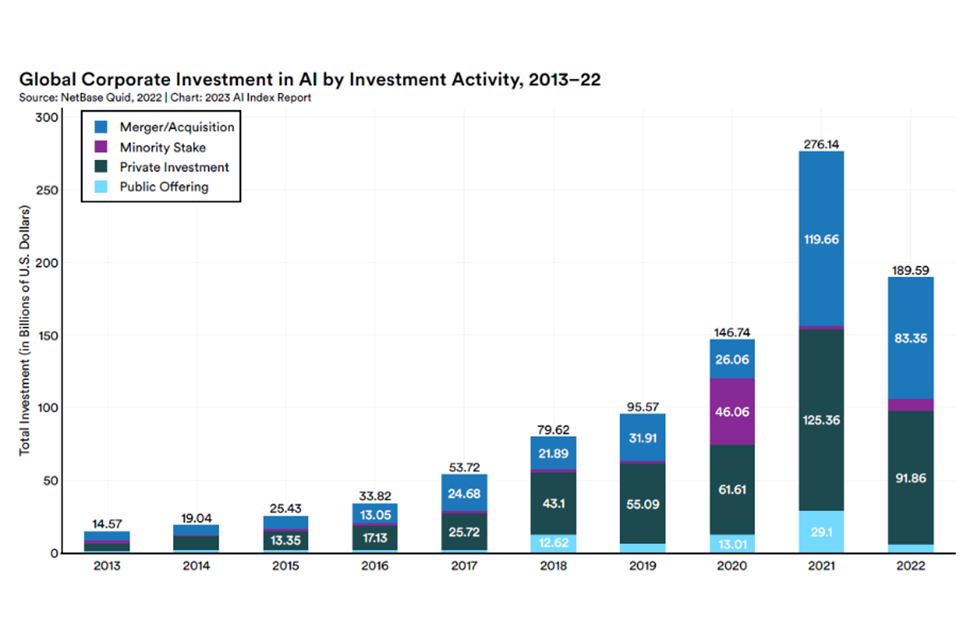

Levels of investment

Between 2013 and 2022, global corporate investment in AI increased by 1200%, from ~$15 billion to ~$190 billion (Figure 8). Although year-on-year investment dipped by 30% from 2021 to 2022, the overall upward trend in AI investment is expected to continue, with spending on AI-centric systems estimated to exceed $300 billion in 2026. The global annual value of venture capital funding in AI increased by 25x, from $3 billion to $75 billion, between 2012 and 2020. Whilst increases seem likely to continue in the short term, the medium-term trajectory is more uncertain, with potential for ongoing increases, slowing or volatility.

Figure 8: Global Corporate Investment in AI by Investment Activity, 2013-22. Source: NetBase Quid, 2022 | Chart: 2023 AI Index Report

Data from this graphic shown below:

Global Corporate Investment in AI by Investment Activity 2013-2022, Total Investment (in Billions of U.S. Dollars)

| Year | Merger/Acquisition | Minority Stake | Private Investment | Public Offering | Total |
|---|---|---|---|---|---|
| 2013 | Number not shown | Number not shown | Number not shown | Number not shown | 14.57 |
| 2014 | Number not shown | Number not shown | Number not shown | Number not shown | 19.04 |
| 2015 | Number not shown | Number not shown | 13.35 | Number not shown | 25.43 |
| 2016 | 13.05 | Number not shown | 17.13 | Number not shown | 33.82 |
| 2017 | 24.68 | Number not shown | 25.72 | Number not shown | 53.72 |
| 2018 | 21.89 | Number not shown | 43.1 | 12.62 | 79.62 |
| 2019 | 31.91 | Number not shown | 55.09 | Number not shown | 95.57 |
| 2020 | 26.06 | 46.06 | 61.61 | 13.01 | 146.74 |
| 2021 | 119.66 | Number not shown | 125.36 | 29.1 | 276.14 |
| 2022 | 83.35 | Number not shown | 91.86 | Number not shown | 189.59 |
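The headline percentages quoted for Figure 8 can be reproduced from the totals in the table above. The following is a minimal sketch for readers who want to check the arithmetic; the helper name `pct_change` is illustrative only, and the venture capital figures are the rounded values quoted in the text rather than values from the table.

```python
# Totals (billions of US dollars) copied from the Figure 8 data table.
totals = {2013: 14.57, 2021: 276.14, 2022: 189.59}


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new` (hypothetical helper)."""
    return (new - old) / old * 100


growth_2013_2022 = pct_change(totals[2013], totals[2022])
dip_2021_2022 = pct_change(totals[2021], totals[2022])

# Venture capital funding: rounded figures quoted in the text ($3bn to $75bn).
vc_multiple = 75 / 3

print(f"2013-2022 change: {growth_2013_2022:.0f}%")
print(f"2021-2022 change: {dip_2021_2022:.0f}%")
print(f"VC funding multiple, 2012-2020: {vc_multiple:.0f}x")
```

On these figures the 2013 to 2022 increase is roughly 1200% and the 2021 to 2022 fall is roughly 31%, consistent with the rounded values in the text.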

Compute constraints

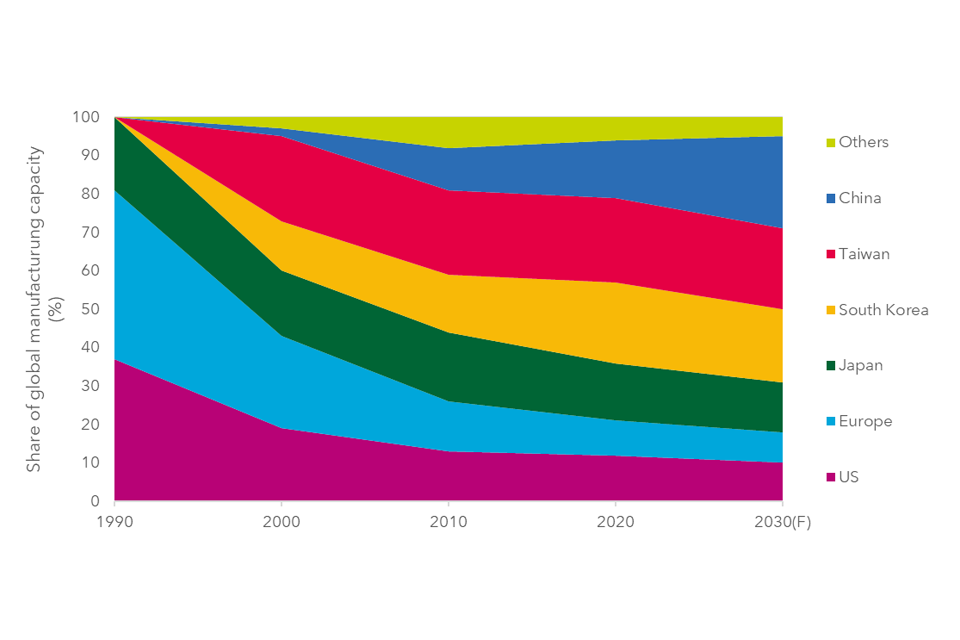

Development of Frontier AI systems has become highly dependent on access to significant compute resource for training AI models, which itself is dependent on the semiconductor supply chain. Restricted access to cloud computing services could impact future AI model training, development and deployment, as could increases in cost or disruption of access to next generation hardware (e.g. Nvidia’s GH200 chip). In particular, rising GPU demand coupled with the concentration of the market is already resulting in chip shortages, impacting the development and deployment of Frontier AI systems. The production of semiconductors is concentrated in Asia, with Taiwan alone producing 60% of the global semiconductor supply, and 90% of the most advanced chips. Geopolitical tensions are already influencing the supply of hardware critical to AI systems and there is potential for this to continue to impact developments at the Frontier of AI (see Geopolitical Context section).

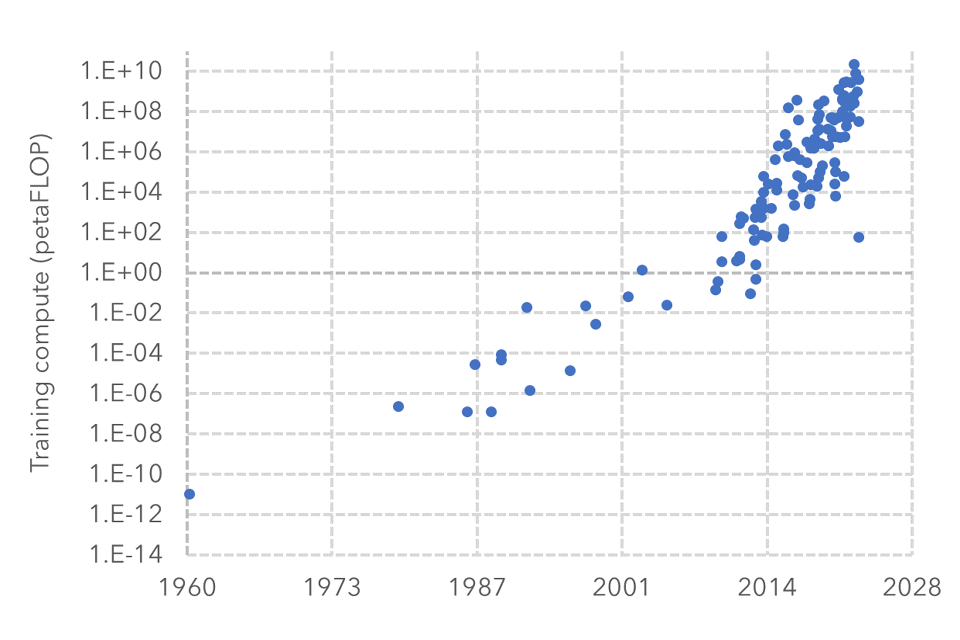

Figure 9 – Training compute used for notable AI systems, measured in petaFLOP (10^15 floating point operations), estimated from AI literature, though with some uncertainty. Source: Epoch (2023)

Data from this graphic shown below:

Training compute used for notable AI systems, measured in petaflop (E+/-)

| Date | E-14 | E-12 | E-10 | E-8 | E-6 | E-4 | E-2 | E+00 | E+02 | E+04 | E+06 | E+08 | E+10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1960-1973 | N/A | 1 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| 1973-1987 | N/A | N/A | N/A | N/A | 2 | 1 | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| 1987-2001 | N/A | N/A | N/A | N/A | 2 | 3 | 3 | N/A | N/A | N/A | N/A | N/A | N/A |
| 2001-2014 | N/A | N/A | N/A | N/A | N/A | N/A | 3 | 9 | 10 | 7 | N/A | N/A | N/A |
| 2014-2028 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | 5 | 17 | 24 | 27 | 7 |

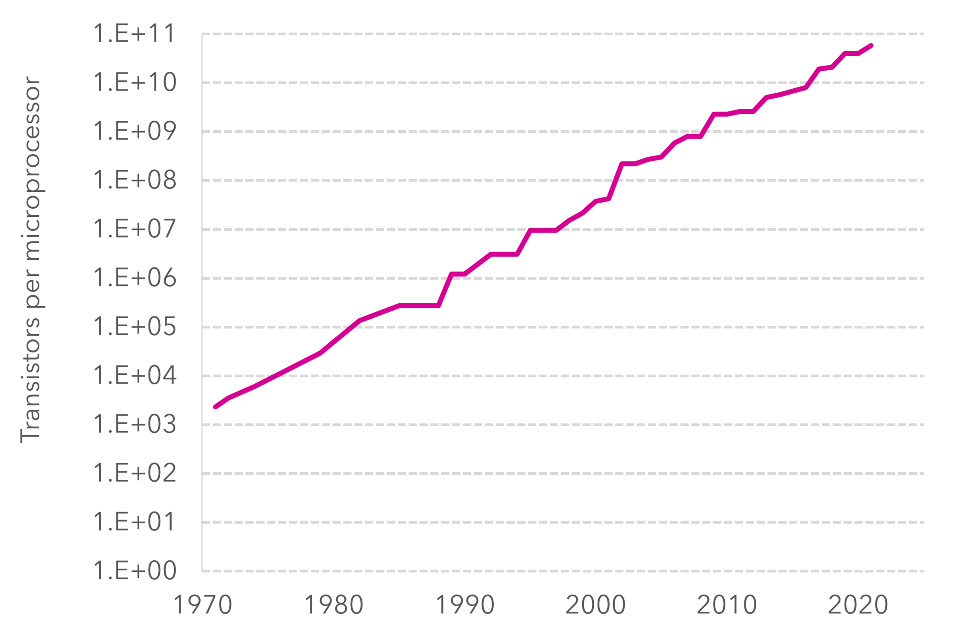

Figure 10 – The number of transistors that fit into a microprocessor 1971-2021. Source: Karl Rupp, Microprocessor Trend Data (2022)

Data from this graphic shown below:

| Date | Transistors by microprocessor (E+) |
|---|---|
| 1970 | E+3.3 |
| 1980 | E+4.6 |
| 1990 | E+6.1 |
| 2000 | E+7.3 |
| 2010 | E+9.4 |
| 2020 | E+10.6 |

Furthermore, there is uncertainty over whether the increases in compute that have powered recent advances in AI are sustainable in the long term. The amount of compute used in training runs for AI models has been increasing exponentially over the last decade, as illustrated in Figure 9. Such training runs are extremely costly and energy-intensive, as is the running of servers to deploy Frontier AI models. The environmental impact of training and deploying AI in this way is likely to be challenging from a sustainability point of view and could influence future development.

In addition, Moore’s Law, the observation that the number of transistors on an integrated circuit doubles approximately every 2 years, has held true over the past fifty years (Figure 10). However, experts are increasingly concerned that this relationship will soon come to an end as transistors approach the physical limits of operating in ever smaller circuits at higher temperatures. At this point, firms may find it increasingly difficult to reduce computational costs, creating uncertainty over the potential knock-on effects on developments in AI. The emergence of cost-effective alternative compute technologies, designed with AI in mind, could potentially provide solutions.
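As a rough consistency check on the two-year doubling claim, the doubling time implied by the Figure 10 data can be estimated from its first and last rows. This is a sketch under stated assumptions: the table entries are read as base-10 exponents (so E+3.3 is taken to mean about 10^3.3 transistors), and those values are themselves approximate readings from a chart.

```python
import math

# Assumption: Figure 10 table entries are base-10 exponents of transistor counts.
log10_transistors_1970 = 3.3
log10_transistors_2020 = 10.6
years = 2020 - 1970

# Convert growth in orders of magnitude (base 10) into a number of doublings (base 2).
doublings = (log10_transistors_2020 - log10_transistors_1970) * math.log2(10)
doubling_time = years / doublings

print(f"Doublings over {years} years: {doublings:.1f}")
print(f"Implied doubling time: {doubling_time:.2f} years")
```

Under these assumptions the data imply roughly 24 doublings in 50 years, or a doubling time of a little over 2 years, which is in line with the statement in the text.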

Access to data and other inputs

Both the quantity and quality of data available for training and fine-tuning AI models are crucial considerations for the development of current and future AI systems. Being unable to access the same scale and quality of training data as some of the leading technology corporations is likely to hinder AI start-ups in their development of new systems and capabilities, giving Frontier firms a competitive advantage.

There are concerns that the increasing demand for high-quality language data may soon outstrip supply, with some estimating that these data sources may be exhausted as early as 2026. Additionally, there is the potential for existing datasets to become “poisoned” over time by AI generated content, including the mistakes of previous AI models. This has been referred to as “model collapse” by researchers and could reduce the performance of future AI systems. However, there is a vast amount of non-language data yet to be used, including photos and videos. Some organisations are also exploring the use of computer-made “synthetic” data, although its utility for training future models remains uncertain.

One of the other main concerns with regard to data for AI models is the issue of intellectual property. Several lawsuits have already been brought against companies including Microsoft, OpenAI and Stability AI, claiming that the use of web-scraped data to train their models was an infringement of copyright laws. There is uncertainty over the impacts that the outcomes of these legal challenges could have on the AI industry. Were access to high-quality data sources to become restricted, such that they were only affordable to big tech companies, it could result in a further increase in the concentration of market power, restricting competition and impeding the open source community. The development of more capable models in future could be hampered or become significantly more costly.

System design and complexity

Some AI systems can be run on local devices, containing the necessary algorithms and data for specific use cases. For larger, more complex systems, data are sent to external machines for processing and task execution. Due to significant compute requirements, some small start-ups have pooled their computing resources. The potential future impacts of pooling resources are uncertain, but there is the potential for significant leaps in capability that could challenge the leading players. It appears unlikely that the large technology firms developing Frontier models will follow suit, as they seek to maintain a competitive advantage.

Researchers are experimenting with the use of combinations of different foundation models to enhance capabilities, and there is the potential for this to continue with both closed and open source models to increase functionality. Frontier models can also be modified using “wrappers” to fine-tune the system for a particular function, or to facilitate increased autonomy and agency, as discussed previously in the Capability critical uncertainty.

Relationships to other critical uncertainties

This section provides a few key examples of how different outcomes for the ‘Ownership, access, and constraints’ critical uncertainty could be correlated with outcomes on other critical uncertainties, including how these correlations might be contingent on other factors. This is based on outputs of the scenario development workshops (Chapter 2).

Controlled access: Mainly big tech owned, centralised and API accessed. Higher levels of investment per model. Higher compute requirements. Constrained supply of inputs (data, chips and algorithms).

Experts consider controlled access to be most strongly coherent with:

- Higher capability: More compute is expected (at least in the short term) to continue to produce more capable models.

- Higher use: Big firms are generally better at driving mass adoption of tech.

- Higher global cooperation: Cooperation is difficult if accessibility is uncontrolled.

Open access: More smaller start-ups and open source. More decentralisation and local running. Lower levels of investment per model. Lower compute requirements. Unconstrained supply of inputs (data, chips and algorithms).

Experts consider open access to be most strongly coherent with:

- Lower capability: In a world with lower capabilities, the market will likely be more diverse, with start-ups and open source developers catching up to big tech.

- Less safe: The dispersed nature of open source makes it harder to regulate, although it can support greater scrutiny for spotting biases, risks and faults.

Wildcards

Wildcards are radical outcomes associated with each critical uncertainty. These are considered possible, but not likely, on a 2030 timescale. Policy makers could use these as possible ‘shocks’ to test their plans against (see Chapter 6).

Leaks of Frontier models

A cyber security breach of a leading AI firm has resulted in unrestricted public access to its proprietary Frontier AI models. This results in any sufficiently resourced and competent user having the freedom to deploy a highly capable AI model as they choose, including across a range of unsuitable and malicious uses.

Stifled AI innovation

Seeing the profit potential behind AI and the threat from open access AI, companies work to create patents and copyrights to maintain their market position. The pace of growth slows and becomes more product focused. AI software prices increase, and AI software becomes more constrained and safer as companies fear reputational damage due to AI malfunction.

4.4 Safety

Overview

The development of increasingly capable AI systems has heightened interest in ensuring that they can be deployed and used safely and responsibly. AI safety encompasses a range of social and technical considerations and challenges. Technical measures to monitor, evaluate and report model faults and shut down systems are all areas of active research but there is uncertainty over how effectively such systems can be designed and implemented.

There also remains significant uncertainty over the alignment of future AI systems to societal values and who should decide which values to embed. Industry, academia, civil society, governments, and the public all have an important role to play in tackling the social and technical challenges and consequential safety risks presented by increasingly capable Frontier AI systems. But exactly how the perspectives of these groups will influence the development of future AI safety measures remains uncertain.

Defining AI safety

The term “AI safety” can mean different things to different communities, with experts outlining several schools of thought to us, including:

- The range of risks and dangers that AI systems could pose, particularly to the safety of humans.

- Upstream, technical evaluations of AI safety, using a mix of theoretical measures and analytical methods.

- How precisely AI models operate within set constraints, including their tolerances to faults and errors.

- Being able to use AI safely for clinical practices and applications.

- Responsible AI, including considerations of bias, ethical principles, and the differential impacts of AI systems for different groups of people.

AI safety is a complex and multi-faceted socio-technical challenge that cannot be resolved with technical interventions alone. Measures will need to incorporate considerations of all of the elements above into a broader framework of governance, processes, and policy that considers people, systemic effects and how risks emerge in practice. The rest of this section outlines key sub-uncertainties around how future Frontier AI systems can be developed and used in a safe way.

Sub-uncertainties

Controllability