International scientific report on the safety of advanced AI: interim report

Published 17 May 2024

Disclaimer

The report does not represent the views of the Chair, any particular individual in the writing or advisory groups, nor any of the governments that have supported its development. This report is a synthesis of the existing research on the capabilities and risks of advanced AI. The Chair of the report has ultimate responsibility for it, and has overseen its development from beginning to end.

Research series number: DSIT 2024/009

Contributors

Chair

Prof. Yoshua Bengio, Université de Montréal / Mila - Quebec AI Institute

Expert Advisory Panel

Prof. Bronwyn Fox, The Commonwealth Scientific and Industrial Research Organisation (CSIRO) (Australia)

André Carlos Ponce de Leon Ferreira de Carvalho, Institute of Mathematics and Computer Sciences, University of São Paulo (Brazil)

Dr. Mona Nemer, Chief Science Advisor of Canada (Canada)

Raquel Pezoa Rivera, Federico Santa María Technical University (Chile)

Dr. Yi Zeng, Institute of Automation, Chinese Academy of Sciences (China)

Juha Heikkilä, DG Connect (European Union)

Guillaume Avrin, General Directorate of Enterprises (France)

Prof. Antonio Krüger, German Research Center for Artificial Intelligence (Germany)

Prof. Balaraman Ravindran, Indian Institute of Technology, Madras (India)

Prof. Hammam Riza, KORIKA (Indonesia)

Dr. Ciarán Seoighe, Science Foundation Ireland (Ireland)

Dr. Ziv Katzir, Israel Innovation Authority (Israel)

Dr. Andrea Monti, University of Chieti-Pescara (Italy)

Dr. Hiroaki Kitano, Sony Group (Japan)

[Interim] Mary Kerema, Ministry of Information Communications Technology and Digital Economy (Kenya)

Dr. José Ramón López Portillo, Q Element (Mexico)

Prof. Haroon Sheikh, Netherlands’ Scientific Council for Government Policy (Netherlands)

Dr. Gill Jolly, Ministry of Business, Innovation and Employment (New Zealand)

Dr. Olubunmi Ajala, Innovation and Digital Economy (Nigeria)

Dominic Ligot, CirroLytix (Philippines)

Prof. Kyoung Mu Lee, Department of Electrical and Computer Engineering, Seoul National University (Republic of Korea)

Ahmet Halit Hatip, Turkish Ministry of Industry and Technology (Republic of Turkey)

Crystal Rugege, National Center for AI and Innovation Policy (Rwanda)

Dr. Fahad Albalawi, Saudi Authority for Data and Artificial Intelligence (Kingdom of Saudi Arabia)

Denise Wong, Data Innovation and Protection Group, Infocomm Media Development Authority (IMDA) (Singapore)

Dr. Nuria Oliver, ELLIS Alicante (Spain)

Dr. Christian Busch, Federal Department of Economic Affairs, Education and Research (Switzerland)

Oleksii Molchanovskyi, Expert Committee on the Development of Artificial intelligence in Ukraine (Ukraine)

Marwan Alserkal, Ministry of Cabinet Affairs, Prime Minister’s Office (United Arab Emirates)

Saif M. Khan, U.S. Department of Commerce (United States)

Dame Angela McLean, Government Chief Scientific Adviser (United Kingdom)

Amandeep Gill, UN Tech Envoy (United Nations)

Scientific Lead

Sören Mindermann, Mila - Quebec AI Institute

Writing Group

Daniel Privitera (lead writer), KIRA Center

Tamay Besiroglu, Epoch AI

Rishi Bommasani, Stanford University

Stephen Casper, Massachusetts Institute of Technology

Yejin Choi, University of Washington/A12

Danielle Goldfarb, Mila - Quebec AI Institute

Hoda Heidari, Carnegie Mellon University

Leila Khalatbari, Hong Kong University of Science and Technology

Shayne Longpre, Massachusetts Institute of Technology

Vasilios Mavroudis, Alan Turing Institute

Mantas Mazeika, University of Illinois at Urbana-Champaign

Kwan Yee Ng, Concordia AI

Chinasa T. Okolo, Ph.D, The Brookings Institution

Deborah Raji, Mozilla

Theodora Skeadas, Humane Intelligence

Florian Tramèr, ETH Zürich

Senior Advisers

Bayo Adekanmbi, Data Science Nigeria

Paul Christiano, contributed as a Senior Adviser prior to taking up his role at the US AI Safety Institute

David Dalrymple, Advanced Research + Invention Agency (ARIA)

Thomas G. Dietterich, Oregon State University

Edward Felten, Princeton University

Pascale Fung, Hong Kong University of Science and Technology, contributed as a Senior Adviser prior to taking up her role at Meta

Pierre-Olivier Gourinchas, International Monetary Fund (IMF)

Nick Jennings CB FREng FRS, University of Loughborough

Andreas Krause, ETH Zurich

Percy Liang, Stanford University

Teresa Ludermir, Federal University of Pernambuco

Vidushi Marda, REAL ML

Helen Margetts OBE FBA, University of Oxford/Alan Turing Institute

John A. McDermid OBE FREng, University of York

Arvind Narayanan, Princeton University

Alondra Nelson, Institute for Advanced Study

Alice Oh, KAIST School of Computing

Gopal Ramchurn, RAI UK/UKRI TAS Hub/University of Southampton

Stuart Russell, University of California, Berkeley

Marietje Schaake, Stanford University

Dawn Song, University of California, Berkeley

Alvaro Soto, Pontificia Universidad Católica de Chile

Lee Tiedrich, Duke University

Gaël Varoquaux, The National Institute for Research in Digital Science and Technology (Inria)

Andrew Yao, Institute for Interdisciplinary Information Sciences, Tsinghua University

Ya-Qin Zhang, Tsinghua University

Secretariat

UK Government Secretariat hosted by the AI Safety Institute

Benjamin Prud’homme, Mila - Quebec AI Institute

Acknowledgements

The Secretariat appreciate the helpful support, comments, and feedback from the following UK-based organisations: Ada Lovelace Institute, The Alan Turing Institute, The Centre for Long-Term Resilience, Centre for the Governance of AI, and UK AI Safety Institute. Also a special thanks to Dan Hendrycks, Dylan Hadfield-Menell, and Pamela Samuelson.

Forewords

This report is the beginning of a journey on AI Safety

I am honoured to be chairing the delivery of the inaugural International Scientific Report on Advanced AI Safety. I am proud to publish this interim report which is the culmination of huge efforts by many experts over the 6 months since the work was commissioned at the Bletchley Park AI Safety Summit in November 2023.

We know that advanced AI is developing very rapidly, and that there is considerable uncertainty over how these advanced AI systems might affect how we live and work in the future. AI has tremendous potential to change our lives for the better, but it also poses risks of harm. That is why having this thorough analysis of the available scientific literature and expert opinion is essential. The more we know, the better equipped we are to shape our collective destiny.

Our mission is clear: to drive a shared, science-based, up-to-date understanding of the safety of advanced AI, and to continue to develop that understanding over time. The report rightly highlights that there are areas of consensus among experts and also disagreements over the capabilities and risks of advanced AI, especially those expected to be developed in the future. In order to meet our mission effectively, we have aimed to address disagreement amongst the expert community with intellectual honesty. By dissecting these differences, we pave the way for informed policy-making and stimulate the research needed to help clear the fog and mitigate risks.

I am grateful to our international Expert Advisory Panel for their invaluable comments, initially shaping the report’s scope and later providing feedback on the full draft. Their diverse perspectives and careful review have broadened and strengthened this interim report. Equally deserving of recognition are my dedicated team of writers and senior advisers. Their commitment over the past few months has created an interim product that has surpassed my expectations. My thanks also go to the UK Government for starting this process and offering outstanding operational support. It was also important for me that the UK Government agreed that the scientists writing this report should have complete independence.

This interim report is only the beginning of a journey. There are no doubt perspectives and evidence that this report has failed to capture in this first attempt. In a scientific process such as this, feedback is precious. We will incorporate additional evidence and scientific viewpoints as we work toward the final version.

Professor Yoshua Bengio ,

Université de Montréal / Mila - Quebec AI Institute & Chair

AI Safety is a shared global issue

I am delighted to present this interim update on the first International Scientific Report on the Safety of Advanced AI, a key outcome of the groundbreaking AI Safety Summit held at Bletchley Park in November 2023. This landmark report represents an unprecedented global effort to build a shared, science-based understanding of the opportunities and risks posed by rapid advancements in AI, and is a testament to the ‘Bletchley Effect’ - the power of convening brilliant minds to tackle one of humanity’s greatest challenges.

We believe that realising the immense potential of AI to benefit humanity will require proactive efforts to ensure these powerful technologies are developed and deployed safely and responsibly. No one country can tackle this challenge alone. That is why I was so passionate about bringing together a diverse group of world-leading experts to contribute their knowledge and perspectives. I want to especially thank Professor Yoshua Bengio for his leadership as Chair in skilfully shepherding this complex international effort.

Crucially the report also shines a light on the significant gaps in our current knowledge and the key uncertainties and debates that urgently require further research and discussion. It is my sincere hope that this report, and the cooperative process behind it, can serve as a catalyst for the research and policy efforts needed to close critical knowledge gaps and a valuable input for the challenging policy choices that lie ahead.

We still have much to learn, but this report marks an important start. The UK looks forward to continuing to work with international partners to promote a responsible, human-centric approach to AI development - one that harnesses these powerful tools to improve lives and livelihoods while vigilantly safeguarding against downside risks and harms. Together, we can work to build a future in which all of humanity can benefit from the wonders of AI.

The Rt Hon Michelle Donelan MP,

Secretary of State, Department for Science, Innovation, and Technology

A critical step forward and a Call to Action on AI Safety

The rapid advancement of AI stands poised to reshape our world in ways both profound and unforeseen. From revolutionising healthcare and transportation to automating complex tasks and unlocking scientific breakthroughs, AI’s potential for positive impact is undeniable.

However, alongside these notable possibilities lie significant challenges that necessitate a forward-looking approach. Concerns range from unintended biases embedded in algorithms to the possibility of autonomous systems exceeding human control. These potential risks highlight the urgent need for a global conversation to ensure the safe, and responsible advancement of AI.

In this context, the International AI Safety Report will provide vital groundwork for global collaboration. The report represents a convergence of knowledge from experts across 30 countries, the European Union, and the United Nations, providing a comprehensive analysis of AI safety. By focusing on the early scientific understanding of capabilities and risks from general purpose AI and evaluating technical methods for assessing and mitigating them, the report will spark ongoing dialogue and collaboration among multi-stakeholders.

I hope that based on this report, experts from 30 countries, the EU, and the UN continue to engage in balanced discussions, achieving AI risk mitigation that is acceptable and tailored to the specific context of both developed and developing countries, thereby creating a future where innovation and responsible AI coexist harmoniously.

Lee Jong-Ho,

Minister of MSIT, Republic of Korea

Executive summary

About this report

This is the interim publication of the first ‘International Scientific Report on the Safety of Advanced AI’. A diverse group of 75 artificial intelligence (AI) experts contributed to this report, including an international Expert Advisory Panel nominated by 30 countries, the European Union (EU), and the United Nations (UN).

Led by the Chair of this report, the independent experts writing this report collectively had full discretion over its content.

At a time of unprecedented progress in AI development, this first publication restricts its focus to a type of AI that has advanced particularly rapidly in recent years: General-purpose AI, or AI that can perform a wide variety of tasks. Amid rapid advancements, research on general-purpose AI is currently in a time of scientific discovery and is not yet settled science.

People around the world will only be able to enjoy general-purpose AI’s many potential benefits safely if its risks are appropriately managed. This report focuses on identifying these risks and evaluating technical methods for assessing and mitigating them. It does not aim to comprehensively assess all possible societal impacts of general-purpose AI, including its many potential benefits.

For the first time in history, this interim report brought together experts nominated by 30 countries, the EU, and the UN, and other world-leading experts, to provide a shared scientific, evidence-based foundation for discussions and decisions about general-purpose AI safety. We continue to disagree on several questions, minor and major, around general-purpose AI capabilities, risks, and risk mitigations. But we consider this project essential for improving our collective understanding of this technology and its potential risks, and for moving closer towards consensus and effective risk mitigation to ensure people can experience the potential benefits of general-purpose AI safely. The stakes are high. We look forward to continuing this effort.

Highlights of the executive summary

If properly governed, general-purpose AI can be applied to advance the public interest, potentially leading to enhanced wellbeing, more prosperity, and new scientific discoveries. However, malfunctioning or maliciously used general-purpose AI can also cause harm, for instance through biased decisions in high-stakes settings or through scams, fake media, or privacy violations.

As general-purpose AI capabilities continue to advance, risks such as large-scale labour market impacts, AI-enabled hacking or biological attacks, and society losing control over general-purpose AI could emerge, although the likelihood of these scenarios is debated among researchers. Different views on these risks often stem from differing expectations about the steps society will take to limit them, the effectiveness of those steps, and how rapidly general-purpose AI capabilities will be advanced.

There is considerable uncertainty about the rate of future progress in general-purpose AI capabilities. Some experts think a slowdown of progress is by far most likely, while other experts think that extremely rapid progress is possible or likely.

There are various technical methods to assess and reduce risks from general-purpose AI that developers can employ and regulators can require, but they all have limitations. For example, current techniques for explaining why general-purpose AI models produce any given output are severely limited.

The future of general-purpose AI technology is uncertain, with a wide range of trajectories appearing possible even in the near future, including both very positive and very negative outcomes. But nothing about the future of AI is inevitable. It will be the decisions of societies and governments that will determine the future of AI. This interim report aims to facilitate constructive discussion about these decisions.

This report synthesises the state of scientific understanding of general-purpose AI – AI that can perform a wide variety of tasks – with a focus on understanding and managing its risks

The capabilities of systems using AI have been advancing rapidly. This has highlighted the many opportunities that AI creates for business, research, government, and private life. It has also led to an increased awareness of current harms and potential future risks associated with advanced AI.

The purpose of the International Scientific Report on the Safety of Advanced AI is to take a step towards a shared international understanding of AI risks and how they can be mitigated. This first interim publication of the report restricts its focus to a type of AI whose capabilities have advanced particularly rapidly: general-purpose AI, or AI that can perform a wide variety of tasks.

Amid rapid advancements, research on general-purpose AI is currently in a time of scientific discovery and is not yet settled science. The report provides a snapshot of the current scientific understanding of general-purpose AI and its risks. This includes identifying areas of scientific consensus and areas where there are different views or open research questions.

People around the world will only be able to enjoy the potential benefits of general-purpose AI safely if its risks are appropriately managed. This report focuses on identifying risks from general-purpose AI and evaluating technical methods for assessing and mitigating them, including the beneficial use of general-purpose AI to mitigate risks. It does not aim to comprehensively assess all possible societal impacts of general-purpose AI, including what benefits it may offer.

General-purpose AI capabilities have grown rapidly in recent years according to many metrics, and there is no consensus on how to predict future progress, making a wide range of scenarios appear possible

According to many metrics, general-purpose AI capabilities are progressing rapidly. Five years ago, the leading general-purpose AI language models could rarely produce a coherent paragraph of text. Today, some general-purpose AI models can engage in multi-turn conversations on a wide range of topics, write short computer programs, or generate videos from a description. However, the capabilities of general-purpose AI are difficult to estimate reliably and define precisely.

The pace of general-purpose AI advancement depends on both the rate of technological advancements and the regulatory environment. This report focuses on the technological aspects and does not provide a discussion of how regulatory efforts might affect the speed of development and deployment of general-purpose AI.

AI developers have rapidly advanced general-purpose AI capabilities in recent years mostly by continuously increasing resources used for training new models (a trend called ‘scaling’) and refining existing algorithms. For example, state-of-the-art AI models have seen annual increases of approximately 4x in computational resources (‘compute’) used for training, 2.5x in training dataset size, and 1.5-3x in algorithmic efficiency (performance relative to compute). Whether ‘scaling’ has resulted in progress on fundamental challenges such as causal reasoning is debated among researchers.

The pace of future progress in general-purpose AI capabilities has substantial implications for managing emerging risks, but experts disagree on what to expect even in the near future. Experts variously support the possibility of general-purpose AI capabilities advancing slowly, rapidly, or extremely rapidly. This disagreement involves a key question: will continued ‘scaling’ of resources and refining existing techniques be sufficient to yield rapid progress and solve issues such as reliability and factual accuracy, or are new research breakthroughs required to substantially advance general-purpose AI abilities?

Several leading companies that develop general-purpose AI are betting on ‘scaling’ to continue leading to performance improvements. If recent trends continue, by the end of 2026 some general-purpose AI models will be trained using 40x to 100x more compute than the most compute-intensive models published in 2023, combined with training methods that use this compute 3x to 20x more efficiently. However, there are potential bottlenecks to further increasing both data and compute, including the availability of data, AI chips, capital expenditure, and local energy capacity. Companies developing general-purpose AI are working to navigate these potential bottlenecks.

Several research efforts aim to understand and evaluate general-purpose AI more reliably, but our overall understanding of how general-purpose AI models and systems work is limited

Approaches to managing risks from general-purpose AI often rest on the assumption that AI developers and policymakers can assess the capabilities and potential impacts of general-purpose AI models and systems. But while technical methods can help with assessment, all existing methods have limitations and cannot provide strong assurances against most harms related to general-purpose AI. Overall, the scientific understanding of the inner workings, capabilities, and societal impacts of general-purpose AI is very limited, and there is broad expert agreement that it should be a priority to improve our understanding of general-purpose AI. Some of the key challenges include:

- Developers still understand little about how their general-purpose AI models operate. This is because general-purpose AI models are not programmed in the traditional sense. Instead, they are trained: AI developers set up a training process that involves a lot of data, and the outcome of that training process is the general-purpose AI model. These models can consist of trillions of components, called parameters, and most of their inner workings are inscrutable, including to the model developers. Model explanation and interpretability techniques can improve researchers’ and developers’ understanding of how general-purpose AI models operate, but this research is nascent.

- General-purpose AI is mainly assessed through testing the model or system on various inputs. These spot checks are helpful for assessing strengths and weaknesses, including vulnerabilities and potentially harmful capabilities, but do not provide quantitative safety guarantees. The tests often miss hazards and overestimate or underestimate capabilities because general-purpose AI systems may behave differently in different circumstances, with different users, or with additional adjustments to their components.

- Independent actors can, in principle, audit general-purpose AI models or systems developed by a company. However, companies often do not provide independent auditors with the necessary level of direct access to models or the information about data and methods used that are needed for rigorous assessment. Several governments are beginning to build capacity for conducting technical evaluations and audits.

- It is difficult to assess the downstream societal impact of a general-purpose AI system because research into risk assessment has not been sufficient to produce rigorous and comprehensive assessment methodologies. In addition, general-purpose AI has a wide range of use cases, which are often not predefined and only lightly restricted, complicating risk assessment further. Understanding the potential downstream societal impacts of general-purpose AI models and systems requires nuanced and multidisciplinary analysis. Increasing the representation of diverse perspectives in general-purpose AI development and evaluation processes is an ongoing technical and institutional challenge.

General-purpose AI can pose severe risks to individual and public safety and wellbeing

This report classifies general-purpose AI risks into 3 categories: malicious use risks, risks from malfunctions, and systemic risks. It also discusses several cross-cutting factors that contribute to many risks.

Malicious use

Like all powerful technologies, general-purpose AI systems can be used maliciously to cause harm. Possible types of malicious use range from relatively well-evidenced ones, such as scams enabled by general-purpose AI, to ones that some experts believe might occur in the coming years, such as malicious use of scientific capabilities of general-purpose AI.

- Harm to individuals through fake content generated by general-purpose AI is a relatively well-documented class of general-purpose AI malicious use. General-purpose AI can be used to increase the scale and sophistication of scams and fraud, for example through ‘phishing’ attacks enhanced by general-purpose AI. General-purpose AI can also be used to generate fake compromising content featuring individuals without their consent, such as non-consensual deepfake pornography.

- Another area of concern is the malicious use of general-purpose AI for disinformation and manipulation of public opinion. General-purpose AI and other modern technologies make it easier to generate and disseminate disinformation, including in an effort to affect political processes. Technical countermeasures like watermarking content, although useful, can usually be circumvented by moderately sophisticated actors.

- General-purpose AI might also be maliciously used for cyber offence, uplifting the cyber expertise of individuals and making it easier for malicious users to conduct effective cyber-attacks. General-purpose AI systems can be used to scale and partially automate some types of cyber operations, such as social engineering attacks. However, general-purpose AI could also be used in cyber defence. Overall, there is not yet any substantial evidence suggesting that general-purpose AI can automate sophisticated cybersecurity tasks.

- Some experts have also expressed concern that general-purpose AI could be used to support the development and malicious use of weapons, such as biological weapons. There is no strong evidence that current general-purpose AI systems pose this risk. For example, although current general-purpose AI systems demonstrate growing capabilities related to biology, the limited studies available do not provide clear evidence that current systems can ‘uplift’ malicious actors to obtain biological pathogens more easily than could be done using the internet. However, future large-scale threats have scarcely been assessed and are hard to rule out.

Risks from malfunctions

Even when users have no intention to cause harm, serious risks can arise due to the malfunctioning of general-purpose AI. Such malfunctions can have several possible causes and consequences:

- The functionality of products based on general-purpose AI models and systems might be poorly understood by their users, for example due to miscommunication or misleading advertising. This can cause harm if users then deploy the systems in unsuitable ways or for unsuitable purposes.

- Bias in AI systems generally is a well-evidenced problem and remains unsolved for general-purpose AI, too. General-purpose AI outputs can be biased with respect to protected characteristics like race, gender, culture, age, and disability. This can create risks, including in high-stakes domains such as healthcare, job recruitment, and financial lending. In addition, many widely-used general-purpose AI models are primarily trained on data that disproportionately represents Western cultures, which can increase the potential for harm to individuals not represented well by this data.

- ‘Loss of control’ scenarios are potential future scenarios in which society can no longer meaningfully constrain general-purpose AI systems, even if it becomes clear that they are causing harm. There is broad consensus that current general-purpose AI lacks the capabilities to pose this risk. Some experts believe that current efforts to develop general-purpose autonomous AI – systems that can act, plan, and pursue goals – could lead to a loss of control if successful. Experts disagree about how plausible loss-of-control scenarios are, when they might occur, and how difficult it would be to mitigate them.

Systemic risks

The widespread development and adoption of general-purpose AI technology poses several systemic risks, ranging from potential labour market impacts to privacy risks and environmental effects:

- General-purpose AI, especially if it further advances rapidly, has the potential to automate a very wide range of tasks, which could have a significant effect on the labour market. This could mean many people could lose their current jobs. However, many economists expect that potential job losses could be offset, possibly completely, by the creation of new jobs and by increased demand in non-automated sectors.

- General-purpose AI research and development is currently concentrated in a few Western countries and China. This ‘AI Divide’ is multicausal, but in part stems from differing levels of access to the compute needed to develop general-purpose AI. Since low-income countries and academic institutions have less access to compute than high-income countries and technology companies do, they are placed at a disadvantage.

- The resulting market concentration in general-purpose AI development makes societies more vulnerable to several systemic risks. For instance, the widespread use of a small number of general-purpose AI systems in critical sectors like finance or healthcare could cause simultaneous failures and disruptions on a broad scale across these interdependent sectors, for instance because of bugs or vulnerabilities.

- Growing compute use in general-purpose AI development and deployment has rapidly increased energy usage associated with general-purpose AI. This trend shows no indications of moderating, potentially leading to further increased CO2 emissions and water consumption.

- General-purpose AI models or systems can pose risks to privacy. For instance, research has shown that by using adversarial inputs, users can extract training data containing information about individuals from a model. For future models trained on sensitive personal data like health or financial data, this may lead to particularly serious privacy leaks.

- Potential copyright infringements in general-purpose AI development pose a challenge to traditional intellectual property laws, as well as to systems of consent, compensation, and control over data. An unclear copyright regime disincentivises general-purpose AI developers from declaring what data they use and makes it unclear what protections are afforded to creators whose work is used without their consent to train general-purpose AI models.

Cross-cutting risk factors

Underpinning the risks associated with general-purpose AI are several cross-cutting risk factors – characteristics of general-purpose AI that increase the probability or severity of not one but several risks:

- Technical cross-cutting risk factors include the difficulty of ensuring that general-purpose AI systems reliably behave as intended, our lack of understanding of their inner workings, and the ongoing development of general-purpose AI ‘agents’ which can act autonomously with reduced oversight.

- Societal cross-cutting risk factors include the potential disparity between the pace of technological progress and the pace of a regulatory response, as well as competitive incentives for AI developers to release products quickly, potentially at the cost of thorough risk management.

Several technical approaches can help mitigate risks, but no currently known method provides strong assurances or guarantees against harm associated with general-purpose AI

While this report does not discuss policy interventions for mitigating risks from general-purpose AI, it does discuss technical risk mitigation methods on which researchers are making progress. Despite this progress, current methods have not reliably prevented even overtly harmful general-purpose AI outputs in real-world contexts. Several technical approaches are used to assess and mitigate risks:

- There is some progress in training general-purpose AI models to function more safely. Developers also train models to be more robust to inputs that are designed to make them fail (‘adversarial training’). Despite this, adversaries can typically find alternative inputs that reduce the effectiveness of safeguards with low to moderate effort. Limiting a general-purpose AI system’s capabilities to a specific use case can help to reduce risks from unforeseen failures or malicious use.

- There are several techniques for identifying risks, inspecting system actions, and evaluating performance once a general-purpose AI system has been deployed. These practices are often referred to as ‘monitoring’.

- Mitigation of bias in general-purpose AI systems can be addressed throughout the lifecycle of the system, including design, training, deployment, and usage. However, entirely preventing bias in general-purpose AI systems is challenging because it requires systematic training data collection, ongoing evaluation, and effective identification of bias. It may also require trading off fairness with other objectives such as accuracy and privacy, and deciding what is useful knowledge and what is an undesirable bias that should not be reflected in the outputs.

- Privacy protection is an active area of research and development. Simply minimising the use of sensitive personal data in training is one approach that can substantially reduce privacy risks. However, when sensitive data is either intentionally or unintentionally used, existing technical tools for reducing privacy risks struggle to scale to large general-purpose AI models, and can fail to provide users with meaningful control.

Conclusion: A wide range of general-purpose AI trajectories are possible, and much will depend on how societies and governments act

The future of general-purpose AI is uncertain, with a wide range of trajectories appearing possible even in the near future, including both very positive and very negative outcomes. But nothing about the future of general-purpose AI is inevitable. How general-purpose AI gets developed and by whom, which problems it gets designed to solve, whether societies will be able to reap general-purpose AI’s full economic potential, who benefits from it, the types of risks we expose ourselves to, and how much we invest into research to mitigate risks — these and many other questions depend on the choices that societies and governments make today and in the future to shape the development of general-purpose AI.

To help facilitate constructive discussion about these decisions, this report provides an overview of the current state of scientific research and discussion on managing the risks of general-purpose AI. The stakes are high. We look forward to continuing this effort.

1. Introduction

We are in the midst of a technological revolution that will fundamentally alter the way we live, work, and relate to one another. Artificial Intelligence (AI) promises to transform many aspects of our society and economy.

There is broad scientific consensus that the capabilities of AI systems have progressed rapidly on many tasks in the last 5 years. Large Language Models (LLMs) are a particularly salient example. In 2019, GPT-2, then the most advanced LLM, could not reliably produce a coherent paragraph of text and could not always count to 10. At the time of writing, the most powerful LLMs like Claude 3, GPT-4, and Gemini Ultra can engage consistently in multi-turn conversations, write short computer programs, translate between multiple languages, score highly on university entrance exams, and summarise long documents.

This step-change in capabilities, and the potential for continued progress, could help advance the public interest in many ways. Among the most promising prospects are AI’s potential for education, medical applications, research advances in a wide range of fields, and increased innovation leading to increased prosperity. This rapid progress has also increased awareness of the current harms and potential future risks associated with the most capable types of AI.

This report aims to contribute to an internationally shared scientific understanding of advanced AI safety

To begin forging a shared international understanding of the risks of advanced AI, government representatives and leaders from academia, business, and civil society convened in Bletchley Park in the United Kingdom in November 2023 for the first international AI Safety Summit. At the Summit, the nations present, as well as the EU and the UN, agreed to support the development of an International Scientific Report on Advanced AI Safety. This report aims to contribute to an internationally shared scientific understanding of advanced AI safety. This is the first interim publication of that report: the final version of the first report will be published ahead of the France AI Summit.

An international group of 75 AI experts across a breadth of views and, where relevant, a diversity of backgrounds contributed to this interim report. The evidence considered for the report includes relevant scientific, technical, and socio-economic evidence. Since the field of AI is developing at pace, not all sources used for this report are peer-reviewed. However, the report is committed to citing only high-quality sources. Criteria for a source being of high quality include:

- the piece constitutes an original contribution that advances the field

- the piece engages comprehensively with the existing scientific literature, references the work of others where appropriate, and interprets it accurately

- the piece discusses possible objections to its claims in good faith

- the piece clearly describes the methods employed for its analysis. It critically discusses the choice of methods

- the piece clearly highlights its methodological limitations

- this piece has been influential in the scientific community

Because a scientific consensus on the risks from advanced AI is still being forged, in many cases the report does not put forward confident views. Rather, it offers a snapshot of the current state of scientific understanding and consensus, or lack thereof. Where there are gaps in the literature, the report identifies them, in the hope that this will be a spur to further research. Further, this report does not comment on what policy options are appropriate responses to the risks it discusses. Ultimately, policymakers must choose how to balance the opportunities and risks that advanced AI poses.

Policymakers must also judge the appropriate level of prudence and caution to display in response to risks that remain ambiguous.

This first iteration of the report focuses on ‘general-purpose’ AI, or AI that can perform a wide range of tasks

Artificial Intelligence (AI) refers to advanced machine-based systems developed with broadly applicable methodologies to achieve given goals or answer given questions. AI is a broad and quickly evolving field of study, and there are many different kinds of AI. This interim report does not address all potential risks from all types of advanced AI. This first iteration of the report focuses on general-purpose AI, or AI that can perform a wide range of tasks. General-purpose AI systems, now known to many through applications like ChatGPT, have generated unprecedented interest in AI both among the public and policymakers in the last 18 months. Its capabilities have been improving particularly rapidly. General-purpose AI is different from so-called ‘narrow AI’, a kind of AI that is specialised to perform one specific task or a few very similar tasks.

To better understand how we define general-purpose AI for this report, making a distinction between ‘AI models’ and ‘AI systems’ is useful. AI models can be thought of as the raw, mathematical essence that is often the ‘engine’ of AI applications. An AI system is an ensemble of several components, including one or more AI models, that is designed to be particularly useful to humans in some way. For example, the ChatGPT app is an AI system. Its core engine, GPT-4, is an AI model.

This report covers risks from AI models and AI systems if they are ‘general-purpose’ AI models or systems. We consider an AI model to be general-purpose if it can perform, or can be adapted to perform, a wide variety of tasks. We consider an AI system to be general-purpose if it is based on a general-purpose model, but also if it is based on a specialised model that was derived from a general-purpose model. Within the domain of general-purpose AI, this report focuses on general-purpose AI that is at least as capable as today’s most advanced general-purpose AI such as GPT-4 Turbo, Claude 3 and Gemini Ultra. In our definition, a model or system does not need to have multiple modalities, like speech, text, and image, to be considered general-purpose. Instead, AI that can perform a wide variety of tasks within specific domains, like structural biology, also counts as general-purpose in our definition.

Importantly, general-purpose AI is not to be confused with ‘Artificial General Intelligence’ (AGI), a term sometimes used to refer to a potential future AI system that equals or surpasses human performance on all or almost all cognitive tasks. General-purpose AI is a much weaker concept.

This report does not address risks from ‘narrow AI’, which is trained to perform a very limited task and captures a correspondingly very limited body of knowledge. The limited timeframe for writing this interim report has led to this focus on advanced general-purpose AI, where progress has been most rapid, and the associated risks are less studied and understood. Narrow AI, however, can also be highly relevant from a risk and safety perspective, and evidence relating to the risks of these systems is used across the report. Narrow AI models and systems are used in a vast range of products and services in fields like medicine, advertising, or banking, and can pose significant risks in many of them. These risks can lead to harms like biased hiring decisions, car crashes, or harmful medical treatment recommendations. Narrow AI also gets used in various military applications. One application, though a very small subset of the application of AI to militaries, [reference 1] involves, for instance, Lethal Autonomous Weapon Systems (LAWS). Such topics are covered in other fora and are outside the scope of this interim report.

A large and diverse group of leading international experts contributed to this report, including representatives nominated by 30 nations from all UN Regional Groups, and the EU and the UN. While our individual views sometimes differ, we share the conviction that constructive scientific and public discourse on AI is necessary for people around the world to reap the benefits of this technology safely. We hope that this interim report can contribute to that discourse and be a foundation for future reports that will gradually improve our shared understanding of the capabilities and risks of advanced AI.

The report is organised into 6 main sections. After this introduction, 2. Capabilities provides information on the current capabilities of general-purpose AI, underlying principles, and potential future trends. 3. Methodology to assess and understand general-purpose AI systems explains how researchers try to understand what general-purpose AI can do and what risks it might pose. 4. Risks discusses specific risks and cross-cutting risk factors. 5. Technical approaches to mitigate risks presents technical approaches to mitigating risk from general-purpose AI and evaluates their strengths and limitations. 6. Conclusion summarises and concludes.

2. Capabilities

2.1 How does General-Purpose AI gain its capabilities?

Key information

- General-purpose AI models and systems can produce text, images, video, labels for unlabelled data, and initiate actions.

- The lifecycle of general-purpose AI models and systems typically involves computationally intensive ‘pre-training’, labour-intensive ‘fine-tuning’, and continual post-deployment monitoring and updates. There are various types of general-purpose AI. Examples of general-purpose AI models include:

- Chatbot-style language models, such as GPT-4 [reference 2*], Gemini-1.5 [reference 3*], Claude-3 [reference 4*], Qwen1.5 [reference 5*], Llama-3 [reference 6*], and Mistral Large [reference 7*].

- Image generators [reference 8], such as DALLE-3 [reference 9*], Midjourney-5 [reference 10*], and Stable Diffusion-3 [reference 11*].

- Video generators such as SORA [reference 12*].

- Robotics and navigation systems, such as PaLM-E [reference 13].

- Predictors of various structures in molecular biology such as AlphaFold 3 [reference 14].

General-purpose AI models rely on deep learning [reference 15], or the training of artificial neural networks, which are AI models composed of multiple layers of interconnected nodes, loosely inspired by the structure of biological neural networks brains. Most state-of-the-art general-purpose AI models are based on the ‘Transformer’ neural network architecture [reference 16], which has proven particularly efficient at converting increasingly large amounts of training data and computational power into better model performance. General-purpose AI models are, broadly speaking, developed and deployed following the same series of distinct stages: pre-training, fine-tuning, system integration, deployment, and post-deployment updates. Each requires different methods and resources.

Both pre-training and fine-tuning are ways of ‘training’ a general-purpose AI model. During training, a general-purpose AI model is given some data, which it processes to predict some other data. For example, the model might be given the first 500 words of a Wikipedia article and then predict the 501st word. Initially, it predicts randomly, but as it sees more data it is automatically adapted to learn from its mistakes, and its predictions improve. Each prediction requires some amount of computational resources (‘compute’), and so training requires both data and compute. The model architecture, designed by the developers, dictates the broad types of calculations that occur when the model makes a prediction, and the exact numbers used in those calculations are adjusted during training.

Pre-training:

The goal of pre-training is to build general background knowledge into a general-purpose AI model. During pre-training, general-purpose AI models typically learn from patterns in large amounts of data (usually taken from the Internet). Collecting and preparing pre-training data are large-scale operations, and in most cases, pre-training is the most computationally intensive stage of development. The pre-training of general-purpose AI models today takes weeks or months and uses thousands of Graphics Processing Units (GPUs) - specialised computer chips, designed to rapidly process complex parallelised calculations. For example, the Falcon-180B model used 4,096 GPUs for multiple months, and PaLM (540B) used 6,144 chips for 50 days [reference 13]. Today, this process uses roughly 10 billion times more compute compared to state-of-the-art model training in 2010 [reference 17]. Some developers conduct pre-training with their own compute, while others use resources provided by specialised cloud compute providers.

Fine-tuning:

After pre-training, most general-purpose AI models undergo one or more additional fine-tuning stages, to refine their ability to accomplish the intended tasks. Fine-tuning can include various techniques including learning from desirable examples [reference 18], pairs of desirable and undesirable examples [reference 19], or rewards and penalties [references 20, 21*].

Fine-tuning usually requires significant human involvement, and tends to be the most labour-intensive part of training, with millions of instances of human feedback needed to fine-tune modern models [reference 22*]. Often, this feedback is provided by thousands of contracted knowledge workers.

System integration:

After a model is trained, it can be used to build a general-purpose AI system by integrating it with other system components aimed at enhancing both capabilities and safety. In practice, general-purpose AI models are typically integrated with user interfaces, input pre-processors, output postprocessors and content filters.

Deployment:

Once they are trained, models can be deployed for use. Deployment can be ‘internal’, where a system is only used by the developers, or ‘external’, allowing the public or other non-developer entities to use it. External deployments can be ‘closed-source’ or ‘open-source’. Closed-source means that the public can only use the system through a limited interface. Open-source means that the entire system, including all of the model parameters, are made available. Some state-of-the-art general-purpose AI systems, such as GPT-4 [reference 2*], are closed source, while others like Llama-3[reference 6*] are open source. From a risk mitigation perspective, there are advantages and disadvantages of open-source models which are the subject of ongoing discussions in the scientific community. This interim report does not provide a detailed discussion of the advantages and disadvantages of open-source models.

Post-deployment monitoring and updates:

Many general-purpose AI systems are continually updated after deployment. This lets developers update capabilities and try to address flaws and vulnerabilities as they are discovered. These changes often amount to a type of ‘cat-and-mouse’ game where developers continually update high-profile systems in response to newly discovered vulnerabilities [reference 22*].

2.2 What current general-purpose AI systems are capable of

Key information

- General-purpose AI capabilities are difficult to estimate reliably but most experts agree that current general-purpose AI capabilities include:

- Assisting programmers and writing short computer programs

- Engaging in fluent conversation over several turns

- Solving textbook mathematics and science problems

- Most experts agree that general-purpose AI is currently not capable of tasks including:

- Performing useful robotic tasks such as household tasks

- Reliably avoiding false statements

- Developing entirely novel complex ideas

- A key challenge for assessing general-purpose AI systems’ capabilities is that performance is highly context-specific. Methods that elicit improved model capabilities are sometimes discovered only after a model has been deployed, so initial capabilities might be underestimated. Alternatively, general-purpose AI model and system capabilities may be overestimated due to a lack of robustness across different contexts and using different methods to elicit capabilities.

This section focuses on the capabilities of general-purpose AI models and systems categorised by modality (such as video and language) and by skill (such as reasoning and knowledge). Capabilities can also be categorised by performance on specific benchmarks (see 3. Methodology to assess and understand general-purpose AI systems). While this section covers capabilities generally, 4.4.1. Cross-cutting technical risk factors focuses on ‘high-risk’ capabilities.

Difficulty of defining capabilities:

Although general-purpose AI systems are often described in terms of their capabilities, there is no widely-accepted definition of the term ‘capability’ in the field of AI. Part of the difficulty of defining a capability is that it is not directly observed - AI researchers can only observe an AI system’s behaviour: the set of outputs or actions that a system actually produces and the context in which it does so (for example, the prompt that leads to the observed behaviour) [reference 23]. AI researchers can merely summarise the observed system behaviour in many contexts, and thus arrive at an impression of what the system is capable of - the capability. It is difficult to define and measure the full capabilities of a new general-purpose AI model, even after the model is built; researchers and users have often discovered new ways to elicit capabilities after a model is deployed, for example through prompting a model to ‘think step-by-step’ [references 24, 25] . Another complication in defining a general-purpose AI system’s capabilities is that they are shaped by the affordances in its environment - the tools and resources it can access. For instance, when a general-purpose AI system is connected to the internet and equipped with a web browser, it gains new affordances for retrieving information and interacting with the real world, effectively expanding its capabilities [reference 26].

2.2.1 Capabilities by modality

General-purpose AI models can be categorised by the modalities they process (e.g. text, images, video) as input and generate as output. General-purpose AI models exist for 10+ modalities [reference 27] such as time series [reference 28*] and music [reference 29*], but text-processing models are the source of much of the present attention on general-purpose AI models. Advanced general-purpose AI models are increasingly able to process and generate text, images, video, audio, robotic actions, and proteins and large molecules:

- Text - Advanced language models can generate fluent text and can be used for multi-turn conversations across a variety of natural languages, topics, and formats. Text and natural language interfaces are useful for people interacting with general-purpose AI models. Some general-purpose AI models can use text as both input and output, such as OpenAI’s GPT-3 [reference 30]; while others take text as input like Stability AI’s Stable Diffusion 3 [reference 11*] and can process increasingly long textual sequences - for example, Google’s Gemini-Pro-1.5 can process 30,000 lines of code [reference 31*]. Text can include many types of data encoded as text, such as mathematical formulae and software code. In the software domain, language models can write short programs and assist programmers [reference 32]. Images - Many image-related general-purpose AI models can take images as inputs potentially combined with text, such as Anthropic’s Claude 3 [reference 33*], and can be used to classify [reference 34], describe [reference 2*], encode [reference 35], or segment images in order to distinguish different objects inside of them [reference 36*]. General-purpose AI models can also generate images as outputs, such as OpenAI’s DALL-E 3 [reference 9*]. Advanced general-purpose AI models can generate increasingly controllable images, with notable improvements for more complex concepts and the rendering of text in images [reference 9*].

- Video - Video-related general-purpose AI models take existing videos as inputs, such as Meta’s V-JEPA [reference 37*], or can generate video from text, like OpenAI’s Sora [reference 38*]. Some general-purpose AI models learn to encode object properties that can be tracked across time in videos. Current models can generate realistic videos, but are limited in terms of length (generally less than one minute), fidelity, and consistency.

- Robotic actions - General-purpose AI models can be used for planning multi-step robot actions, and to interpret instructions to guide lower-level actions [reference 39]. Initial efforts are also exploring general-purpose AI models that not only plan or interpret, but also generate, robotic actions such as Google’s RT-2-X [reference 40*], but general-purpose AI model capabilities to generate robotic actions are relatively rudimentary. Part of the reason is that data collection is challenging, although substantial efforts are being made [references 41, 42].

- Proteins and large molecules - General-purpose AI models that work with proteins and other large molecules operate on various representations (e.g. residue sequences, 3D structures). These models can predict protein folding, generate useful novel proteins, and perform a range of protein-related tasks. They therefore fall under the definition of general-purpose AI models outlined in 1. Introduction. Protein general-purpose AI models can be increasingly controlled to generate designs of proteins with predictable functions across large protein families [references 43, 44].

2.2.2 Capabilities and limitations by skill

To assess general-purpose AI capabilities fully, it can be helpful to categorise them by well-known skills such as displaying knowledge, reasoning, and creativity. Compared to categorising by modality, skills are harder to precisely define, but provide a more intuitive lens into general-purpose AI capabilities.

Viewed through this lens of skills, today’s most capable general-purpose AI systems show partial proficiency but are not perfectly reliable. Experts often disagree on whether current general-purpose AI systems can be said to have a specific skill or not. One way to look at this is through capability-limitation pairs.

- Knowledge (capability) and inconsistency (limitation) - General-purpose AI models encode an extensive range of facts that are found across the public internet [reference 45], yet are limited in identifying subtle factual differences and do not always generate self-consistent text, so the elicitation of knowledge from a general-purpose AI model can be inconsistent [references 45, 46].

- Creativity (capability) and hallucination (limitation) - General-purpose AI models can generate novel examples (for example, new images or text). This type of ‘creativity’ can be useful, but can also lead to ‘hallucinations’ fabricating content. For example, language models commonly generate non-existent citations, biographies, or facts [references 46, 47*, 48, 49, 50] which pose risks from misinformation (see 4.1. Malicious use risks).

- Common sense reasoning (capability) and causality (limitation) - Terms such as ‘common sense’ or ‘reasoning’ are often not well defined in the field of artificial intelligence, and are often used in ways that differ from their everyday use to describe human capabilities. In some circumstances, general-purpose AI models demonstrate the ability to emulate broad ‘common sense knowledge’ [reference 51], the ability to work through relatively complex problems step-by-step [reference 24], and the ability to learn and apply novel patterns within a given context (known as ‘in-context learning’) [references 30, 52]. However, appropriate forms of ‘reasoning’ are contextual and task-dependent. The extent to which general-purpose AI systems demonstrate genuine ‘reasoning’ or ‘common sense’ is contested. Research has shown [reference 53] that some reasoning capabilities are improving even for common sense reasoning problems in unusual contexts [reference 53], although there are significant limitations for other forms of basic ‘common sense knowledge’ [reference 54]. Even when general-purpose AI models appear to correctly ‘reason’ about the world, they may not have identified the underlying causal basis for this reasoning [reference 55]. There is a general consensus that currently, general-purpose AI falls short of human-level reasoning abilities.

- Formal reasoning (capability) and compositionality (limitation) - Language models, especially when given additional resources such as tools and multiple attempts, can perform some formal reasoning tasks in domains like mathematics, computer programming, and the natural sciences, noting the caveat above regarding use of the term ‘reasoning’ in this domain. For example, research shows that the Claude 3 model approaches the performance of graduate-level experts on relevant questions in biology, physics, and chemistry [reference 4*] - on a benchmark created after Claude 3 was initially trained (for discussion of benchmarking, see 2.4. Capability progress in coming years and 3. Methodology to assess and understand general-purpose AI systems). However, such models use the ‘Transformer’ architecture, which most of today’s general-purpose AI systems are based on, introduced in 2017 [reference 16]. In principle, this architecture has fundamental limitations in performing arbitrary compositional reasoning, which underpins formal reasoning [reference 56] it remains unclear how relevant these theoretical limitations are in practice.

- Forecasting (capability) and novel concepts (limitation) - Language models, when integrated into more complex systems, can forecast future events with reasonable predictive accuracy in restricted domains. A recent study [reference 57] shows that language model systems using retrieval can match the aggregate performance of expert forecasters on statistical forecasting problems (i.e. predicting the probability of events). However, while current capabilities show models can synthesise information to reason about the likelihood of future events, the extent to which models can synthesise entirely new concepts appears to be limited [reference 58].

- Simulation (capability) and embodiment (limitation) - General-purpose AI models can simulate the behaviour of virtual agents when integrated into virtual environments [reference 59]. For example, recent research shows that 25 virtual agents powered by OpenAI’s ChatGPT can operate a virtual town in a way that appears to match aspects of human behaviour [reference 60]. However, while current general-purpose AI models can simulate virtual agents, they are limited in ‘embodiment’ and cannot yet effectively control physical robots or machines, as the integration of general-purpose AI models with motor control systems remains a challenge [reference 61].

2.3 Recent trends in capabilities and their drivers

Key information

- In recent years, general-purpose AI capabilities have advanced rapidly according to many metrics, thanks to both increasing the resources used for training and algorithmic improvements. Per model, these are estimated to have increased:

- Compute for training: 4x/year

- Training dataset size: 2.5x/year

- Algorithmic training efficiency: 1.5x to 3x/year

- Energy used for powering computer chips during training: 3x/year

- Hardware efficiency: 1.3x/year

- Using ever more compute and data to train general-purpose AI models in recent years is referred to as ‘scaling up’ models. Performance on broad metrics improves predictably with scale, and many AI researchers agree that scaling has driven most of the increase in advanced general-purpose AI capabilities in recent years. However, it is debated if this has resulted in progress on fundamental challenges such as causal reasoning.

2.3.1 Recent trends in compute, data, and algorithms

Increased investment in computing resources, enhancements in hardware efficiency, the existence of readily accessible datasets online, and incremental innovations in algorithms have contributed to advances in general-purpose AI over the last decade. This section examines recent trends in computing power, data, and algorithms.

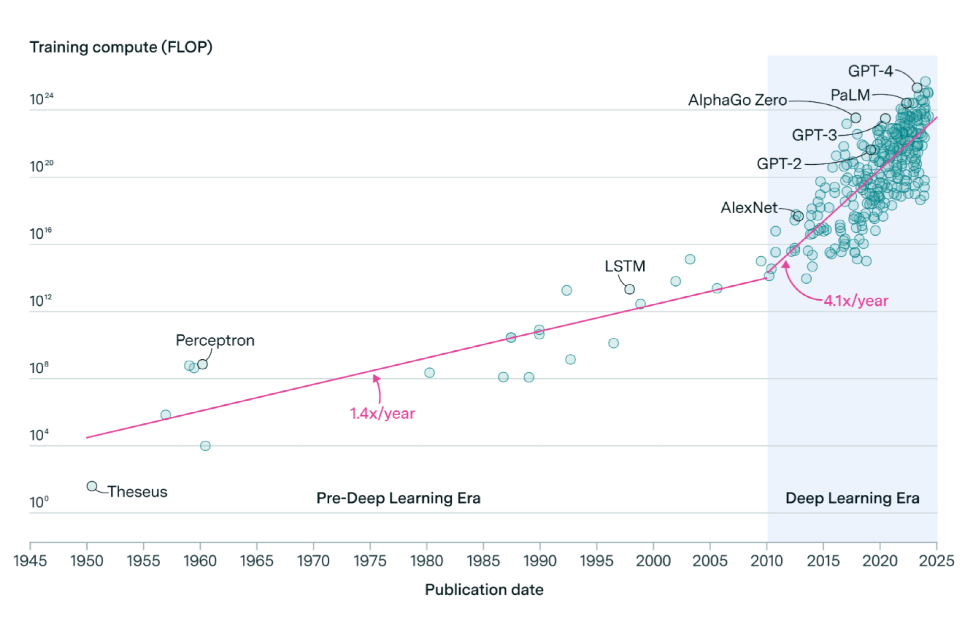

Trends in compute used in training and inference

Computing resources used for training AI models have been increasing fast. Computing resources, often referred to as ‘compute’, represent the number of operations performed. This has grown exponentially since the early 2010s, with the average amount used to train machine learning models doubling approximately every 6 months [reference 17]. In 2010, notable machine learning models [references 62, 63, 64] used an average of approximately 1e15 floating-point operations (FLOP) [reference 65], but by 2023 Inflection-2, the largest model with a publicly reported compute budget, used 1e25 FLOP [reference 66*] - a 10 billion-fold increase. This progress is driven by industry labs’ willingness to use more data centre capacity for large-scale general-purpose AI training. There is insufficient data to determine if this trend is changing over a shorter period such as the 2020s.

Figure 1.

Training compute of notable machine learning models over time [references 17, 65]. Computation is measured in total floating-point operations (FLOP) estimated from AI literature. Estimates are expected to be accurate within a factor of 2, or a factor of 5 for recent undisclosed models like GPT-4.

Reproduced with the kind permission of Epoch AI from ‘Parameter, Compute and Data Trends in Machine Learning’. Published online at epochai.org. Retrieved from: ‘https://epochai.org/data/epochdb/visualization’.

Over the last 15 years, the amount of compute per dollar has increased between around 50- to 200-fold [references 67, 68]. However, the total amount of compute used for training general-purpose AI models far outpaced the reduction in computing costs: for example, Google’s Word2vec model was trained using around 3e16 FLOP in 2013, around a billion-fold smaller than current frontier models [reference 65]. While GPU performance improvements have helped, these have been partially limited by data centre GPU shortages and high prices for top-tier GPUs used in AI applications. Supply chain shortages of high-end processors, packaging, high-bandwidth memory, and other components are delaying the technology sector’s ability to meet the enormous demand for artificial intelligence hardware like AI servers [reference 69]. The expansion in general-purpose AI compute usage is mainly the result of industry labs being increasingly willing to allocate data centre resources and engineering staff to large-scale general-purpose AI training runs.

The discovery of neural ‘scaling laws’, which describe predictable relationships between the amount of compute, the size of the model and data, and performance, has contributed to a compute-centric view of AI development that is prominent at some leading AI labs. The development of flagship general-purpose AI models such as Google Gemini Ultra and OpenAI’s GPT-4 was guided by work on scaling laws [references 2, 3]. As a result, there is a greater need for hardware infrastructure expertise, and there are tighter collaborations between AI labs and technology giants such as Microsoft and Google.

Computational resources for deployment have also seen significant growth. Companies are rapidly expanding infrastructure to meet these growing demands. The computational resources required for inference (a key part of serving general-purpose AI systems to users) have experienced significant growth [reference 76] because the number of users for deployed general-purpose AI systems has increased rapidly. In April 2023, OpenAI’s AI systems were reportedly estimated to incur $700k/day in inference costs [reference 77]. Some estimates indicate that the total computation spent on general-purpose AI inference already exceeds that devoted to training new models for example, AI inference represented 60% of Google’s AI infrastructure emissions as of 2022 [reference 78].

Growing compute resources both for training and inference have also rapidly expanded AI’s energy usage (see 4.3.4 Risks to the environment).

Training data trends: larger datasets, multimodal, synthetic data and human preferences

General-purpose AI developers have been able to significantly increase training dataset sizes thanks to the availability of content from the internet including open repositories of web data. These larger datasets contribute to higher performance on a wide range of metrics. Dataset sizes for training general-purpose AI have increased from around 2 billion tokens (a token is a word, a character, or sometimes part of a word) for the original Transformer model in 2017 to over 3 trillion tokens in 2023 [references 79, 80], growing approximately 10x every 3 years [reference 65].

However, general-purpose AI developers only have a limited amount of text data available on the internet to draw on [references 81, 82]. While this could be overcome, for example by training on the same data many times, using AI-generated data, or training on other non-text data sources like YouTube videos, some believe that by 2030 shortages of accessible online high-quality text data could slow the rate at which models can be productively scaled (see 2.4.2 Will resources be scaled rapidly?).

Data quality plays a critical role in training high-performing language models. Selecting high-quality data and optimising the overall composition of the dataset can significantly improve model performance, but this process is labour-intensive [references 83, 84, 85]. Moreover, measuring and analysing data to identify and mitigate problematic artefacts, such as biases and lack of diversity, is essential for producing high-quality models [reference 86*].

Training general-purpose AI models on diverse modalities like images, audio, and video alongside text, has recently gained traction. General-purpose AI models such as GPT-4, Claude 3, and Gemini Ultra combine different modalities to perform tasks requiring joint processing of textual, visual, and auditory information, such as analysing documents with text and graphics or creating multimedia presentations [references 2, 3, 4*].

‘Human preference’ data captures the types of outputs users prefer and has become crucial for developing general-purpose AI systems. This data cannot be mined from publicly available sources, but must be produced specifically for training; as such it is more expensive than the text data used for pre-training. This data helps fine-tune language models to conform with user and developer needs, adapt to diverse preferences, and ground the models in human judgments of quality and helpfulness [references 20, 21, 87]. AI labs and large companies may have an advantage in producing and accessing large quantities of proprietary human preference data.

Techniques and training methods for general-purpose AI have improved consistently

The techniques and training methods underpinning the most capable general-purpose AI models have consistently and reliably improved over time [references 88*, 89]. The efficiency of AI techniques and training methods has been increasing 10x approximately every 2 to 5 years in key domains such as image classification, game-playing, and language modelling. For example, the amount of compute required to train a model to perform image classification to achieve a set level of performance decreased by 44x between 2012 and 2019, meaning that efficiency doubled every 16 months. Game-playing AI systems require half as many training examples every 5 to 20 months [reference 90]. In language modelling, the compute required to reach a fixed performance level has halved approximately every 8 months on average since 2012 [reference 89]. These advances have enabled general-purpose AI researchers and labs to develop more capable models over time within a limited hardware budget.

There have also been incremental advances in algorithms that are not best understood as increasing compute efficiency. For example, new techniques have significantly increased the size of context windows, allowing general-purpose AI systems to process larger quantities of information [references 31, 91, 92*], and post-training algorithms allow general-purpose AI systems to use tools and take actions in the world without human assistance (see 2.4.3. Will algorithmic progress lead to rapid progress?).

Despite significant advancements in AI algorithms, general-purpose AI has seen relatively few major conceptual breakthroughs in recent years. The ‘Transformer architecture’ remains perhaps the most significant innovation, and is used by most advanced general-purpose AI systems [reference 16]. While many alternative architectures have been proposed, none have yet substantially and consistently outperformed the Transformer. Recent ‘selective state space models’ [reference 93] might prove to be more efficient than the Transformer, once properly tested. These models reinforce the recent trend of allowing language models to analyse longer contexts, such as books and large software projects. If more fundamental conceptual breakthroughs are needed to advance general-purpose AI capabilities, this could be a key barrier to further development even if incremental improvements and scaling continue to drive rapid progress in some areas (see 2.4.1. If resources continue to be scaled rapidly, would this lead to rapid advancements?).

2.3.2 Recent trends in capabilities

General-purpose AI has advanced increasingly rapidly, nearing or surpassing human-level performance on some metrics, with debated implications

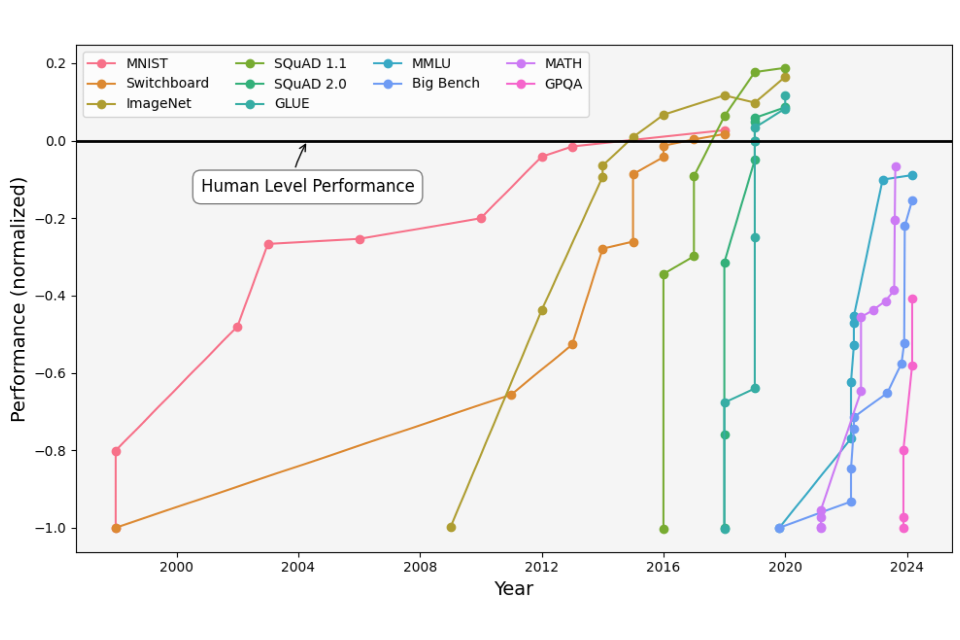

The pace of recent general-purpose AI progress has been rapid, often surpassing the expectations of AI experts on some metrics. Over the last decade, AI has achieved or exceeded average human-level performance on some benchmarks in domains such as computer vision, speech recognition, image recognition, and natural language understanding (Figure 2). The latest advances in LLMs build upon this longer-running trend.

Figure 2.

Performance of AI models on various benchmarks from 1998 to 2024, including computer vision (MNIST, ImageNet), speech recognition (Switchboard), natural language understanding (SQuAD 1.1, MMLU, GLUE), general language model evaluation (MMLU, Big-Bench, and GPQA), and mathematical reasoning (MATH). Many models surpass human-level performance (black solid line) by 2024, demonstrating significant advancements in AI capabilities across different domains over the past 2 decades. Data are from [reference 94] for MNSIT, Switchboard, ImageNet, SQuAD 1.1, 2 and GLUE. Data for MMLU, Big Bench, GPQA are from the relevant papers [reference 95, 96, 97].

LLM capabilities have advanced significantly in multiple domains between 2020 and 2024, shown by broad benchmarks such as Massive Multitask Language Understanding (MMLU) [reference 95], Big-Bench [reference 96], and Graduate-Level Google-Proof Q&A (GPQA) [reference 97]. In 2020, general-purpose AI models performed substantially worse than average human test subjects on many of these benchmarks; in 2024, advanced general-purpose AI models have approached human-level performance. For example, consider the MATH benchmark [reference 98], which tests mathematical problem-solving skills. Initially general-purpose AI systems performed weakly on this benchmark, but 2 years after its release, GPT-4 seemed to achieve 42.5% accuracy [reference 99*] and subsequent work pushed state-of-the-art performance using GPT-4 to 84.3% [reference 100], which is close to the score obtained by expert human testers.

Despite rapid progress on benchmark metrics, these benchmarks are highly limited compared to real-world tasks, and experts debate whether these metrics effectively evaluate true generalisation and meaningful understanding [reference 101]. State-of-the-art general-purpose AI models often exhibit unexpected weaknesses on some benchmarks, indicating that they partly or fully rely on memorising patterns rather than employing robust reasoning or abstract thinking [references 102, 103]. In some cases, models were accidentally trained on the benchmark solutions, leading to high benchmark performance despite the absence of the actual capability [references 104, 105]. Models also struggle to adapt to cultures that are less represented in the training data [reference 106]. This underscores the significant disparity between benchmark results and the capacity to reliably apply knowledge to practical, real-world scenarios.

AI and human capabilities have distinct strengths and weaknesses, making comparisons challenging

While it may be tempting to compare the cognitive capabilities of humans to the capabilities of general-purpose AI systems, they have distinct strengths and weaknesses, making these comparisons less meaningful in many cases. While general-purpose AI excels in some domains, it is arguably lacking the deep conceptual understanding and abstract reasoning capabilities of humans [reference 102]. Current general-purpose AI systems often demonstrate uneven performance, excelling in some narrow domains while struggling in others [reference 102].

Current general-purpose AI systems are prone to some failures that humans are not [reference 107, 108]. General-purpose AI reasoning can be ‘brittle’ (unable to cope with novel scenarios) and overly influenced by superficial similarities [reference 102]. LLMs can fail at reasoning in contexts where humans typically excel. For example, a model trained on data including the statement: “Olaf Scholz was the ninth Chancellor of Germany” will not automatically be able to answer the question “Who was the ninth Chancellor of Germany?” [reference 107]. In addition, LLMs can be exploited by nonsensical input to deviate from their usual safeguards, while humans would recognise these prompts (see 5.2. Training more trustworthy models).

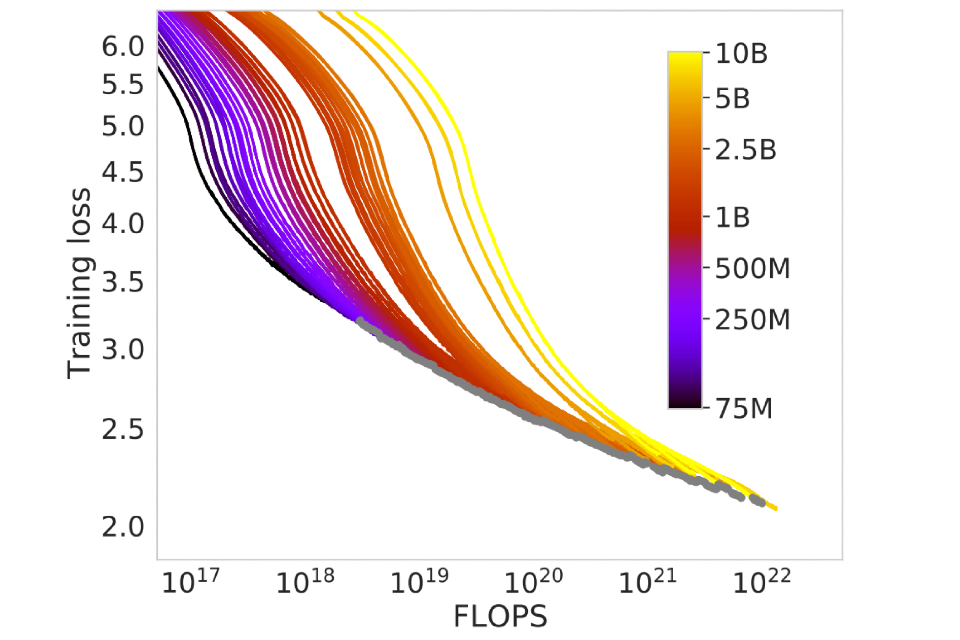

As general-purpose AI models are scaled up, their capabilities improve overall, but to date, this growth has been hard to predict for specific capabilities

The aggregate performance of language models has improved reliably and predictably with the scale of computing power and data. Researchers have discovered empirical ‘scaling laws’ that quantify the relationship between these inputs and the capabilities of the model on broad performance measures like next-word-prediction [references 109, 110]. Empirical studies across diverse domains have evidenced performance improvements in machine learning systems with increased computational resources, including in vision [references 111*, 112], language modelling [references 109, 110], and games [reference 113*].

Figure 3.

Cross-entropy loss scales predictably with compute across a broad range of empirically-studied training runs. FLOPS refers to the number of operations performed during training. Figure from [reference 110*]. Different colours represent models with different numbers of parameters. Each line shows how loss falls as training FLOP increases for a model. Permissions were sought from the author.